Efficient Video Processing in Rust: Leveraging Hardware Acceleration for High-Resolution Content

Introduction

The rise of 4K, 8K, and even higher-resolution content has pushed CPU-only video processing to its limits. Whether it’s video editing, live streaming, or format conversion, developers often grapple with excessive CPU usage under heavy workloads. Hardware acceleration addresses this by offloading encoding and decoding to GPUs or dedicated media engines, which raises throughput and frees the CPU for the rest of the application. Rust, with its blend of performance and safety, is well suited to this kind of work, and libraries like ez-ffmpeg make hardware-accelerated video processing accessible.

The Problem: Why Hardware Acceleration Matters

Picture this: you’re building a video transcoding tool to convert a 4K clip into H.264 format. Relying solely on the CPU, the process could take minutes, with usage pinned near 100% and everything else on the system slowing down. Or consider a real-time streaming app, where high latency and dropped frames can ruin the viewer’s experience. These challenges highlight a key point: modern video workloads often exceed what a CPU alone can handle comfortably. Offloading the work to a GPU or to dedicated hardware such as Intel Quick Sync or Nvidia NVENC can cut processing time substantially and ease the strain on the rest of the system.

How It Works: Implementing Hardware Acceleration

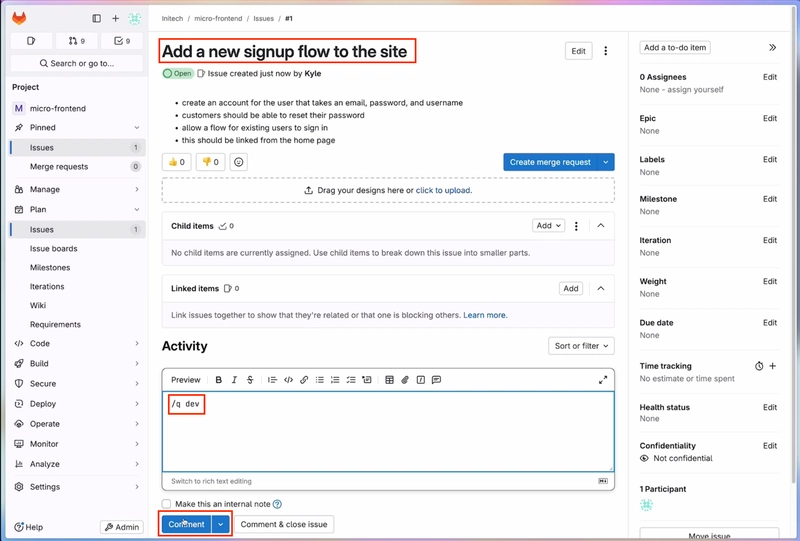

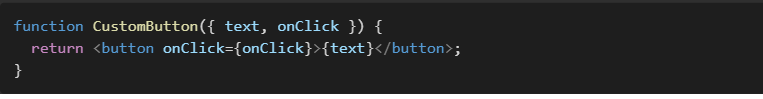

At its core, hardware acceleration leverages hardware-specific APIs (think VideoToolbox, CUDA, or Direct3D) and codecs to streamline video workflows. In Rust, FFmpeg serves as a powerful foundation, and higher-level wrappers simplify its use. Here’s a quick example of hardware-accelerated transcoding in Rust:

    use ez_ffmpeg::{FfmpegContext, Input, Output};

    fn main() -> Result<(), Box<dyn std::error::Error>> {
        let mut input: Input = "test.mp4".into();
        let mut output: Output = "output.mp4".into();

        // Example for macOS: Use VideoToolbox for hardware acceleration
        input = input.set_hwaccel("videotoolbox");
        output = output.set_video_codec("h264_videotoolbox");

        FfmpegContext::builder()
            .input(input)
            .output(output)
            .build()?
            .start()?
            .wait()?;

        Ok(())
    }

Breaking It Down

- set_hwaccel("videotoolbox"): Activates VideoToolbox on macOS for decoding.
- set_video_codec("h264_videotoolbox"): Sets the H.264 codec with VideoToolbox for encoding.
- Outcome: The input test.mp4 is transcoded to output.mp4 with hardware acceleration, which is typically much faster than a CPU-only transcode.

Cross-Platform Flexibility: Adapting to Different Hardware

Hardware acceleration varies across platforms and devices, which is a common hurdle for developers. Fortunately, the example above adapts to other setups by swapping the hwaccel and codec names:

- Windows: Use Direct3D 12 Video Acceleration (d3d12va) for decoding and Media Foundation for encoding:

      input = input.set_hwaccel("d3d12va");
      output = output.set_video_codec("h264_mf");

- Nvidia GPUs: Pair CUDA decoding with NVENC encoding:

      input = input.set_hwaccel("cuda").set_video_codec("h264_cuvid");
      output = output.set_video_codec("h264_nvenc");
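One way to keep the platform-specific names out of the main transcoding code is a small lookup keyed on the operating system. The sketch below uses only the standard library; `HwSettings` and `hw_settings_for` are hypothetical names introduced here for illustration, not part of ez-ffmpeg. The Nvidia pairing is left out on purpose, because it depends on the installed GPU rather than on the OS.

```rust
/// Hardware settings for one platform: the hwaccel name used for decoding
/// and the encoder name used for H.264 output.
/// (Hypothetical type for illustration; not part of ez-ffmpeg.)
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct HwSettings {
    pub hwaccel: &'static str,
    pub encoder: &'static str,
}

/// Map an OS name (in the form used by `std::env::consts::OS`) to the
/// hwaccel/encoder names mentioned in this article.
/// Returns `None` for platforms the article names no OS-level default for.
pub fn hw_settings_for(os: &str) -> Option<HwSettings> {
    match os {
        "macos" => Some(HwSettings {
            hwaccel: "videotoolbox",
            encoder: "h264_videotoolbox",
        }),
        "windows" => Some(HwSettings {
            hwaccel: "d3d12va",
            encoder: "h264_mf",
        }),
        _ => None,
    }
}

/// Convenience wrapper that looks up the OS the program was compiled for.
pub fn hw_settings_for_current_os() -> Option<HwSettings> {
    hw_settings_for(std::env::consts::OS)
}
```

A caller could then write `if let Some(s) = hw_settings_for_current_os() { input = input.set_hwaccel(s.hwaccel); output = output.set_video_codec(s.encoder); }` and leave both values untouched otherwise, which falls back to whatever defaults the library applies.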

Heads-Up: Availability depends on your hardware and system. Nvidia GPUs require proper drivers, while VideoToolbox needs Apple hardware. Always verify compatibility before diving in.
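A quick way to verify compatibility is to ask FFmpeg which acceleration methods it was built with. The sketch below shells out to the `ffmpeg` command-line tool with its `-hwaccels` flag and parses the result; it assumes `ffmpeg` is on the PATH, and the helper names `parse_hwaccels` and `list_hwaccels` are made up for this example. Note that the FFmpeg libraries a Rust wrapper links against may be a different build from the command-line tool, so treat the result as a hint rather than a guarantee.

```rust
use std::io;
use std::process::Command;

/// Parse the text printed by `ffmpeg -hwaccels`: a header line ending in ':'
/// followed by one acceleration method name per line.
pub fn parse_hwaccels(output: &str) -> Vec<String> {
    output
        .lines()
        .map(str::trim)
        // Skip blank lines and the "Hardware acceleration methods:" header.
        .filter(|line| !line.is_empty() && !line.ends_with(':'))
        .map(str::to_string)
        .collect()
}

/// Run `ffmpeg -hide_banner -hwaccels` and return the listed method names.
/// Returns an error if the `ffmpeg` binary cannot be started.
pub fn list_hwaccels() -> io::Result<Vec<String>> {
    let out = Command::new("ffmpeg")
        .args(["-hide_banner", "-hwaccels"])
        .output()?;
    Ok(parse_hwaccels(&String::from_utf8_lossy(&out.stdout)))
}
```

Before calling `set_hwaccel("cuda")`, for instance, a program could check `list_hwaccels()?.iter().any(|m| m == "cuda")` and skip the hardware path when the method is missing.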

Wrapping Up

From speeding up video workflows to cutting resource usage, hardware acceleration is a must-have in today’s development toolkit. Rust’s clean APIs and strong ecosystem make it a breeze to harness this power for high-performance video processing. If you’re on the hunt for a practical solution, check out open-source projects like ez-ffmpeg—it’s a solid launchpad for Rust developers looking to dive in.