A Beginner’s Trial of MCP Server: SafeLine MCP

This article was written by a SafeLine user: 弹霄博科

Original source: https://www.txisfine.cn/archives/fcd1738c.html

SafeLine is a WAF powered by a semantic analysis engine rather than the signature matching used by traditional solutions. It is self-hosted and easy to deploy and configure.

GitHub: https://github.com/chaitin/SafeLine

Website: https://safepoint.cloud/landing/safeline

What is MCP

MCP (Model Context Protocol) is one of the hottest topics in the AI programming community these days. Anthropic first proposed it in November 2024. At the time of writing, the most widely adopted version of the MCP specification is 2025-03-26.

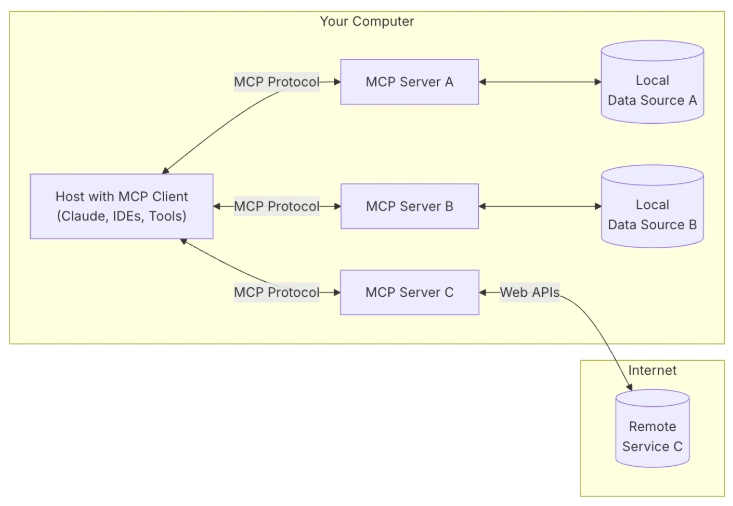

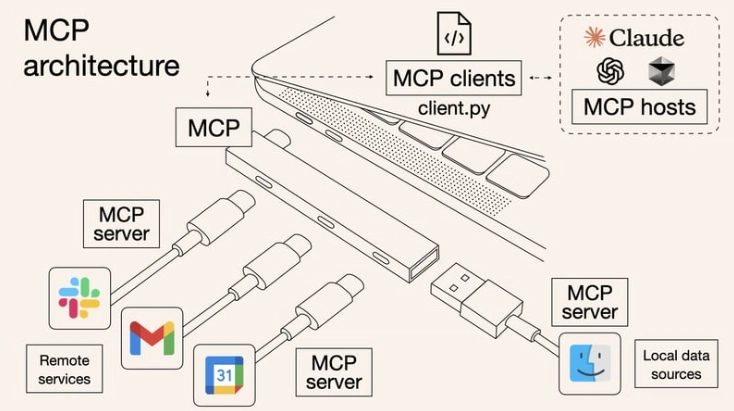

MCP is a standardized protocol designed to facilitate interactions between AI models and both local and remote resources. It functions like a USB-C interface for AI applications, providing a standardized way for AI models to connect with different data sources and tools.

MCP adopts a Client/Server (C/S) architecture, where an MCP Host can connect to multiple MCP Servers to extend AI capabilities.

As shown in the diagram above, MCP involves several key roles:

- MCP Hosts: Programs such as Claude Desktop, IDEs, or AI tools that wish to access resources via MCP.

- MCP Clients: Protocol clients that maintain a 1:1 connection with servers.

- MCP Servers: Lightweight programs that expose specific functionalities via the standardized Model Context Protocol.

- Local Resources: Computer resources (databases, files, services) that MCP servers can securely access.

- Remote Resources: Internet-accessible resources (e.g., APIs) that MCP servers can connect to.

MCP offers AI models more than tools (the specification also defines resources and prompts), but most current use centers on tools. Our goal today is to create an MCP Server for SafeLine WAF so that the WAF can be managed automatically through AI.
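To make the tool mechanism concrete, here is a minimal Go sketch of the message a client sends to invoke a tool. MCP messages are JSON-RPC 2.0, and a tool call uses the `tools/call` method with a tool name and an arguments object. The tool name `list_certificates` is hypothetical and chosen only for illustration; it is not taken from the project.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// rpcRequest is a JSON-RPC 2.0 request envelope, the wire format MCP uses.
type rpcRequest struct {
	JSONRPC string      `json:"jsonrpc"`
	ID      int         `json:"id"`
	Method  string      `json:"method"`
	Params  interface{} `json:"params,omitempty"`
}

// toolCallParams is the params object of an MCP "tools/call" request.
type toolCallParams struct {
	Name      string                 `json:"name"`
	Arguments map[string]interface{} `json:"arguments,omitempty"`
}

// newToolCall builds the request a client would send to invoke a tool.
func newToolCall(id int, tool string, args map[string]interface{}) rpcRequest {
	return rpcRequest{
		JSONRPC: "2.0",
		ID:      id,
		Method:  "tools/call",
		Params:  toolCallParams{Name: tool, Arguments: args},
	}
}

func main() {
	// "list_certificates" is a hypothetical tool name used for illustration.
	req := newToolCall(1, "list_certificates", nil)
	out, err := json.MarshalIndent(req, "", "  ")
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out))
}
```

In practice an SDK such as mcp-go builds and parses these envelopes for you; the sketch only shows what travels between client and server.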

Initial Implementation

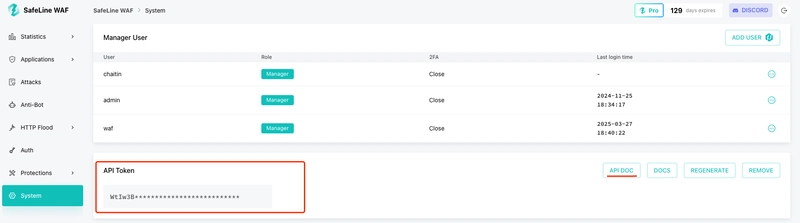

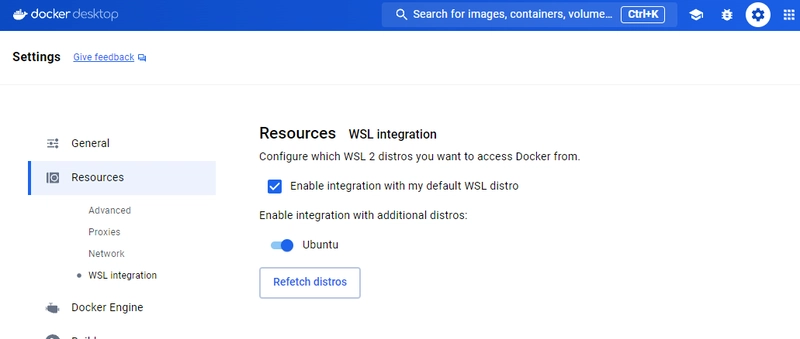

SafeLine has exposed a public API since version 6.x. Users can create API tokens in the management interface, which makes an MCP Server practical to implement. The screenshot shows an API token being created; this token is later configured in the MCP Server.

For MCP, official SDKs are available for Python, TypeScript, and Java, among others, while the community provides a Go SDK. We use github.com/mark3labs/mcp-go for our implementation.
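As a rough sketch of how such a token is used, the Go snippet below builds an authenticated request with only the standard library. It assumes the token is sent in an `X-SLCE-API-TOKEN` header; confirm this against the API documentation for your SafeLine version. The server address, the token, and the path `/api/open/example` are placeholders, not real endpoints.

```go
package main

import (
	"fmt"
	"net/http"
	"strings"
)

// tokenHeader is the header name assumed here for SafeLine API tokens;
// confirm it against the API documentation of your SafeLine version.
const tokenHeader = "X-SLCE-API-TOKEN"

// newAPIRequest builds a GET request for a path on the SafeLine API server
// and attaches the API token.
func newAPIRequest(apiServer, token, path string) (*http.Request, error) {
	url := strings.TrimRight(apiServer, "/") + "/" + strings.TrimLeft(path, "/")
	req, err := http.NewRequest(http.MethodGet, url, nil)
	if err != nil {
		return nil, err
	}
	req.Header.Set(tokenHeader, token)
	req.Header.Set("Accept", "application/json")
	return req, nil
}

func main() {
	// The address, token, and path below are placeholders, not real values.
	req, err := newAPIRequest("https://waf.example.com:9443", "my-token", "/api/open/example")
	if err != nil {
		panic(err)
	}
	fmt.Println(req.Method, req.URL.String())
}
```

Keeping request construction in one helper means every tool handler attaches the token the same way, and the token never needs to appear in tool descriptions or model-visible output.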

Functionality Implementation

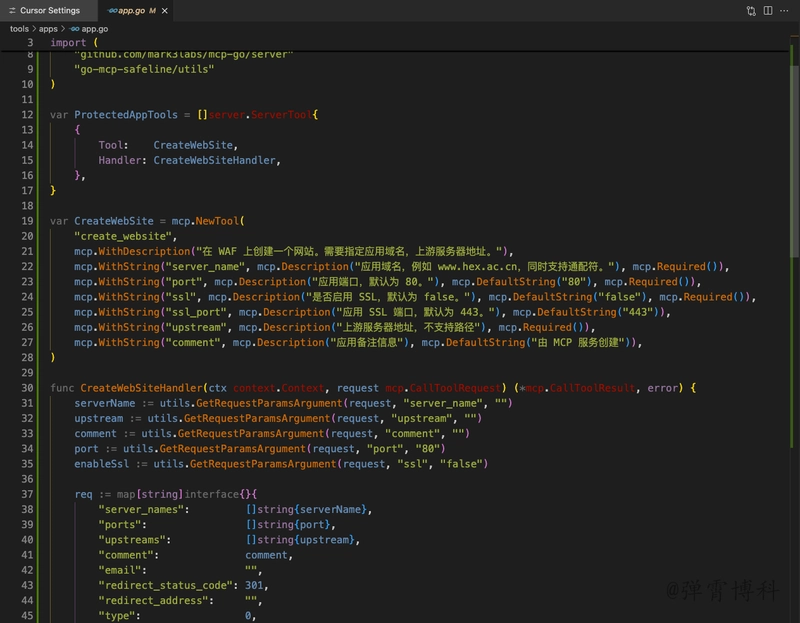

The project has a simple structure: main.go creates an SSE-based MCP Server, and the utils directory handles communication with the SafeLine API and holds helper functions.

The specific MCP tools are defined in the tools directory.

Currently Implemented Features:

- Creating protected applications

- Retrieving certificates

- Fetching attack events and logs

- Attack event statistics

- Response code statistics for WAF-protected websites

- Common time calculations
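Each feature above is exposed to the model as a tool with a name, a description, and a JSON Schema for its arguments; these are the fields MCP uses to advertise tools to clients. The sketch below models one such definition with plain structs. The tool name `get_attack_events` and its `start` and `end` parameters are hypothetical and are not copied from the project, which builds its definitions with mcp-go.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// property describes one tool argument in JSON Schema terms.
type property struct {
	Type        string `json:"type"`
	Description string `json:"description"`
}

// inputSchema is the JSON Schema object for a tool's arguments.
type inputSchema struct {
	Type       string              `json:"type"`
	Properties map[string]property `json:"properties"`
	Required   []string            `json:"required,omitempty"`
}

// toolDef mirrors the fields MCP uses to advertise a tool to clients.
type toolDef struct {
	Name        string      `json:"name"`
	Description string      `json:"description"`
	InputSchema inputSchema `json:"inputSchema"`
}

// attackEventsTool returns a hypothetical definition for an attack-log tool.
func attackEventsTool() toolDef {
	return toolDef{
		Name:        "get_attack_events",
		Description: "List attack events blocked by the WAF within a time range.",
		InputSchema: inputSchema{
			Type: "object",
			Properties: map[string]property{
				"start": {Type: "string", Description: "Range start in RFC 3339, e.g. 2025-03-01T00:00:00Z."},
				"end":   {Type: "string", Description: "Range end in RFC 3339; must not precede start."},
			},
			Required: []string{"start", "end"},
		},
	}
}

func main() {
	out, err := json.MarshalIndent(attackEventsTool(), "", "  ")
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out))
}
```

The descriptions are the only guidance the model gets about when and how to call the tool, so they carry formats and constraints that a human developer would normally look up in API documentation.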

Project Repository: https://cnb.cool/hex/go-mcp-safeline

Getting Started

Edit .env with your SafeLine API server address and API token, then start the service.

    TRANSPORT=sse
    MCPS_ADDR=http://127.0.0.1:8099
    SAFELINE_APISERVER=
    SAFELINE_APITOKEN=
    DEBUG=true
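For readers curious how a server might consume these settings, here is a minimal Go sketch that reads them from the process environment and fails fast when the two SafeLine values are empty. It is not the project's actual loader: the `config` struct, the `loadConfig` function, and the use of the sample values as defaults are all assumptions, and parsing the .env file itself is left out.

```go
package main

import (
	"errors"
	"fmt"
	"os"
)

// config holds the settings named in the sample .env file.
type config struct {
	Transport string
	Addr      string
	APIServer string
	APIToken  string
	Debug     bool
}

// loadConfig reads settings through lookup, which has the same signature as
// os.LookupEnv so tests can substitute a map.
func loadConfig(lookup func(string) (string, bool)) (config, error) {
	get := func(key, fallback string) string {
		if v, ok := lookup(key); ok && v != "" {
			return v
		}
		return fallback
	}
	cfg := config{
		Transport: get("TRANSPORT", "sse"),
		Addr:      get("MCPS_ADDR", "http://127.0.0.1:8099"),
		APIServer: get("SAFELINE_APISERVER", ""),
		APIToken:  get("SAFELINE_APITOKEN", ""),
		Debug:     get("DEBUG", "false") == "true",
	}
	// Without a server address and token, no SafeLine API call can succeed.
	if cfg.APIServer == "" || cfg.APIToken == "" {
		return config{}, errors.New("SAFELINE_APISERVER and SAFELINE_APITOKEN must be set")
	}
	return cfg, nil
}

func main() {
	cfg, err := loadConfig(os.LookupEnv)
	if err != nil {
		fmt.Println("config error:", err)
		return
	}
	fmt.Printf("transport=%s addr=%s debug=%t\n", cfg.Transport, cfg.Addr, cfg.Debug)
}
```

Validating at startup turns a blank `SAFELINE_APITOKEN` into an immediate, readable error instead of a failed tool call in the middle of a chat session.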

Use in Cursor

    {
      "mcpServers": {
        "mcp-safeline": {
          "url": "http://127.0.0.1:8099/sse",
          "env": {}
        }
      }
    }

Demo

I created two demonstration scenarios showcasing how MCP can be used for automated WAF management.

Full Video Demonstration: https://www.bilibili.com/video/BV1aDZWYuEBu/

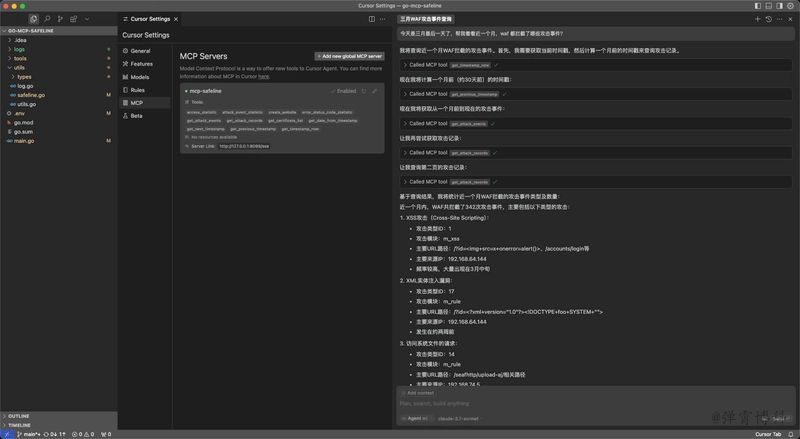

Scenario 1: What attacks did the WAF block in the last month?

The LLM analyzed the user's request, autonomously calculated the current time and the date one month ago, fetched all attack logs, and summarized the results for the user.
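The time calculation in this scenario can be sketched in Go as follows. This is an illustration of the idea, not the project's code: the function names are hypothetical, and whether the SafeLine API expects Unix seconds or another time format is an assumption to check against its documentation.

```go
package main

import (
	"fmt"
	"time"
)

// lastMonthRange returns the window from one calendar month before now
// up to now, both in UTC.
func lastMonthRange(now time.Time) (start, end time.Time) {
	end = now.UTC()
	start = end.AddDate(0, -1, 0)
	return start, end
}

// lastMonthRangeUnix is the same calculation expressed in Unix seconds,
// a convenient form for passing to an HTTP API.
func lastMonthRangeUnix(nowUnix int64) (startUnix, endUnix int64) {
	start, end := lastMonthRange(time.Unix(nowUnix, 0))
	return start.Unix(), end.Unix()
}

func main() {
	start, end := lastMonthRange(time.Now())
	fmt.Println(start.Format(time.RFC3339), end.Format(time.RFC3339))
}
```

Exposing this as a tool spares the model from doing date arithmetic itself, which language models often get wrong. Note that `AddDate` normalizes overflowing dates, so one month before March 31 resolves to early March rather than the end of February.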

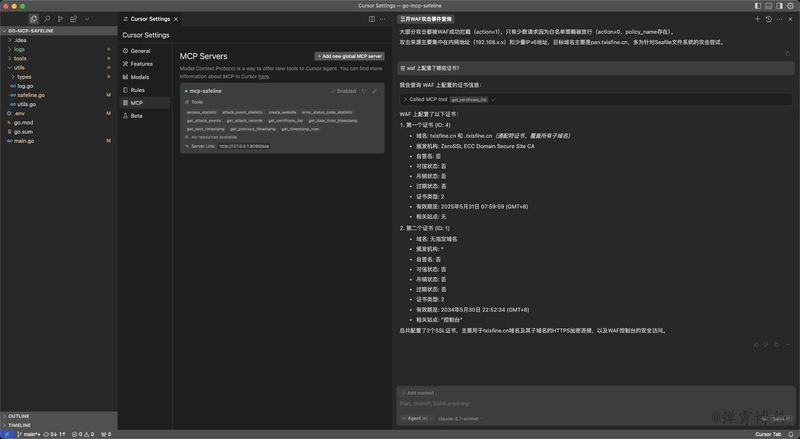

Scenario 2: What certificates are configured on the WAF?

The LLM retrieved the list of certificates and their details from the WAF and presented them to the user.

Conclusion

Implementing an MCP Server requires significant development effort. We need to understand the API, plan tools effectively, and write comprehensive descriptions for each variable and function. Writing MCP descriptions can be even more demanding than adding comments in traditional projects.

From my perspective, MCP Server development is like teaching an LLM how to use tools: it is not just about providing tools but also about explaining their background and usage clearly.

Although MCP decouples LLMs from specific tools, ensuring an effective implementation still requires skilled orchestration. The LLM, acting as the brain, must have not only strong reasoning abilities but also the capacity to select and plan MCP Server calls efficiently, maximizing tool utilization.

A well-thought-out tool invocation strategy, combined with intelligent LLM scheduling, can significantly enhance application intelligence, making it more adaptable and practical.
