Story of CocoIndex, at 1k stars

We have been working on CocoIndex, a real-time data framework for AI, for a while, with lots of excitement from the community. We officially crossed 1k stars earlier this week. Huge thanks to everyone who starred, forked, contributed, or shared the love ❤️!

CocoIndex is an ultra-performant data transformation framework, with its core engine written in Rust. The problem it tries to solve is to make it easy to prepare fresh data for AI, whether that means creating embeddings, building knowledge graphs, or performing other data transformations, and to take real-time data pipelines beyond traditional SQL.

The philosophy is to have the framework handle source updates, so that developers only need to define a series of data transformations, an approach inspired by spreadsheets.

Here's a bit of history about our open source journey:

Data Flow Programming

Unlike a workflow orchestration framework, where data is usually opaque, in CocoIndex data and data operations are first-class citizens. CocoIndex follows the dataflow programming model. Each transformation creates a new field based solely on its input fields, without hidden state or value mutation. All data before and after each transformation is observable, with lineage out of the box.

For example, this is one kind of data flow: Parse files -> Data Mapping -> Data Extraction -> Knowledge Graph

In particular, users don't define data operations like creation, update, or deletion. Rather, they define something like: "for a set of source data, this is the transformation or formula." The framework takes care of the data operations, such as when to create, update, or delete.

A flow is defined with operations like:

    # ingest
    data['content'] = flow_builder.add_source(...)

    # transform
    data['out'] = (
        data['content']
        .transform(...)
        .transform(...)
    )

    # collect data
    collector.collect(...)

    # export to db, vector db, graph db ...
    collector.export(...)
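To make the "new field from input fields, no mutation" idea concrete, here is a minimal sketch in plain Python. It is not CocoIndex's API: `Row` and `derive` are hypothetical names we made up for illustration, and the lineage bookkeeping is only an assumption about how such tracking could look.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Sequence


@dataclass(frozen=True)
class Row:
    """A record whose fields are only ever added, never overwritten (hypothetical)."""
    fields: dict[str, Any]
    # Maps each derived field to the input fields it was computed from.
    lineage: dict[str, tuple[str, ...]] = field(default_factory=dict)

    def derive(self, name: str, inputs: Sequence[str], fn: Callable[..., Any]) -> "Row":
        """Return a new Row with `name` computed purely from `inputs`."""
        if name in self.fields:
            raise ValueError(f"field {name!r} already exists; fields are never overwritten")
        value = fn(*(self.fields[i] for i in inputs))
        return Row({**self.fields, name: value}, {**self.lineage, name: tuple(inputs)})


row = Row({"content": "Hello World"})
row = row.derive("lower", ["content"], str.lower)
row = row.derive("words", ["lower"], str.split)
print(row.fields["words"])  # the value after the last transformation
print(row.lineage)          # which inputs each derived field came from
```

Because every step returns a new `Row` and records its inputs, the data before and after each transformation stays inspectable, which is the property the dataflow model is after.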

Data Freshness

As a data framework, CocoIndex puts data freshness front and center: incremental processing is one of its core values.

The framework takes care of:

- Change data capture

- Figuring out exactly what needs to be updated, and updating only that, without recomputing everything.

This makes it fast to reflect any source update in the target store. If you are concerned about surfacing stale data to AI agents and are spending a lot of effort on infrastructure to optimize latency, the framework handles it for you.
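As a rough illustration of "only update what changed", here is a minimal sketch in plain Python. It is not how CocoIndex is implemented: content fingerprinting with SHA-256 is a technique we swapped in for the example, and `fingerprint`, `sync`, `seen`, and `target` are hypothetical names.

```python
import hashlib
from typing import Callable


def fingerprint(content: str) -> str:
    """Stable hash of the source content, used to detect changes."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


def sync(
    source: dict[str, str],
    target: dict[str, str],
    seen: dict[str, str],
    transform: Callable[[str], str],
) -> dict[str, int]:
    """Bring `target` in line with `source`, recomputing only changed entries."""
    stats = {"created": 0, "updated": 0, "deleted": 0, "skipped": 0}
    for key, content in source.items():
        fp = fingerprint(content)
        if seen.get(key) == fp:
            stats["skipped"] += 1  # unchanged: no recomputation
            continue
        stats["updated" if key in seen else "created"] += 1
        target[key] = transform(content)
        seen[key] = fp
    for key in list(seen):
        if key not in source:  # source entry disappeared: remove derived data
            del seen[key]
            target.pop(key, None)
            stats["deleted"] += 1
    return stats


source = {"a.md": "one", "b.md": "two"}
target: dict[str, str] = {}
seen: dict[str, str] = {}
print(sync(source, target, seen, str.upper))  # first run processes everything
source["a.md"] = "uno"
print(sync(source, target, seen, str.upper))  # second run touches only a.md
```

Note that the caller never says "create", "update", or "delete"; those operations fall out of comparing the current source with what was seen before.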

Built-in modules and custom modules

We understand that preparing data is highly use-case specific and that there is no one-size-fits-all solution. Even with the latest advancements in models, the right choice is a balance of multiple factors such as quality, performance, and pricing. Many efforts in the data infra ecosystem optimize for different goals: parsers, embedding models, vector DBs, graph DBs, and many others.

We take the composition approach: instead of building everything ourselves, we provide native plugins that embrace the ecosystem, and we standardize the interface so that any module is easy to plug in and swap, much like LEGO.

This lets us focus on what we're best at: building the best incremental pipeline and compute engine in the AI era.
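The LEGO idea of a standardized interface can be sketched in plain Python as follows. This is only an illustration under our own assumptions, not CocoIndex's plugin API: `Embedder`, `LengthEmbedder`, `VowelCountEmbedder`, and `index` are hypothetical names, and the toy "embeddings" stand in for real models.

```python
from typing import Protocol


class Embedder(Protocol):
    """The one interface every embedding module is assumed to satisfy."""

    def embed(self, text: str) -> list[float]: ...


class LengthEmbedder:
    """Toy module: represents text by its length."""

    def embed(self, text: str) -> list[float]:
        return [float(len(text))]


class VowelCountEmbedder:
    """Toy module: represents text by its number of vowels."""

    def embed(self, text: str) -> list[float]:
        return [float(sum(text.lower().count(v) for v in "aeiou"))]


def index(docs: dict[str, str], embedder: Embedder) -> dict[str, list[float]]:
    """The pipeline depends only on the interface, not on a concrete module."""
    return {key: embedder.embed(text) for key, text in docs.items()}


docs = {"greeting": "hello"}
print(index(docs, LengthEmbedder()))
print(index(docs, VowelCountEmbedder()))  # swapped module, same pipeline code
```

Swapping one module for another changes a single argument; the pipeline code stays the same.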

Tooling

In addition to the standard statistics and reports in CocoIndex, we are building a product called CocoInsight. It has zero pipeline data retention and connects to your on-premise CocoIndex server for pipeline insights. This makes data directly visible and makes ETL pipelines easier to develop.

As we mentioned earlier, CocoIndex is heavily influenced by spreadsheets. This is directly reflected in CocoInsight, where you can see all the data in a spreadsheet and how it looks after each transformation.

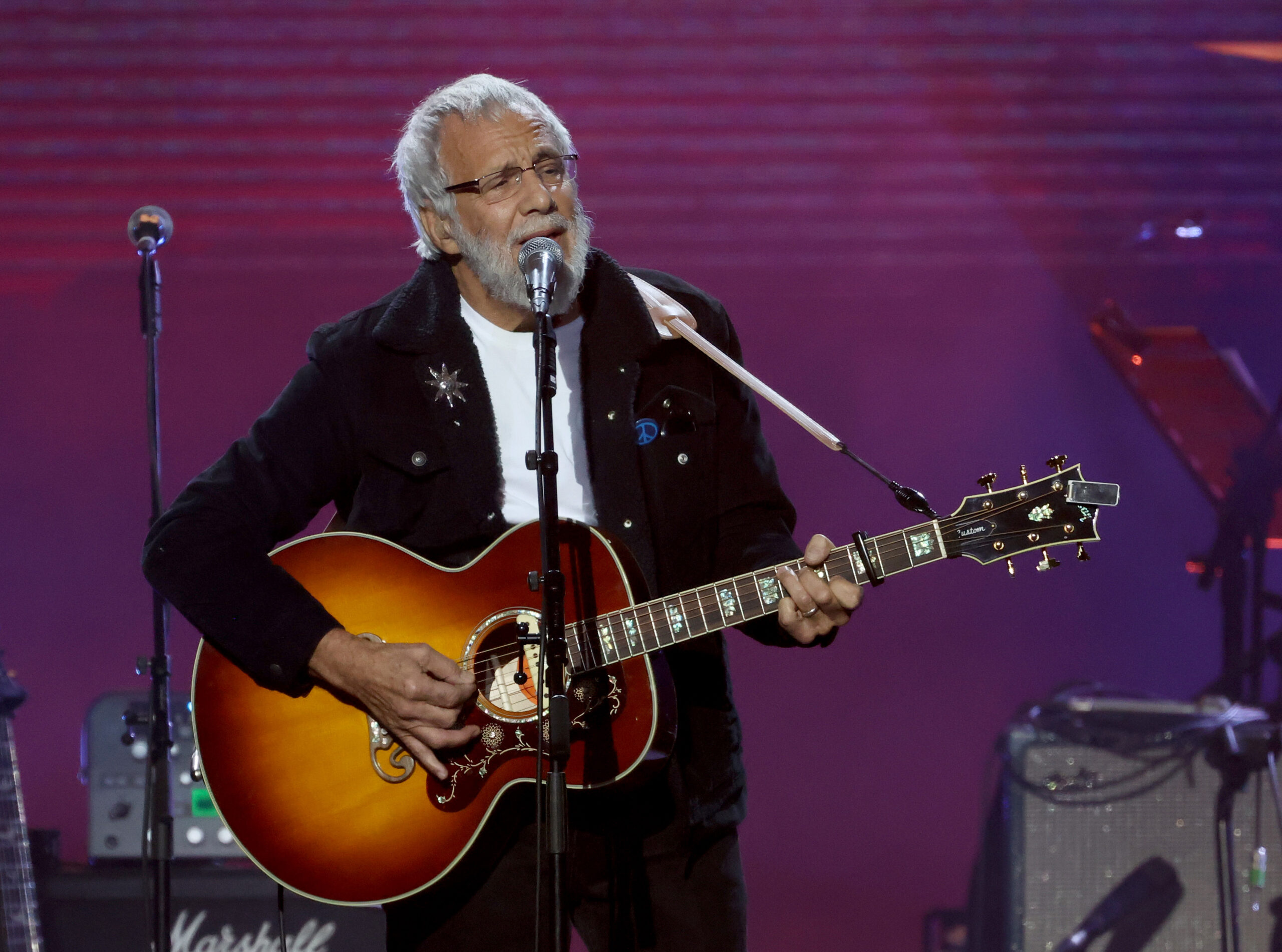

This is an example of mapping:

In addition to data insights for data transformations, we also aim to provide tooling that makes LLM transformations directly visible and understandable, for example:

- Understanding and troubleshooting the chunking, if there is any, and debugging why a chunk shows up in search results

- Understanding the relation extraction for graphs

- and more

Data should be transparent, especially for ETL frameworks.

Support us

We are constantly improving, and more features and examples are coming soon. If you love this article, please drop us a star ⭐ on our GitHub repo to help us grow. You can also find us on Discord; I try to be there 24/7.
