Salesforce AI Research Introduces New Benchmarks, Guardrails, and Model Architectures to Advance Trustworthy and Capable AI Agents

Salesforce AI Research has outlined a comprehensive roadmap for building more intelligent, reliable, and versatile AI agents. The recent initiative focuses on addressing foundational limitations in current AI systems—particularly their inconsistent task performance, lack of robustness, and challenges in adapting to complex enterprise workflows. By introducing new benchmarks, model architectures, and safety mechanisms, Salesforce is […] The post Salesforce AI Research Introduces New Benchmarks, Guardrails, and Model Architectures to Advance Trustworthy and Capable AI Agents appeared first on MarkTechPost.

Salesforce AI Research has outlined a comprehensive roadmap for building more intelligent, reliable, and versatile AI agents. The recent initiative focuses on addressing foundational limitations in current AI systems—particularly their inconsistent task performance, lack of robustness, and challenges in adapting to complex enterprise workflows. By introducing new benchmarks, model architectures, and safety mechanisms, Salesforce is establishing a multi-layered framework to scale agentic systems responsibly.

Addressing “Jagged Intelligence” Through Targeted Benchmarks

One of the central challenges highlighted in this research is what Salesforce terms jagged intelligence: the erratic behavior of AI agents across tasks of similar complexity. To systematically diagnose and reduce this problem, the team introduced the SIMPLE benchmark. This dataset contains 225 straightforward, reasoning-oriented questions that humans answer with near-perfect consistency but remain non-trivial for language models. The goal is to reveal gaps in models’ ability to generalize across seemingly uniform problems, particularly in real-world reasoning scenarios.

Complementing SIMPLE is ContextualJudgeBench, which evaluates an agent’s ability to maintain accuracy and faithfulness in context-specific answers. This benchmark emphasizes not only factual correctness but also the agent’s ability to recognize when to abstain from answering—an important trait for trust-sensitive applications such as legal, financial, and healthcare domains.

Strengthening Safety and Robustness with Trust Mechanisms

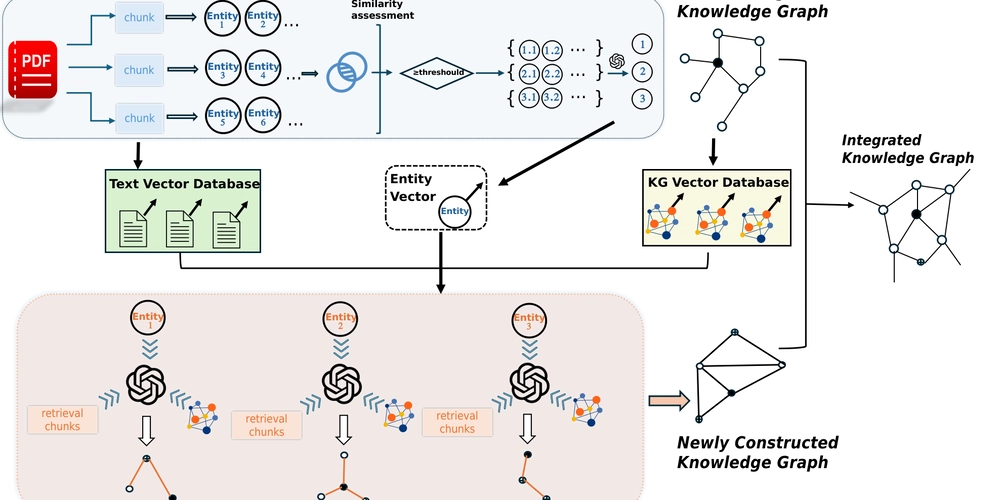

Recognizing the importance of AI reliability in enterprise settings, Salesforce is expanding its Trust Layer with new safeguards. The SFR-Guard model family has been trained on both open-domain and domain-specific (CRM) data to detect prompt injections, toxic outputs, and hallucinated content. These models serve as dynamic filters, supporting real-time inference with contextual moderation capabilities.

Another component, CRMArena, is a simulation-based evaluation suite designed to test agent performance under conditions that mimic real CRM workflows. This ensures AI agents can generalize beyond training prompts and operate predictably across varied enterprise tasks.

Specialized Model Families for Reasoning and Action

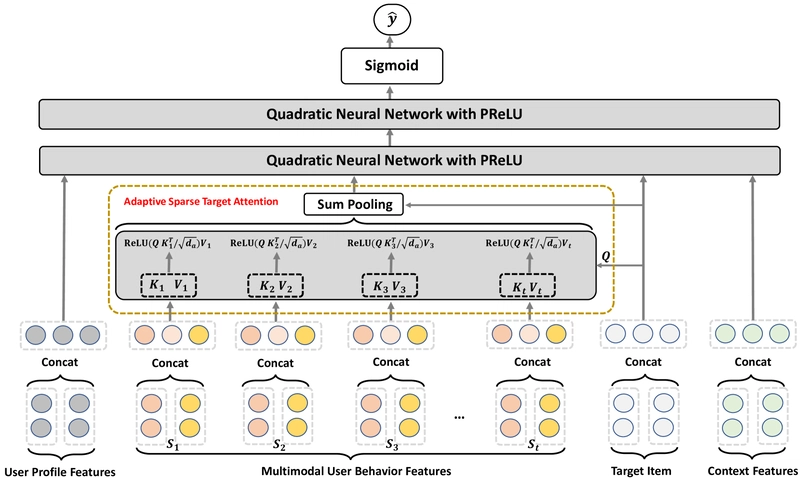

To support more structured, goal-directed behavior in agents, Salesforce introduced two new model families: xLAM and TACO.

The xLAM (eXtended Language and Action Models) series is optimized for tool use, multi-turn interaction, and function calling. These models vary in scale (from 1B to 200B+ parameters) and are built to support enterprise-grade deployments, where integration with APIs and internal knowledge sources is essential.

TACO (Thought-and-Action Chain Optimization) models aim to improve agent planning capabilities. By explicitly modeling intermediate reasoning steps and corresponding actions, TACO enhances the agent’s ability to decompose complex goals into sequences of operations. This structure is particularly relevant for use cases like document automation, analytics, and decision support systems.

Operationalizing Agents via Agentforce

These capabilities are being unified under Agentforce, Salesforce’s platform for building and deploying autonomous agents. The platform includes a no-code Agent Builder, which allows developers and domain experts to specify agent behaviors and constraints using natural language. Integration with the broader Salesforce ecosystem ensures agents can access customer data, invoke workflows, and remain auditable.

A study by Valoir found that teams using Agentforce can build production-ready agents 16 times faster compared to traditional software approaches, while improving operational accuracy by up to 75%. Importantly, Agentforce agents are embedded within the Salesforce Trust Layer, inheriting the safety and compliance features required in enterprise contexts.

Conclusion

Salesforce’s research agenda reflects a shift toward more deliberate, architecture-aware AI development. By combining targeted evaluations, fine-grained safety models, and purpose-built architectures for reasoning and action, the company is laying the groundwork for next-generation agentic systems. These advances are not only technical but structural—emphasizing reliability, adaptability, and alignment with the nuanced needs of enterprise software.

Check out the Technical details. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. Don’t Forget to join our 90k+ ML SubReddit.

![Southwest’s Free Wi-Fi Trial Could Backfire—Here’s Why [Roundup]](https://viewfromthewing.com/wp-content/uploads/2025/04/southwest-airlines-jet.jpeg?#)