New AI Model Predicts Clicks: Quadratic Interest Network (QIN) Outperforms in Multimodal CTR Prediction Challenge

This is a Plain English Papers summary of a research paper called New AI Model Predicts Clicks: Quadratic Interest Network (QIN) Outperforms in Multimodal CTR Prediction Challenge. If you like this kind of analysis, you should join AImodels.fyi or follow us on Twitter.

Advancing Recommender Systems with Multimodal CTR Prediction

Multimodal click-through rate (CTR) prediction is a cornerstone of modern recommender systems. These systems combine diverse data types, such as text descriptions, product images, and user behavior logs, to better understand user preferences and predict interactions. The primary challenge is using this rich multimodal information effectively while keeping inference fast enough for real-time applications.

To address these challenges, the Quadratic Interest Network (QIN) introduces a novel architecture for multimodal CTR prediction. This model ranked second in the Multimodal CTR Prediction Challenge Track of the WWW 2025 EReL@MIR Workshop, demonstrating its practical effectiveness.

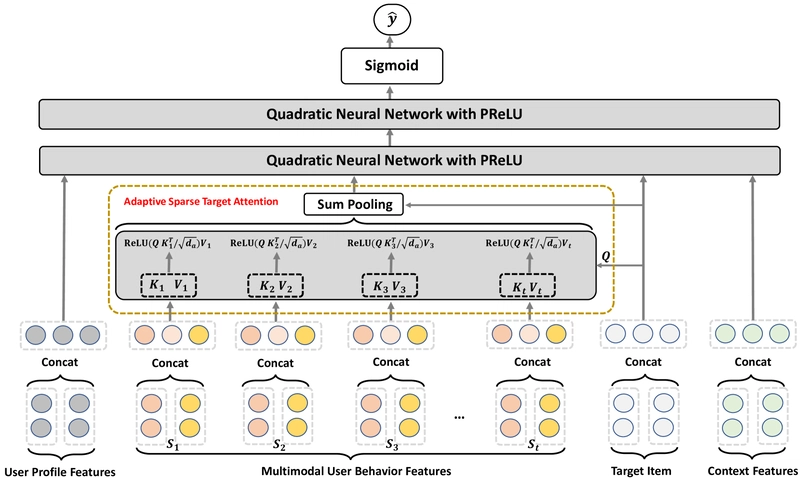

QIN incorporates two key innovations: Adaptive Sparse Target Attention (ASTA) for extracting relevant user behavior features, and Quadratic Neural Networks (QNN) for modeling complex feature interactions. This combination allows the model to dynamically focus on important aspects of user history while capturing sophisticated relationships between features.

Figure 1. The architecture of Quadratic Interest Network.

The model builds upon previous work in CTR prediction such as the Deep Interest Network, but introduces substantial improvements in how it processes multimodal data and models feature interactions.

| Rank | Team Name |
|---|---|
| 1st Place | momo |
| 2nd Place | DISCO.AHU |
| 3rd Place | jzzx.NTU |
| Task 1 Winner | delorean |
| Task 2 Winner | zhou123 |

Table 1: Top-5 teams on the leaderboard

The Quadratic Interest Network Architecture

QIN's architecture has two main components that work together to produce CTR predictions: Adaptive Sparse Target Attention (ASTA), which captures user interests from the behavior sequence, and the Quadratic Neural Network (QNN), which models interactions between features.

Capturing User Interests with Adaptive Sparse Target Attention

The Adaptive Sparse Target Attention (ASTA) mechanism models user behavior sequences with target-aware attention. ASTA treats the target item as the query and the user's behavior history as the keys and values. This lets the model dynamically weight each item in the user's history by its relevance to the current target item.

Unlike standard attention mechanisms, which use a SoftMax activation to produce a probability distribution over all items, ASTA obtains sparse attention through a ReLU activation. Because ReLU outputs exactly zero for negative inputs, irrelevant behaviors can be dropped entirely rather than merely down-weighted, which reduces noise and improves generalization.
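To make the contrast concrete, here is a minimal NumPy sketch of ReLU-based target attention next to a SoftMax version. It is an illustration under stated assumptions, not the authors' implementation: the scaled dot-product scoring, the absence of learned projections, and the function names `relu_target_attention` and `softmax_target_attention` are all hypothetical choices made for this example.

```python
import numpy as np

def relu_target_attention(target, history, mask=None):
    """Pool a behavior history with ReLU attention weights.

    target:  (d,) embedding of the candidate item (the query).
    history: (T, d) embeddings of past behaviors (keys and values).
    mask:    optional (T,) boolean array, True for real (non-padded) items.
    Assumption: plain scaled dot-product scores, no learned projections.
    """
    d = target.shape[-1]
    scores = history @ target / np.sqrt(d)   # (T,) relevance of each behavior
    weights = np.maximum(scores, 0.0)        # ReLU: negative scores become exactly 0
    if mask is not None:
        weights = weights * mask             # padded positions contribute nothing
    return weights @ history, weights

def softmax_target_attention(target, history):
    """Same scoring, but SoftMax gives every item a strictly positive weight."""
    scores = history @ target / np.sqrt(target.shape[-1])
    exp = np.exp(scores - scores.max())      # subtract max for numerical stability
    weights = exp / exp.sum()
    return weights @ history, weights

target = np.array([1.0, 0.0])
history = np.array([[1.0, 0.0],    # aligned with the target
                    [-1.0, 0.0],   # opposite to the target
                    [0.0, 1.0]])   # orthogonal to the target

pooled, relu_w = relu_target_attention(target, history)
_, soft_w = softmax_target_attention(target, history)
print("ReLU weights:   ", relu_w)
print("SoftMax weights:", soft_w)
```

In this toy history only the first behavior gets a non-zero ReLU weight, while SoftMax still assigns some weight to all three.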

The approach aligns with research trends seen in the Multi-Granularity Interest Retrieval Refinement Network, which similarly emphasizes selective focus on user interests at multiple levels of granularity.

Modeling Complex Feature Interactions with Quadratic Neural Networks

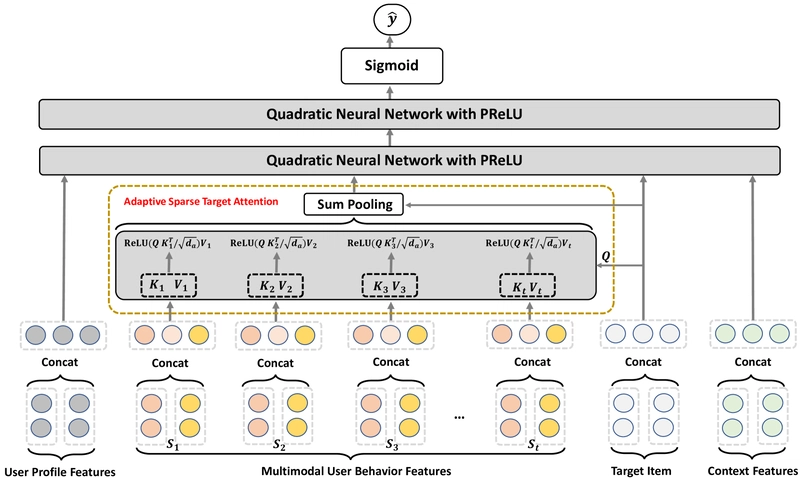

The Quadratic Neural Network (QNN) is the second key component of QIN. Unlike traditional neural networks, which process raw features directly, QNN uses quadratic polynomials as its fundamental input units. This enables explicit modeling of pairwise feature interactions, capturing more complex relationships than standard architectures such as multilayer perceptrons (MLPs) or CrossNet.

Figure 2. Comparison of MLP and QNN. The input to the MLP consists of raw features, while the QNN uses linearly independent quadratic polynomials as input.

QNN leverages the Khatri-Rao product to efficiently implement quadratic interactions between features. This mathematical foundation allows QIN to model the complex interplay between different aspects of user behavior and item characteristics. For example, the model can capture how a user's past purchase history interacts with the visual characteristics of a potential item to influence click likelihood.
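One way to see why the Khatri-Rao product helps with quadratic interactions is the identity sketched below: multiplying two linear projections of the same input element-wise gives the same result as applying a Khatri-Rao-structured weight matrix to all pairwise products of the input. This is a generic NumPy illustration, not the paper's QNN layer; the helper `khatri_rao`, the weight names `w1` and `w2`, and the sizes are assumptions made for the example.

```python
import numpy as np

def khatri_rao(a, b):
    """Column-wise Kronecker product: (m, k) and (n, k) -> (m * n, k)."""
    m, k = a.shape
    n, k_b = b.shape
    if k != k_b:
        raise ValueError("both matrices need the same number of columns")
    # Entry (i * n + j, c) equals a[i, c] * b[j, c].
    return np.einsum("ik,jk->ijk", a, b).reshape(m * n, k)

rng = np.random.default_rng(0)
x = rng.normal(size=4)           # a single input feature vector
w1 = rng.normal(size=(4, 3))     # hypothetical projection weights
w2 = rng.normal(size=(4, 3))

# Cheap form: two linear maps followed by an element-wise product.
cheap = (x @ w1) * (x @ w2)

# Explicit form: every pairwise product x_p * x_q (16 terms), then one
# linear map whose weights are the Khatri-Rao product of w1 and w2.
explicit = np.kron(x, x) @ khatri_rao(w1, w2)

print(np.allclose(cheap, explicit))
```

The cheap form never materializes the 16 pairwise terms, which is the efficiency argument: the quadratic polynomial is evaluated at the cost of two linear layers.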

This approach shares conceptual similarities with the Collaborative Contrastive Network, which also emphasizes learning rich feature interactions, though through different technical mechanisms.

Optimizing the Network with Effective Training Procedures

QIN employs a straightforward training approach, using a linear layer to transform the QNN output into a logit followed by a Sigmoid function to obtain probability predictions. The model is trained using binary cross-entropy loss, which effectively measures the discrepancy between predicted probabilities and true labels.
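The prediction head and loss described above can be sketched in a few lines of plain Python. This is only an illustration of sigmoid plus binary cross-entropy computed from logits; the function names and the numerically stable formulation are choices made here, not details taken from the paper.

```python
import math

def sigmoid(z):
    """Map a logit to a probability, avoiding overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def bce_with_logits(logits, labels):
    """Mean binary cross-entropy computed directly from logits.

    Uses the stable form max(z, 0) - z * y + log(1 + exp(-|z|)), which is
    algebraically equal to -[y * log(p) + (1 - y) * log(1 - p)], p = sigmoid(z).
    """
    total = 0.0
    for z, y in zip(logits, labels):
        total += max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
    return total / len(logits)

logits = [2.0, -1.0, 0.0]   # hypothetical outputs of the final linear layer
labels = [1, 0, 1]          # 1 = clicked, 0 = not clicked
print([round(sigmoid(z), 4) for z in logits])
print(round(bce_with_logits(logits, labels), 4))
```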

The researchers experimented with various loss augmentation methods, including BCE + BPR Loss, Focal Loss, and Contrastive Loss, but found that the standard binary cross-entropy provided competitive performance. This aligns with observations in related work like the MIM: Multi-Modal Content Interest Modeling framework, which also prioritizes effective but straightforward training approaches for multimodal recommendation systems.

Experimental Evaluation and Analysis

Setting Up the Experiments

All experiments were conducted on an NVIDIA GeForce RTX 4090 GPU using the FuxiCTR framework. The implementation used a learning rate of 2e-3, embedding weight decay of 2e-4, batch size of 8192, and feature embedding dimension of 128. For the QNN component, the researchers set L=M=4 and employed a Dropout rate of 0.1 for regularization.

The code, training logs, hyperparameter configurations, and model checkpoints are available on GitHub, providing full transparency and reproducibility for the research community.

Superior Performance Against the Baseline

QIN was evaluated against the Deep Interest Network (DIN), a classic CTR prediction model based on user behavior sequences that served as the official baseline for the challenge. The results demonstrate QIN's substantial improvement over the baseline.

| Method | AUC on validation set |
|---|---|
| DIN | 0.8655 |
| QIN | 0.9701 |

Table 2: Performance Comparison with DIN

The improvement of 0.1046 in validation AUC (from 0.8655 to 0.9701) is a large gain in ranking quality over the baseline, reinforcing the effectiveness of QIN's approach to multimodal CTR prediction.
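For readers less familiar with the metric, AUC can be read as the probability that a randomly chosen clicked example receives a higher score than a randomly chosen non-clicked one. The sketch below computes it by brute force over all positive/negative pairs. The function name `auc` and the toy labels and scores are made up for illustration and have no connection to the challenge data.

```python
def auc(labels, scores):
    """Pairwise AUC: share of (positive, negative) pairs ranked correctly.

    Ties count as half a correct pair. This is O(P * N) and meant for
    illustration; production code would use a sort-based method.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

toy_labels = [1, 0, 1, 0]
toy_scores = [0.9, 0.8, 0.4, 0.1]
print(auc(toy_labels, toy_scores))  # 3 of 4 pairs are ordered correctly -> 0.75
```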

Component Analysis: What Makes QIN Work?

To understand the contribution of individual components, the researchers conducted a comprehensive ablation study. They created several variants of QIN by removing or modifying key components and measured the impact on performance.

| Variant | AUC on validation set |
|---|---|
| QIN w/o QNN | 0.7396 |
| QIN w/o ASTA | 0.9321 |
| ASTA w/ SoftMax | 0.9490 |
| QNN w/o PReLU | 0.9584 |
| ASTA w/ Dropout | 0.9681 |
| QIN | 0.9701 |

Table 3: Ablation Study of QIN.

The most dramatic performance drop occurred when replacing QNN with a standard MLP (QIN w/o QNN), resulting in an AUC decrease from 0.9701 to 0.7396. This highlights the crucial role QNN plays in modeling complex feature interactions.

Removing the Adaptive Sparse Target Attention (QIN w/o ASTA) reduced performance to 0.9321, confirming the value of attention-based user interest modeling. Interestingly, replacing ASTA's ReLU-based sparse attention with standard SoftMax attention (ASTA w/ SoftMax) lowered performance to 0.9490, supporting the claim that sparse attention provides better noise reduction and generalization.

The choice of activation function in QNN also proved important, with PReLU outperforming ReLU (0.9701 versus 0.9584 without PReLU). Finally, adding Dropout to ASTA slightly reduced performance (0.9681 versus 0.9701), suggesting that the sparse nature of ASTA already provides sufficient regularization.
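The size of each effect is easier to compare as a drop relative to the full model. The short script below does that arithmetic using only the validation AUC values reported in Table 3; the variable names are illustrative.

```python
# Validation AUC values copied from Table 3.
full_qin = 0.9701
variants = {
    "QIN w/o QNN": 0.7396,
    "QIN w/o ASTA": 0.9321,
    "ASTA w/ SoftMax": 0.9490,
    "QNN w/o PReLU": 0.9584,
    "ASTA w/ Dropout": 0.9681,
}

# AUC lost by each variant compared with the full model, rounded to 4 places.
drops = {name: round(full_qin - value, 4) for name, value in variants.items()}

# Print from the largest drop to the smallest.
for name, drop in sorted(drops.items(), key=lambda item: item[1], reverse=True):
    print(f"{name:<16} -{drop:.4f}")
```

Read this way, removing QNN costs about 0.23 AUC, roughly six times the 0.038 lost by removing ASTA.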

Future Implications for Multimodal Recommender Systems

The Quadratic Interest Network demonstrates significant potential for advancing multimodal recommender systems in practical applications. By effectively combining sparse attention mechanisms with quadratic neural networks, QIN establishes a powerful framework for processing diverse data types while maintaining the efficiency needed for industrial deployment.

The strong performance in the Multimodal CTR Prediction Challenge confirms the practical value of this approach. With an AUC of 0.9798 on the leaderboard, QIN provides a solid foundation for future research in multimodal recommendation systems.

The synergistic relationship between QNN and ASTA represents a particularly promising direction for future work. As recommender systems continue to incorporate richer and more diverse data sources, architectures that can effectively model complex relationships while focusing attention on the most relevant information will become increasingly valuable.