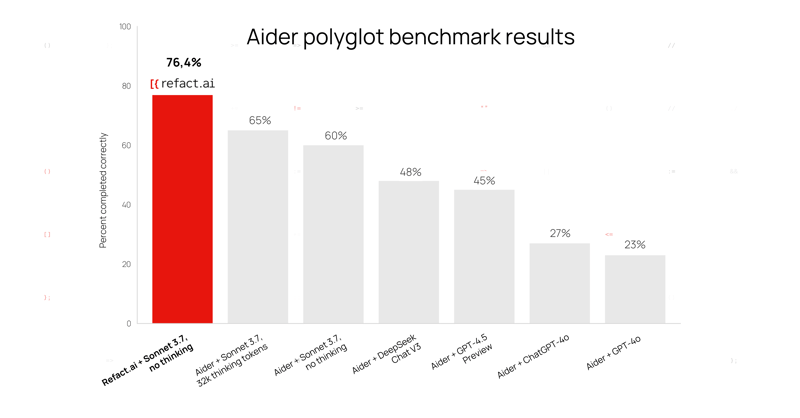

Our AI Agent + 3.7 Sonnet ranked #1 on Aider’s polyglot bench — a 76.4% score

We’ve built an open-source AI Agent for programming in the IDE, and it ranked #1 on Aider’s Polyglot Benchmark with a 76.4% score. The benchmark tests autonomous problem-solving on 225 of the hardest coding exercises across C++, Go, Java, JavaScript, Python, and Rust.

With this score, we’ve outperformed Aider’s own 60.4% with the same model, Claude 3.7 Sonnet, as well as DeepSeek Chat V3, GPT-4.5 Preview, and ChatGPT-4o.

How? Our AI Agent uses an iterative problem-solving approach: it writes code, validates it, fixes errors, and repeats until the task is solved correctly. No shortcuts — just reliable, production-ready results.

While SWE Bench gets a lot of attention, we’ve found Polyglot to be a far better measure of AI agents’ problem-solving abilities. It’s not just about passing tests or raw code generation — it’s about reasoning, precision, and delivering working solutions.

For more details on our tech setup and approach, check out our blog post.

We’d love to hear your thoughts and feedback!

About Aider’s polyglot benchmark

The benchmark evaluates how well AI can handle 225 of the hardest coding exercises from Exercism across C++, Go, Java, JavaScript, Python, and Rust. It focuses exclusively on the most challenging problems and measures:

- Can AI write new code that integrates seamlessly into existing codebases?

- Can AI successfully apply all its changes to source files without human intervention?

The full test set is available in the Aider polyglot benchmark repo on GitHub.

Why Polyglot > SWE Bench

SWE Bench is popular and often seen as a key benchmark for AI coding agents. However, it has significant limitations:

- Only tests Python

- Relies on just 12 repositories (e.g., Django, SymPy)

- Benchmarked models are often pre-trained on these repos (skewing results)

- Only one file is changed per task (unrealistic for typical development work)

- Human-AI interaction is oversimplified (in reality, devs adjust how they collaborate with AI).

Because of these constraints, SWE Bench doesn’t truly measure an AI agent’s efficiency in real software engineering workflows, only in a controlled environment.

In contrast, Polyglot is much more representative and realistic — it imitates the environments developers work in every day and reflects actual needs. It measures how well AI can autonomously interact with diverse, multi-language projects.

So, we’d like to thank Aider for introducing this comprehensive benchmark! It provides great insights into AI coding tools and helps drive better solutions.

Our approach: How Refact.ai achieved #1 in the leaderboard

Many AI Agents rely on a single-shot approach — get a task, generate code once and hope for the best. But LLMs aren’t all-knowing — they have limits and make mistakes, so their first attempt often isn’t accurate and reliable.

_Sure, you can pre-train models to ace specific tasks that trend on X… But what’s the point? This doesn’t translate to real-world performance._

At Refact.ai, we do things differently. **Our AI Agent uses a multi-step process:** instead of settling for the first attempt, it validates its work through testing and iteration until the task is done right.

We call it a feedback loop:

- Writes code: The agent generates code based on the task description.

- Validates: Runs automated checks for issues.

- Iterates: If problems are found, the agent corrects the code, fixes bugs, and re-tests until the task is successfully completed.

- Delivers the result, which will be correct most of the time!

This drives the real value of AI — actually solving problems, not just scoring well on benchmarks.

Read more about the tech side in our blog.
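
To make the feedback loop above concrete, here is a minimal Python sketch of the write, validate, fix, repeat cycle. It is an illustration under stated assumptions, not Refact.ai's actual implementation: `feedback_loop`, `run_checks`, `Attempt`, and `stub_model` are hypothetical names, the "model" is a hard-coded stub standing in for an LLM call, and `exec` is used only to keep the example self-contained (a real agent would presumably run tests in an isolated environment).

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Attempt:
    code: str
    error: Optional[str]  # None means every check passed


def run_checks(code: str, checks: str) -> Optional[str]:
    """Execute candidate code plus its checks; return an error message or None."""
    namespace: dict = {}
    try:
        exec(code, namespace)    # define the candidate solution
        exec(checks, namespace)  # run assertions against it
    except Exception as exc:     # any failure becomes feedback for the next round
        return f"{type(exc).__name__}: {exc}"
    return None


def feedback_loop(
    task: str,
    checks: str,
    generate: Callable[[str, Optional[Attempt]], str],
    max_rounds: int = 5,
) -> list[Attempt]:
    """Write, validate, and fix until the checks pass or the round budget runs out."""
    history: list[Attempt] = []
    previous: Optional[Attempt] = None
    for _ in range(max_rounds):
        code = generate(task, previous)                     # write (or rewrite) the code
        previous = Attempt(code, run_checks(code, checks))  # validate it
        history.append(previous)
        if previous.error is None:                          # deliver once checks pass
            break
    return history


def stub_model(task: str, previous: Optional[Attempt]) -> str:
    """Hypothetical stand-in for an LLM: the first draft is buggy, the retry is fixed."""
    if previous is None:
        return "def add(a, b):\n    return a - b\n"
    return "def add(a, b):\n    return a + b\n"


CHECKS = "assert add(2, 3) == 5, 'add(2, 3) should be 5'\n"

if __name__ == "__main__":
    attempts = feedback_loop("Implement add(a, b)", CHECKS, stub_model)
    for number, attempt in enumerate(attempts, start=1):
        print(number, "passed" if attempt.error is None else attempt.error)
```

With the stub, the first round fails its assertion and the second round passes, so the loop stops after two attempts. The `max_rounds` cap is a design choice in this sketch: it keeps a model that never converges from looping forever, at the cost of sometimes returning an unsolved task.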

Key features of Refact.ai’s autonomous AI Agent

Refact.ai’s advanced AI Agent thinks and acts like a developer, handling software engineering tasks end-to-end.

- Autonomous task execution

- Deep contextual understanding

- Dev tools integration (GitHub, Docker, PostgreSQL, MCP Servers, and more)

- Memory and continuous improvement

- Human-AI collaboration

- Open source

Try Refact.ai in your IDE

Vibe coding is the future of software development. Get 10x productivity with Refact.ai Agent in your IDE, handling complex programming tasks for you while you focus on core work.

✅ Available to everyone in VS Code and JetBrains.

We'd be happy if you tried Refact.ai Agent on your software development tasks and shared your opinion!