Creating an AI Assistant for Technical Documentation – Part 1: Why and How I Started This Project


This post is also available in Portuguese: Read in Portuguese

Hello! I'm Gustavo, a back-end software developer with a passion for Java, Python, automation, software design, and, more recently, practical applications of AI. I'm starting my technical writing here, sharing some of the knowledge and experience I've gained from projects I've been developing on my own.

I've always been a learn-by-doing kind of person: break things, fix them, and work on real problems. So in addition to picking up new tools, I thought I'd blog about the experience along the way. Perhaps it will help someone out there, or at least spark some interesting discussions here.

## The Project: an AI Assistant for Technical Documentation

Ever feel lost in oceans of technical documentation, or spend too much time wondering how a codebase really works?

That question stuck with me. Together with my curiosity about AI, automation, and architecture, it prompted me to start a side project: a smart assistant that can understand the structure of a Java project and answer questions in the style of an "internal team chat."

## Why I Started This Project

The idea came up while I was developing the TrustScore API, a product review application built with Java and Spring Boot. I wanted to find out how feasible it is to automate codebase comprehension, while also exploring AI concepts applied to software development.

It also served as a great opportunity to hone:

- Python and FastAPI for real projects
- Data extraction and structuring
- Data-oriented architecture and API design
- Source code integration and AI

## Project Overview

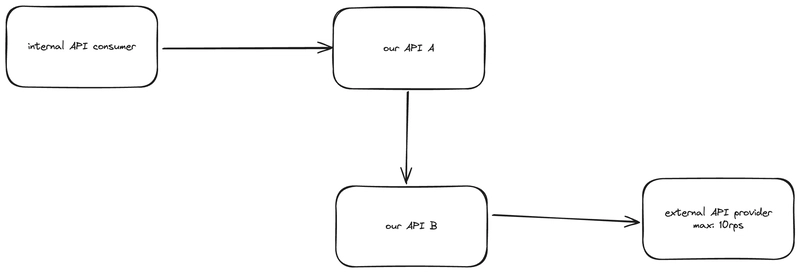

The plan is to build a system in which an AI assistant can answer questions about a project's code. To get there, I laid out the following steps:

1. Crawler: a script that crawls `.java` files, extracting class names, methods, attributes, and their corresponding comments.
2. Storage: all the data is organized and saved in a local SQLite database.
3. API with FastAPI: a REST API displays the data extracted in a well-formatted way.
4. AI Agent: an LLM will use the API data to respond in a natural way.
5. Interface: a basic chat-style front-end to communicate with the AI.

## First Steps: Creating the Crawler

Before any AI can respond to questions about a codebase, it must first know what's in there. So I began by creating a basic Python crawler to learn the layout of the Java application I had created (the TrustScore API).

Its purpose was to extract structural information from .java files, namely:

- names of classes and their locations;
- methods, their signatures, and related comments;
- applicable variables and attributes.

My approach was a straightforward line-by-line file read with some regular expressions. Nothing too complicated: the goal was simply to get something working quickly and locally, without pulling in larger external libraries.
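To make the line-by-line idea concrete, here is a minimal sketch of what such a regex-based pass could look like. The patterns, the `parse_java_source` function, and the dictionary layout are assumptions made for illustration and are not taken from the ProjectInsight repository. The patterns are deliberately simple: they only catch members declared on one line with an explicit access modifier, and they are not a full Java parser.

```python
import re

# Simplified, hypothetical patterns. They cover common single-line
# declarations only and will miss package-private or multi-line members.
CLASS_RE = re.compile(
    r"^\s*(?:public\s+|abstract\s+|final\s+)*(?:class|interface|enum)\s+(\w+)"
)
METHOD_RE = re.compile(
    r"^\s*(?:public|protected|private)\s+(?:static\s+|final\s+)*"
    r"[\w<>\[\], ?]+\s+(\w+)\s*\(([^)]*)\)"
)
FIELD_RE = re.compile(
    r"^\s*(?:public|protected|private)\s+(?:static\s+|final\s+)*"
    r"([\w<>\[\], ?]+)\s+(\w+)\s*(?:=.*)?;"
)


def parse_java_source(source: str, file_path: str = "<memory>") -> list[dict]:
    """Scan Java source line by line and collect classes, methods, and fields."""
    classes = []
    current = None       # the class that members are currently attached to
    comment_lines = []   # comment text seen since the last declaration

    for line in source.splitlines():
        stripped = line.strip()

        # Collect comment text so it can be attached to the next declaration.
        if stripped.startswith(("//", "/*", "*")):
            text = stripped.lstrip("/*").strip()
            if text:
                comment_lines.append(text)
            continue

        # Blank lines and annotations do not discard the pending comment.
        if not stripped or stripped.startswith("@"):
            continue

        comment = " ".join(comment_lines)
        comment_lines = []

        if m := CLASS_RE.match(line):
            current = {"name": m.group(1), "file_path": file_path,
                       "comment": comment, "methods": [], "fields": []}
            classes.append(current)
        elif current is not None and (m := METHOD_RE.match(line)):
            current["methods"].append({"name": m.group(1),
                                       "signature": m.group(0).strip(),
                                       "comment": comment})
        elif current is not None and (m := FIELD_RE.match(line)):
            current["fields"].append({"type": m.group(1), "name": m.group(2)})

    return classes


if __name__ == "__main__":
    sample = """
    public class ReviewService {
        private final ReviewRepository repository;

        // Returns the average score for a product.
        public double averageScore(Long productId) {
            return 0.0;
        }
    }
    """
    for cls in parse_java_source(sample, "ReviewService.java"):
        print(cls["name"], [m["name"] for m in cls["methods"]])
```

A regex pass like this trades accuracy for simplicity. It needs no dependencies and is easy to debug, but nested classes, generics with unusual spacing, and multi-line signatures would need either more patterns or a real parser.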

The extracted data is stored in a SQLite database, structured to make future querying easy. The database will serve as the foundation for the next steps, particularly the API and the later AI integration.
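As an illustration of the storage step, the sketch below uses Python's built-in `sqlite3` module with one possible schema. The table names (`classes`, `methods`, `attributes`), their columns, and the helper functions are assumptions for this example, not the actual schema of the project.

```python
import sqlite3

# Hypothetical schema: one row per class, with methods and attributes
# linked back to their class through class_id.
SCHEMA = """
CREATE TABLE IF NOT EXISTS classes (
    id        INTEGER PRIMARY KEY,
    name      TEXT NOT NULL,
    file_path TEXT NOT NULL,
    comment   TEXT
);
CREATE TABLE IF NOT EXISTS methods (
    id        INTEGER PRIMARY KEY,
    class_id  INTEGER NOT NULL REFERENCES classes(id),
    name      TEXT NOT NULL,
    signature TEXT NOT NULL,
    comment   TEXT
);
CREATE TABLE IF NOT EXISTS attributes (
    id        INTEGER PRIMARY KEY,
    class_id  INTEGER NOT NULL REFERENCES classes(id),
    name      TEXT NOT NULL,
    type      TEXT NOT NULL
);
"""


def open_database(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the database and make sure the tables exist."""
    conn = sqlite3.connect(path)
    conn.row_factory = sqlite3.Row  # rows can be converted to dicts
    conn.executescript(SCHEMA)
    return conn


def save_class(conn, name, file_path, methods, attributes, comment=""):
    """Insert one class with its methods and attributes in one transaction."""
    with conn:  # commits on success, rolls back if an insert fails
        cur = conn.execute(
            "INSERT INTO classes (name, file_path, comment) VALUES (?, ?, ?)",
            (name, file_path, comment),
        )
        class_id = cur.lastrowid
        conn.executemany(
            "INSERT INTO methods (class_id, name, signature, comment) "
            "VALUES (?, ?, ?, ?)",
            [(class_id, m["name"], m["signature"], m.get("comment", ""))
             for m in methods],
        )
        conn.executemany(
            "INSERT INTO attributes (class_id, name, type) VALUES (?, ?, ?)",
            [(class_id, a["name"], a["type"]) for a in attributes],
        )
    return class_id


def methods_of(conn, class_name: str) -> list[dict]:
    """Return the stored methods of a class, ordered by name."""
    rows = conn.execute(
        "SELECT m.name, m.signature, m.comment FROM methods m "
        "JOIN classes c ON c.id = m.class_id WHERE c.name = ? ORDER BY m.name",
        (class_name,),
    )
    return [dict(row) for row in rows]
```

Keeping methods and attributes in their own tables, rather than in a single text column, makes questions such as "which methods does this class have?" a plain `JOIN` instead of a string search.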

A dedicated post is coming soon on how I built this crawler from scratch: the step-by-step process, the challenges, and how I approached the data structure.

GitHub Repository: https://github.com/gustavogutkoski/ProjectInsight

## Next Steps

I'm currently building the API with FastAPI to expose the extracted code data. The goal is to have this API ready to be consumed by an AI agent in the near future.

After that, I plan to:

- Add an LLM to provide context-based answers
- Create a simple front-end with a chat-like interface
- Enrich the retrieved information so the AI has more context for each question
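None of this is built yet, but one simple way to give an LLM context is to pick the stored records that share words with the question and paste them into the prompt. The sketch below shows that idea with plain keyword matching. The `build_prompt` function, the record fields, and the prompt wording are hypothetical, and a real version might use embeddings or a smarter ranking instead.

```python
def build_prompt(question: str, methods: list[dict], max_items: int = 5) -> str:
    """Build an LLM prompt from the method records that best match a question."""
    # Keep only longer words so that "is", "the", and similar are ignored.
    words = {w.lower().strip("?.,") for w in question.split() if len(w) > 3}

    def score(record: dict) -> int:
        # Count how many question words appear in the record's text.
        haystack = " ".join(
            [record["class_name"], record["name"], record.get("comment", "")]
        ).lower()
        return sum(1 for word in words if word in haystack)

    ranked = sorted(methods, key=score, reverse=True)[:max_items]
    relevant = [record for record in ranked if score(record) > 0]

    lines = [
        f"- {r['class_name']}: {r['signature']} ({r.get('comment') or 'no comment'})"
        for r in relevant
    ]
    context = "\n".join(lines) or "(no matching code found)"

    return (
        "Answer using only the code context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )
```

The returned string would then be sent to whichever model is chosen. Keeping the context limited to a handful of matching records keeps the prompt short and makes it easier to see why the model answered the way it did.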

---

## Wrapping Up

This project has been both a technical and an artistic challenge: taking code scraping, REST APIs, and AI concepts and turning them into something that could be truly useful in the everyday workflow of any dev team.

In future posts, I'll go deeper into how I'm building the API and how I intend to add an AI model to it all.

I'm always open to ideas, suggestions, and feedback! Follow me here on Dev.to to keep up with the next parts of this series.

⚙️ This text was written with the support of an AI assistant for writing and editing. All ideas, project structure, and technical implementations are my own.
