AI & The Future of Software Engineering: Advanced Prompting Techniques for 10x Efficiency

Introduction

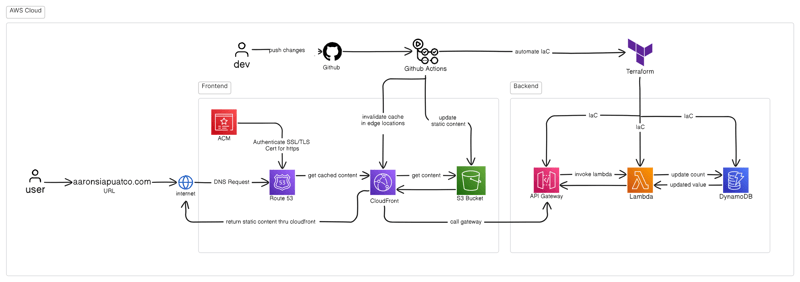

In my previous article, I shared my experiment demonstrating how AI tools allowed me to build an application with an unfamiliar cloud service in just a week and a half—a task that would have typically taken me 10-15 times longer using traditional development methods. The response was overwhelming: many of you reported similar productivity gains, with some achieving even faster development cycles and improved code quality through strategic AI prompting techniques. Others shared struggles with inconsistent results and the challenge of getting AI to produce production-ready code.

Today, I'm focusing on the how—the specific prompting techniques and strategies that can help you consistently achieve these dramatic efficiency gains. As AI becomes integral to our development workflow, prompt engineering is emerging as a critical meta-skill that separates highly productive developers from the rest.

The Evolution of Developer-AI Collaboration

We've quickly moved beyond basic code completion. Today's AI tools can generate entire components, troubleshoot complex issues, and even suggest architectural approaches. Yet many developers still experience frustration when their prompts yield generic, often incorrect results.

The difference between mediocre and exceptional AI output almost always comes down to how you frame your requests. Just as clear requirements lead to better human-written code, well-crafted prompts lead to better AI-generated solutions.

Key Principles of Effective AI Prompting for Developers

1. Contextual Anchoring & Rules-Based Frameworks

Before requesting any code, provide precise project context. Let's look at a concrete example:

// Weak prompt:

Write a user authentication system using Firebase.

// Strong prompt:

I'm building a React Native mobile app (Expo SDK 48) with Firebase backend. We need a user authentication system that supports:

- Email/password login

- Google OAuth

- Phone verification

The app uses Redux for state management and follows Material Design guidelines. We handle form validation with Formik and Yup. The authentication state should persist between app launches.

Here's how we've structured our Redux store so far:

{
  user: {
    isAuthenticated: boolean,
    profile: UserProfile | null,
    authError: string | null,
    isLoading: boolean
  }
}

The difference is dramatic. The second prompt gives the AI a clear understanding of your tech stack, design patterns, and specific requirements, resulting in code that integrates seamlessly with your existing project.
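To make the store shape in the strong prompt concrete, here is a minimal sketch of a typed reducer for it. The `UserProfile` fields and the action names are assumptions made for illustration (the prompt does not define them), and persistence between launches is left out.

```typescript
// Hypothetical profile shape; the prompt above does not define UserProfile.
interface UserProfile {
  uid: string;
  email: string | null;
}

// Mirrors the `user` slice quoted in the strong prompt.
interface UserState {
  isAuthenticated: boolean;
  profile: UserProfile | null;
  authError: string | null;
  isLoading: boolean;
}

// Illustrative action names, not taken from the original project.
type AuthAction =
  | { type: "auth/loginStarted" }
  | { type: "auth/loginSucceeded"; profile: UserProfile }
  | { type: "auth/loginFailed"; error: string }
  | { type: "auth/loggedOut" };

const initialUserState: UserState = {
  isAuthenticated: false,
  profile: null,
  authError: null,
  isLoading: false,
};

// Plain reducer with no library dependency, so the sketch stays self-contained.
function userReducer(
  state: UserState = initialUserState,
  action: AuthAction
): UserState {
  switch (action.type) {
    case "auth/loginStarted":
      return { ...state, isLoading: true, authError: null };
    case "auth/loginSucceeded":
      return {
        isAuthenticated: true,
        profile: action.profile,
        authError: null,
        isLoading: false,
      };
    case "auth/loginFailed":
      return {
        isAuthenticated: false,
        profile: null,
        authError: action.error,
        isLoading: false,
      };
    case "auth/loggedOut":
      return initialUserState;
    default:
      return state;
  }
}
```

Pasting types like these into the prompt alongside the store shape gives the AI one more concrete anchor for the code it generates.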

AI-assisted IDEs like Cursor take this concept further with rules-based frameworks that let you predefine project context and coding standards. Similarly, Claude Projects (not an IDE, but a workspace for organizing conversations and artifacts) lets you collect and reuse prompt templates and contextual information. Separately from its rules feature, Cursor also supports the Model Context Protocol (MCP), which provides a standardized way for AI models to access external context and tools.

For example, you can create a rules file for a React Native project (Cursor reads project rules from the .cursor/rules directory):

# React Native Development Standards

You are an expert in React Native development with the following standards:

- Use functional components with hooks, never class components

- Follow atomic design principles for UI components

- Use TypeScript for type safety with proper interfaces for all props

- Implement responsive layouts using React Native's flexbox

- Follow the project's established folder structure:

  - /src/components - UI components

  - /src/screens - Screen components

  - /src/navigation - Navigation configuration

  - /src/hooks - Custom hooks

  - /src/utils - Helper functions

  - /src/services - API clients and services

  - /src/store - Redux store setup and slices

Reference our standard imports:

- `import { colors, spacing } from '../theme';`

- `import { useAppDispatch, useAppSelector } from '../hooks';`

These rules are automatically applied whenever the AI generates code for matching files, ensuring consistency across your codebase. Resources like cursordirectory.com offer collections of pre-built rules for various frameworks and languages.

2. Constraint Definition

Explicitly state your boundaries and requirements:

// Additional constraints to add to your prompt:

The solution must:

- Be optimized for mobile performance (minimize bundle size)

- Include appropriate error handling for network issues

- Follow security best practices (e.g., token refresh, secure storage)

- Use functional components and hooks (no class components)

- Include TypeScript type definitions

- Follow our project's eslint configuration (we use Airbnb style guide)

The more specific your constraints, the more tailored the output will be to your needs.

3. Progressive Iteration

Start with architecture before diving into implementation. Here's an effective sequence I use:

- First prompt: Request a high-level design/architecture

- Second prompt: Ask for implementation of a specific component based on the architecture

- Third prompt: Refine the implementation based on specific requirements

- Fourth prompt: Ask for tests or edge case handling

Breaking a complex system into chunks like this keeps the workflow manageable and produces better results.
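The four-step sequence can be sketched as a small pipeline in which each prompt carries the previous reply forward. This is only an illustration: `ask` is a hypothetical stand-in for whatever AI tool you use (kept synchronous here for simplicity), and the prompt wording is invented.

```typescript
// Hypothetical stand-in for an AI tool call; real tools are asynchronous.
type Ask = (prompt: string) => string;

interface Stage {
  name: string;
  // Builds this stage's prompt from the previous stage's reply (null for the first).
  buildPrompt: (previousReply: string | null) => string;
}

// Illustrative stages following the sequence above.
const stages: Stage[] = [
  {
    name: "architecture",
    buildPrompt: () =>
      "Propose a high-level architecture for the authentication system.",
  },
  {
    name: "implementation",
    buildPrompt: (prev) =>
      `Based on this architecture:\n${prev}\nImplement the login component.`,
  },
  {
    name: "refinement",
    buildPrompt: (prev) =>
      `Refine this implementation so auth state persists between launches:\n${prev}`,
  },
  {
    name: "tests",
    buildPrompt: (prev) => `Write tests and edge case handling for:\n${prev}`,
  },
];

// Runs the stages in order, feeding each reply into the next prompt.
function runStages(pipeline: Stage[], ask: Ask): string[] {
  const replies: string[] = [];
  let previous: string | null = null;
  for (const stage of pipeline) {
    const reply = ask(stage.buildPrompt(previous));
    replies.push(reply);
    previous = reply;
  }
  return replies;
}
```

In practice you would review and edit each reply before passing it on; the point of the sketch is only that later prompts build on earlier output rather than starting from scratch.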

4. Error Resolution Patterns

When AI-generated code doesn't work as expected (and this will happen), these troubleshooting approaches can dramatically improve resolution time:

// Instead of this:

This code doesn't work. Fix it.

// Try this:

When implementing the Google OAuth flow, I'm getting the following error:

[EXACT ERROR MESSAGE]

Here's the relevant code:

[CODE SNIPPET WITH LINE NUMBERS]

I think the issue might be related to how we're handling the auth credentials. Can you:

1. Explain what's causing this error

2. Provide a fixed implementation

3. Explain why your solution fixes the issue

Learning to diagnose issues effectively, escape repetitive error loops, and recognize when to switch tools or approaches is crucial for productive AI collaboration.
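If you write this kind of error report often, a small helper can assemble it for you. The sketch below is one possible shape, with hypothetical names (`ErrorReport`, `formatErrorPrompt`); it numbers the snippet's lines so the AI can refer to them.

```typescript
// Hypothetical input shape for the structured error prompt shown above.
interface ErrorReport {
  task: string;          // what you were implementing, e.g. "the Google OAuth flow"
  errorMessage: string;  // the exact error text
  code: string;          // the relevant snippet
  hypothesis?: string;   // your own guess at the cause, if you have one
}

// Prefixes each line of the snippet with its line number.
function numberLines(code: string, startLine: number = 1): string {
  return code
    .split("\n")
    .map((line, i) => `${startLine + i}: ${line}`)
    .join("\n");
}

// Assembles the prompt in the same order as the example above.
function formatErrorPrompt(report: ErrorReport): string {
  const parts: string[] = [
    `When implementing ${report.task}, I'm getting the following error:`,
    report.errorMessage,
    "Here's the relevant code:",
    numberLines(report.code),
  ];
  if (report.hypothesis) {
    parts.push(`I think the issue might be related to ${report.hypothesis}.`);
  }
  parts.push(
    [
      "Can you:",
      "1. Explain what's causing this error",
      "2. Provide a fixed implementation",
      "3. Explain why your solution fixes the issue",
    ].join("\n")
  );
  return parts.join("\n\n");
}
```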

Advanced Techniques I've Tested

1. Schema-First Development

This approach has been particularly effective for me. Before asking for implementation, I provide the complete data model:

// Example schema-first prompt:

Here's our PostgreSQL schema for a blogging platform:

CREATE TABLE users (
  id UUID PRIMARY KEY DEFAULT uuid_generate_v4(),
  email TEXT UNIQUE NOT NULL,
  name TEXT NOT NULL,
  bio TEXT,
  created_at TIMESTAMP WITH TIME ZONE DEFAULT NOW()
);

CREATE TABLE posts (
  id UUID PRIMARY KEY DEFAULT uuid_generate_v4(),
  title TEXT NOT NULL,
  body TEXT NOT NULL,
  user_id UUID REFERENCES users(id) ON DELETE CASCADE,
  published BOOLEAN DEFAULT false,
  created_at TIMESTAMP WITH TIME ZONE DEFAULT NOW(),
  updated_at TIMESTAMP WITH TIME ZONE DEFAULT NOW()
);

CREATE TABLE comments (
  id UUID PRIMARY KEY DEFAULT uuid_generate_v4(),
  body TEXT NOT NULL,
  user_id UUID REFERENCES users(id) ON DELETE CASCADE,
  post_id UUID REFERENCES posts(id) ON DELETE CASCADE,
  created_at TIMESTAMP WITH TIME ZONE DEFAULT NOW()
);

Using Supabase as our backend and Next.js API routes, implement the following:

1. A complete CRUD API for posts

2. Proper error handling

3. Authentication middleware to ensure users can only modify their own posts

This approach gives the AI a precise understanding of your data relationships, making the generated code much more accurate.
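As an illustration of what the schema lets the AI (or you) derive, here is a hedged sketch of row types for `users` and `posts`, plus the ownership check behind requirement 3. It is not Supabase or Next.js code: `authorizePostChange` and `AuthResult` are hypothetical names, and columns with defaults are assumed to always be populated.

```typescript
// Row types mirroring the SQL above. Timestamps are assumed to arrive as ISO strings.
interface UserRow {
  id: string;
  email: string;
  name: string;
  bio: string | null; // nullable: no NOT NULL constraint
  created_at: string;
}

interface PostRow {
  id: string;
  title: string;
  body: string;
  user_id: string | null; // the schema does not declare NOT NULL here
  published: boolean;
  created_at: string;
  updated_at: string;
}

// Hypothetical result type for the authorization check.
type AuthResult =
  | { ok: true }
  | { ok: false; status: 401 | 403; message: string };

// Users may only modify their own posts (requirement 3 in the prompt).
function authorizePostChange(
  currentUserId: string | null,
  post: Pick<PostRow, "user_id">
): AuthResult {
  if (currentUserId === null) {
    return { ok: false, status: 401, message: "Not signed in" };
  }
  if (post.user_id !== currentUserId) {
    return { ok: false, status: 403, message: "Not the post owner" };
  }
  return { ok: true };
}
```

Note how the nullable `user_id` shows up in the types: that is exactly the sort of detail an AI tends to get wrong without the schema in front of it.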

2. Test-Driven Prompting

Writing test cases before requesting implementation provides implicit requirements that AI can understand:

// Test-first prompt example:

I need to implement a utility function called `calculateDiscountedPrice` with the following test cases:

describe('calculateDiscountedPrice', () => {
  test('applies percentage discount correctly', () => {
    expect(calculateDiscountedPrice(100, { type: 'percentage', value: 20 })).toBe(80);
  });

  test('applies fixed amount discount correctly', () => {
    expect(calculateDiscountedPrice(100, { type: 'fixed', value: 15 })).toBe(85);
  });

  test('never returns less than zero', () => {
    expect(calculateDiscountedPrice(10, { type: 'fixed', value: 15 })).toBe(0);
  });

  test('handles tiered discounts correctly', () => {
    const tieredDiscount = {
      type: 'tiered',
      tiers: [
        { threshold: 0, percentage: 0 },
        { threshold: 50, percentage: 5 },
        { threshold: 100, percentage: 10 }
      ]
    };

    expect(calculateDiscountedPrice(40, tieredDiscount)).toBe(40);
    expect(calculateDiscountedPrice(75, tieredDiscount)).toBe(71.25);
    expect(calculateDiscountedPrice(200, tieredDiscount)).toBe(180);
  });
});

Please implement the calculateDiscountedPrice function that satisfies all these test cases.

This approach is particularly effective because it precisely defines the expected behavior through concrete examples.
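For reference, here is one implementation that should satisfy the test cases above. It is a sketch rather than the only valid answer: the `Discount` type is inferred from the test inputs, and the tests do not pin down whether a price exactly equal to a threshold qualifies for that tier, so the inclusive comparison is an assumption.

```typescript
interface Tier {
  threshold: number;  // minimum price at which this tier applies
  percentage: number; // percent off for this tier
}

// Discount shapes inferred from the test inputs.
type Discount =
  | { type: "percentage"; value: number }
  | { type: "fixed"; value: number }
  | { type: "tiered"; tiers: Tier[] };

function calculateDiscountedPrice(price: number, discount: Discount): number {
  let amountOff = 0;
  switch (discount.type) {
    case "percentage":
      amountOff = (price * discount.value) / 100;
      break;
    case "fixed":
      amountOff = discount.value;
      break;
    case "tiered": {
      // Pick the tier with the highest threshold the price reaches.
      // Assumption: a price equal to the threshold qualifies.
      let percentage = 0;
      let bestThreshold = -Infinity;
      for (const tier of discount.tiers) {
        if (price >= tier.threshold && tier.threshold >= bestThreshold) {
          bestThreshold = tier.threshold;
          percentage = tier.percentage;
        }
      }
      amountOff = (price * percentage) / 100;
      break;
    }
  }
  // Never return less than zero.
  return Math.max(0, price - amountOff);
}
```

Computing the amount off as `price * percentage / 100` and then subtracting keeps the arithmetic exact for the values in the tests, which matters because `toBe` compares numbers strictly.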

3. Comparative Prompting

Asking for multiple solution approaches to the same problem helps you understand tradeoffs:

// Comparative prompt example:

I need to implement a caching layer for API responses in our React application. Please provide three different approaches:

1. A solution using React Query

2. A custom hook implementation with localStorage

3. A Redux middleware approach

For each approach, please include:

- Complete implementation code

- Pros and cons

- Performance considerations

- When this approach would be most appropriate

This technique is excellent for learning and making informed architectural decisions.

4. Multi-Tool Orchestration

I've evolved my workflow over time, and my current approach leverages different tools for their specific strengths:

Cursor: My primary tool for in-IDE completions, project-specific code generation, and day-to-day development. Its context-aware completions and integration with the development environment make it my go-to coding companion.

Claude: I use Claude (Desktop or Web) to create projects that centralize all my conversations and contextual documents before implementation. This becomes my planning and architectural thinking space before I move to Cursor for implementation.

ChatGPT: I rarely use this directly anymore, except occasionally through Cursor's interface when specific capabilities are needed.

This workflow combines the strengths of each tool: Claude's strong reasoning and context management for initial planning, and Cursor's integrated development experience for implementation. The key insight is that different phases of development benefit from different AI interaction patterns.

Practical Framework: The PREP Method

After months of refining my approach, I've developed what I call the PREP method:

- Problem definition: Clearly articulate what you're solving

- Requirements specification: List constraints and expectations

- Example integration: Show how this fits into your larger system

- Pattern suggestion: Guide the AI toward preferred approaches

Here's a template I use for complex tasks:

Problem Definition

I need to implement [specific functionality] for [specific purpose].

Requirements

- Functional requirements: [list]

- Non-functional requirements: [performance, security, etc.]

- Must use: [required libraries/frameworks]

- Must not use: [prohibited approaches]

System Context

This component will integrate with our system as follows:

- Upstream dependencies: [what provides input to this component]

- Downstream consumers: [what consumes output from this component]

- Related systems: [other systems this interacts with]

Current Implementation / Examples

Here's how we've implemented similar functionality elsewhere:

[code snippet or description]

Preferred Patterns

We prefer to use [specific design patterns] in our codebase.

This framework has consistently yielded higher-quality code with fewer iterations across various projects and AI tools.
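If you reuse the template often, it can be captured as a small data structure and rendered on demand. The sketch below is one possible encoding; `PrepPrompt` and `renderPrepPrompt` are hypothetical names, and the requirement categories from the template are flattened into a single list for brevity.

```typescript
// Hypothetical structure for the PREP template above.
interface PrepPrompt {
  problem: string;
  requirements: string[];      // functional, non-functional, must use, must not use
  systemContext: string[];     // upstream, downstream, related systems
  examples: string;            // code snippet or description of similar work
  preferredPatterns: string[];
}

// Renders a list in the article's bullet style.
function bullets(items: string[]): string {
  return items.map((item) => `- ${item}`).join("\n");
}

// Produces the prompt text with the same section headings as the template.
function renderPrepPrompt(p: PrepPrompt): string {
  return [
    "Problem Definition",
    p.problem,
    "Requirements",
    bullets(p.requirements),
    "System Context",
    bullets(p.systemContext),
    "Current Implementation / Examples",
    p.examples,
    "Preferred Patterns",
    bullets(p.preferredPatterns),
  ].join("\n\n");
}
```

Keeping the sections as typed fields makes it harder to forget one, for example by sending a prompt with no system context.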

Real-World Example: Building a Complex Component with Supabase

Here's a recent example of how I approached building a real-time collaboration feature using Supabase:

Problem Definition

I need to implement a real-time collaborative document editing feature using Supabase's Realtime functionality.

Requirements

- Users should see others' cursor positions and selections

- Changes should propagate to all connected users within 500ms

- The solution should handle conflict resolution

- Must work with our existing React/TypeScript frontend

- Must use Supabase Realtime (not WebSockets directly)

- Should maintain a change history for undo/redo functionality

System Context

- We use Supabase for authentication and data storage

- Our document editor is built on Slate.js

- Document structure is stored in a PostgreSQL table with jsonb

Current Implementation

Here's our current document schema:

[schema details]

Here's how we currently load documents (without real-time):

[code snippet]

Preferred Patterns

- We use React Query for data fetching

- We prefer custom hooks for encapsulating functionality

- We use Zustand for local state management

This detailed prompt resulted in a high-quality implementation that required minimal adjustments to integrate with our system.

Measuring Success: Quality Metrics

Beyond simply "getting the job done," I track the following metrics to evaluate my AI collaboration effectiveness:

- Iteration Count: How many prompt-response cycles were needed to get to a working solution

- Time-to-Working-Solution: Total time from initial prompt to working implementation

- Code Review Feedback: Qualitative feedback from teammates on AI-generated code

- Maintainability Score: How well the generated code conforms to our project's style and patterns

Using these metrics, I've been able to refine my prompting techniques and achieve increasingly better results over time.
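The two quantitative metrics are easy to compute from a simple log. Here is a minimal sketch, assuming you record one entry per task with hypothetical field names; the qualitative metrics (review feedback, maintainability) are left out.

```typescript
// Hypothetical log entry; field names are illustrative.
interface JournalEntry {
  task: string;
  iterations: number;  // prompt-response cycles until the code worked
  startedAtMs: number; // epoch milliseconds of the first prompt
  workingAtMs: number; // epoch milliseconds when the solution worked
}

interface JournalSummary {
  tasks: number;
  averageIterations: number;
  averageMinutesToWorking: number;
}

// Averages Iteration Count and Time-to-Working-Solution across entries.
function summarizeJournal(entries: JournalEntry[]): JournalSummary {
  if (entries.length === 0) {
    return { tasks: 0, averageIterations: 0, averageMinutesToWorking: 0 };
  }
  let totalIterations = 0;
  let totalMinutes = 0;
  for (const entry of entries) {
    totalIterations += entry.iterations;
    totalMinutes += (entry.workingAtMs - entry.startedAtMs) / 60000;
  }
  return {
    tasks: entries.length,
    averageIterations: totalIterations / entries.length,
    averageMinutesToWorking: totalMinutes / entries.length,
  };
}
```

Comparing these averages before and after a change in prompting style gives a rough signal of whether the change actually helped.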

The Skill Development Curve

Effective prompting improves with deliberate practice. Start with smaller, well-defined tasks and gradually increase complexity as you learn what works. I recommend keeping a "prompt journal" documenting particularly effective or ineffective approaches.

Common pitfalls to avoid:

- Being too vague or general

- Providing insufficient context

- Not specifying constraints clearly

- Expecting the AI to infer your specific preferences

- Asking for too much in a single prompt

"Vibe Coding" vs. Knowledge-Based Approaches

The term "Vibe Coding" has recently gained popularity in the developer community. Coined by AI researcher Andrej Karpathy in early 2025, it refers to an AI-dependent programming technique where developers describe a problem in plain language and let the AI generate code without fully understanding the implementation details. As defined by Wikipedia, vibe coding "shifts the programmer's role from manual coding to guiding, testing, and refining the AI-generated source code."

While this approach can be incredibly efficient for prototyping and simple applications, I advocate for what I call "Knowledge-Based Vibe Coding." This hybrid approach embraces AI's speed while maintaining a developer's critical understanding of architecture, security implications, and best practices.

Simon Willison, a respected developer, cautions that "vibe coding your way to a production codebase is clearly risky" since most software engineering involves evolving existing systems where code quality and understandability are crucial.

The knowledge-based approach becomes especially important in several scenarios:

// When things break

Instead of: "Fix this error: [paste error message]"

Try: "I'm getting this error: [error message]. I think it's related to how we're handling authentication state. The Redux store might not be properly updated after token refresh. Can you explain what might be happening and suggest a solution?"

// When considering security

Instead of: "Add user registration to my app"

Try: "Add user registration with proper password hashing, email verification, and protection against common vulnerabilities like SQL injection and XSS attacks. Follow OWASP security best practices."

// When thinking about scalability

Instead of: "Create a database for my app"

Try: "Design a database schema that will scale to millions of users with efficient indexing strategies. Consider read/write patterns and potential bottlenecks."

This approach ensures you're leveraging AI's capabilities while maintaining the critical thinking and domain expertise that separates professional developers from those just "vibing" with AI.

Advanced Tools: MCP and RAG Integration

Modern AI-enhanced IDEs are implementing sophisticated frameworks for context management and retrieval:

Model Context Protocol (MCP)

MCP is an open standard designed to facilitate interaction between large language models and external data sources. It establishes a client-server architecture that allows AI coding assistants to access and use additional context beyond what's provided in the prompt.

For example, in Cursor IDE, MCP servers can:

- Retrieve API documentation dynamically

- Access your project's custom libraries and frameworks

- Provide real-time linting feedback

- Execute and test code in safe environments

- Connect to databases or other systems
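Under the hood, MCP clients and servers exchange JSON-RPC 2.0 messages. As a rough sketch of the message shape only (not the official SDK), this is what a client's request to invoke a server tool could look like; the tool name `search_docs` and its arguments are hypothetical.

```typescript
// Generic JSON-RPC 2.0 request envelope.
interface JsonRpcRequest<P> {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params: P;
}

// Parameters for invoking a tool exposed by an MCP server.
interface ToolCallParams {
  name: string;
  arguments: Record<string, unknown>;
}

// Builds a "tools/call" request for the named tool.
function buildToolCall(
  id: number,
  name: string,
  args: Record<string, unknown>
): JsonRpcRequest<ToolCallParams> {
  return {
    jsonrpc: "2.0",
    id,
    method: "tools/call",
    params: { name, arguments: args },
  };
}

// Hypothetical tool and arguments, for illustration only.
const toolRequest = buildToolCall(1, "search_docs", {
  query: "Supabase Realtime presence",
});
const wireMessage = JSON.stringify(toolRequest);
```

You rarely write these messages by hand, since the IDE and the server libraries handle them, but seeing the shape makes it clearer what "access to external tools" means in practice.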

Setting up MCP is relatively straightforward. In Cursor, you can configure it in settings:

Settings > Cursor Settings > MCP Servers > Enable

Then add specific MCP servers based on your needs. Popular options include:

- GitHub repository access

- Documentation retrieval

- Web scraping capabilities

- Advanced code testing

Retrieval Augmented Generation (RAG)

RAG is another powerful technique that enhances AI by retrieving relevant information from external sources before generating responses. In the context of software development, this means the AI can access your codebase, documentation, and external resources to produce more accurate and contextually appropriate code.
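To show the retrieve-then-generate idea in miniature, here is a toy sketch that ranks documents by keyword overlap and prepends the best matches to the prompt. Real systems typically use embeddings and a vector index; the keyword scoring, the `Doc` shape, and the prompt wording are simplifying assumptions.

```typescript
interface Doc {
  id: string;
  text: string;
}

// Lowercases and splits text into alphanumeric tokens.
function tokenize(text: string): string[] {
  return text
    .toLowerCase()
    .split(/[^a-z0-9]+/)
    .filter((token) => token.length > 0);
}

// Removes duplicate tokens while preserving order.
function unique(tokens: string[]): string[] {
  return tokens.filter((token, i) => tokens.indexOf(token) === i);
}

// Score = number of distinct query tokens that appear in the document.
function score(query: string, doc: Doc): number {
  const docTokens = tokenize(doc.text);
  return unique(tokenize(query)).filter(
    (token) => docTokens.indexOf(token) !== -1
  ).length;
}

// Returns up to k documents with a non-zero score, best first.
function retrieve(query: string, docs: Doc[], k: number): Doc[] {
  return docs
    .map((doc) => ({ doc, score: score(query, doc) }))
    .filter((entry) => entry.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, k)
    .map((entry) => entry.doc);
}

// Prepends the retrieved context to the question.
function buildAugmentedPrompt(question: string, docs: Doc[], k: number): string {
  const context = retrieve(question, docs, k)
    .map((doc) => `[${doc.id}]\n${doc.text}`)
    .join("\n\n");
  return `Use the following project context to answer.\n\n${context}\n\nQuestion: ${question}`;
}
```

The generation step is simply sending the augmented prompt to the model; everything that makes RAG work well lives in how relevant the retrieved context is.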

Both MCP and RAG represent the next evolution in AI-assisted development, moving beyond simple prompting to create systems that actively extend the AI's capabilities with external tools and knowledge.

Looking Forward: The Future of Developer-AI Collaboration

As AI capabilities continue to evolve, the relationship between developers and their AI tools will transform. The most valuable skills will increasingly center on:

- Architectural thinking and system design

- Effective communication with both humans and AI

- The ability to orchestrate complex systems rather than writing every line of code

- Judgment about when to use AI and when to code manually

- Creating and managing effective rules and context systems

Forward-thinking developers are already building shared knowledge bases of effective prompts, rules, and strategies. Resources like cursordirectory.com showcase how the community is optimizing AI-developer collaboration across different frameworks and languages.

In the next article of this series, I'll explore how to leverage AI assistance in rebuilding legacy applications—perhaps one of the most promising applications of these technologies.

This is the second article in my series "Navigating the New AI Landscape in Software Development." If you missed the first installment about my productivity experiment with AI tools, you can find it [here].

What prompting techniques have you found effective in your own development work? Share your experiences in the comments.

About the author: I'm a software engineer with 10 years of dedicated engineering experience and nearly 30 years in the IT industry overall. My career has spanned organizations of all sizes—from large corporations like Nortel (a major Cisco competitor in its day) to most recently a startup with just 6 employees. Currently seeking my next opportunity, I'm deeply invested in understanding how AI is reshaping our industry and what it means for experienced engineers like myself.