A Step-by-Step Coding Guide to Integrate Dappier AI’s Real-Time Search and Recommendation Tools with OpenAI’s Chat API

Originally published on MarkTechPost.

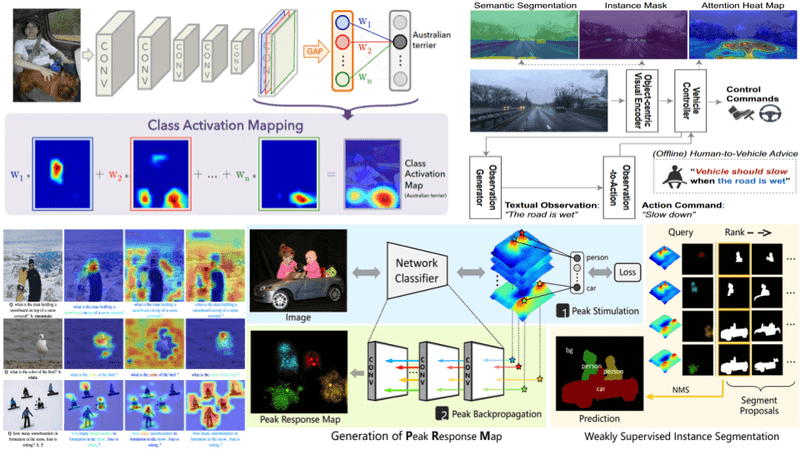

In this tutorial, we will learn how to use Dappier AI, a suite of real-time search and recommendation tools, to enhance our conversational applications. By combining Dappier’s RealTimeSearchTool with its AIRecommendationTool, we can query the latest information from across the web and surface personalized article suggestions from custom data models. We walk step by step through setting up our Google Colab environment, installing dependencies, securely loading API keys, and initializing each Dappier module. We then integrate these tools with an OpenAI chat model (e.g., gpt-3.5-turbo), construct a composable prompt chain, and execute end-to-end queries, all within nine concise notebook cells. Whether we need up-to-the-minute news retrieval or AI-driven content curation, this tutorial provides a flexible framework for building data-driven chat experiences.

    !pip install -qU langchain-dappier langchain langchain-openai langchain-community langchain-core openai

We bootstrap our Colab environment by installing the core LangChain libraries, the Dappier extension, and the community integrations, alongside the official OpenAI client. With these packages in place, we have access to Dappier’s real-time search and recommendation tools, current LangChain releases, and the OpenAI API, all in one environment.
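Before moving on, it can help to confirm that the install actually succeeded. The following sketch is not part of the original notebook: it uses only the standard library's `importlib.metadata` to look up each distribution named in the pip command, and the helper name `installed_versions` is hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names copied from the pip command above.
PACKAGES = [
    "langchain-dappier",
    "langchain",
    "langchain-openai",
    "langchain-community",
    "langchain-core",
    "openai",
]


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if missing."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


for name, ver in installed_versions(PACKAGES).items():
    print(f"{name}: {ver or 'NOT INSTALLED'}")
```

Any line reporting `NOT INSTALLED` suggests the pip cell should be rerun before continuing.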

    import os
    from getpass import getpass

    # getpass hides the typed value, so the keys never appear in notebook output.
    os.environ["DAPPIER_API_KEY"] = getpass("Enter your Dappier API key: ")
    os.environ["OPENAI_API_KEY"] = getpass("Enter your OpenAI API key: ")

We capture our Dappier and OpenAI API credentials at runtime, which avoids hard-coding sensitive keys in the notebook. Because the prompts use getpass, the typed input stays hidden, and storing the keys as environment variables makes them available to all subsequent cells without echoing them in cell output.
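Later cells fail with less obvious errors if either key is missing or empty, so a quick check at this point can save time. The helpers below are a minimal sketch using only the standard library; `require_env` and `mask` are hypothetical names, not part of Dappier or LangChain.

```python
import os


def require_env(*names):
    """Return the named environment variables, raising if any is unset or empty."""
    missing = [name for name in names if not os.environ.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return {name: os.environ[name] for name in names}


def mask(secret, visible=4):
    """Show only the last few characters of a secret, for safe logging."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]


# Demonstration with a made-up value; real keys should never be printed in full.
print(mask("sk-example-1234"))
```

In the notebook, calling `require_env("DAPPIER_API_KEY", "OPENAI_API_KEY")` right after the getpass cell would surface a missing key immediately.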

from langchain_dappier import DappierRealTimeSearchTool

search_tool = DappierRealTimeSearchTool()

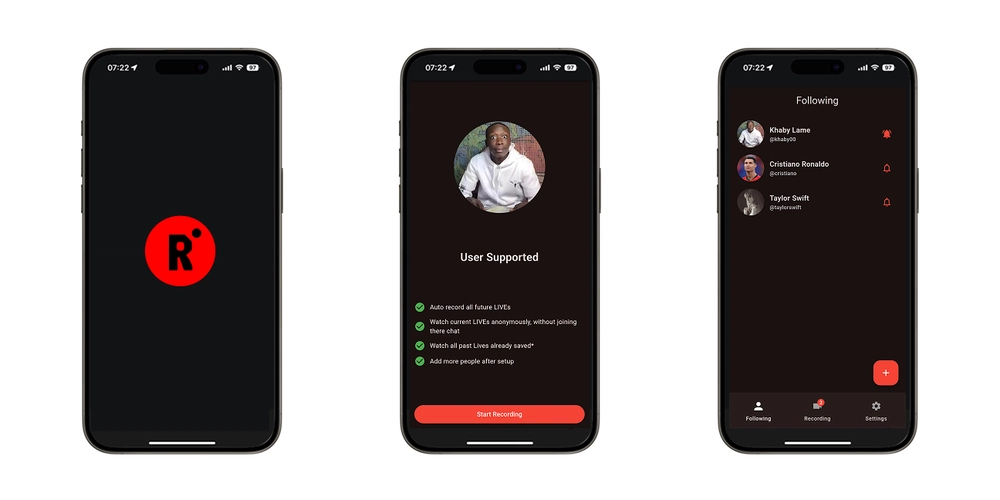

print("Real-time search tool ready:", search_tool)We import Dappier’s real‐time search module and create an instance of the DappierRealTimeSearchTool, enabling our notebook to execute live web queries. The print statement confirms that the tool has been initialized successfully and is ready to handle search requests.

    from langchain_dappier import DappierAIRecommendationTool

    recommendation_tool = DappierAIRecommendationTool(
        data_model_id="dm_01j0pb465keqmatq9k83dthx34",  # custom data model to query
        similarity_top_k=3,               # number of articles to retrieve
        ref="sportsnaut.com",             # reference domain
        num_articles_ref=2,               # articles requested from the reference domain
        search_algorithm="most_recent",
    )
    print("Recommendation tool ready:", recommendation_tool)

We set up Dappier’s AI-powered recommendation engine by specifying our custom data model, the number of similar articles to retrieve, and the source domain for context. The DappierAIRecommendationTool instance will now use the “most_recent” algorithm to pull in the top-k relevant articles (here, three, since similarity_top_k=3), with num_articles_ref=2 asking for two of them to come from our specified reference domain, ready for query-driven content suggestions.
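Because several of these parameters interact, it can be useful to keep them in one validated object rather than scattering literals across cells. The sketch below is hypothetical and uses only the standard library. The list of algorithm names reflects my reading of the Dappier documentation and should be verified, and the rule that `num_articles_ref` cannot exceed `similarity_top_k` is an assumption, not a documented constraint.

```python
from dataclasses import asdict, dataclass
from typing import Optional

# Assumed set of algorithm names; check the Dappier docs before relying on it.
KNOWN_ALGORITHMS = {"most_recent", "semantic", "most_recent_semantic", "trending"}


@dataclass(frozen=True)
class RecommendationConfig:
    """Hypothetical container for the recommendation tool's keyword arguments."""

    data_model_id: str
    similarity_top_k: int = 3
    ref: Optional[str] = None
    num_articles_ref: int = 0
    search_algorithm: str = "most_recent"

    def __post_init__(self):
        if self.similarity_top_k < 1:
            raise ValueError("similarity_top_k must be at least 1")
        # Assumption: articles from `ref` count toward the top-k total.
        if not 0 <= self.num_articles_ref <= self.similarity_top_k:
            raise ValueError("num_articles_ref must be between 0 and similarity_top_k")
        if self.search_algorithm not in KNOWN_ALGORITHMS:
            raise ValueError(f"unknown search_algorithm: {self.search_algorithm!r}")


config = RecommendationConfig(
    data_model_id="dm_01j0pb465keqmatq9k83dthx34",
    similarity_top_k=3,
    ref="sportsnaut.com",
    num_articles_ref=2,
)
kwargs = asdict(config)  # plain dict, ready to unpack as keyword arguments
print(kwargs)
```

The resulting dictionary could then be unpacked as `DappierAIRecommendationTool(**kwargs)`, which keeps the values used in this tutorial in a single place.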

    from langchain.chat_models import init_chat_model

    llm = init_chat_model(
        model="gpt-3.5-turbo",
        model_provider="openai",
        temperature=0,  # minimize sampling randomness
    )

    # Expose the search tool's schema so the model can request searches.
    llm_with_tools = llm.bind_tools([search_tool])
    print("llm_with_tools ready")

We create an OpenAI chat model instance using gpt-3.5-turbo with a temperature of 0 to keep responses as consistent as possible, and then bind the previously initialized search tool so that the LLM can request real-time searches. Note that only the search tool is bound here; the recommendation tool is not. The final print statement confirms that our LLM is ready to call Dappier’s search tool within our conversational flows.

    import datetime
    from langchain_core.prompts import ChatPromptTemplate

    today = datetime.datetime.today().strftime("%Y-%m-%d")

    prompt = ChatPromptTemplate([
        ("system", f"You are a helpful assistant. Today is {today}."),
        ("human", "{user_input}"),
        ("placeholder", "{messages}"),  # optional slot for prior AI and tool messages
    ])

    llm_chain = prompt | llm_with_tools
    print("llm_chain built")

We construct the conversational “chain” by first building a ChatPromptTemplate that injects the current date into a system prompt and defines slots for user input and prior messages. By piping the template (|) into our llm_with_tools, we create an llm_chain that formats the prompt, invokes the LLM (with real-time search capability), and returns its response. The final print confirms the chain is ready to drive end-to-end interactions.
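One detail worth noting: because the system message is an f-string, the date is fixed when the cell runs, not when the chain is invoked. In a long-lived session, a small helper can rebuild the message list on demand. The sketch below uses plain tuples in the same (role, template) shape passed to ChatPromptTemplate; the function name `build_messages` is hypothetical.

```python
import datetime


def build_messages(today=None):
    """Return (role, template) pairs with the date computed at call time."""
    today = today or datetime.date.today()
    return [
        ("system", f"You are a helpful assistant. Today is {today.isoformat()}."),
        ("human", "{user_input}"),
        ("placeholder", "{messages}"),
    ]


# isoformat() yields the same YYYY-MM-DD shape as strftime("%Y-%m-%d").
print(build_messages(datetime.date(2025, 1, 31))[0][1])
# → You are a helpful assistant. Today is 2025-01-31.
```

Passing the result to the template constructor, as in `ChatPromptTemplate(build_messages())`, would then pick up the date at the moment the prompt is rebuilt.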

    from langchain_core.runnables import RunnableConfig, chain

    @chain
    def tool_chain(user_input: str, config: RunnableConfig):
        # First pass: the model either answers directly or requests tool calls.
        ai_msg = llm_chain.invoke({"user_input": user_input}, config=config)
        # Execute every requested search call.
        tool_msgs = search_tool.batch(ai_msg.tool_calls, config=config)
        # Second pass: give the model its own request plus the tool outputs.
        return llm_chain.invoke(
            {"user_input": user_input, "messages": [ai_msg, *tool_msgs]},
            config=config,
        )

    print("tool_chain defined")

We define an end-to-end tool_chain that first sends our prompt to the LLM (capturing any requested tool calls), then executes those calls via search_tool.batch, and finally feeds both the AI’s initial message and the tool outputs back into the LLM for a cohesive response. The @chain decorator transforms this into a single, runnable pipeline, allowing us to simply call tool_chain.invoke(…) to handle both thinking and searching in a single step.
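The chain above sends every tool call to search_tool, which works while only one tool is bound. If the recommendation tool were bound as well, each call would need routing by name. The following is a minimal sketch of that routing using stand-in objects rather than the real Dappier tools. It assumes each tool call is a dict with `"name"` and `"args"` keys (the shape LangChain uses for an AI message's `tool_calls`) and that each tool exposes `.name` and `.invoke`; `EchoTool` and `dispatch_tool_calls` are hypothetical.

```python
class EchoTool:
    """Stand-in for a real tool: stores a name and echoes the arguments it gets."""

    def __init__(self, name):
        self.name = name

    def invoke(self, args):
        return f"{self.name} called with {args}"


def dispatch_tool_calls(tool_calls, tools):
    """Run each tool call against the tool whose name matches."""
    by_name = {tool.name: tool for tool in tools}
    results = []
    for call in tool_calls:
        tool = by_name.get(call["name"])
        if tool is None:
            raise KeyError(f"No tool registered under {call['name']!r}")
        results.append(tool.invoke(call["args"]))
    return results


# Made-up tool calls in the dict shape described above.
calls = [
    {"name": "search", "args": {"query": "latest scores"}, "id": "call_1"},
    {"name": "recommend", "args": {"query": "sports news"}, "id": "call_2"},
]
tools = [EchoTool("search"), EchoTool("recommend")]
for line in dispatch_tool_calls(calls, tools):
    print(line)
```

With real LangChain tools, passing the whole tool-call dict to `invoke` (instead of only `call["args"]`) should return a tool message tied to the call's id, which is the form the model expects on the second pass.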

    res = search_tool.invoke({"query": "What happened at the last Wrestlemania"})