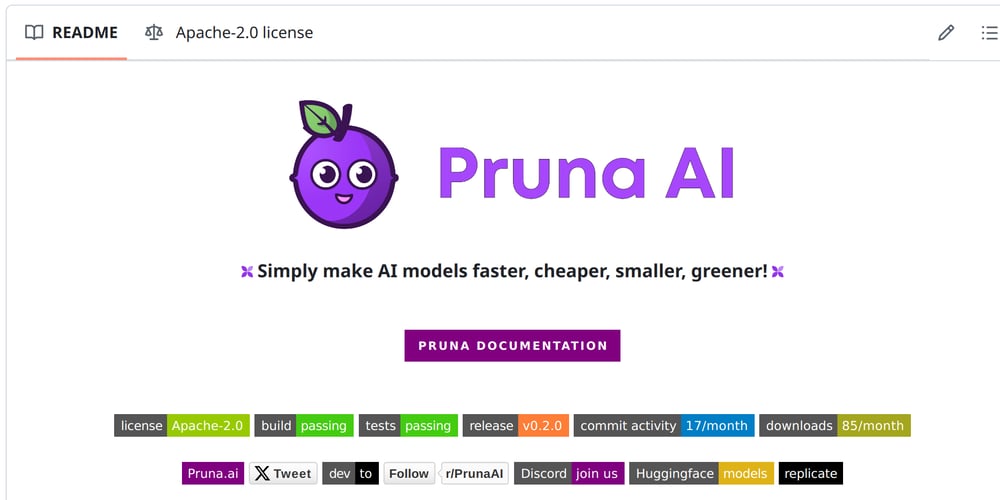

The AI efficiency framework from Pruna AI is now open-source

I am Bertrand from Pruna AI. Together with John, Rayan, and Stephan, I founded Pruna AI to tackle challenges in AI model optimization. We're a group of researchers in AI efficiency and reliability, originally from TUM.

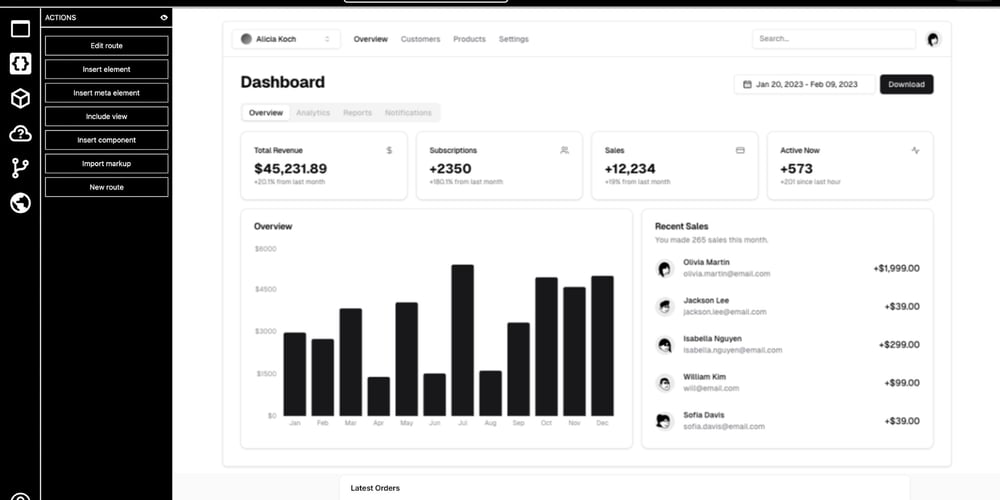

Because we were asked so often how compression of AI models works under the hood, we decided to open-source the pruna package with the help of the whole Pruna AI team. The pruna package is an AI efficiency framework that can be installed with pip install pruna. It compresses models, which saves memory and compute power when running them for inference.
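To give a rough feel for why compression saves memory, here is a minimal sketch of one generic technique, symmetric int8 quantization, written with the Python standard library only. It is an illustration and not how the pruna package implements compression; the function names `quantize_int8` and `dequantize_int8` and the toy weight values are made up for this example.

```python
from array import array


def quantize_int8(weights):
    """Map float weights to int8 values plus one shared float scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Guard against an all-zero input so we never divide by zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = array("b", (round(w / scale) for w in weights))
    return quantized, scale


def dequantize_int8(quantized, scale):
    """Recover approximate float weights from the int8 values."""
    return [q * scale for q in quantized]


# Toy "model weights" stored as 32-bit floats (hypothetical values).
weights = array("f", [0.5, -1.0, 0.25, 0.75, -0.125, 1.0, 0.0, -0.6])
quantized, scale = quantize_int8(weights)
restored = dequantize_int8(quantized, scale)

original_bytes = weights.itemsize * len(weights)        # 4 bytes per value
compressed_bytes = quantized.itemsize * len(quantized)  # 1 byte per value
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(f"{original_bytes} bytes -> {compressed_bytes} bytes")
print(f"largest rounding error: {max_error:.5f}")
```

The stored weights shrink by a factor of four, at the cost of a small rounding error bounded by half the scale. Real compression methods are considerably more sophisticated, but the trade-off between size and fidelity is the same in spirit.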

Now that the package is open source, anyone can inspect the code and contribute to it. Beyond the code, we provide a detailed README, tutorials, benchmarks, and documentation (https://docs.pruna.ai/en/stable/index.html) to make the compression, evaluation, and saving/loading/serving of AI models transparent.

Beyond the open-source package, we offer pruna_pro commercially, with advanced compression methods, recovery methods, and an optimization agent to unlock greater efficiency and productivity gains.

We are pleased to share this with you all. We would be glad to hear your thoughts and questions in the comments :)