Model Performance Begins with Data: Researchers from Ai2 Release DataDecide—A Benchmark Suite to Understand Pretraining Data Impact Across 30K LLM Checkpoints

The Challenge of Data Selection in LLM Pretraining

Developing large language models entails substantial computational investment, especially when experimenting with alternative pretraining corpora. Comparing datasets at full scale, on the order of billions of parameters and hundreds of billions of tokens, can consume hundreds of thousands of GPU hours per run. Consequently, practitioners resort to smaller-scale experiments as proxies for large-model behavior. Yet these “pilot” studies are rarely published, producing a fragmented landscape in which each laboratory repeats similar small-scale tests without shared benchmarks or methodologies. This opacity impedes reproducibility, leaves collective insights underused, and obscures the true trade-offs between development compute and final model performance.

DataDecide

To address these limitations, the Allen Institute for AI (AI2), in collaboration with the University of Washington and the University of Pennsylvania, today releases DataDecide, a comprehensive suite of controlled pretraining experiments spanning 25 distinct corpora and 14 model sizes from 4 million to 1 billion parameters. DataDecide’s datasets include well-known sources such as Dolma, DCLM, RefinedWeb, C4, and FineWeb, alongside variations produced by domain ablation, deduplication, quality filtering, and source mixing. Each model is trained at a fixed token-to-parameter ratio of 100 (100 tokens per parameter), about five times the roughly 20 tokens per parameter of the compute-optimal “Chinchilla” setting. This “overtraining” regime spends extra training compute to get a stronger model at a given size, which favors inference efficiency. In total, 1,050 models and more than 30,000 checkpoints, each evaluated across ten downstream tasks, are released to the public.
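As a rough illustration of what the fixed ratio implies, the sketch below computes the token budget for three of the model sizes named in this article. It assumes the nominal sizes (4M, 150M, 1B) are exact parameter counts, which is a simplification; `training_tokens` is a hypothetical helper, not part of the DataDecide release.

```python
# Token budget implied by a fixed token-to-parameter ratio of 100.
# Assumption: nominal model sizes are treated as exact parameter counts.
TOKENS_PER_PARAM = 100


def training_tokens(num_params: int) -> int:
    """Return the number of training tokens for a model of the given size."""
    return TOKENS_PER_PARAM * num_params


for label, params in [("4M", 4_000_000), ("150M", 150_000_000), ("1B", 1_000_000_000)]:
    print(f"{label}: {training_tokens(params) / 1e9:g}B tokens")
```

Under these assumptions the smallest model sees about 0.4 billion tokens and the 1B target about 100 billion, so the smallest run trains on 250 times fewer tokens than the target.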

Technical Structure and Pragmatic Benefits

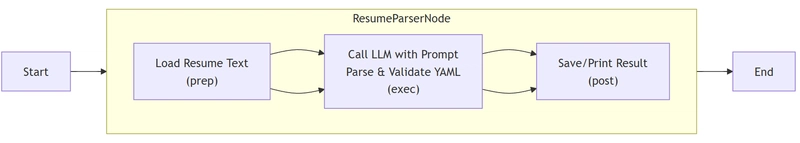

DataDecide orchestrates experiments along three axes:

- Data Recipes: Twenty-five well-documented pretraining corpora, each embodying different curation strategies (see Table 1 in the paper for full recipe specifications).

- Model Scale: Fourteen parameter configurations (4 M–1 B), programmatically derived via the OLMo model ladder to ensure consistent training hyperparameters across scales. Each non‑target scale includes two “early‑stop” seed runs, while the 1 B‑parameter models feature three complete seed reruns to quantify variability.

- Evaluation Suite: The OLMES benchmark of ten multiple-choice tasks (e.g., MMLU, ARC Easy/Challenge, HellaSwag, SocialIQA) provides a multifaceted view of language understanding and commonsense reasoning. The code generation benchmarks MBPP and HumanEval, which are not multiple choice, figure in the analysis of proxy metrics.
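The size of the experiment grid can be checked with a few lines of arithmetic. The sketch below assumes three seeds per (recipe, size) pair, which is one reading of the seed description above and is consistent with the reported total of 1,050 models; the constant names are illustrative only.

```python
from itertools import product

NUM_RECIPES = 25  # data recipes (from the article)
NUM_SIZES = 14    # model sizes, 4M to 1B (from the article)
NUM_SEEDS = 3     # assumption: three seeds per recipe/size pair

# Enumerate every (recipe, size, seed) combination in the grid.
runs = list(product(range(NUM_RECIPES), range(NUM_SIZES), range(NUM_SEEDS)))
print(len(runs))  # 1050
```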

By releasing both pretraining datasets and corresponding models, DataDecide enables researchers to:

- Reuse checkpoints for new evaluations without retraining.

- Experiment with novel prediction methods (e.g., advanced scaling‑law fits, smoothing techniques).

- Investigate benchmark sensitivity to training data and model scale.

Key Findings and Quantitative Insights

DataDecide’s systematic analysis yields four practical guidelines:

- Single-Scale Baseline Robustness: Ranking corpora by downstream accuracy at a single, small scale (e.g., 150 M parameters) achieves ~80 percent decision accuracy, meaning that about four out of five pairwise dataset comparisons come out the same way as at the 1 B-parameter target scale. None of the eight baseline scaling-law extrapolations surpasses this simple heuristic, underscoring its cost-effectiveness.

- Task-Dependent Compute Sensitivity: The compute budget required for reliable decisions varies markedly by task. Benchmarks like MMLU and ARC Easy become predictable with less than 0.01 percent of the target compute, whereas HellaSwag and SocialIQA demand orders of magnitude more FLOPs to achieve similar decision accuracy.

- Proxy Metric Selection: Continuous likelihood metrics, specifically the character-normalized average probability of correct continuations (CORRECT PROB) and total probability (TOTAL PROB), outperform discrete accuracy measures at small scales. This is most pronounced on code tasks (MBPP, HumanEval), where decision accuracy jumps from near-random to over 80 percent with CORRECT PROB as the proxy.

- Variance and Spread Considerations: High decision accuracy correlates with low run‑to‑run variance (noise) and ample performance spread across datasets. Proxy metrics that reduce noise or amplify spread thus directly enhance prediction reliability.
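To make two of these quantities concrete, here is a minimal sketch. It assumes decision accuracy is the fraction of dataset pairs whose ordering at the small scale matches their ordering at the target scale, and that character normalization means dividing a continuation's summed log-probability by its character count before exponentiating. Both are this article's reading rather than the paper's exact formulas, and the function names and scores are hypothetical.

```python
import math
from itertools import combinations


def decision_accuracy(small_scores: dict, target_scores: dict) -> float:
    """Fraction of dataset pairs ranked the same way at both scales.

    Ties at either scale are counted as disagreements (a simplifying choice).
    """
    agree = total = 0
    for a, b in combinations(sorted(small_scores), 2):
        small_diff = small_scores[a] - small_scores[b]
        target_diff = target_scores[a] - target_scores[b]
        total += 1
        if small_diff * target_diff > 0:  # same sign means same winner
            agree += 1
    return agree / total


def correct_prob_per_char(logprob_sum: float, continuation: str) -> float:
    """Assumed normalization: exp(summed log-prob / number of characters)."""
    return math.exp(logprob_sum / len(continuation))


# Hypothetical scores for four data recipes (not real DataDecide results).
small = {"A": 0.31, "B": 0.29, "C": 0.35, "D": 0.30}
target = {"A": 0.52, "B": 0.55, "C": 0.61, "D": 0.50}

print(round(decision_accuracy(small, target), 3))  # 4 of 6 pairs agree: 0.667
print(correct_prob_per_char(-6.0, "Paris"))        # exp(-6 / 5)
```

Swapping the values in `small` from accuracy to a continuous proxy such as the per-character correct probability is how one would compare proxy metrics under this definition: the proxy that yields the higher decision accuracy against the same `target` scores is the better predictor.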

Concluding Perspective

DataDecide transforms pretraining data selection from an ad hoc art into a transparent, data‐driven science. By open‑sourcing all 25 corpora, 1,050 models, 30,000+ checkpoints, and evaluation scripts on Hugging Face and GitHub, AI2 invites the community to reproduce findings, extend evaluations to new benchmarks, and innovate on decision‑making methods. As LLM development continues to demand ever‑greater compute resources, DataDecide offers a principled framework for minimizing wasted experiments and maximizing insight—paving the way toward more efficient, reproducible, and collaborative AI research.
