Model Context Protocol

The Model Context Protocol (MCP) is an open standard designed to connect AI assistants and Large Language Models (LLMs) with external systems containing valuable data and functionality. Released in late 2024, MCP provides a universal way for AI assistants to securely connect and interact with external data sources, APIs, business software, and development tools.

Even advanced LLMs often operate in isolation, limited to the data they were trained on and cut off from live information and from the ability to act within external systems. Connecting AI to these services traditionally meant building a custom, difficult-to-scale integration for each data source. MCP addresses this integration challenge with a universal, open protocol for secure, two-way communication, replacing fragmented, custom integrations with a single, standardized way for AI applications to interact with external data and tools.

Why is the Model Context Protocol (MCP) Important?

MCP provides significant advantages for developers building capable AI agents and complex, automated workflows using LLMs. Its value compared to other methods includes:

Faster Tool Integration: MCP enables developers to add new capabilities to AI applications rapidly. Instead of writing custom code for each integration, if an MCP server exists for a service (like a database or API), any MCP-compatible AI app can connect and use its functions immediately. This plug-and-play integration accelerates automation, allowing AI agents to fetch documents, query databases, or call APIs simply by connecting to the appropriate server.

Enhanced AI Autonomy: MCP supports more autonomous AI agent behavior. It allows agents to actively retrieve necessary information or execute actions as part of multi-step workflows. Agents are no longer limited to their internal knowledge; they can interact directly with CRMs, email platforms, databases, and other tools in a coordinated sequence, bringing us closer to truly autonomous task completion.

Reduced Integration Complexity: As a universal interface, MCP significantly lowers the effort needed to integrate new APIs or data sources. An application supporting MCP connects to numerous services via one consistent mechanism. This frees up development teams to focus on core application logic rather than repetitive integration coding, simplifying both initial setup and ongoing maintenance.

Improved Consistency and Interoperability: MCP uses a standardized request/response format based on JSON-RPC messages. This uniformity simplifies debugging, makes scaling easier, and helps keep integrations compatible as the ecosystem evolves. The interface to external tools remains consistent even if the underlying LLM provider changes, protecting integration investments.

Rich, Two-Way Contextual Interaction: MCP facilitates ongoing dialogue and context sharing between the AI model and the external server. Beyond simple function calls (Tools), servers can provide Resources (contextual data) and Prompts (structured templates). This allows for richer interactions, such as feeding reference data to the model or guiding it through complex, predefined workflows.

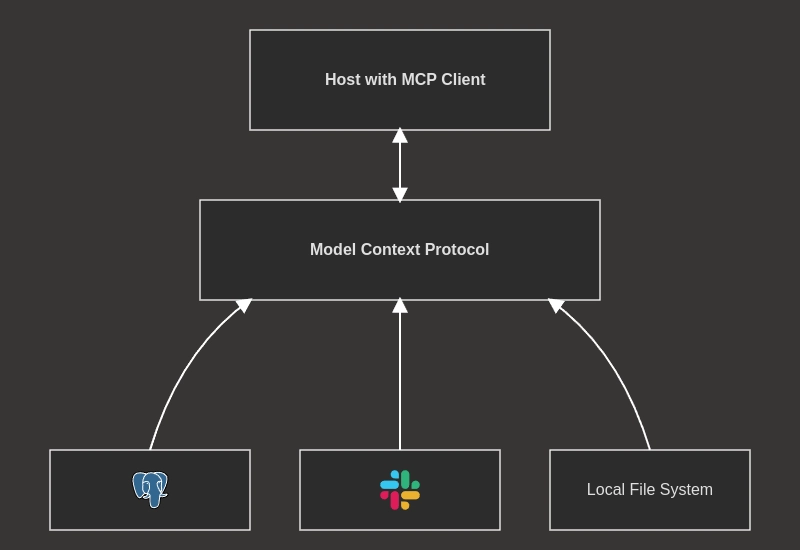

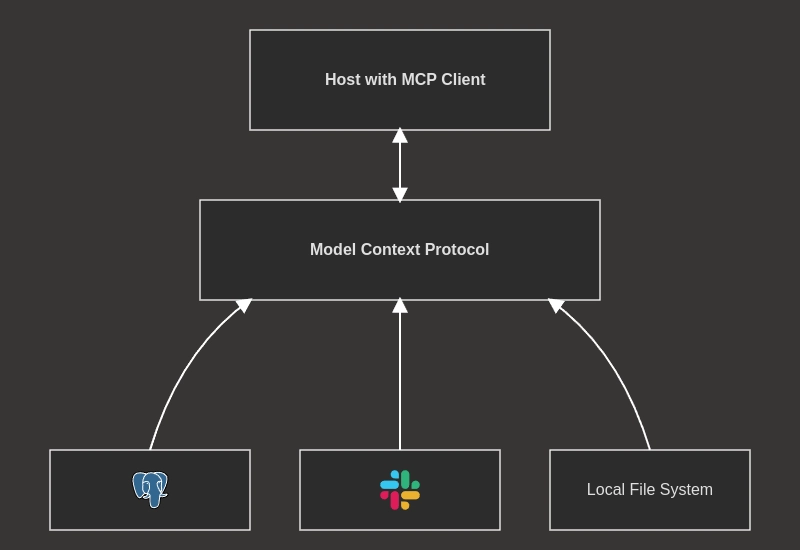

Understanding the Core MCP Architecture

MCP operates on a flexible client-server model designed for extensibility:

- Hosts: These are the primary LLM applications (e.g., AI assistants like Claude Desktop, IDE plugins) that initiate MCP connections.

- Clients: Protocol clients run within the host application, managing individual connections to MCP servers. These clients are embedded directly into chatbots, coding assistants, or automation agents.

- Servers: These are lightweight programs exposing specific capabilities (Resources, Tools, Prompts) via the MCP standard. Servers act as secure bridges between the AI application (via the client) and external systems.

- Local Data Sources: Data residing on the user's machine (files, local databases, services) that MCP servers can securely access based on permissions.

- Remote Services: External systems accessible over networks (e.g., web APIs, cloud databases) that MCP servers connect to.

In this model, the AI-powered application (Host) contains an MCP Client. Each external service or data source is accessed via a dedicated MCP Server. The server advertises its capabilities, and the client connects to utilize them. The AI model itself doesn't directly interact with external APIs; communication flows through the standardized MCP client-server handshake, ensuring a structured and secure exchange.
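The relationship between host, clients, and servers can be sketched in plain Python. This is an in-memory illustration only: the class names (`Host`, `Client`, `Server`) and the `weather` and `notes` servers are hypothetical, and no real MCP SDK or transport is involved.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    """Hypothetical stand-in for an MCP server exposing named tools."""
    name: str
    tools: dict = field(default_factory=dict)  # tool name -> callable

    def advertise(self) -> list:
        # The server tells the client which tools it offers.
        return sorted(self.tools)

    def call(self, tool: str, **arguments):
        return self.tools[tool](**arguments)


@dataclass
class Client:
    """One client manages exactly one server connection."""
    server: Server

    def list_tools(self) -> list:
        return self.server.advertise()

    def call_tool(self, tool: str, **arguments):
        return self.server.call(tool, **arguments)


@dataclass
class Host:
    """The AI application: holds one client per connected server."""
    clients: dict = field(default_factory=dict)  # server name -> Client

    def connect(self, server: Server) -> None:
        self.clients[server.name] = Client(server)

    def available_tools(self) -> dict:
        return {name: c.list_tools() for name, c in self.clients.items()}


host = Host()
host.connect(Server("weather", {"forecast": lambda city: f"Sunny in {city}"}))
host.connect(Server("notes", {"count": lambda text: len(text.split())}))

print(host.available_tools())
print(host.clients["weather"].call_tool("forecast", city="Oslo"))
```

The point of the sketch is the shape: the host never calls an external API itself; it always goes through the client bound to the relevant server.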

Key MCP Concepts Explained

MCP defines several fundamental concepts that govern client-server interactions and data exchange:

- Resources: These represent file-like data or content exposed by servers. Clients and LLMs can read resources to gain context for their tasks. Resource access is application-controlled, meaning the host application determines how resource data is used. Resources are identified by unique URIs (e.g., `file:///path/to/file`, `postgres://db/table`, `screen://active-window`) and can contain text or binary data. Servers can also notify clients about changes to resources.

- Tools: These are executable functions exposed by servers. Tools allow LLMs to interact with external systems, perform calculations, or trigger actions. Tool usage is typically model-controlled, designed for the AI model to invoke automatically (often requiring user confirmation for sensitive actions). Each tool has a name, a description (explaining its purpose to the LLM), and an input schema defined using JSON Schema. The server implements the logic for executing the tool when requested. Tool definitions can include annotations like `readOnlyHint` or `destructiveHint` to inform the model about potential side effects.

- Prompts: Pre-defined templates or instructions provided by servers. Prompts help guide users or LLMs in accomplishing specific tasks more effectively.

- Roots: URIs suggested by clients to servers, indicating the relevant operational boundaries or locations for resources. While often filesystem paths, roots can be any valid URI (e.g., HTTP URLs). Servers should generally operate within the scope defined by these roots.

- Transports: The underlying communication mechanisms defining how MCP messages are exchanged between clients and servers. MCP uses JSON-RPC 2.0 as its standard message format.
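To make the Tools concept concrete, here is a minimal sketch of what a tool definition might look like as a Python dictionary, with a name, a description, a JSON Schema for its input, and annotations. The `delete_note` tool and both helper functions are hypothetical, and the confirmation policy is an assumption about what a host might choose to do, not something the protocol mandates.

```python
# A hypothetical tool definition, shaped as a server might list it.
delete_note_tool = {
    "name": "delete_note",
    "description": "Delete a note by its identifier.",
    "inputSchema": {
        "type": "object",
        "properties": {"note_id": {"type": "string"}},
        "required": ["note_id"],
    },
    "annotations": {"readOnlyHint": False, "destructiveHint": True},
}


def missing_arguments(tool: dict, arguments: dict) -> list:
    """Return required argument names absent from `arguments`.

    This checks only the schema's `required` list; it is not a full
    JSON Schema validator.
    """
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


def needs_confirmation(tool: dict) -> bool:
    """Host-side policy (an assumption): confirm anything not marked read-only."""
    hints = tool.get("annotations", {})
    return not hints.get("readOnlyHint", False)


print(missing_arguments(delete_note_tool, {}))
print(needs_confirmation(delete_note_tool))
```

Annotations are hints rather than guarantees, so a cautious host would likely treat a missing `readOnlyHint` the same as `False`, as the sketch does.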

MCP Message Types and Transport Options

Communication within MCP relies on JSON-RPC 2.0 messages, falling into four main categories:

- Requests: Messages sent from one party expecting a response from the other. They include a method name and parameters.

- Results: Messages indicating the successful completion of a request, containing the response data.

- Errors: Messages indicating that a request failed, including an error code and descriptive message.

- Notifications: One-way messages sent from one party to the other that do not require or expect a response.
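The four categories can be told apart by which JSON-RPC 2.0 fields a message carries. The following is a minimal sketch using only the standard library; the helper names (`request`, `result`, `error`, `notification`, `classify`) are illustrative and not part of any MCP SDK.

```python
import json


def request(msg_id, method, params=None):
    """A request carries an id and a method, and expects a response."""
    msg = {"jsonrpc": "2.0", "id": msg_id, "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def result(msg_id, data):
    """A successful response echoes the request id and carries `result`."""
    return {"jsonrpc": "2.0", "id": msg_id, "result": data}


def error(msg_id, code, message):
    """A failed response echoes the request id and carries `error`."""
    return {"jsonrpc": "2.0", "id": msg_id,
            "error": {"code": code, "message": message}}


def notification(method, params=None):
    """A notification has a method but no id, so no response is expected."""
    msg = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def classify(msg: dict) -> str:
    if "method" in msg:
        return "request" if "id" in msg else "notification"
    if "error" in msg:
        return "error"
    if "result" in msg:
        return "result"
    raise ValueError("not a JSON-RPC 2.0 message")


messages = [
    request(1, "tools/list"),
    result(1, {"tools": []}),
    error(2, -32601, "Method not found"),  # standard JSON-RPC error code
    notification("notifications/initialized"),
]
for m in messages:
    print(classify(m), json.dumps(m))
```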

MCP is flexible regarding how these messages are transported:

- Stdio Transport: Uses standard input (stdin) and standard output (stdout) for communication. This is well-suited for local processes where the client and server run on the same machine. Many quickstart guides use stdio.

- SSE Transport: Uses HTTP POST requests for client-to-server messages and Server-Sent Events (SSE) for server-to-client messages (like notifications). This transport is suitable for remote connections over networks.

Developers can also implement custom transport layers as long as they adhere to the defined Transport interface.
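As a rough illustration of the stdio style of transport, the sketch below writes each JSON-RPC message as one line of JSON and reads messages back line by line. It assumes newline-delimited framing, and it uses an in-memory `io.StringIO` as a stand-in for the real stdin/stdout pipes; the function names are hypothetical.

```python
import io
import json


def write_message(stream, message: dict) -> None:
    # json.dumps escapes newlines inside strings, so each message
    # occupies exactly one line on the stream.
    stream.write(json.dumps(message) + "\n")
    stream.flush()


def read_messages(stream):
    # Yield one parsed message per non-empty line.
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


pipe = io.StringIO()  # stand-in for a process pipe
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {"jsonrpc": "2.0", "id": 1,
                     "result": {"note": "two\nlines"}})

pipe.seek(0)
received = list(read_messages(pipe))
print(len(received))
```

In a real stdio server the same idea would apply to `sys.stdin` and `sys.stdout`, which is why diagnostic output usually has to go somewhere other than stdout.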

Implementation Resources: SDKs and Examples

To simplify development, the MCP project provides Software Development Kits (SDKs) for popular programming languages.

Quickstart guides and examples demonstrate how to build both MCP client applications (connecting to servers, listing tools, sending requests) and MCP server applications (initializing the server, defining handlers for `list_tools`, `call_tool`, etc.).
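To show what the list-tools and call-tool handlers boil down to, here is a minimal sketch that dispatches raw JSON-RPC messages without any SDK. It assumes the wire-level method names `tools/list` and `tools/call` and a text-content result shape; the `add` tool, the `TOOLS` registry, and the `handle` function are hypothetical. An official SDK would normally handle this plumbing for you.

```python
# Hypothetical tool registry: name -> metadata plus a Python handler.
TOOLS = {
    "add": {
        "description": "Add two numbers.",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
            "required": ["a", "b"],
        },
        "handler": lambda args: args["a"] + args["b"],
    }
}


def _error(msg_id, code, message):
    return {"jsonrpc": "2.0", "id": msg_id,
            "error": {"code": code, "message": message}}


def handle(message: dict) -> dict:
    """Dispatch one JSON-RPC request to the matching handler."""
    msg_id = message.get("id")
    method = message.get("method")

    if method == "tools/list":
        tools = [
            {"name": name, "description": t["description"],
             "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        return {"jsonrpc": "2.0", "id": msg_id, "result": {"tools": tools}}

    if method == "tools/call":
        params = message.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _error(msg_id, -32602, "Unknown tool")
        value = tool["handler"](params.get("arguments", {}))
        return {"jsonrpc": "2.0", "id": msg_id,
                "result": {"content": [{"type": "text", "text": str(value)}],
                           "isError": False}}

    return _error(msg_id, -32601, "Method not found")


reply = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/call",
                "params": {"name": "add", "arguments": {"a": 2, "b": 3}}})
print(reply["result"]["content"][0]["text"])
```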

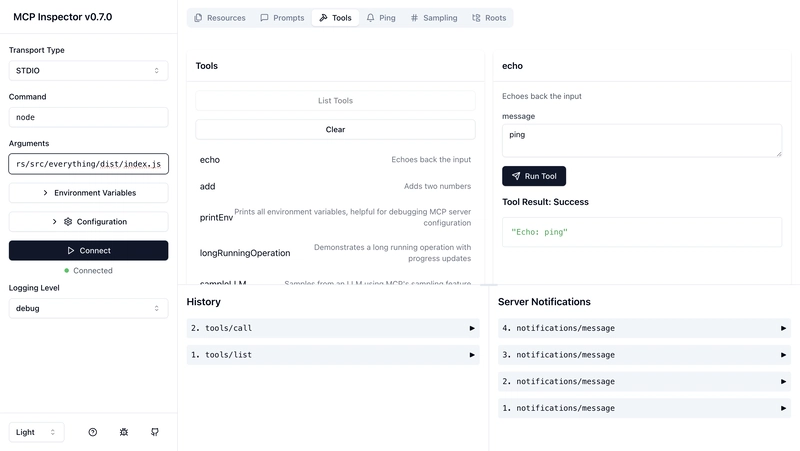

Debugging and Troubleshooting MCP Integrations

Effective debugging is essential when working with MCP. The ecosystem offers resources to help developers diagnose issues:

MCP Inspector: An interactive tool specifically designed for debugging MCP servers. It allows developers to inspect the messages exchanged between clients and servers in detail.

Best Practices: Recommended debugging techniques include comprehensive logging of protocol events, tracing message flows between client and server, implementing health checks for servers, and thoroughly testing different transport mechanisms and error handling scenarios.
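The logging and health-check practices above can be sketched with a small decorator that records every incoming message and outgoing response. The logger name `mcp.trace`, the `traced` decorator, and `ping_handler` are hypothetical names chosen for this example; the handler simply answers a `ping` request with an empty result.

```python
import json
import logging

# basicConfig logs to stderr by default, which keeps stdout free
# for protocol traffic when a stdio transport is in use.
logging.basicConfig(level=logging.DEBUG)
logger = logging.getLogger("mcp.trace")  # hypothetical logger name


def traced(handler):
    """Wrap a message handler so each request/response pair is logged."""
    def wrapper(message: dict) -> dict:
        logger.debug("--> %s", json.dumps(message))
        try:
            response = handler(message)
        except Exception:
            # Record the failing request id with a traceback, then re-raise.
            logger.exception("handler failed for id=%s", message.get("id"))
            raise
        logger.debug("<-- %s", json.dumps(response))
        return response
    return wrapper


@traced
def ping_handler(message: dict) -> dict:
    # Minimal health check: reply to a ping with an empty result.
    return {"jsonrpc": "2.0", "id": message["id"], "result": {}}


pong = ping_handler({"jsonrpc": "2.0", "id": 7, "method": "ping"})
```

Logging both directions with the request id makes it easier to pair a slow or missing response with the request that caused it.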

Secure Authentication with OAuth 2.0

A significant enhancement to MCP is the adoption of OAuth 2.0 as the standard for secure authentication. Initially, MCP lacked a built-in, standardized authentication method, which complicated secure remote connections and often required manual credential management.

Integrating OAuth 2.0 provides a robust and widely accepted framework for securing interactions, especially with remote servers and multi-user setups. Key benefits include:

- Standardized Security: Leverages the mature OAuth 2.0 protocol for authentication and authorization.

- Dynamic Client Registration (DCR): Allows clients to register with authorization servers dynamically.

- Automatic Endpoint Discovery: Simplifies configuration by allowing clients to discover necessary OAuth endpoints automatically.

- Secure Token Management: Ensures secure handling of access tokens, granting clients access only to authorized resources and actions.

This standardized approach greatly improves security and usability compared to earlier methods.
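Endpoint discovery can be illustrated without any network calls. The sketch below assumes the authorization server publishes a metadata document at the RFC 8414 well-known path on the server's origin, and that the document uses the standard `authorization_endpoint`, `token_endpoint`, and `registration_endpoint` fields. The `mcp.example.com` and `auth.example.com` URLs, the sample document, and both function names are made up for illustration.

```python
import json
from urllib.parse import urlsplit, urlunsplit

WELL_KNOWN_PATH = "/.well-known/oauth-authorization-server"


def metadata_url(server_url: str) -> str:
    """Build the metadata URL from the origin of `server_url` (an assumption)."""
    parts = urlsplit(server_url)
    return urlunsplit((parts.scheme, parts.netloc, WELL_KNOWN_PATH, "", ""))


def parse_metadata(document: str) -> dict:
    """Extract the endpoints a client needs from a metadata document."""
    meta = json.loads(document)
    return {
        "authorize": meta["authorization_endpoint"],
        "token": meta["token_endpoint"],
        # Present only when the server supports Dynamic Client Registration.
        "register": meta.get("registration_endpoint"),
    }


# Made-up metadata document, as a server might return it.
sample = json.dumps({
    "issuer": "https://auth.example.com",
    "authorization_endpoint": "https://auth.example.com/authorize",
    "token_endpoint": "https://auth.example.com/token",
    "registration_endpoint": "https://auth.example.com/register",
})

print(metadata_url("https://mcp.example.com/api/v1"))
print(parse_metadata(sample)["token"])
```

A client following this pattern would fetch the metadata URL over HTTPS, register itself if a registration endpoint is advertised, and only then begin the authorization flow.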

How MCP Compares to Other Integration Approaches

MCP offers a unique approach compared to previous methods for connecting LLMs to external systems:

- Custom Integrations: MCP eliminates the need for building brittle, time-consuming, one-off integrations for every external service. It provides a reusable, standardized protocol.

- ChatGPT Plugins: While an early effort in tool use, ChatGPT plugins were proprietary, tied to OpenAI's platform, typically focused on single interactions, and lacked a universal authentication standard. MCP offers an open, model-agnostic standard supporting persistent connections and standardized OAuth 2.0 security.

- LLM Tool Frameworks (e.g., LangChain, LlamaIndex): Frameworks like LangChain provide valuable abstractions for developers building AI agents. However, they often still require custom code for the underlying tool implementation. MCP complements these frameworks by standardizing the protocol between the AI application and the tool provider (server). This allows agents built with frameworks like LangChain (which now includes MCP support) to dynamically discover and interact with any MCP-compliant server, shifting standardization from the agent's code to the communication layer.

MCP provides an open, model-agnostic protocol that standardizes and simplifies both integration and authentication, offering a more scalable and interoperable solution than framework-specific or proprietary alternatives.

Real-World MCP Applications and Community Engagement

MCP enables AI systems to securely access and utilize live data and perform actions in the real world. Early adopters are already building MCP servers for popular tools and platforms:

- Brave Search

- Slack

- GitHub

- Databases (PostgreSQL, Redis)

- File systems

These integrations demonstrate MCP's potential for connecting AI agents to enterprise content, developer tools, communication platforms, and critical business systems. Development tool companies are also incorporating MCP support to enhance their AI-powered features.

Potential applications include:

- AI chatbots accessing real-time external data (e.g., checking order status, querying knowledge bases).

- AI-driven automation workflows involving multiple steps across different tools (e.g., processing customer requests from email to CRM).

- Enhancing AI model capabilities by allowing them to use specialized tools for calculation, data analysis, or code execution.

MCP is developed as a collaborative, open-source project. The community actively encourages contributions and feedback through platforms like GitHub Discussions.