Host LLMs from Your Laptop Using LM Studio and Pinggy

Introduction

In the era of generative AI, software developers and AI enthusiasts are continuously seeking efficient ways to deploy and share AI models without relying on complex cloud infrastructures. LM Studio provides an intuitive platform for running large language models (LLMs) locally, while Pinggy enables secure internet exposure of local endpoints. This guide offers a step-by-step approach to hosting LLMs from your laptop using LM Studio and Pinggy.

Why Host LLMs Locally?

Hosting LLMs on your laptop offers several advantages:

- Cost-Effective: No need for expensive cloud instances.

- Data Privacy: Your data remains on your local machine.

- Faster Prototyping: Low-latency model inference.

- Flexible Access: Share APIs with team members and clients.

LM Studio serves the model on your machine, and Pinggy makes that local server reachable over the internet.

Step 1: Download and Install LM Studio

Visit the LM Studio Website

Go to LM Studio's official website.

Download the installer for your operating system (Windows, macOS, or Linux).

Install LM Studio

Follow the installation prompts.

Launch the application once installation is completed.

Download Your Model

Open LM Studio and navigate to the Discover tab.

Browse available models and download the one you wish to use.

Step 2: Enable the Model API

Open the Developer Tab

In LM Studio, click on the Developer tab.

Locate the Status button in the top-left corner.

Start the API Server

Change the status from Stop to Run.

This launches the model's API server at http://localhost:1234.

Test the API Endpoint

Copy the displayed curl command and test it using Postman or your terminal:

    curl http://localhost:1234/v1/chat/completions \
      -H "Content-Type: application/json" \
      -d '{
        "model": "qwen2-0.5b-instruct",
        "messages": [
          { "role": "system", "content": "Always answer in rhymes." },
          { "role": "user", "content": "What day is it today?" }
        ],
        "temperature": 0.7,
        "max_tokens": -1,
        "stream": false
      }'

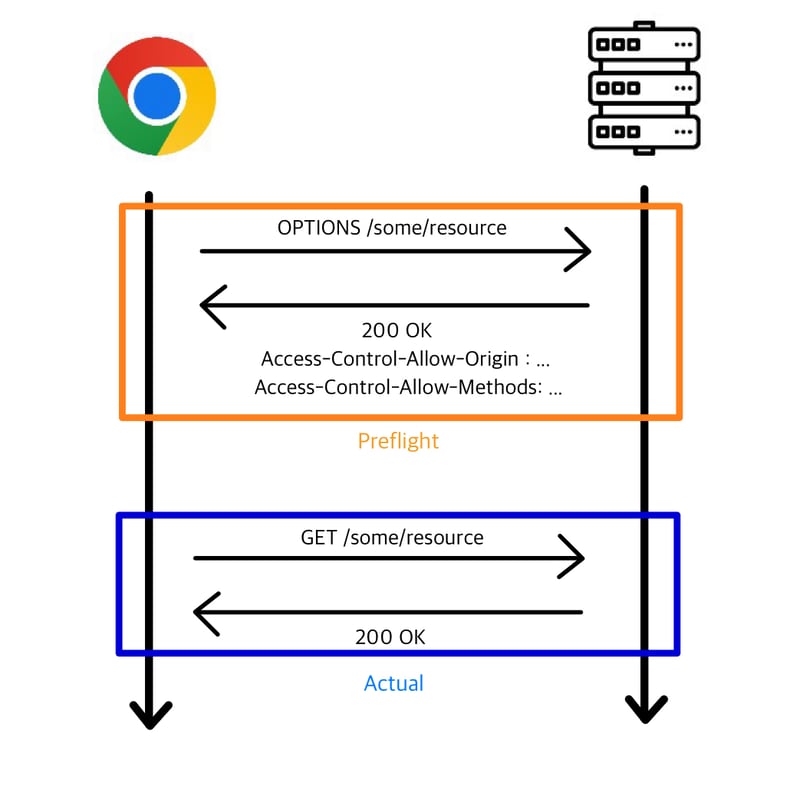
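The test request can also be wrapped in a small helper so that the model name and prompt are not hard-coded. The following is a minimal sketch, assuming a POSIX shell with `curl` and `sed`. The function names `json_escape`, `chat_payload`, and `ask_local` are hypothetical, and the escaping covers only backslashes and double quotes, not newlines or control characters.

```shell
#!/bin/sh
# Hypothetical helpers for calling the LM Studio endpoint from Step 2.

# Escape backslashes and double quotes so the text is safe inside a JSON string.
# (Sketch only: newlines and control characters are not handled.)
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Build a chat-completions request body.
# $1 = model name, $2 = user message.
chat_payload() {
  printf '{"model":"%s","messages":[{"role":"user","content":"%s"}],"temperature":0.7,"stream":false}' \
    "$(json_escape "$1")" "$(json_escape "$2")"
}

# Send the payload to the local server and print the raw JSON response.
ask_local() {
  curl -s http://localhost:1234/v1/chat/completions \
    -H "Content-Type: application/json" \
    -d "$(chat_payload "$1" "$2")"
}
```

With the server running, `ask_local "qwen2-0.5b-instruct" "What day is it today?"` should print the model's JSON reply, provided that model is the one loaded in LM Studio.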

Step 3: Expose Your LM Studio API via Pinggy

Install Pinggy (if not already installed)

Ensure you have an SSH client installed.

Open your terminal and run the following command:

ssh -p 443 -R0:localhost:1234 a.pinggy.io

Enter your Token

If prompted, enter your Pinggy authentication token.

Share the Public URL

Once connected, Pinggy generates a secure public URL, such as:

https://abc123.pinggy.io

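To confirm the tunnel works, send the same test request from Step 2 to the public URL instead of http://localhost:1234. If the model responds, your API is reachable through the tunnel.

Share this URL with collaborators or use it for remote integration.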

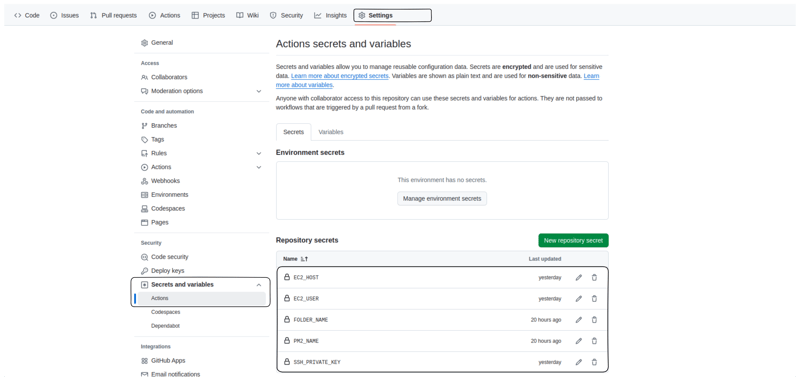
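Once the tunnel is up, the request from Step 2 should work against the public URL as well. The following is a minimal sketch, assuming `curl` is installed. The names `chat_endpoint` and `ask_remote` are hypothetical, and `https://abc123.pinggy.io` is only the placeholder URL used in this guide, so substitute the URL Pinggy actually prints.

```shell
#!/bin/sh
# Hypothetical helpers for calling the model through the Pinggy URL.

# Join a base URL and the chat-completions path, dropping one trailing slash.
chat_endpoint() {
  printf '%s/v1/chat/completions' "${1%/}"
}

# Send a fixed test prompt to the given base URL and print the raw JSON reply.
# $1 = base URL (for example the public URL Pinggy printed), $2 = model name.
ask_remote() {
  curl -s "$(chat_endpoint "$1")" \
    -H "Content-Type: application/json" \
    -d "{\"model\": \"$2\", \"messages\": [{\"role\": \"user\", \"content\": \"What day is it today?\"}]}"
}
```

Calling `ask_remote "https://abc123.pinggy.io" "qwen2-0.5b-instruct"` from another machine is a quick way to check that the tunnel forwards requests to LM Studio.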

Advanced Tips and Best Practices:

- Secure Your API: Add basic authentication to your tunnel:

      ssh -p 443 -R0:localhost:1234 -t a.pinggy.io b:username:password

  This ensures that only authorized users can access your public endpoint.

- Monitor Traffic: Use Pinggy's web debugger to track incoming requests and troubleshoot issues.

- Use Custom Domains: With Pinggy Pro, map your tunnel to a custom domain for branding and credibility.

- Optimize Performance: Ensure your local machine has sufficient resources to handle multiple requests efficiently.
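When the tunnel is protected with basic authentication, clients have to send the credentials with every request. The following is a minimal sketch, assuming that the `b:username:password` option enforces standard HTTP Basic authentication, that `curl` and `base64` are installed, and that LM Studio's server answers `GET /v1/models` as OpenAI-compatible servers usually do. The function names are hypothetical.

```shell
#!/bin/sh
# Hypothetical helpers for calling a tunnel protected with basic authentication.

# Build the HTTP Basic Authorization header for a username/password pair.
basic_auth_header() {
  printf 'Authorization: Basic %s' "$(printf '%s:%s' "$1" "$2" | base64 | tr -d '\n')"
}

# List the models served behind the tunnel, sending the credentials.
# $1 = public base URL, $2 = username, $3 = password.
list_models_authenticated() {
  curl -s "${1%/}/v1/models" -H "$(basic_auth_header "$2" "$3")"
}
```

Passing `-u username:password` to `curl` produces the same header. The explicit version is shown only to make clear what the client sends.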

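Troubleshooting Tips:

- Model Fails to Start: Check system requirements and ensure compatibility. Review LM Studio logs for errors.

- Connection Timeouts: On unstable networks, run the tunnel command in a loop so that it reconnects automatically whenever the connection drops:

      while true; do ssh -p 443 -o StrictHostKeyChecking=no -R0:localhost:1234 a.pinggy.io; sleep 10; done

  The StrictHostKeyChecking=no option skips the host key confirmation prompt so the loop can run unattended, at the cost of not verifying the server's host key.

- Incorrect API Response: Validate curl command syntax. Ensure LM Studio is configured correctly.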
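The reconnect idea can be extended so that repeated failures do not retry at a fixed pace. The following is a minimal sketch of a reconnect loop with capped exponential backoff, assuming a POSIX shell and an OpenSSH client. The function names `next_delay` and `keep_tunnel_alive` and the 5 and 60 second limits are illustrative choices, not Pinggy recommendations.

```shell
#!/bin/sh
# Hypothetical reconnect loop with capped exponential backoff.

# Double the delay (in seconds), but never exceed the cap.
# $1 = current delay, $2 = maximum delay.
next_delay() {
  doubled=$(( $1 * 2 ))
  if [ "$doubled" -gt "$2" ]; then
    echo "$2"
  else
    echo "$doubled"
  fi
}

# Keep the tunnel from this guide running, waiting longer after each drop.
# ServerAliveInterval makes ssh notice a dead connection sooner.
# Sketch only: the delay is never reset after a long, healthy session.
keep_tunnel_alive() {
  delay=5
  while true; do
    ssh -p 443 -o ServerAliveInterval=30 -R0:localhost:1234 a.pinggy.io
    echo "Tunnel closed; reconnecting in ${delay}s" >&2
    sleep "$delay"
    delay=$(next_delay "$delay" 60)
  done
}
```

Running `keep_tunnel_alive` in a terminal keeps retrying until it is interrupted with Ctrl+C.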

Conclusion

Combining LM Studio's local LLM serving with Pinggy's secure tunneling lets developers share AI models without cloud infrastructure. This setup supports rapid prototyping, remote collaboration, and integration with other tools, while keeping data and performance under your control.