From Zero to EKS and Hybrid-Nodes — Part 2: The EKS and Hybrid Nodes configuration.


Introduction:

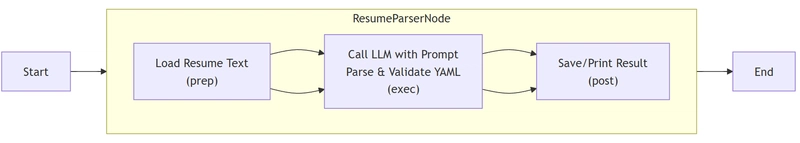

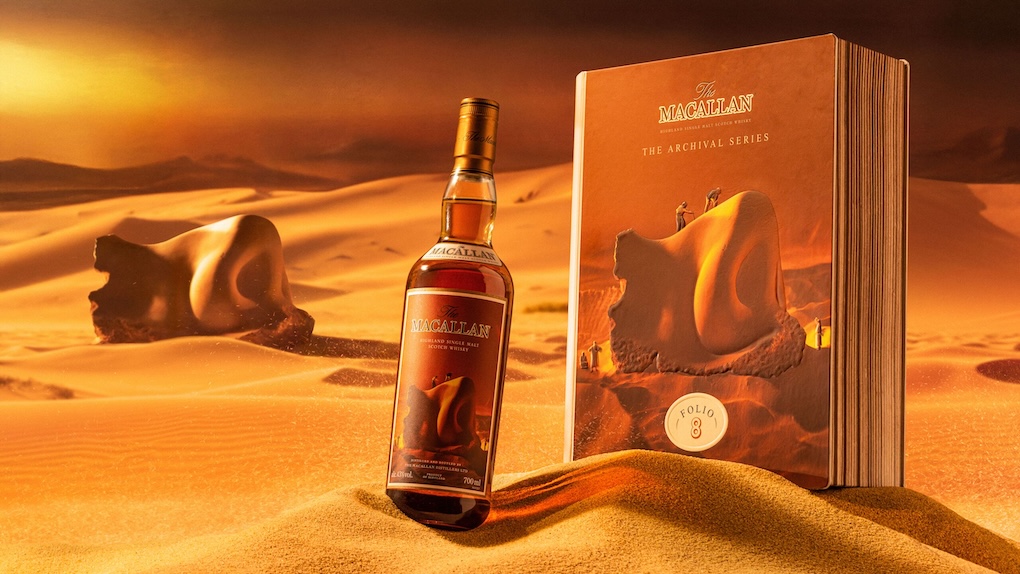

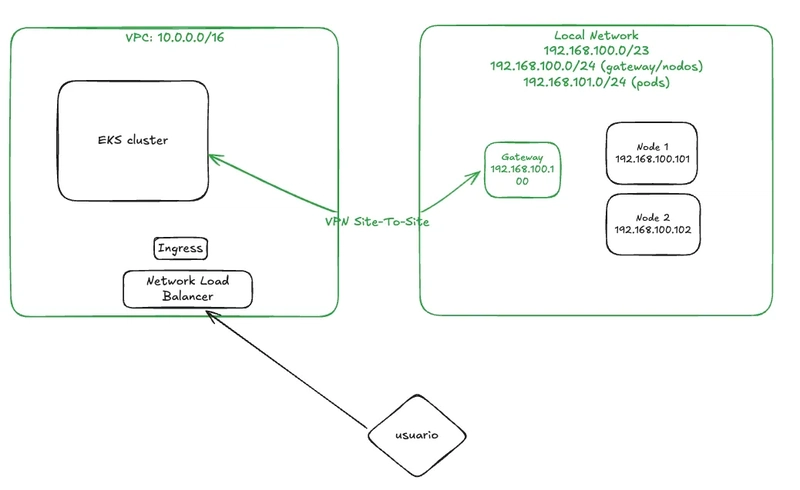

In the previous part, we set up the VPC and the VPN Site-to-Site connection to prepare for our EKS cluster and hybrid nodes. The network diagram at that stage looked like this:

In this second part, we’ll configure the EKS cluster with OpenTofu, including an SSM activation, and then manually set up two hybrid nodes with nodeadm.

Setting Up the EKS Cluster

Cluster creation is straightforward. We read the VPC outputs (VPC ID and subnets) from the Part 1 state file through a `terraform_remote_state` data source, and create the cluster with the terraform-aws-modules/eks/aws module.

Full code is available here: https://github.com/csepulveda/modular-aws-resources/tree/main/EKS-HYBRID

```hcl
################################################################################
# EKS Module
################################################################################

module "eks" {
  source  = "terraform-aws-modules/eks/aws"
  version = "20.35.0"

  cluster_name    = local.name
  cluster_version = var.eks_version

  enable_cluster_creator_admin_permissions = true
  cluster_endpoint_public_access           = true

  cluster_addons = {
    coredns = {
      configuration_values = jsonencode({
        replicaCount = 1
      })
    }
    eks-pod-identity-agent = {}
    kube-proxy             = {}
    vpc-cni                = {}
  }

  # Network outputs read from the VPC stack's state (Part 1)
  vpc_id                   = data.terraform_remote_state.vpc.outputs.vpc_id
  subnet_ids               = data.terraform_remote_state.vpc.outputs.private_subnets
  control_plane_subnet_ids = data.terraform_remote_state.vpc.outputs.control_plane_subnet_ids

  # Small EC2 node group (a single Spot instance) for core services
  eks_managed_node_groups = {
    eks-base = {
      ami_type       = "AL2023_x86_64_STANDARD"
      instance_types = ["t3.small", "t3a.small"]
      min_size       = 1
      max_size       = 1
      desired_size   = 1
      capacity_type  = "SPOT"
      network_interfaces = [{
        delete_on_termination = true
      }]
    }
  }

  # The /23 covers both on-premises networks:
  # 192.168.100.0/24 (nodes) and 192.168.101.0/24 (pods)
  cluster_security_group_additional_rules = {
    hybrid-all = {
      cidr_blocks = ["192.168.100.0/23"]
      description = "Allow all traffic from remote node/pod network"
      from_port   = 0
      to_port     = 0
      protocol    = "all"
      type        = "ingress"
    }
  }

  # On-premises CIDRs of the hybrid nodes and their pods
  cluster_remote_network_config = {
    remote_node_networks = {
      cidrs = ["192.168.100.0/24"]
    }
    remote_pod_networks = {
      cidrs = ["192.168.101.0/24"]
    }
  }

  # Let nodes that use the hybrid node role join the cluster
  access_entries = {
    hybrid-node-role = {
      principal_arn = module.eks_hybrid_node_role.arn
      type          = "HYBRID_LINUX"
    }
  }

  # Tag used by Karpenter to discover the node security group
  node_security_group_tags = merge(local.tags, {
    "karpenter.sh/discovery" = local.name
  })

  tags = local.tags
}
```

```hcl
################################################################################
# Hybrid nodes Support
################################################################################

# IAM role for the hybrid nodes
module "eks_hybrid_node_role" {
  source  = "terraform-aws-modules/eks/aws//modules/hybrid-node-role"
  version = "20.35.0"

  name = "hybrid"
  tags = local.tags
}

# SSM hybrid activation tied to that role; up to 10 machines can register with it
resource "aws_ssm_activation" "this" {
  name               = "hybrid-node"
  iam_role           = module.eks_hybrid_node_role.name
  registration_limit = 10
  tags               = local.tags
}

# nodeadm configuration carrying the activation credentials
resource "local_file" "nodeConfig" {
  content  = <<-EOT
    apiVersion: node.eks.aws/v1alpha1
    kind: NodeConfig
    spec:
      cluster:
        name: ${module.eks.cluster_name}
        region: ${local.region}
      hybrid:
        ssm:
          activationId: ${aws_ssm_activation.this.id}
          activationCode: ${aws_ssm_activation.this.activation_code}
  EOT
  filename = "nodeConfig.yaml"
}
```

Highlights of the configuration:

- One EKS managed node group for core services and the ACK controllers (installed in Part 3).
- The VPC CNI, enabled through cluster_addons. It serves the EC2 node only; the hybrid nodes will use Cilium.
- An ingress rule that allows traffic from the on-premises networks.
- The remote node and pod networks.
- A role for the hybrid nodes, granted cluster access through access_entries.
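The CIDRs in this configuration have to agree with each other: the hybrid-all ingress rule's 192.168.100.0/23 should cover both the remote node network (192.168.100.0/24) and the remote pod network (192.168.101.0/24), and EKS requires the remote networks not to overlap each other or the VPC CIDR. Below is a minimal bash sketch that checks this with integer arithmetic. VPC_CIDR is a hypothetical placeholder (10.0.0.0/16), since the Part 1 VPC CIDR is not repeated here; replace it with yours.

```bash
#!/usr/bin/env bash
# Sketch: sanity-check the CIDRs used in the cluster configuration.
# ASSUMPTION: VPC_CIDR below is a hypothetical placeholder; use your Part 1 VPC CIDR.
set -euo pipefail

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip2int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print the first and last address of a CIDR as integers.
cidr_range() {
  local ip=${1%/*} prefix=${1#*/}
  local size=$(( 1 << (32 - prefix) ))
  local first=$(( $(ip2int "$ip") & ~(size - 1) & 0xFFFFFFFF ))
  echo "$first $(( first + size - 1 ))"
}

# Succeed if CIDR $2 lies entirely inside CIDR $1.
cidr_contains() {
  local outer_first outer_last inner_first inner_last
  read -r outer_first outer_last <<< "$(cidr_range "$1")"
  read -r inner_first inner_last <<< "$(cidr_range "$2")"
  (( inner_first >= outer_first && inner_last <= outer_last ))
}

# Succeed if CIDRs $1 and $2 share at least one address.
cidr_overlaps() {
  local a_first a_last b_first b_last
  read -r a_first a_last <<< "$(cidr_range "$1")"
  read -r b_first b_last <<< "$(cidr_range "$2")"
  (( a_first <= b_last && b_first <= a_last ))
}

VPC_CIDR="10.0.0.0/16"        # hypothetical: replace with your VPC CIDR
NODE_CIDR="192.168.100.0/24"  # remote_node_networks
POD_CIDR="192.168.101.0/24"   # remote_pod_networks
SG_CIDR="192.168.100.0/23"    # hybrid-all ingress rule

for net in "$NODE_CIDR" "$POD_CIDR"; do
  if cidr_contains "$SG_CIDR" "$net"; then
    echo "OK:   $net is covered by the $SG_CIDR ingress rule"
  else
    echo "FAIL: $net is not covered by the $SG_CIDR ingress rule"
  fi
  if cidr_overlaps "$VPC_CIDR" "$net"; then
    echo "FAIL: $net overlaps the VPC CIDR $VPC_CIDR"
  fi
done
if cidr_overlaps "$NODE_CIDR" "$POD_CIDR"; then
  echo "FAIL: node and pod networks overlap"
fi
```

With the values from this post (and the placeholder VPC CIDR), it prints two OK lines and no FAIL lines.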

Hybrid Nodes and SSM Activation

We define:

- An IAM role, created with the hybrid-node-role submodule.
- An SSM activation that lets the hybrid nodes register with AWS Systems Manager and join the cluster using that role.
- A nodeConfig.yaml file containing the activation credentials, generated automatically during tofu apply.

```
tofu apply
....
Apply complete! Resources: 50 added, 0 changed, 0 destroyed.
```

The apply also writes nodeConfig.yaml, which the on-premises nodes need in order to authenticate through AWS Systems Manager.
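Since nodeadm takes the cluster name, region, and SSM activation ID and code from this file, a quick pre-flight check can catch a missing or empty value before the file is copied to the nodes. The helper below is a hypothetical sketch: it uses plain grep on the field names from the NodeConfig template above rather than a YAML parser.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): check that nodeConfig.yaml has a non-empty value
# for each field nodeadm needs. Text check only, not a YAML parser.
set -euo pipefail

check_node_config() {
  local file=$1 key missing=0
  for key in name region activationId activationCode; do
    # Match "<key>: <value>" at any indentation level.
    if ! grep -Eq "^[[:space:]]*${key}:[[:space:]]*[^[:space:]]" "$file"; then
      echo "missing or empty field: $key" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage (in the directory where tofu apply ran):
#   check_node_config nodeConfig.yaml && echo "nodeConfig.yaml looks complete"
```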

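Update your kubeconfig after creation (replace us-east-1 with your region and my-eks-cluster with your cluster name, the value of local.name):

```bash
aws eks update-kubeconfig --region us-east-1 --name my-eks-cluster
```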

Now your infrastructure should look like this:

Setting Up the Hybrid Nodes

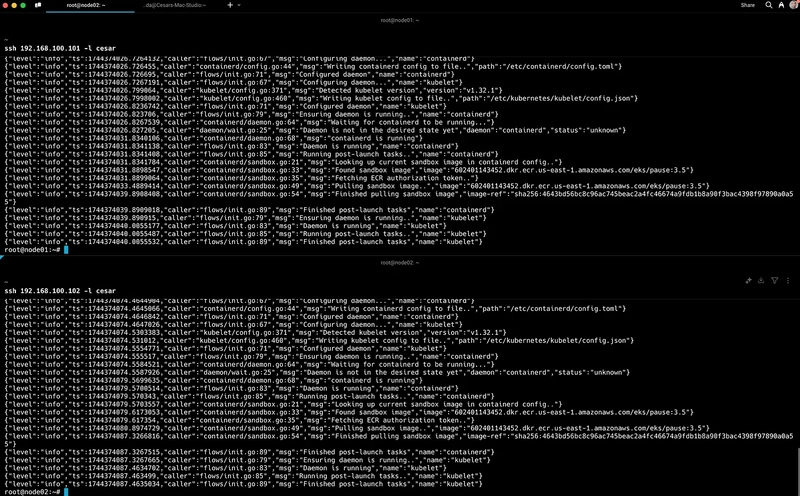

We'll SSH into the two on-premises nodes, 192.168.100.101 and 192.168.100.102. The on-premises environment uses two networks:

- 192.168.100.0/24 for the nodes.
- 192.168.101.0/24 for the pods.

Transfer the node config:

```bash
scp nodeConfig.yaml cesar@192.168.100.101:/tmp/
scp nodeConfig.yaml cesar@192.168.100.102:/tmp/
```

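Install and initialize nodeadm (1.32 is the cluster's Kubernetes version; moving the binary and running nodeadm require root):

```bash
# arm64 build; on x86_64 hosts, replace arm64 with amd64 in the URL
curl -OL 'https://hybrid-assets.eks.amazonaws.com/releases/latest/bin/linux/arm64/nodeadm'
chmod a+x nodeadm
sudo mv nodeadm /usr/local/bin/.
sudo nodeadm install 1.32 --credential-provider ssm
sudo nodeadm init --config-source file:///tmp/nodeConfig.yaml
```

Run these steps on both nodes.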
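The download command above fetches the arm64 build of nodeadm. The same path also serves an amd64 build for x86_64 hosts, so a small helper can choose the URL from uname -m. This is a hypothetical convenience function, not part of nodeadm; only the architecture segment of the URL changes.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): map a `uname -m` value to the nodeadm build name
# and print the matching download URL.
set -euo pipefail

nodeadm_arch() {
  case "$1" in
    x86_64|amd64)  echo amd64 ;;
    aarch64|arm64) echo arm64 ;;
    *) echo "unsupported architecture: $1" >&2; return 1 ;;
  esac
}

nodeadm_url() {
  local arch
  arch=$(nodeadm_arch "$1") || return 1
  echo "https://hybrid-assets.eks.amazonaws.com/releases/latest/bin/linux/${arch}/nodeadm"
}

# Usage on a node:
#   curl -OL "$(nodeadm_url "$(uname -m)")"
```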

Once done, check node registration:

```bash
kubectl get nodes -o wide
```

```
NAME                          STATUS     ROLES    AGE     VERSION               INTERNAL-IP       EXTERNAL-IP   OS-IMAGE                        KERNEL-VERSION                    CONTAINER-RUNTIME
ip-10-0-19-137.ec2.internal   Ready      <none>   16m     v1.32.1-eks-5d632ec   10.0.19.137       <none>        Amazon Linux 2023.7.20250331    6.1.131-143.221.amzn2023.x86_64   containerd://1.7.27
mi-03c1eb4c6173151d6          NotReady   <none>   3m4s    v1.32.1-eks-5d632ec   192.168.100.102   <none>        Ubuntu 22.04.5 LTS              5.15.0-136-generic                containerd://1.7.24
mi-091f1dddb980a80ff          NotReady   <none>   3m51s   v1.32.1-eks-5d632ec   192.168.100.101   <none>        Ubuntu 22.04.5 LTS              5.15.0-136-generic                containerd://1.7.24
```

The hybrid nodes appear but are NotReady. This is expected: the VPC CNI does not support hybrid nodes, so they have no CNI until we install one.
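While waiting for the nodes to settle, it can help to list only the nodes that are not yet Ready. A minimal sketch with awk, assuming STATUS is the second column as in the output above; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): print the names of nodes whose STATUS is anything
# other than plain "Ready" (e.g. NotReady). Reads `kubectl get nodes` output on
# stdin and assumes STATUS is the second column (default and -o wide layouts).
set -euo pipefail

not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage:
#   kubectl get nodes -o wide | not_ready_nodes
```

To block until the hybrid nodes become Ready instead of polling, kubectl wait can target them by the eks.amazonaws.com/compute-type=hybrid label, the same label the Cilium values rely on: `kubectl wait --for=condition=Ready nodes -l eks.amazonaws.com/compute-type=hybrid --timeout=10m`.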

Disclaimer: although the documentation lists both Ubuntu 22.04 and 24.04 as supported, I couldn’t get 24.04 working: kube-proxy failed to apply its iptables rules because nf_conntrack was missing. If you manage to fix this, please share!

Setting Up the Network with Cilium

We’ll use Cilium as the CNI for the hybrid nodes.

cilium-values.yaml:

```yaml
affinity:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
        - matchExpressions:
            - key: eks.amazonaws.com/compute-type
              operator: In
              values:
                - hybrid
ipam:
  mode: cluster-pool
  operator:
    clusterPoolIPv4MaskSize: 24
    clusterPoolIPv4PodCIDRList:
      - 192.168.101.0/24
operator:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: eks.amazonaws.com/compute-type
                operator: In
                values:
                  - hybrid
  unmanagedPodWatcher:
    restart: false
envoy:
  enabled: false
```

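These values use node affinity on the eks.amazonaws.com/compute-type=hybrid label to run both the Cilium agent pods and the Cilium operator only on hybrid nodes, so Cilium stays off the EC2 node, which keeps using the VPC CNI. They also set the clusterPoolIPv4PodCIDRList pool to the on-premises pod subnet, 192.168.101.0/24.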
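In cluster-pool mode, Cilium gives each node its own block of size clusterPoolIPv4MaskSize carved from clusterPoolIPv4PodCIDRList, so the number of per-node blocks is 2 to the power of (mask size minus pool prefix length). A small sketch of that arithmetic, with hypothetical helper names: with the values above (a /24 pool and a mask size of 24) the pool yields a single block, so if the second hybrid node does not receive a pod CIDR, a mask size of 25 (two /25 blocks of 128 addresses) is worth trying.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helpers): how many per-node pod CIDR blocks a Cilium
# cluster-pool can hand out, and how many addresses each block holds.
set -euo pipefail

# Usage: pool_blocks <pool-prefix-length> <per-node-mask-size>
pool_blocks() {
  local pool=$1 mask=$2
  if (( mask < pool )); then
    echo "a /$mask block does not fit in a /$pool pool" >&2
    return 1
  fi
  echo $(( 1 << (mask - pool) ))
}

# Addresses in one block of the given mask size.
block_size() {
  echo $(( 1 << (32 - $1) ))
}

for mask in 24 25 26; do
  echo "/24 pool, mask $mask: $(pool_blocks 24 "$mask") node block(s) of $(block_size "$mask") addresses"
done
```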

Install Cilium:

```bash
helm repo add cilium https://helm.cilium.io/
helm upgrade -i cilium cilium/cilium \
  --version 1.17.2 \
  --namespace kube-system \
  --values cilium-values.yaml
```

```
...
Release "cilium" does not exist. Installing it now.
NAME: cilium
LAST DEPLOYED: Fri Apr 11 08:35:31 2025
NAMESPACE: kube-system
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
You have successfully installed Cilium with Hubble.

Your release version is 1.17.2.

For any further help, visit https://docs.cilium.io/en/v1.17/gettinghelp
```

After a few minutes, the nodes should become Ready. Check again:

```bash
kubectl get nodes -o wide
```

You should see:

```
NAME                          STATUS     ROLES    AGE     VERSION               INTERNAL-IP       EXTERNAL-IP   OS-IMAGE                        KERNEL-VERSION                    CONTAINER-RUNTIME
ip-10-0-19-137.ec2.internal   Ready      <none>   56m     v1.32.1-eks-5d632ec   10.0.19.137       <none>        Amazon Linux 2023.7.20250331    6.1.131-143.221.amzn2023.x86_64   containerd://1.7.27
mi-03c1eb4c6173151d6          Ready      <none>   42m     v1.32.1-eks-5d632ec   192.168.100.102   <none>        Ubuntu 22.04.5 LTS              5.15.0-136-generic                containerd://1.7.24
mi-091f1dddb980a80ff          Ready      <none>   43m     v1.32.1-eks-5d632ec   192.168.100.101   <none>        Ubuntu 22.04.5 LTS              5.15.0-136-generic                containerd://1.7.24
```

Your hybrid nodes are now fully integrated with EKS.