Do We Still Need Complex Vision-Language Pipelines? Researchers from ByteDance and WHU Introduce Pixel-SAIL—A Single Transformer Model for Pixel-Level Understanding That Outperforms 7B MLLMs

This post first appeared on MarkTechPost.

Multimodal large language models (MLLMs) have recently advanced in fine-grained, pixel-level visual understanding, expanding their applications to tasks such as precise region-based editing and segmentation. Despite their effectiveness, most existing approaches rely on complex architectures composed of separate components such as vision encoders (e.g., CLIP), segmentation networks, and additional fusion or decoding modules. This modularity increases system complexity and limits scalability, especially when adapting to new tasks. Inspired by unified architectures that jointly learn visual and textual features using a single transformer, recent efforts have explored simpler designs that avoid external components while still performing strongly on tasks requiring detailed visual grounding and language interaction.

Historically, vision-language models have evolved from contrastive learning approaches, such as CLIP and ALIGN, toward large-scale models that address open-ended tasks, including visual question answering and optical character recognition. These models typically fuse vision and language features either by injecting language into visual transformers or by appending segmentation networks to large language models. However, such methods often require intricate engineering and depend on the performance of individual submodules. Recent research has begun to explore encoder-free designs that unify image and text learning within a single transformer, enabling more efficient training and inference. These approaches have also been extended to tasks such as referring expression segmentation and visual prompt understanding, aiming to support region-level reasoning and interaction without multiple specialized components.

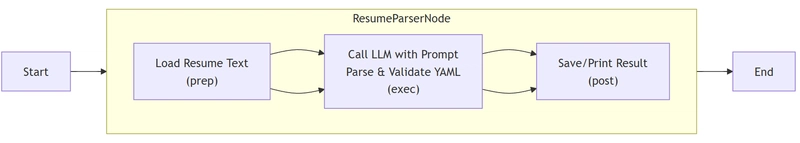

Researchers from ByteDance and WHU present Pixel-SAIL, a single-transformer framework designed for pixel-wise multimodal tasks that does not rely on extra vision encoders. It introduces three key innovations: a learnable upsampling module to refine visual features, a visual prompt injection strategy that maps prompts into text tokens, and a vision expert distillation method to enhance mask quality. Pixel-SAIL is trained on a mixture of referring segmentation, VQA, and visual prompt datasets. It outperforms larger models, such as GLaMM (7B) and OMG-LLaVA (7B), on five benchmarks, including the newly proposed PerBench, while maintaining a significantly simpler architecture.
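The visual prompt injection idea can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: it assumes each visual prompt is a binary mask over the patch grid, that the prompt is tied to a special prompt token (its embedding is called `vp1_embedding` here, a hypothetical name), and that fusion is a simple element-wise addition of that embedding onto the covered vision tokens before they enter the transformer.

```python
from typing import List

Vector = List[float]


def inject_visual_prompt(
    vision_tokens: List[Vector],
    prompt_mask: List[int],
    prompt_embedding: Vector,
) -> List[Vector]:
    """Add the prompt embedding to every vision token covered by the prompt mask.

    Assumption: fusion is plain addition; the actual model may differ.
    """
    if len(vision_tokens) != len(prompt_mask):
        raise ValueError("one mask entry is required per vision token")
    fused = []
    for token, covered in zip(vision_tokens, prompt_mask):
        if covered:
            # Early fusion: the region marked by the prompt carries the prompt token.
            fused.append([t + p for t, p in zip(token, prompt_embedding)])
        else:
            # Tokens outside the prompt region are copied unchanged.
            fused.append(list(token))
    return fused


# Toy example: four patch tokens of width 2; the prompt covers patches 1 and 2.
vision_tokens = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
prompt_mask = [0, 1, 1, 0]
vp1_embedding = [0.5, -0.5]  # hypothetical embedding of a special prompt token

fused = inject_visual_prompt(vision_tokens, prompt_mask, vp1_embedding)
print(fused)
```

Because the same prompt token can also appear in the text sequence, a question that refers to the prompt can be linked to the marked region without a separate prompt encoder.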

Pixel-SAIL is a simple yet effective single-transformer model for fine-grained vision-language tasks that eliminates the need for separate vision encoders. The researchers first design a plain encoder-free MLLM baseline and identify its limitations in segmentation quality and visual prompt understanding. To overcome these, Pixel-SAIL introduces: (1) a learnable upsampling module for high-resolution feature recovery, (2) a visual prompt injection technique enabling early fusion with vision tokens, and (3) a dense feature distillation strategy using expert models such as Mask2Former and SAM2. They also introduce PerBench, a new benchmark that assesses object captioning, visual-prompt understanding, and visual-text referring segmentation (V-T RES) across 1,500 annotated examples.
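To make the dense feature distillation step more concrete, here is a small sketch of what a feature-matching loss could look like. The article does not state which loss Pixel-SAIL uses, so mean squared error is an assumption chosen for simplicity, and the names `student_features` and `teacher_features` are hypothetical stand-ins for the single transformer's dense features and the frozen expert's features (for example, from Mask2Former or SAM2) at the same spatial locations.

```python
from typing import List


def feature_distillation_loss(
    student: List[List[float]],
    teacher: List[List[float]],
) -> float:
    """Mean squared error between student and teacher feature vectors.

    Assumption: MSE is used only for illustration; the real loss may differ.
    """
    if len(student) != len(teacher) or not student:
        raise ValueError("student and teacher need the same non-zero length")
    total = 0.0
    count = 0
    for s_vec, t_vec in zip(student, teacher):
        if len(s_vec) != len(t_vec):
            raise ValueError("feature dimensions must match per location")
        for s, t in zip(s_vec, t_vec):
            total += (s - t) ** 2
            count += 1
    return total / count


# Toy example: two spatial locations, feature width 2.
student_features = [[1.0, 2.0], [3.0, 4.0]]
teacher_features = [[1.0, 0.0], [3.0, 2.0]]

loss = feature_distillation_loss(student_features, teacher_features)
print(loss)
```

In a setup like this the expert is only needed during training; at inference time the single transformer runs on its own, which is consistent with the article's claim of a simpler architecture.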

The experiments evaluate Pixel-SAIL, built on modified SOLO and EVEv2 architectures, across several benchmarks and show its effectiveness in segmentation and visual prompt tasks. Pixel-SAIL outperforms other models, including segmentation specialists, with higher cIoU scores on datasets such as RefCOCO and gRefCOCO. Scaling the model from 0.5B to 3B parameters leads to further improvements. Ablation studies show that incorporating visual prompt mechanisms, data scaling, and distillation strategies enhances performance. Visualization analysis indicates that Pixel-SAIL’s image and mask features are denser and more diverse, resulting in improved segmentation results.
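For readers unfamiliar with the metric, cIoU (cumulative IoU) in referring segmentation is usually computed as the total intersection over the total union across the whole dataset, rather than as an average of per-image IoU scores. The sketch below illustrates that definition on toy data; masks are flattened lists of 0/1 values, and the function name `cumulative_iou` is chosen here for illustration.

```python
from typing import List, Sequence


def cumulative_iou(
    predictions: Sequence[List[int]],
    targets: Sequence[List[int]],
) -> float:
    """Sum intersections and unions over all samples, then divide once."""
    total_intersection = 0
    total_union = 0
    for pred, gt in zip(predictions, targets):
        for p, g in zip(pred, gt):
            if p and g:
                total_intersection += 1
            if p or g:
                total_union += 1
    # An empty union (no foreground anywhere) is reported as 0.0 here.
    return total_intersection / total_union if total_union else 0.0


# Toy example: two flattened 2x2 masks.
predictions = [[1, 1, 0, 0], [1, 1, 1, 1]]
targets = [[1, 0, 0, 0], [1, 1, 1, 0]]

ciou = cumulative_iou(predictions, targets)
print(round(ciou, 4))
```

In this toy case the cumulative score is 4/6, while the mean of the per-image IoUs (0.5 and 0.75) would be 0.625. The two differ because cIoU weights large objects more heavily.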

In conclusion, Pixel-SAIL, a simplified MLLM for pixel-grounded tasks, achieves strong performance without requiring additional components such as vision encoders or segmentation models. The model incorporates three key innovations: a learnable upsampling module, a visual prompt encoding strategy, and vision expert distillation for enhanced feature extraction. Pixel-SAIL is evaluated on four referring segmentation benchmarks and a new, challenging benchmark, PerBench, which includes tasks such as object description, visual prompt-based Q&A, and referring segmentation. The results show that Pixel-SAIL performs as well as or better than existing models, with a simpler architecture.
