AlphaEvolve: A Comprehensive Report on Gemini-powered Algorithm Discovery


Introduction

- This report examines Google DeepMind's AlphaEvolve, officially announced on May 14, 2025.

- AlphaEvolve is an evolutionary coding agent powered by Gemini large language models, focused on general-purpose algorithm discovery and optimization.

- The report analyzes AlphaEvolve's technical principles, practical applications, and potential impact on future AI and algorithmic research.

Part 1: AlphaEvolve Technical Architecture and Working Principles

- Core Technology Combination: AlphaEvolve pairs the creative capabilities of large language models with automated evaluators, using an evolutionary framework to improve the most promising algorithmic ideas.

Model Ensemble Strategy: The system leverages a combination of Gemini models:

- Gemini Flash (fastest and most efficient model): Maximizes the breadth of ideas explored

- Gemini Pro (most powerful model): Provides critical depth with insightful suggestions

Workflow Process:

- Verification Mechanism: AlphaEvolve verifies, runs, and scores proposed programs using automated evaluation metrics, providing objective, quantifiable assessment of solution quality.

- Applicable Domains: Particularly effective in domains where progress can be clearly and systematically measured, such as mathematics and computer science.
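
The propose, evaluate, and select cycle described in Part 1 can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not AlphaEvolve's implementation: `propose` is a hypothetical stand-in for a language model call (here it only perturbs one number), a "program" is reduced to a list of three coefficients, and `evaluate` is an invented scoring function playing the role of the automated evaluator.

```python
import random


def evaluate(program):
    """Toy automated evaluator: higher scores are better.

    Assumption: a "program" is a list of three coefficients, scored by the
    negative squared error against a fixed target. A real evaluator would
    run and measure actual code.
    """
    target = [3.0, -1.0, 2.0]
    return -sum((p - t) ** 2 for p, t in zip(program, target))


def propose(parent, rng, step=0.5):
    """Hypothetical stand-in for a model proposing an edit to a parent program."""
    child = list(parent)
    index = rng.randrange(len(child))
    child[index] += rng.uniform(-step, step)
    return child


def evolve(generations=200, population_size=8, seed=0):
    """Keep the best-scoring candidates and derive new ones from them."""
    rng = random.Random(seed)
    population = [[0.0, 0.0, 0.0]]
    for _ in range(generations):
        children = [propose(parent, rng) for parent in population]
        # Selection: score everything and keep only the top candidates.
        ranked = sorted(population + children, key=evaluate, reverse=True)
        population = ranked[:population_size]
    return population[0]


best = evolve()
print("best candidate:", [round(x, 2) for x in best])
print("score:", round(evaluate(best), 4))
```

The point of the sketch is the division of labor: the proposer can be arbitrarily creative because every candidate is scored objectively before it is allowed to survive.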

Part 2: Practical Applications and Impact

Data Center Scheduling Optimization:

- AlphaEvolve discovered a simple yet remarkably effective heuristic to help Borg orchestrate Google's data centers more efficiently

- In production for over a year, continuously recovering an average of 0.7% of Google's worldwide compute resources

- Because the solution is human-readable code, it offers significant operational advantages: interpretability, debuggability, predictability, and ease of deployment

Hardware Design Assistance:

- Proposed a Verilog rewrite that removed unnecessary bits in a key arithmetic circuit for matrix multiplication

- Integrated into an upcoming Tensor Processing Unit (TPU)

- Promotes collaboration between AI and hardware engineers to accelerate specialized chip design

AI Training and Inference Enhancement:

- Optimized matrix multiplication operations, speeding up a vital kernel in Gemini's architecture by 23%

- Led to a 1% reduction in Gemini's training time

- Reduced kernel optimization engineering time from weeks of expert effort to days of automated experiments

- Achieved up to 32.5% speedup for the FlashAttention kernel implementation in Transformer-based AI models
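
The 23% kernel speedup and the 1% training-time reduction can be related with Amdahl's law. The sketch below is a back-of-the-envelope reading, not a figure from the source: it assumes "23% faster" means the kernel runs 1.23 times as fast, and it infers what share of total training time that kernel would have to occupy for the overall saving to be 1%. The function names are illustrative.

```python
def overall_time_ratio(kernel_fraction, kernel_speedup):
    """Amdahl's law: new total time divided by old total time."""
    return (1.0 - kernel_fraction) + kernel_fraction / kernel_speedup


def implied_kernel_fraction(kernel_speedup, overall_time_reduction):
    """Share of total time the kernel must occupy to explain the overall saving."""
    saved_per_unit_of_kernel_time = 1.0 - 1.0 / kernel_speedup
    return overall_time_reduction / saved_per_unit_of_kernel_time


# Assumption: a 23% speedup is read as a 1.23x throughput factor.
fraction = implied_kernel_fraction(kernel_speedup=1.23, overall_time_reduction=0.01)
print(f"implied kernel share of training time: {fraction:.1%}")
print(f"check, new/old total time: {overall_time_ratio(fraction, 1.23):.4f}")
```

Under that reading the kernel accounts for roughly 5% of training time, which is why a large speedup in one kernel translates into a much smaller end-to-end gain.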

Part 3: Breakthroughs in Mathematics and Algorithm Discovery

Matrix Multiplication Algorithm Innovation:

- Found an algorithm to multiply 4x4 complex-valued matrices using 48 scalar multiplications

- Improved upon Strassen's 1969 algorithm, previously the best known in this setting, which needs 49 scalar multiplications when applied recursively to 4x4 matrices

- Demonstrated a significant advance over previous work (AlphaTensor), which for 4x4 matrices found improvements only in binary arithmetic
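
To make the 49-versus-48 comparison concrete, the following sketch applies Strassen's 2x2 scheme recursively to 4x4 complex matrices and counts scalar multiplications. It reproduces only the Strassen baseline (7 x 7 = 49); AlphaEvolve's 48-multiplication algorithm is not reproduced here. The helper names and the test matrices are illustrative choices.

```python
import math


def add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def sub(A, B):
    return [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def split(M):
    """Split a square matrix into four equally sized quadrants."""
    h = len(M) // 2
    return ([r[:h] for r in M[:h]], [r[h:] for r in M[:h]],
            [r[:h] for r in M[h:]], [r[h:] for r in M[h:]])


def strassen(A, B, counter):
    """Recursive Strassen product; counter[0] tallies scalar multiplications."""
    if len(A) == 1:
        counter[0] += 1
        return [[A[0][0] * B[0][0]]]
    A11, A12, A21, A22 = split(A)
    B11, B12, B21, B22 = split(B)
    M1 = strassen(add(A11, A22), add(B11, B22), counter)
    M2 = strassen(add(A21, A22), B11, counter)
    M3 = strassen(A11, sub(B12, B22), counter)
    M4 = strassen(A22, sub(B21, B11), counter)
    M5 = strassen(add(A11, A12), B22, counter)
    M6 = strassen(sub(A21, A11), add(B11, B12), counter)
    M7 = strassen(sub(A12, A22), add(B21, B22), counter)
    C11 = add(sub(add(M1, M4), M5), M7)
    C12 = add(M3, M5)
    C21 = add(M2, M4)
    C22 = add(add(sub(M1, M2), M3), M6)
    top = [left + right for left, right in zip(C11, C12)]
    bottom = [left + right for left, right in zip(C21, C22)]
    return top + bottom


def naive(A, B):
    """Schoolbook product, used only to check the Strassen result."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


# Small integer-valued complex entries keep floating-point arithmetic exact.
A = [[complex(i + j, i - j) for j in range(4)] for i in range(4)]
B = [[complex(i * j, 1 + j) for j in range(4)] for i in range(4)]
counter = [0]
product_matrix = strassen(A, B, counter)
print("scalar multiplications:", counter[0])
print("exponent from 49:", round(math.log(49, 4), 4))
print("exponent from 48:", round(math.log(48, 4), 4))
```

Saving a single multiplication matters because the scheme can be applied recursively to larger matrices: the asymptotic exponent drops from log_4(49), about 2.807, to log_4(48), about 2.792.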

Mathematical Open Problem Research:

- Applied to over 50 open problems in mathematical analysis, geometry, combinatorics, and number theory

- Rediscovered state-of-the-art solutions in approximately 75% of cases

- Improved previously best known solutions in 20% of cases

Specific Mathematical Breakthrough Case:

- Advanced the "kissing number problem," a geometric challenge that has fascinated mathematicians for over 300 years

- Discovered a configuration of 593 outer spheres and established a new lower bound in 11 dimensions
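
A kissing configuration is a good example of a result that can be checked automatically: every outer sphere must touch the central one, and no two outer spheres may overlap. The sketch below is a minimal verifier under stated assumptions. It works on integer coordinates so the check is exact, and it is exercised on the classical 12-sphere and 24-sphere configurations in 3 and 4 dimensions, not on the 593-sphere configuration from the source, whose coordinates are not given here. The function names are illustrative.

```python
from itertools import combinations, product


def two_nonzero_vectors(n):
    """All integer vectors in n dimensions with exactly two entries equal to +1 or -1."""
    vectors = []
    for i, j in combinations(range(n), 2):
        for sign_i, sign_j in product((1, -1), repeat=2):
            v = [0] * n
            v[i], v[j] = sign_i, sign_j
            vectors.append(tuple(v))
    return vectors


def is_kissing_configuration(centers):
    """Exact check of a candidate configuration of outer sphere centers.

    Centers are given up to a common scale. With every center at the same
    distance R from the origin, the outer spheres touch the central sphere,
    and they do not overlap exactly when every pairwise distance is >= R.
    """
    radius_sq = sum(c * c for c in centers[0])
    if radius_sq == 0:
        return False
    if any(sum(c * c for c in v) != radius_sq for v in centers):
        return False
    return all(
        sum((a - b) ** 2 for a, b in zip(u, v)) >= radius_sq
        for u, v in combinations(centers, 2)
    )


for dimension in (3, 4):
    centers = two_nonzero_vectors(dimension)
    print(dimension, len(centers), is_kissing_configuration(centers))
```

The same check scales to any dimension: a claimed lower bound is established as soon as a configuration with that many centers passes it.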

Part 4: Community Discussion and Future Outlook

Reddit Community Perspectives:

- Questioning the delay between implementation and disclosure: "If these discoveries are year old and are disclosed only now then what are they doing right now?"

- Contemplating potential for improvement: "Is this a one-time boost or a predictor of further RL improvement?"

- Skepticism about data not being used for model training: "Their researchers state in the accompanying interview they haven't really done that yet... this seems incredibly strange"

- Recognition of AlphaEvolve as a more general RL system: "That's already huge for practical AI applications in science, without needing ASI or anything"

Future Development Path:

- Expected to continue improving alongside large language model capabilities, especially as the models become better at coding

- Google is collaborating with its People + AI Research team to develop a user interface for AlphaEvolve

- Planning an Early Access Program for selected academic users

- Exploring broader applications, including materials science, drug discovery, sustainability, and wider technological and business uses

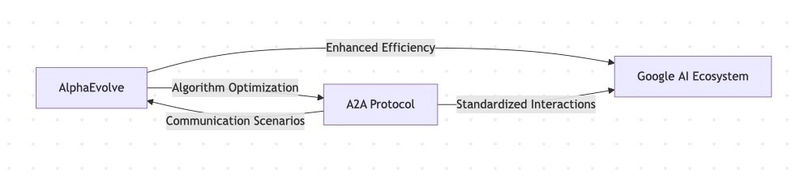

Part 5: Potential Integration with A2A Protocol

- Google Ecosystem Synergy: As both AlphaEvolve and the A2A (Agent2Agent) Protocol originate at Google, there is potential for mutual integration and support.

- Enhanced Agent Capabilities: AlphaEvolve could significantly enhance A2A Protocol by providing advanced algorithmic optimization for agent-to-agent communications.

- Optimized Communication Patterns: The evolutionary approach of AlphaEvolve could discover more efficient communication patterns between AI agents operating within the A2A framework.

- Computational Efficiency: Integration could lead to more computationally efficient agent interactions, reducing latency and resource requirements.

- Standardized Algorithm Development: AlphaEvolve might help establish standardized algorithms for specific agent interaction scenarios, further solidifying Google's position in the AI ecosystem.

Conclusion

- AlphaEvolve represents a significant advancement in algorithm discovery, demonstrating the powerful potential of combining large language models with evolutionary algorithms.

- The technology has already made substantial impacts across Google's computing ecosystem, including data center efficiency improvements, hardware design optimization, and AI training acceleration.

- In mathematics and algorithm research, AlphaEvolve not only reproduces existing best solutions but achieves breakthroughs on certain open problems.

- Community discussions reflect high interest in the technology's development pace and potential, while raising important questions about technical transparency and future applications.

- As large language model capabilities continue to improve, AlphaEvolve has the potential to create transformative impacts across broader domains, particularly in problem areas where solutions can be described as algorithms and automatically verified.

- The potential integration with A2A Protocol highlights Google's strategic approach to creating synergies between its advanced AI technologies, potentially establishing new standards for agent communication and interaction in the evolving AI landscape.
