"Unlocking Speed: How XAttention Transforms Long-Context Inference in AI"

Processing long inputs efficiently remains one of the main practical challenges for Transformer-based AI systems. Inference slows sharply as context length grows, because standard attention compares every token with every other token. If you have been frustrated by slow inference on long documents or videos, you're not alone. XAttention is a block-sparse attention approach designed to speed up long-context inference while keeping accuracy comparable to full attention. In this post, we look at how XAttention works, where it can be applied, how it compares with traditional attention, and which trends are shaping contextual processing in AI.

Understanding Long-Context Inference

Long-context inference means running a Transformer model over very long input sequences, and it becomes harder as inputs grow in length and complexity. XAttention addresses this challenge with block-sparse attention: it uses antidiagonal scoring to identify and prune non-essential blocks of the attention matrix, so attention is computed only where it matters. The reported result is a speedup of up to 13.5× without compromising accuracy across various benchmarks, which makes the approach attractive for applications like video understanding and generation.

Key Features of XAttention

XAttention’s design focuses on long-range context processing through two techniques: threshold prediction and block selection. Together they decide which blocks of the attention matrix to keep, improving computational efficiency while preserving accuracy in large language models (LLMs). This balance between fast inference and robust performance is what sets it apart from traditional approaches to lengthy sequences.
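One way to picture threshold-based block selection is the sketch below. It assumes each query block already has a non-negative importance score for every key block, and it keeps the smallest set of key blocks whose normalized scores add up to a chosen threshold. The function name `select_blocks`, the `threshold` parameter, and the normalization step are illustrative assumptions, not the framework's actual API.

```python
import numpy as np

def select_blocks(scores, threshold=0.9):
    """Return a boolean mask of key blocks to keep for each query block.

    scores: array of shape (query_blocks, key_blocks), non-negative, with a
    positive sum in every row (assumption of this sketch).
    """
    scores = np.asarray(scores, dtype=float)
    probs = scores / scores.sum(axis=-1, keepdims=True)

    # Sort each row from most to least important block.
    order = np.argsort(-probs, axis=-1)
    sorted_probs = np.take_along_axis(probs, order, axis=-1)

    # Mass accumulated before each block; keep a block while that mass
    # is still below the threshold.
    mass_before = np.cumsum(sorted_probs, axis=-1) - sorted_probs
    keep_sorted = mass_before < threshold

    # Scatter the decisions back to the original block positions.
    mask = np.zeros_like(keep_sorted)
    np.put_along_axis(mask, order, keep_sorted, axis=-1)
    return mask

# Hypothetical scores for 2 query blocks and 3 key blocks.
mask = select_blocks([[7, 2, 1], [1, 1, 8]], threshold=0.75)
print(mask)
```

A lower threshold keeps fewer blocks (faster, less exact), while a threshold of 1.0 keeps every block with non-zero score.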

The framework's collaborative development highlights significant advancements in machine learning research, paving the way for future innovations aimed at enhancing memory bandwidth efficiency and overall model performance across diverse AI applications.

What is XAttention?

XAttention is a framework designed to speed up long-context inference in Transformer models using sparse attention. With antidiagonal scoring, it identifies and eliminates non-essential blocks within the attention matrix, reaching a level of sparsity that speeds up inference by up to 13.5 times without compromising accuracy. This addresses the cost that traditional attention methods incur on lengthy sequences, making XAttention particularly effective for real-world applications such as video understanding and generation.

Key Features of XAttention

The architecture of XAttention incorporates threshold prediction and block selection strategies, which contribute to its computational efficiency while maintaining high performance across various benchmarks. The paper highlights how these methods not only improve speed but also give reliable results in tasks that require extensive context comprehension. The work is a collaboration among multiple authors, and it lays groundwork for future developments in AI-driven applications.

The Science Behind Speed Transformation

XAttention introduces a groundbreaking approach to enhancing the efficiency of Transformer models through block-sparse attention mechanisms. By employing antidiagonal scoring, XAttention identifies and prunes non-essential blocks within the attention matrix, achieving remarkable speed improvements—up to 13.5 times faster inference without sacrificing accuracy. This method addresses the inherent challenges associated with long-context processing in large language models (LLMs), where traditional dense attention can become computationally prohibitive.
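The idea of scoring blocks along antidiagonals can be sketched as follows. This is a minimal illustration, assuming a single attention head, no causal mask, and a sequence length that is a multiple of the block size; the function name, the `block` and `stride` parameters, and the choice to score softmax weights are assumptions of this sketch rather than details confirmed by the text above. For clarity it materializes the full attention matrix, which a practical kernel would avoid by computing only the sampled entries.

```python
import numpy as np

def antidiagonal_block_scores(q, k, block=4, stride=2):
    """Score each (query-block, key-block) tile by summing sampled antidiagonals.

    q, k: arrays of shape (n, d). Returns shape (n // block, n // block).
    """
    n, d = q.shape
    assert n % block == 0, "sequence length must be a multiple of block"

    # Dense softmax attention weights (illustration only).
    logits = q @ k.T / np.sqrt(d)
    logits = logits - logits.max(axis=-1, keepdims=True)
    weights = np.exp(logits)
    weights /= weights.sum(axis=-1, keepdims=True)

    # Positions inside a tile that lie on every `stride`-th antidiagonal.
    i, j = np.indices((block, block))
    on_antidiagonal = (i + j) % stride == stride - 1

    # View the matrix as a grid of tiles: [query_block, key_block, row, col].
    nb = n // block
    tiles = weights.reshape(nb, block, nb, block).transpose(0, 2, 1, 3)
    return (tiles * on_antidiagonal).sum(axis=(-2, -1))

rng = np.random.default_rng(0)
q = rng.normal(size=(8, 4))
k = rng.normal(size=(8, 4))
scores = antidiagonal_block_scores(q, k)
print(scores.shape)  # (2, 2)
```

When `block >= stride`, every row and every column of a tile contributes at least one sampled entry, so a small sample still touches every token in the block. Tiles with low scores are the candidates for pruning.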

Technical Aspects of XAttention

The design of XAttention emphasizes both accuracy and computational efficiency by optimizing how attention is allocated across sequences. Techniques such as threshold prediction and strategic block selection play crucial roles in maximizing performance while minimizing resource consumption. Evaluations across various benchmarks demonstrate that XAttention not only accelerates inference but also maintains high fidelity in tasks ranging from video understanding to natural language processing, making it an essential advancement for real-world applications requiring rapid contextual analysis.

Real-World Applications of XAttention

XAttention is particularly useful in fields that need efficient long-context inference, such as video understanding and generation. Using block-sparse attention with antidiagonal scoring, it identifies and prunes non-essential blocks within the attention matrix. The result is a computational speedup of up to 13.5 times over traditional methods, with accuracy maintained across various benchmarks. Because the savings grow with sequence length, the approach could also suit latency-sensitive applications such as autonomous driving systems or interactive media.

Key Areas of Impact

- Video Understanding: In video analysis, where context spans multiple frames, XAttention enhances processing efficiency without sacrificing detail.
- Natural Language Processing (NLP): For NLP tasks that involve lengthy documents or conversations, this framework can significantly reduce inference time.
- Computer Vision: With its ability to handle large contexts efficiently, XAttention could improve object detection models by letting them consider broader spatial relationships over longer periods.

By addressing the cost of long-range dependencies in Transformer models through optimized attention, XAttention could change how AI systems process long, complex inputs across these domains.

Comparing Traditional vs. XAttention Methods

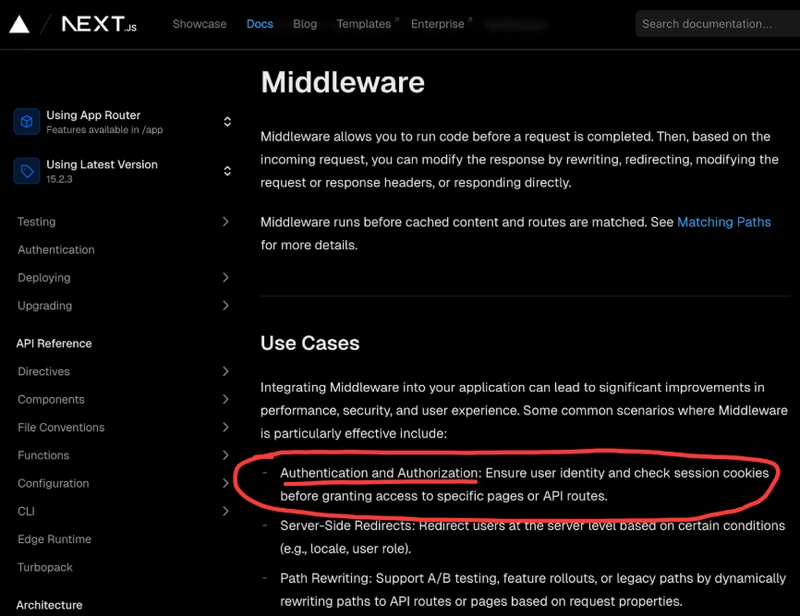

Traditional attention mechanisms in Transformer models often struggle with long-context inference due to their quadratic complexity, which limits scalability and efficiency. In contrast, the XAttention framework introduces a block-sparse attention approach that utilizes antidiagonal scoring to prune non-essential blocks within the attention matrix. This innovative design achieves up to 13.5× speedup while maintaining accuracy across various benchmarks. By balancing computational efficiency and performance, XAttention is particularly advantageous for applications requiring real-time processing of extensive data sequences, such as video understanding and generation.
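To make the quadratic growth concrete, the following sketch counts how many query-key scores dense attention computes and compares that with a block-sparse scheme. The block size of 64 and the budget of 8 kept key blocks per query block are hypothetical values chosen for illustration; in practice the number of kept blocks depends on the input and the selection threshold, and the count below ignores the cost of the scoring pass itself.

```python
def dense_score_entries(seq_len):
    """Number of query-key scores dense attention computes."""
    return seq_len * seq_len

def block_sparse_score_entries(seq_len, block, kept_blocks_per_row):
    """Scores computed when each query block keeps a fixed number of key blocks."""
    query_blocks = seq_len // block
    return query_blocks * kept_blocks_per_row * block * block

for n in (4096, 8192):
    dense = dense_score_entries(n)
    sparse = block_sparse_score_entries(n, block=64, kept_blocks_per_row=8)
    print(n, dense, sparse, dense / sparse)
# 4096 16777216 2097152 8.0
# 8192 67108864 4194304 16.0
```

Doubling the sequence length quadruples the dense count but only doubles the sparse count under this fixed budget. These ratios are arithmetic on made-up settings, not measurements, and they are unrelated to the 13.5× figure reported for XAttention.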

Key Differences

The primary distinction lies in how each method handles context length; traditional methods apply dense attention across all tokens, leading to significant resource consumption. Meanwhile, XAttention's selective focus on relevant information allows it to process longer sequences more effectively without sacrificing quality or precision. Additionally, techniques like threshold prediction enhance its adaptability further by optimizing block selection based on task requirements—something traditional methods lack.
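The difference between dense attention and selective, block-level attention can be sketched in a few lines. The example below assumes a boolean block mask is already available (how it is produced is outside this sketch), a single head, no causal masking, and at least one kept key block per query block. The function name `block_sparse_attention` is hypothetical, and a real implementation would use a fused GPU kernel rather than a Python loop.

```python
import numpy as np

def block_sparse_attention(q, k, v, mask, block):
    """Attention in which each query block attends only to kept key blocks.

    q, k: (n, d); v: (n, dv); mask: boolean (n // block, n // block).
    """
    n, d = q.shape
    out = np.zeros(v.shape, dtype=float)
    for a in range(n // block):
        rows = slice(a * block, (a + 1) * block)
        # Token indices covered by the key blocks kept for this query block.
        cols = np.flatnonzero(np.repeat(mask[a], block))
        logits = q[rows] @ k[cols].T / np.sqrt(d)
        logits = logits - logits.max(axis=-1, keepdims=True)
        w = np.exp(logits)
        w /= w.sum(axis=-1, keepdims=True)
        out[rows] = w @ v[cols]
    return out

rng = np.random.default_rng(0)
q, k, v = (rng.normal(size=(8, 4)) for _ in range(3))

# Keeping every block reproduces dense attention exactly.
full = np.ones((2, 2), dtype=bool)
dense_like = block_sparse_attention(q, k, v, full, block=4)

# Keeping only the diagonal blocks halves the number of scores computed.
diagonal = np.eye(2, dtype=bool)
sparse_out = block_sparse_attention(q, k, v, diagonal, block=4)
```

Only the kept blocks are ever multiplied, so the work scales with the number of `True` entries in the mask rather than with the full square of the sequence length.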

In summary, while both approaches aim at improving contextual understanding in AI models, XAttention offers substantial improvements over conventional frameworks through advanced sparsity techniques tailored for modern demands in machine learning tasks.

Future Trends in AI and Contextual Processing

Contextual processing in AI is likely to see significant advances, with frameworks like XAttention among those leading the way. By combining block-sparse attention with antidiagonal scoring, XAttention optimizes long-context inference within Transformer models, improving computational efficiency while maintaining high accuracy across various benchmarks. As industries rely more on real-time data processing, for example in video understanding and generation, the demand for efficient algorithms will rise. Techniques such as threshold prediction and block selection will further refine these systems, paving the way for more sophisticated applications that require rapid decision-making.

Innovations Driving Change

Emerging trends indicate a shift towards self-supervised learning methods that enhance representation learning while minimizing resource consumption. Models like Sonata demonstrate this by achieving robust performance in 3D tasks without extensive labeled datasets. Additionally, advancements in autoregressive visual generation through solutions like TokenBridge highlight the potential to merge discrete and continuous token representations effectively. These innovations signal a transformative era where AI can process complex contexts efficiently, enabling breakthroughs across diverse fields from natural language processing to computer vision.

In conclusion, the exploration of XAttention reveals its transformative potential in enhancing long-context inference within AI systems. By understanding the intricacies of long-context processing and how traditional methods often struggle with efficiency, we can appreciate the innovative approach that XAttention brings to the table. Its unique architecture not only accelerates processing speeds but also maintains accuracy across various applications, from natural language processing to complex data analysis. As we compare conventional techniques with XAttention's capabilities, it becomes evident that this advancement paves the way for more sophisticated AI models capable of handling extensive contextual information seamlessly. Looking ahead, as trends in AI continue to evolve towards greater contextual awareness and speed optimization, technologies like XAttention will undoubtedly play a pivotal role in shaping future developments and applications across diverse industries. Embracing these innovations is essential for harnessing the full power of artificial intelligence in our increasingly data-driven world.

FAQs about XAttention and Long-Context Inference

1. What is long-context inference in AI, and why is it important?

Long-context inference refers to the ability of an AI model to process and understand information from extended sequences of data or text. It is crucial because many real-world applications, such as natural language processing (NLP) tasks, require understanding context over longer passages to generate accurate responses or predictions.

2. How does XAttention differ from traditional attention mechanisms in AI?

XAttention handles long-context inputs more efficiently by computing attention only for the blocks of the attention matrix that its antidiagonal scoring marks as important. Traditional dense attention scores every pair of tokens, so its cost grows quadratically with input length. By skipping low-scoring blocks, XAttention allows faster inference without sacrificing performance.

3. What scientific principles underpin the speed transformation offered by XAttention?

The speedup comes from reducing the number of computations in the attention step. XAttention scores blocks of the attention matrix using antidiagonal sums, prunes the blocks that score low, and computes attention only on the rest. This approximation is designed to maintain accuracy while significantly decreasing processing time, making it feasible to handle larger contexts efficiently.

4. Can you provide examples of real-world applications where XAttention can be beneficial?

XAttention can be particularly advantageous in various fields such as:

- Healthcare: Analyzing lengthy patient records for better diagnosis.
- Legal: Reviewing extensive legal documents quickly.
- Customer Service: Enhancing chatbots' abilities to respond accurately based on historical conversations.

These applications benefit from improved contextual understanding due to faster processing capabilities.

5. What future trends might we expect regarding AI and contextual processing technologies like XAttention?

Future trends may include further enhancements in efficiency through hybrid models combining different types of attentional mechanisms, increased integration into everyday technology (like virtual assistants), and advancements towards achieving human-like comprehension levels across diverse domains—ultimately leading toward more intuitive user experiences in AI systems.