Stop Your AI Assistant From Guessing - Introducing interactive-mcp

You ask your AI assistant to refactor a function. It confidently rewrites half the file, breaking tests and introducing bugs. If only it had asked which optimization you wanted! That simple question could have saved an hour of debugging. We've all been there: the powerful LLM just... guesses.

Why Can't It Just Ask?

Standard LLM interactions are usually one-way: request in, response out. That model gives the AI no easy way to pause its work and ask you, the user at your local machine, for clarification in real time.

You are now part of the MCP tool

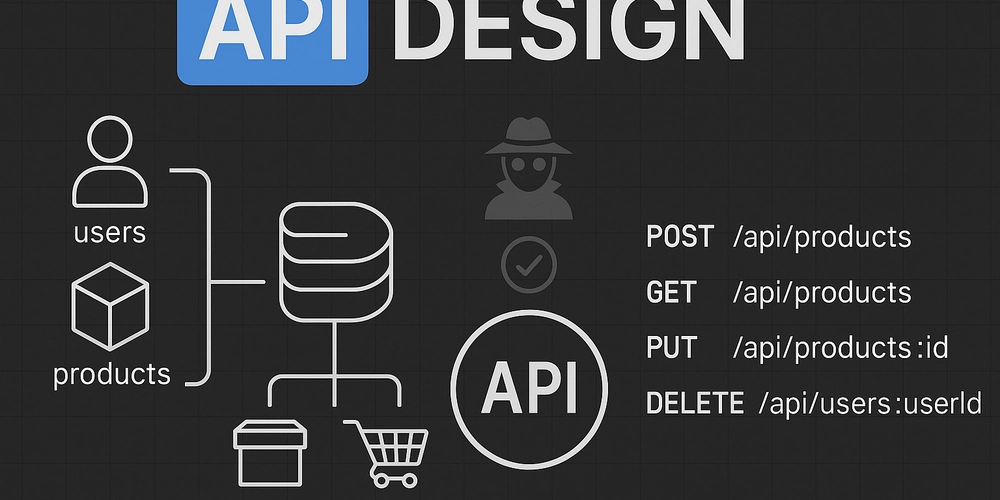

To bridge this, tools like Cursor use the Model Context Protocol (MCP). MCP allows richer communication between dev tools and AI models, including requests for user interaction.

Introducing interactive-mcp: The Bridge

This frustration led me to build interactive-mcp: a small, open-source Node.js/TypeScript server that acts as an MCP endpoint for interaction.

When an MCP-compatible AI assistant needs your input, it requests it via interactive-mcp. My server then presents the prompt or notification directly to you on your machine.
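Under the hood, MCP messages are JSON-RPC 2.0. An assistant invokes a server tool with a `tools/call` request whose `params` carry the tool `name` and its `arguments`, and the server replies with a result holding a `content` array. The sketch below only builds those message shapes. The helper names and the `message`/`predefinedOptions` argument names are illustrative assumptions, not interactive-mcp's documented schema.

```typescript
// Minimal shape of a JSON-RPC 2.0 request using MCP's "tools/call" method.
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: {
    name: string;
    arguments: Record<string, unknown>;
  };
}

// MCP tool results carry a list of content items; plain text is the simplest kind.
interface ToolCallResult {
  content: Array<{ type: "text"; text: string }>;
}

// Hypothetical helper: the request an assistant sends when it wants the user's input.
function buildToolCall(id: number, name: string, args: Record<string, unknown>): ToolCallRequest {
  return { jsonrpc: "2.0", id, method: "tools/call", params: { name, arguments: args } };
}

// Hypothetical helper: wrap the user's typed answer so it can travel back to the assistant.
function buildTextResult(answer: string): ToolCallResult {
  return { content: [{ type: "text", text: answer }] };
}

// Argument names here are assumptions for illustration only.
const request = buildToolCall(1, "request_user_input", {
  message: "Which optimization do you want: readability or speed?",
  predefinedOptions: ["readability", "speed"],
});
console.log(JSON.stringify(request));
```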

How It Works: Giving the AI a Voice

interactive-mcp exposes several MCP tools:

1. request_user_input

Asks you simple questions directly in a terminal window. The LLM sends a message (and optional predefined answers), interactive-mcp shows a command-line prompt, and your typed response goes back to the LLM.
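A stripped-down version of that prompt step might look like this, using Node's built-in `readline/promises`. It assumes predefined answers are shown as a numbered list and that typing a number picks one; it prompts in the current terminal rather than opening a new window, and `formatPrompt`, `resolveAnswer`, and `askUser` are hypothetical names, not the project's code.

```typescript
import * as readline from "node:readline/promises";
import { stdin, stdout } from "node:process";

// Hypothetical: render the question, numbering any predefined answers.
function formatPrompt(message: string, options: string[] = []): string {
  const lines = [message];
  options.forEach((opt, i) => lines.push(`  ${i + 1}) ${opt}`));
  lines.push("> ");
  return lines.join("\n");
}

// Hypothetical: a valid option number selects that option;
// anything else is passed through as free text.
function resolveAnswer(raw: string, options: string[] = []): string {
  const trimmed = raw.trim();
  const n = Number(trimmed);
  if (Number.isInteger(n) && n >= 1 && n <= options.length) {
    return options[n - 1] ?? trimmed;
  }
  return trimmed;
}

// Ask once in the current terminal and return the answer that would go back to the LLM.
async function askUser(message: string, options: string[] = []): Promise<string> {
  const rl = readline.createInterface({ input: stdin, output: stdout });
  try {
    const raw = await rl.question(formatPrompt(message, options));
    return resolveAnswer(raw, options);
  } finally {
    rl.close();
  }
}
```

Keeping formatting and answer resolution as pure functions means only `askUser` touches the terminal, which makes the rest easy to test.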

2. start/ask/stop_intensive_chat

For multi-step interactions (like configurations):

- start_intensive_chat: Opens a persistent terminal chat session.
- ask_intensive_chat: Asks follow-up questions in the same window.
- stop_intensive_chat: Closes the session.
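The start/ask/stop trio implies a small lifecycle: a session must be opened before questions can be asked, and it is gone once stopped. One way to model that is an in-memory map keyed by session ID, sketched below. The class and method names are hypothetical, and `getAnswer` is a placeholder for whatever actually shows the question in the chat window.

```typescript
import { randomUUID } from "node:crypto";

// One open chat: the questions asked so far and the answers received.
interface ChatSession {
  id: string;
  history: Array<{ question: string; answer: string }>;
}

// Hypothetical in-memory registry enforcing the start -> ask* -> stop lifecycle.
class IntensiveChatManager {
  private sessions = new Map<string, ChatSession>();

  // Like start_intensive_chat: create a session and return its ID.
  start(): string {
    const id = randomUUID();
    this.sessions.set(id, { id, history: [] });
    return id;
  }

  // Like ask_intensive_chat: rejects unless the session is open.
  // `getAnswer` is a placeholder for the code that collects the user's reply.
  async ask(id: string, question: string, getAnswer: (q: string) => Promise<string>): Promise<string> {
    const session = this.sessions.get(id);
    if (!session) throw new Error(`No open chat session: ${id}`);
    const answer = await getAnswer(question);
    session.history.push({ question, answer });
    return answer;
  }

  // Like stop_intensive_chat: returns false if the session was already closed.
  stop(id: string): boolean {
    return this.sessions.delete(id);
  }

  isOpen(id: string): boolean {
    return this.sessions.has(id);
  }
}
```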

3. message_complete_notification

Lets the AI send a simple OS notification, useful for confirming when tasks finish.

(Powered by node-notifier for cross-platform support on Windows, macOS, and Linux.)
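The notification tool can stay tiny. In this sketch the OS notifier is passed in as a function, so the handler can be exercised without popping up real notifications; with node-notifier installed, one could pass a wrapper such as `(o) => notifier.notify(o)`. The `notifyComplete` name, the `projectName` parameter, and the confirmation text are assumptions for illustration.

```typescript
// Fields handed to the notifier; node-notifier's notify() accepts
// an object with (at least) a title and a message.
interface NotificationOptions {
  title: string;
  message: string;
}

type Notify = (options: NotificationOptions) => void;

// Hypothetical handler: build the payload, hand it to the injected notifier,
// and return a short text confirmation for the assistant.
function notifyComplete(notify: Notify, projectName: string, summary: string): string {
  const options: NotificationOptions = { title: projectName, message: summary };
  notify(options);
  return `Notification sent: ${summary}`;
}
```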

The Benefits

Why use interactive-mcp?