Researchers from AWS and Intuit Propose a Zero Trust Security Framework to Protect the Model Context Protocol (MCP) from Tool Poisoning and Unauthorized Access

AI systems are becoming increasingly dependent on real-time interactions with external data sources and operational tools. These systems are now expected to perform dynamic actions, make decisions in changing environments, and access live information streams. To enable such capabilities, AI architectures are evolving to incorporate standardized interfaces that connect models with services and datasets, thereby […] The post Researchers from AWS and Intuit Propose a Zero Trust Security Framework to Protect the Model Context Protocol (MCP) from Tool Poisoning and Unauthorized Access appeared first on MarkTechPost.

AI systems are becoming increasingly dependent on real-time interactions with external data sources and operational tools. These systems are now expected to perform dynamic actions, make decisions in changing environments, and access live information streams. To enable such capabilities, AI architectures are evolving to incorporate standardized interfaces that connect models with services and datasets, thereby facilitating seamless integration. One of the most significant advancements in this area is the adoption of protocols that allow AI to move beyond static prompts and directly interface with cloud platforms, development environments, and remote tools. As AI becomes more autonomous and embedded in critical enterprise infrastructure, the importance of controlling and securing these interaction channels has grown immensely.

With these capabilities, however, comes a significant security burden. When AI is empowered to execute tasks or make decisions based on input from various external sources, the surface area for attacks expands. Several pressing problems have emerged. Malicious actors may manipulate tool definitions or inject harmful instructions, leading to compromised operations. Sensitive data, previously accessible only through secure internal systems, can now be exposed to misuse or exfiltration if any part of the AI interaction pipeline is compromised. Also, AI models themselves can be tricked into misbehaving through crafted prompts or poisoned tool configurations. This complex trust landscape, spanning the AI model, client, server, tools, and data, poses serious threats to safety, data integrity, and operational reliability.

Historically, developers have relied on broad enterprise security frameworks, such as OAuth 2.0, for access management, Web Application Firewalls for traffic inspection, and general API security measures. While these remain important, they are not tailored to the unique behaviors of the Model Context Protocol (MCP), a dynamic architecture introduced by Anthropic to provide AI models with capabilities for tool invocation and real-time data access. The inherent flexibility and extensibility of MCP make traditional static defenses insufficient. Prior research identified broad categories of threats, but lacked the granularity needed for day-to-day enterprise implementation, especially in settings where MCP is used across multiple environments and serves as the backbone for real-time automation workflows.

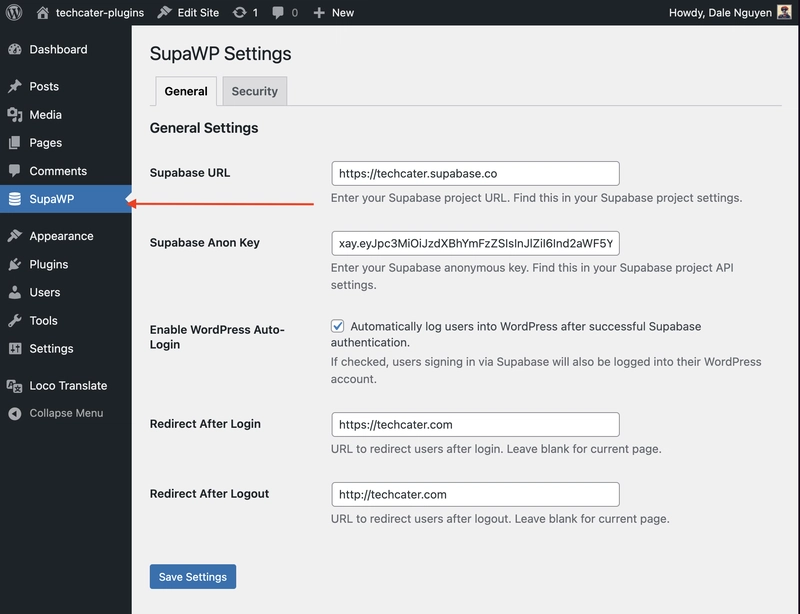

Researchers from Amazon Web Services and Intuit have designed a security framework customized for MCP’s dynamic and complex ecosystem. Their focus is not just on identifying potential vulnerabilities, but rather on translating theoretical risks into structured, practical safeguards. Their work introduces a multi-layered defense system that spans from the MCP host and client to server environments and connected tools. The framework outlines steps that enterprises can take to secure MCP environments in production, including tool authentication, network segmentation, sandboxing, and data validation. Unlike generic guidance, this approach provides fine-tuned strategies that respond directly to the ways MCP is being used in enterprise environments.

The security framework is extensive and built on the principles of Zero Trust. One notable strategy involves implementing “Just-in-Time” access control, where access is provisioned temporarily for the duration of a single session or task. This dramatically reduces the time window in which an attacker could misuse credentials or permissions. Another key method includes behavior-based monitoring, where tools are evaluated not only based on code inspection but also by their runtime behavior and deviation from normal patterns. Furthermore, tool descriptions are treated as potentially dangerous content and subjected to semantic analysis and schema validation to detect tampering or embedded malicious instructions. The researchers have also integrated traditional techniques, such as TLS encryption, secure containerization with AppArmor, and signed tool registries, into their approach, but have modified them specifically for the needs of MCP workflows.

Performance evaluations and test results back the proposed framework. For example, the researchers detail how semantic validation of tool descriptions detected 92% of simulated poisoning attempts. Network segmentation strategies reduced the successful establishment of command-and-control channels by 83% across test cases. Continuous behavior monitoring detected unauthorized API usage in 87% of abnormal tool execution scenarios. When dynamic access provisioning was applied, the attack surface time window was reduced by over 90% compared to persistent access tokens. These numbers demonstrate that a tailored approach significantly strengthens MCP security without requiring fundamental architectural changes.

One of the most significant findings of this research is its ability to consolidate disparate security recommendations and directly map them to the components of the MCP stack. These include the AI foundation models, tool ecosystems, client interfaces, data sources, and server environments. The framework addresses challenges such as prompt injection, schema mismatches, memory-based attacks, tool resource exhaustion, insecure configurations, and cross-agent data leaks. By dissecting the MCP into layers and mapping each one to specific risks and controls, the researchers provide clarity for enterprise security teams aiming to integrate AI safely into their operations.

The paper also provides recommendations for deployment. Three patterns are explored: isolated security zones for MCP, API gateway-backed deployments, and containerized microservices within orchestration systems, such as Kubernetes. Each of these patterns is detailed with its pros and cons. For example, the containerized approach offers operational flexibility but depends heavily on the correct configuration of orchestration tools. Also, integration with existing enterprise systems, such as Identity and Access Management (IAM), Security Information and Event Management (SIEM), and Data Loss Prevention (DLP) platforms, is emphasized to avoid siloed implementations and enable cohesive monitoring.

Several Key Takeaways from the Research include:

- The Model Context Protocol enables real-time AI interaction with external tools and data sources, which significantly increases the security complexity.

- Researchers identified threats using the MAESTRO framework, spanning seven architectural layers, including foundation models, tool ecosystems, and deployment infrastructure.

- Tool poisoning, data exfiltration, command-and-control misuse, and privilege escalation were highlighted as primary risks.

- The security framework introduces Just-in-Time access, enhanced OAuth 2.0+ controls, tool behavior monitoring, and sandboxed execution.

- Semantic validation and tool description sanitization were successful in detecting 92% of simulated attack attempts.

- Deployment patterns such as Kubernetes-based orchestration and secure API gateway models were evaluated for practical adoption.

- Integration with enterprise IAM, SIEM, and DLP systems ensures policy alignment and centralized control across environments.

- Researchers provided actionable playbooks for incident response, including steps for detection, containment, recovery, and forensic analysis.

- While effective, the framework acknowledges limitations like performance overhead, complexity in policy enforcement, and the challenge of vetting third-party tools.

Here is the Paper. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. Don’t Forget to join our 90k+ ML SubReddit.