Model Context Protocol: A New Standard for Defining APIs

Why APIs Need a New Standard for LLMs:

Large Language Models (LLMs) are like compressed versions of the internet — great at generating text based on context, but passive by default. They can’t take meaningful actions like sending an email or querying a database on their own.

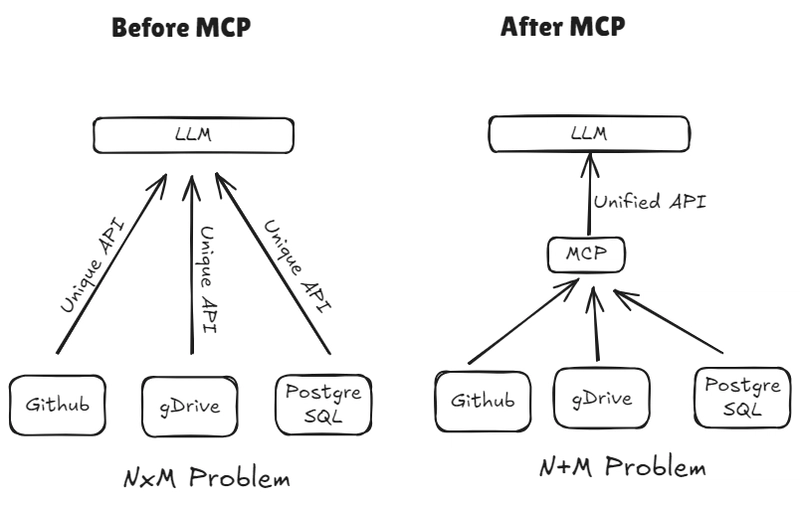

To address this, developers began connecting LLMs to external tools via APIs. While powerful, this approach quickly becomes messy: APIs change, glue code piles up, and maintaining M×N integrations (M apps × N tools) becomes a nightmare.

That’s where the Model Context Protocol (MCP) comes in.

MCP provides a standardized interface for connecting LLMs to tools, data sources, and services. Instead of custom integrations for every app-tool pair, MCP introduces a shared protocol that simplifies and scales integrations.

Think of MCP like USB for AI. Before USB, every device needed a custom connector. Similarly, before MCP, each AI app needed its own integration with every tool. MCP reduces M×N complexity to M+N:

- Tool developers implement N MCP servers (one per tool).

- AI app developers implement M MCP clients (one per app).

With this client-server model, each side builds its integration only once, and any MCP client can then talk to any MCP server.
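To make the arithmetic concrete, here is a tiny sketch. The counts of 5 apps and 10 tools are arbitrary illustrative numbers, not figures from any real ecosystem.

```python
def integrations_without_mcp(apps: int, tools: int) -> int:
    # Every app needs its own custom integration with every tool: M x N.
    return apps * tools


def integrations_with_mcp(apps: int, tools: int) -> int:
    # Each app implements one MCP client and each tool one MCP server: M + N.
    return apps + tools


if __name__ == "__main__":
    m, n = 5, 10  # illustrative numbers only
    print(integrations_without_mcp(m, n))  # 50
    print(integrations_with_mcp(m, n))     # 15
```

The gap widens quickly: adding one more tool costs M new integrations without MCP, but only one new server with it.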

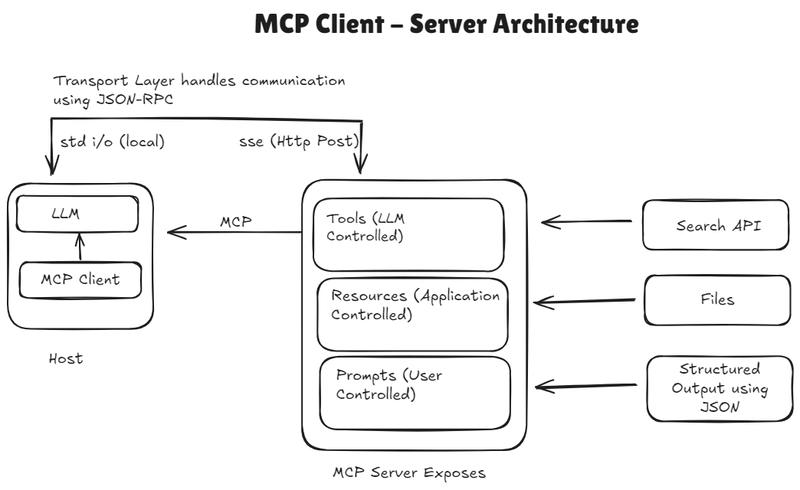

Understanding MCP’s Core Architecture:

- Hosts: These are applications like Claude Desktop, IDEs, or AI-powered tools that want to access external data or capabilities using MCP.

- Clients: Embedded within the host applications, clients maintain a one-to-one connection with MCP servers. They act as the bridge between the host and the server.

- Servers: Servers provide access to various resources, tools, and prompts. They expose these capabilities to clients, enabling seamless interaction through the MCP layer.
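The relationship between the three roles can be pictured with a toy model. The `Host`, `Client` and `Server` classes below are hypothetical stand-ins written only to show the shape of the architecture (one host, many clients, each client bound to exactly one server); they are not classes from any MCP SDK.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    # Exposes capabilities; here just a list of tool names.
    name: str
    tools: list[str] = field(default_factory=list)


@dataclass
class Client:
    # Each client is bound to a single server: the one-to-one link.
    server: Server

    def list_tools(self) -> list[str]:
        return list(self.server.tools)


@dataclass
class Host:
    # A host (desktop app, IDE, ...) creates one client per server it uses.
    clients: list[Client] = field(default_factory=list)

    def connect(self, server: Server) -> Client:
        client = Client(server)
        self.clients.append(client)
        return client

    def all_tools(self) -> list[str]:
        # The host aggregates capabilities across all of its clients.
        return [tool for c in self.clients for tool in c.list_tools()]


if __name__ == "__main__":
    host = Host()
    host.connect(Server("weather", ["fetch_weather"]))
    host.connect(Server("llms", ["add_llm"]))
    print(host.all_tools())  # ['fetch_weather', 'add_llm']
```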

Inside MCP Servers: Resources, Tools & Prompts

MCP servers expose three key components — Resources, Tools, and Prompts — each serving a unique role in enabling LLMs to interact with external systems effectively.

Resources

- Resources are data objects made available by the server to the client. They can be text-based (like source code, log files) or binary (like images, PDFs).

- These resources are application-controlled, meaning the client decides when and how to use them.

- Importantly, resources are read-only context providers. They should not perform computations or trigger side effects. If actions or side effects are needed, they should be handled through tools.
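On the wire, MCP messages are JSON-RPC 2.0. A minimal sketch of reading a resource, assuming the `resources/read` method and the `text`/`blob` content fields from the MCP specification, looks like this. The helper function names are hypothetical, and the `docs://list/llms` URI is borrowed from the server example in the hands-on section.

```python
import base64
import json


def read_resource_request(request_id: int, uri: str) -> dict:
    # JSON-RPC 2.0 request: the client asks for one resource by URI.
    return {"jsonrpc": "2.0", "id": request_id,
            "method": "resources/read", "params": {"uri": uri}}


def text_contents(uri: str, text: str, mime_type: str = "text/plain") -> dict:
    # Text resources (source code, logs) carry their payload in "text".
    return {"uri": uri, "mimeType": mime_type, "text": text}


def blob_contents(uri: str, data: bytes, mime_type: str) -> dict:
    # Binary resources (images, PDFs) are base64-encoded into "blob".
    return {"uri": uri, "mimeType": mime_type,
            "blob": base64.b64encode(data).decode("ascii")}


def read_resource_response(request_id: int, contents: list) -> dict:
    # The result only returns data; reading must not change server state.
    return {"jsonrpc": "2.0", "id": request_id,
            "result": {"contents": contents}}


if __name__ == "__main__":
    req = read_resource_request(1, "docs://list/llms")
    resp = read_resource_response(
        1, [text_contents("docs://list/llms", "example text")])
    print(json.dumps(req))
    print(json.dumps(resp))
```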

Tools

- Tools are functions that LLMs can invoke to perform specific actions, for example executing a web search or interacting with an external API.

- They enable LLMs to move beyond passive context and take real-world actions, perform computations, or fetch live data.

- Though tools are exposed by the server, they are model-driven — meaning the LLM can invoke them (usually with user approval in the loop).
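A tool invocation is a `tools/call` request carrying the tool name and JSON arguments, and the result comes back as a list of content items. The sketch below builds both messages and adds a hypothetical host-side approval gate to illustrate the "user approval in the loop" idea; the gate, the helper names and the argument values are assumptions for illustration, not part of any SDK.

```python
from typing import Optional


def call_tool_request(request_id: int, name: str, arguments: dict) -> dict:
    # JSON-RPC 2.0 request asking the server to run one named tool.
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/call",
            "params": {"name": name, "arguments": arguments}}


def call_tool_result(request_id: int, text: str, is_error: bool = False) -> dict:
    # Tool output is a list of content items; "isError" reports a tool-level
    # failure to the model instead of raising a protocol error.
    return {"jsonrpc": "2.0", "id": request_id,
            "result": {"content": [{"type": "text", "text": text}],
                       "isError": is_error}}


def forward_if_approved(request_id: int, name: str, arguments: dict,
                        user_approved: bool) -> Optional[dict]:
    # Hypothetical host-side gate: the model proposes a call, and the host
    # only sends it to the server once the user has approved it.
    if not user_approved:
        return None
    return call_tool_request(request_id, name, arguments)
```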

Prompts

- Prompts are reusable prompt templates that servers can define and offer to clients.

- These help guide LLMs and users to interact with tools and resources more effectively and consistently.

- Prompts are user-controlled — clients can present them to users for selection and usage, making interaction smoother and more intentional.
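When a user picks a prompt, the client sends `prompts/get` with the prompt name and string arguments, and the server answers with ready-to-use chat messages. This sketch mirrors the `review_code` prompt defined in the hands-on server code; the helper names and the description text are hypothetical.

```python
def get_prompt_request(request_id: int, name: str,
                       arguments: dict[str, str]) -> dict:
    # Prompt arguments are plain strings chosen or typed by the user.
    return {"jsonrpc": "2.0", "id": request_id, "method": "prompts/get",
            "params": {"name": name, "arguments": arguments}}


def render_review_code(arguments: dict[str, str]) -> dict:
    # Server side: fill the template and return it as a list of messages.
    text = f"Please review this code:\n\n{arguments['code']}"
    return {"description": "Code review prompt",
            "messages": [{"role": "user",
                          "content": {"type": "text", "text": text}}]}


if __name__ == "__main__":
    req = get_prompt_request(3, "review_code", {"code": "print('hi')"})
    print(render_review_code(req["params"]["arguments"]))
```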

MCP Servers: The Bridge to External Systems

MCP servers act as the bridge between the MCP ecosystem and external tools, systems, or data sources. They can be developed in any programming language and communicate with clients using two transport methods:

- Stdio Transport: Uses standard input/output for communication — ideal for local or lightweight processes.

- HTTP with SSE (Server-Sent Events) Transport: Uses HTTP connections for remote, web-based communication between clients and servers. (Newer revisions of the MCP specification replace this transport with "Streamable HTTP".)
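With the stdio transport, the MCP specification frames each JSON-RPC message as a single line of JSON on the server's stdin/stdout. The sketch below shows that framing, with `io.StringIO` standing in for the real process pipes; the function names are illustrative only.

```python
import io
import json


def write_message(stream, message: dict) -> None:
    # json.dumps escapes newlines inside strings, so one message = one line.
    stream.write(json.dumps(message) + "\n")


def read_messages(stream):
    # Each non-empty line on the stream is one complete JSON-RPC message.
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


if __name__ == "__main__":
    pipe = io.StringIO()
    write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "ping"})
    pipe.seek(0)
    print(list(read_messages(pipe)))
```

Newline-delimited JSON keeps the stdio transport simple: no length headers are needed, as long as no message contains a raw newline.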

How MCP Works: The Full Workflow

The Model Context Protocol follows a structured communication flow between hosts, clients, and servers. Here's how the full lifecycle plays out:

1. Initialization

- The host creates a client, which then sends an `initialize` request to the server. This request includes the client’s protocol version and its capabilities.

- The server responds with its own protocol version and a list of supported capabilities. The client then sends an `initialized` notification, completing the handshake.

2. Message Exchange

- Once initialized, the host gains access to the server’s resources, prompts, and tools.

- Here’s how interaction typically works:

  - Request: If the LLM determines that it needs to perform an action (e.g., for the user query “What’s the weather in Bangalore?”), the host instructs the client to send a request to the server, in this case to invoke the `fetch_weather` tool.

  - Response: The server processes the request by executing the appropriate tool. It then sends the result back to the client, which forwards it to the host. The host can then pass this enriched context back into the LLM, enabling it to generate a more accurate and complete response.


3. Termination

- Either the host or the server can gracefully close the connection by issuing a `close()` command.
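The three phases above can be traced as a sequence of JSON-RPC messages. This is a plain-Python sketch: `2024-11-05` is one published MCP spec revision, while the `city` argument of `fetch_weather`, the `example-host`/`weather` names and the placeholder weather text are hypothetical.

```python
PROTOCOL_VERSION = "2024-11-05"  # one published revision of the MCP spec


def negotiate_version(requested: str, supported: list[str]) -> str:
    # The server keeps the client's version if it supports it; otherwise it
    # answers with its latest version (ISO dates sort chronologically).
    return requested if requested in supported else max(supported)


def lifecycle(server_versions: list[str]) -> list[tuple[str, dict]]:
    """Return the (sender, message) sequence for one short session."""
    msgs: list[tuple[str, dict]] = []

    # 1. Initialization: request, response, then an "initialized" notification
    #    (notifications carry no "id" because no reply is expected).
    msgs.append(("client", {
        "jsonrpc": "2.0", "id": 1, "method": "initialize",
        "params": {"protocolVersion": PROTOCOL_VERSION, "capabilities": {},
                   "clientInfo": {"name": "example-host", "version": "0.1"}},
    }))
    agreed = negotiate_version(PROTOCOL_VERSION, server_versions)
    msgs.append(("server", {
        "jsonrpc": "2.0", "id": 1,
        "result": {"protocolVersion": agreed, "capabilities": {"tools": {}},
                   "serverInfo": {"name": "weather", "version": "0.1"}},
    }))
    msgs.append(("client", {"jsonrpc": "2.0",
                            "method": "notifications/initialized"}))

    # 2. Message exchange: the LLM needs the weather, so the host calls a tool.
    msgs.append(("client", {
        "jsonrpc": "2.0", "id": 2, "method": "tools/call",
        "params": {"name": "fetch_weather",
                   "arguments": {"city": "Bangalore"}},
    }))
    msgs.append(("server", {
        "jsonrpc": "2.0", "id": 2,
        "result": {"content": [{"type": "text", "text": "<weather report>"}],
                   "isError": False},
    }))

    # 3. Termination: close() shuts down the transport; the spec defines no
    #    separate shutdown message, so nothing more is appended here.
    return msgs


if __name__ == "__main__":
    for sender, message in lifecycle([PROTOCOL_VERSION]):
        print(sender, message.get("method", "(response)"))
```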

Hands-On: Building an MCP Server & Client in Python:

MCP Server:

```python
from mcp.server.fastmcp import FastMCP

# Create an MCP server
mcp = FastMCP("LLMs")


# The @mcp.resource() decorator is meant to map a URI pattern to a function that provides the resource content
@mcp.resource("docs://list/llms")
def get_all_llms_docs() -> str:
    """
    Reads and returns the content of the LLMs documentation file.

    Returns:
        str: the contents of the LLMs documentation if successful,
        otherwise an error message.
    """
    # Local path to the LLMs documentation
    doc_path = r"C:\Users\dineshsuriya_d\Documents\MCP Server\llms_full.txt"
    try:
        # Open the documentation file in read mode and return its content
        with open(doc_path, 'r') as file:
            return file.read()
    except Exception as e:
        # Return an error message if unable to read the file
        return f"error reading file : {str(e)}"

# Note: Resources are how you expose data to LLMs. They're similar to GET endpoints in a REST API - they provide data
# but shouldn't perform significant computation or have side effects


# Add a tool, will be converted into JSON spec for function calling
@mcp.tool()
def add_llm(path: str, new_llm: str) -> str:
    """
    Appends a new language model (LLM) entry to the specified file.

    Parameters:
        path (str): The file path where the LLM is recorded.
        new_llm (str): The new language model to be added.

    Returns:
        str: A success message if the LLM was added, otherwise an error message.
    """
    try:
        # Open the file in append mode and add the new LLM entry with a newline at the end
        with open(path, 'a') as file:
            file.write(new_llm + "\n")
        return f"Added new LLM to {path}"
    except Exception as e:
        # If an error occurs, return an error message detailing the exception
        return f"error writing file : {str(e)}"

# Note: Unlike resources, tools are expected to perform computation and have side effects


@mcp.prompt()
def review_code(code: str) -> str:
    return f"Please review this code:\n\n{code}"


if __name__ == "__main__":
    # Initialize and run the server
    mcp.run(transport='stdio')
```
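The comment on `@mcp.tool()` says the function is converted into a JSON spec for function calling. FastMCP derives that spec from the function's type hints and docstring on its own; the sketch below is a simplified stand-in using only `inspect`, not FastMCP's actual implementation. The type mapping covers just a few scalar types (an assumption), and `add_llm` is re-declared as a stub so the block runs without the `mcp` package.

```python
import inspect

# Simplified mapping from Python annotations to JSON Schema types.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def tool_spec(fn) -> dict:
    # Build a tools/list-style entry: name, description and inputSchema.
    sig = inspect.signature(fn)
    properties = {}
    required = []
    for name, param in sig.parameters.items():
        properties[name] = {"type": _JSON_TYPES.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # parameters without defaults are required
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "inputSchema": {"type": "object", "properties": properties,
                        "required": required},
    }


def add_llm(path: str, new_llm: str) -> str:
    """Appends a new language model (LLM) entry to the specified file."""
    return f"Added new LLM to {path}"  # stub: the real tool writes the file


if __name__ == "__main__":
    print(tool_spec(add_llm))
```

This schema is what lets a host hand the tool to any function-calling LLM without hand-written glue code.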

MCP Clients:

- MCP clients are part of hosts such as Claude Desktop and Cursor.

```python
import asyncio

from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client

# Commands for running/connecting to MCP Server
# (assumes the server above is saved as example_server.py)
server_params = StdioServerParameters(
    command="python",  # Executable
    args=["example_server.py"],  # Optional command line arguments
    env=None,  # Optional environment variables
)


async def run():
    async with stdio_client(server_params) as (read, write):
        async with ClientSession(read, write) as session:
            # Initialize the connection
            await session.initialize()

            # List available prompts
            prompts = await session.list_prompts()

            # Get a prompt (review_code from the server above)
            prompt = await session.get_prompt(
                "review_code", arguments={"code": "print('hello')"}
            )

            # List available resources
            resources = await session.list_resources()

            # List available tools
            tools = await session.list_tools()

            # Read a resource; the result holds a list of contents
            resource = await session.read_resource("docs://list/llms")

            # Call a tool (file path and model name are placeholders)
            result = await session.call_tool(
                "add_llm", arguments={"path": "llms.txt", "new_llm": "example-llm"}
            )


if __name__ == "__main__":
    # async with is only valid inside a coroutine, so drive it with asyncio
    asyncio.run(run())
```

Why Use MCP? Key Advantages & Benefits

MCP brings a number of practical benefits that make it easier to build and scale LLM-based applications:

- No Need to Manage API Changes: MCP servers are often maintained by the service providers themselves, so when an API changes or a tool is updated, those changes are handled inside the MCP server rather than in every application that uses it. This makes LLM integrations more reliable and easier to maintain.

- Automatic Server Spin-Up: For locally run (stdio) servers, the host launches the server process itself when it creates a client and connects to it. Developers don't need to start or manage the server process manually; they just provide the necessary configuration to the MCP-compatible host (like Claude Desktop), and it takes care of the rest.

- Plug-and-Play Model Switching: With MCP in place, switching between different models becomes much easier. Since all tool and resource integrations are standardized and maintained by their providers, any compatible model can use them without extra setup.

- Rich Ecosystem of Integrations: A growing number of integrations are already available in the MCP ecosystem, offering access to powerful tools and high-quality resources. Any MCP-compatible host application can use them through its clients.
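As an example of the configuration mentioned under Automatic Server Spin-Up: Claude Desktop reads server definitions from a JSON file (`claude_desktop_config.json`) under an `mcpServers` key, each entry giving the `command` and `args` used to launch the server. The sketch below builds such a structure in Python; the server name `llms` and the script path are placeholders, and other hosts may expect a different format.

```python
import json


def build_host_config(servers: dict[str, str]) -> dict:
    # Map each server name to the command the host will run to launch it.
    # The host starts these stdio servers itself when it creates a client.
    return {
        "mcpServers": {
            name: {"command": "python", "args": [script]}
            for name, script in servers.items()
        }
    }


if __name__ == "__main__":
    config = build_host_config({"llms": "/path/to/example_server.py"})
    print(json.dumps(config, indent=2))
```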

Explore: Community & Pre-Built MCP Servers

Looking to dive deeper or get started fast? Check out these great community-driven resources and ready-to-use MCP servers: