MCP for your API

Model Context Protocol (MCP) is an exciting new way to interact with APIs. Publishing an MCP server helps users interact directly with your API using natural language.

Our mission is to improve the API layer of the internet, and we’re excited to announce that you can now use Stainless to automatically generate MCP servers (for free) from your OpenAPI spec alongside your SDKs.

The MCP server generated by Stainless lives alongside your TypeScript SDK and is published as a separate npm package to minimize bundle sizes. It provides composable building blocks to create custom tools, controls to define exactly which parts of the API you want to expose, and an easy way for users to filter which tools they want to use.

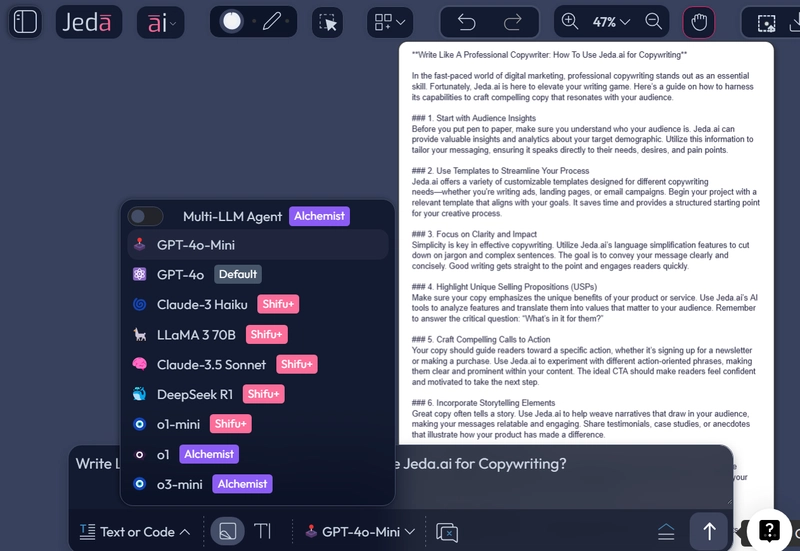

For example, Modern Treasury’s new MCP server enables their customers to do one-off banking operations with simple language:

In the demo above, Claude finds an unpaid invoice, voids it, then creates a new one with a different discount using just conversational prompts and the list_invoices, update_invoice, and create_invoice tools.

Getting started

Adding MCP to your Stainless project is simple.

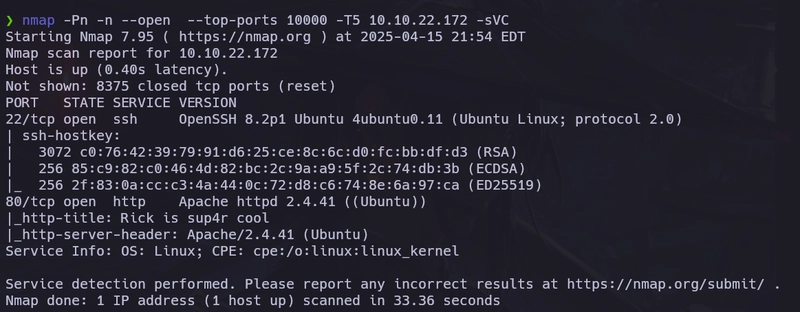

Navigate to your Stainless project dashboard, click Add SDKs, then select MCP Server from the options.

The generated MCP server can be tested immediately with MCP clients like Claude Desktop:

    {
      "mcpServers": {
        "your_api": {
          "command": "npx",
          "args": ["-y", "your-api-mcp"],
          "env": {
            "YOUR_API_KEY": "your-api-key-here"
          }
        }
      }
    }

Once configured, you can ask the LLM to interact with your API directly: "What products do we have available?" or "Create a new product called MCP Demo." The LLM will select the appropriate endpoint, pass the necessary parameters, and return the results.
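To make that flow concrete, here is a rough sketch of the message a client might send once the LLM has picked a tool. The `tools/call` method and the JSON-RPC 2.0 envelope come from the MCP spec; the `buildToolCall` helper and the `name` argument on `create_product` are hypothetical and only illustrate the shape.

```typescript
// Shape of an MCP "tools/call" request (JSON-RPC 2.0).
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

// Hypothetical helper: builds the request a client might send after the LLM selects a tool.
function buildToolCall(
  id: number,
  toolName: string,
  args: Record<string, unknown>
): ToolCallRequest {
  return {
    jsonrpc: "2.0",
    id,
    method: "tools/call",
    params: { name: toolName, arguments: args },
  };
}

// "Create a new product called MCP Demo." might become a call like this
// (the argument names are assumed, not taken from a real API):
const toolCall = buildToolCall(1, "create_product", { name: "MCP Demo" });
console.log(JSON.stringify(toolCall, null, 2));
```

The server runs the matching endpoint and returns the result to the client, which the LLM then summarizes for the user.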

By default, every endpoint is exposed (e.g., POST /products would become a create_product tool). You can fine-tune this by setting enable_all_resources: false in the mcp_server: section of your Stainless configuration and adding specific resources or endpoints with mcp: true.
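The selection rule can be modeled in a few lines. This is only a sketch of the behavior described above, not Stainless's actual implementation: the `Endpoint` and `McpSettings` types, the `exposedTools` function, and the sample endpoints are all hypothetical stand-ins for the `enable_all_resources` and `mcp: true` settings.

```typescript
// Hypothetical model of the selection rule described above; not Stainless's actual code.
interface Endpoint {
  tool: string;      // e.g. "create_product" for POST /products
  resource: string;  // e.g. "products"
  mcp?: boolean;     // stands in for `mcp: true` on a single endpoint
}

interface McpSettings {
  enableAllResources: boolean; // stands in for `enable_all_resources`
  mcpResources: string[];      // resources marked with `mcp: true`
}

// Returns the tool names that would be exposed under the given settings.
function exposedTools(endpoints: Endpoint[], settings: McpSettings): string[] {
  return endpoints
    .filter(
      (e) =>
        settings.enableAllResources ||
        e.mcp === true ||
        settings.mcpResources.indexOf(e.resource) !== -1
    )
    .map((e) => e.tool);
}

const endpoints: Endpoint[] = [
  { tool: "create_product", resource: "products" },
  { tool: "list_products", resource: "products" },
  { tool: "delete_account", resource: "accounts" },
  { tool: "list_accounts", resource: "accounts", mcp: true },
];

// Default: everything is exposed.
const allTools = exposedTools(endpoints, { enableAllResources: true, mcpResources: [] });

// Opt-in: only the `products` resource plus the one endpoint flagged individually.
const selectedTools = exposedTools(endpoints, {
  enableAllResources: false,
  mcpResources: ["products"],
});

console.log(allTools);
console.log(selectedTools);
```

In the opt-in case, `delete_account` is left out because neither it nor its resource is flagged.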

Users can also tailor which tools they would like to expose to the model with options like --tool list_accounts, --resource accounts, or --operation read to reduce context size or prevent access to sensitive data.
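As an illustration of how such flags could narrow the tool list, here is a minimal sketch. The flag names come from the options above; everything else is an assumption, including the `Tool` metadata, the `parseFilters` and `filterTools` helpers, and the rule that a tool must pass every kind of filter supplied. The generated server may combine filters differently.

```typescript
// Hypothetical tool metadata; the real generated server may track different fields.
interface Tool {
  name: string;
  resource: string;
  operation: "read" | "write";
}

interface Filters {
  tools: string[];
  resources: string[];
  operations: string[];
}

// Collects repeated --tool, --resource, and --operation flags from an argv-style list.
function parseFilters(argv: string[]): Filters {
  const filters: Filters = { tools: [], resources: [], operations: [] };
  for (let i = 0; i + 1 < argv.length; i++) {
    const value = argv[i + 1];
    if (argv[i] === "--tool") filters.tools.push(value);
    else if (argv[i] === "--resource") filters.resources.push(value);
    else if (argv[i] === "--operation") filters.operations.push(value);
  }
  return filters;
}

// An empty filter list means "no restriction" for that kind of filter.
function matches(allowed: string[], value: string): boolean {
  return allowed.length === 0 || allowed.indexOf(value) !== -1;
}

// Assumption: a tool is kept only if it passes every kind of filter that was supplied.
function filterTools(tools: Tool[], f: Filters): Tool[] {
  return tools.filter(
    (t) =>
      matches(f.tools, t.name) &&
      matches(f.resources, t.resource) &&
      matches(f.operations, t.operation)
  );
}

const tools: Tool[] = [
  { name: "list_accounts", resource: "accounts", operation: "read" },
  { name: "update_account", resource: "accounts", operation: "write" },
  { name: "list_invoices", resource: "invoices", operation: "read" },
];

const visible = filterTools(
  tools,
  parseFilters(["--resource", "accounts", "--operation", "read"])
);
console.log(visible.map((t) => t.name)); // only read-only account tools remain
```

Fewer tools means fewer tool descriptions in the model's context, and write operations never reach the model when only `read` is allowed.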

Deploying remote MCP servers to Cloudflare Workers

The examples above are great for devs using local AI clients. But for non-devs using web apps, local MCP servers that rely on API keys for auth aren’t practical.

This is where remote MCP servers come in. Remote servers are a newer part of the Model Context Protocol spec, and they enable OAuth-based authentication between clients and servers. While client support is still nascent, you can use this today by deploying your Stainless-generated tools to a Cloudflare Worker.

The worker can handle OAuth with your existing provider or you can implement a custom flow directly in the worker. Check out the docs for more info.
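For a feel of where authentication sits in a worker, here is a deliberately small sketch of the request-side check only. It is not the Stainless or Cloudflare implementation: `bearerToken`, `isValidToken`, and the `worker` object are hypothetical, the token check is a placeholder, and the OAuth flow itself (redirects, token issuance) is left to your provider or custom code.

```typescript
// Pulls the token out of an "Authorization: Bearer <token>" header, or returns null.
function bearerToken(header: string | null): string | null {
  if (header === null) return null;
  const match = /^Bearer\s+(\S+)$/i.exec(header.trim());
  return match ? match[1] : null;
}

// Hypothetical placeholder: a real worker would validate the token with its OAuth provider.
function isValidToken(token: string): boolean {
  return token.length > 0;
}

// Worker-style handler that rejects unauthenticated requests before any tool runs.
// In a Worker module this object would be the default export.
const worker = {
  fetch(request: Request): Response {
    const token = bearerToken(request.headers.get("Authorization"));
    if (token === null || !isValidToken(token)) {
      return new Response("Unauthorized", {
        status: 401,
        headers: { "WWW-Authenticate": "Bearer" },
      });
    }
    // Placeholder: hand the request to the MCP server's tool handling here.
    return new Response("ok");
  },
};
```

Keeping the check at the edge means users never handle an API key themselves; the worker exchanges their OAuth session for whatever credentials your API expects.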

MCP server generation is currently experimental and free for all Stainless users.

Get started today at stainless.com/login.