Mastering AI Context: How MCP Transforms Conversations into Seamless Interactions

The Frustration of Talking to AI

We've all been there: you ask an AI assistant a simple question and get a response that makes no sense. Imagine asking, “What’s the weather like today?” and the assistant replies, “Can you clarify what you mean by ‘weather’?” It’s frustrating, right?

Despite advancements in AI, many models still struggle with context retention, leading to repetitive, irrelevant, or even nonsensical responses. Whether it's a chatbot, voice assistant, or recommendation engine, the inability to remember and process previous interactions can ruin the user experience.

This is a common challenge in industries where AI-driven interactions are crucial. Businesses implementing customer support chatbots, AI-powered search engines, or virtual assistants often find that the system fails to understand and retain user context. This is where the Model Context Protocol (MCP) comes in: an approach aimed at making AI systems more contextually aware.

What is MCP (Model Context Protocol)?

MCP is a protocol designed to enhance context retention, state management, and interaction consistency in AI-driven systems. It aims to help models retain and use relevant historical data, leading to more coherent, accurate, and stateful responses.

MCP achieves this by implementing three core principles:

- Session Persistence – Keeping track of past interactions across requests.

- Contextual Hierarchy – Assigning priority to contextual elements to optimize responses.

- Dynamic Adaptation – Allowing models to evolve based on real-time interactions.

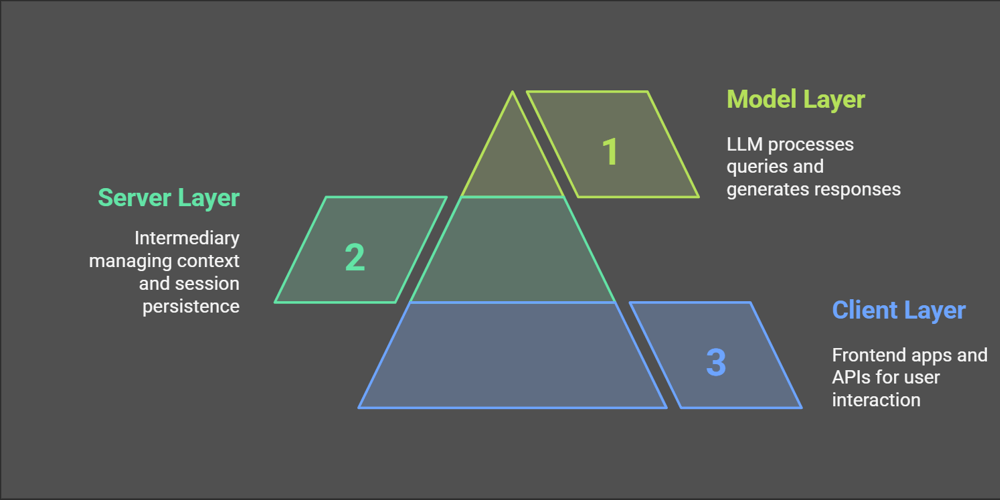

Core Architecture of MCP

The Model Context Protocol (MCP) is built on a flexible, extensible architecture that enables seamless communication between LLM applications and integrations. The architecture consists of three main components:

1. Client Layer (User Interaction)

- This layer includes frontend applications, chat interfaces, or APIs that interact with the user.

- It sends user queries and receives AI-generated responses.

2. Server Layer (MCP Processing & Context Handling)

- MCP acts as an intermediary between the client and the LLM.

- It manages session persistence, stores past interactions, and prioritizes relevant context before forwarding requests to the LLM.

3. Model Layer (LLM & AI Processing)

- The LLM (Large Language Model) processes the query, leveraging stored context to generate a meaningful response.

- It sends the response back to MCP, which refines it before delivering it to the client.

How MCP Connects Clients, Servers, and LLMs

- User Query → Client sends request to MCP.

- Context Processing → MCP retrieves relevant context from past interactions.

- LLM Request → MCP forwards the processed request to the LLM.

- LLM Response → The model generates a response based on the provided context.

- MCP Refinement → MCP verifies and refines the response for coherence.

- Final Response → The refined response is sent back to the client.
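The flow above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: `ContextServer`, `handle`, and `echo_llm` are hypothetical names, the model is a stand-in function, and the "refinement" step is reduced to trimming whitespace.

```python
from typing import Callable, Dict, List

# Hypothetical type: any function that maps a prompt string to a reply string.
LLM = Callable[[str], str]


class ContextServer:
    """Illustrative middle layer: stores history and wraps calls to the model."""

    def __init__(self, llm: LLM) -> None:
        self.llm = llm
        self.history: Dict[str, List[str]] = {}

    def handle(self, user_id: str, query: str) -> str:
        # Context Processing: retrieve past interactions for this user
        past = self.history.setdefault(user_id, [])
        # LLM Request: forward the query together with that context
        prompt = "\n".join(past + [f"User: {query}"])
        # LLM Response: the model generates a reply
        reply = self.llm(prompt)
        # Refinement: reduced here to trimming whitespace (an assumption)
        reply = reply.strip()
        # Remember both turns so the next request can see them
        past.extend([f"User: {query}", f"Assistant: {reply}"])
        # Final Response: hand the reply back to the client
        return reply


def echo_llm(prompt: str) -> str:
    """Stand-in model that reports how many prompt lines it received."""
    return f" saw {len(prompt.splitlines())} line(s) "


server = ContextServer(echo_llm)
first = server.handle("alice", "What's the weather like today?")
second = server.handle("alice", "And tomorrow?")
print(first)   # the model saw only the new query
print(second)  # the model saw the earlier exchange plus the new query
```

Passing the model in as a plain callable keeps the sketch testable without network access; a real deployment would swap `echo_llm` for a call to an actual LLM.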

Code Example: Implementing an MCP-based API with FastAPI

```python
from typing import Dict, List

from fastapi import FastAPI
from openai import AsyncOpenAI  # openai>=1.0; reads OPENAI_API_KEY from the environment
from pydantic import BaseModel

app = FastAPI()
client = AsyncOpenAI()

# In-memory message history per user (lost on restart; use a database in production)
context_store: Dict[str, List[dict]] = {}


class ChatRequest(BaseModel):
    user_id: str
    query: str


@app.post("/chat")
async def chat_endpoint(request: ChatRequest):
    # Retrieve the stored conversation for this user
    history = context_store.setdefault(request.user_id, [])

    # Append the new query to the conversation
    history.append({"role": "user", "content": request.query})

    # Send the full conversation to the LLM (OpenAI, local LLM, etc.)
    response = await client.chat.completions.create(model="gpt-4", messages=history)
    bot_response = response.choices[0].message.content

    # Store the reply so future queries see both sides of the conversation
    history.append({"role": "assistant", "content": bot_response})
    return {"response": bot_response}
```
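One practical concern with any stored context is that it grows with every request, while the model can only accept a limited amount of input. If the context is kept as a list of chat messages, a simple way to bound it is to keep only the most recent messages that fit a budget. The sketch below is an illustration only: `trim_history` and `max_chars` are hypothetical names, and character count is used as a rough stand-in for a real token count.

```python
from typing import Dict, List

Message = Dict[str, str]


def trim_history(messages: List[Message], max_chars: int) -> List[Message]:
    """Keep the most recent messages whose combined content fits in max_chars."""
    kept: List[Message] = []
    used = 0
    # Walk backwards so the newest messages are considered first
    for message in reversed(messages):
        size = len(message["content"])
        if used + size > max_chars:
            break
        kept.append(message)
        used += size
    kept.reverse()  # restore chronological order
    return kept


history = [
    {"role": "user", "content": "Hi, my order is late."},
    {"role": "assistant", "content": "Sorry to hear that."},
    {"role": "user", "content": "Can you check order 1234?"},
]
# With a 50-character budget the oldest message no longer fits
recent = trim_history(history, max_chars=50)
print([m["role"] for m in recent])
```

Dropping the oldest turns first is the simplest policy; summarizing older turns instead of discarding them is a common alternative.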

How MCP Solves Industry-Wide AI Challenges

Many industries have AI applications that struggle with user context. Here’s how MCP can help across different domains:

1. Session Persistence with Stateful Tokens

Customer service chatbots often treat every user query as independent, leading to disjointed conversations. MCP-based token management systems ensure that interactions remain connected across sessions.

Implementation in Python

```python
class ChatbotSession:
    def __init__(self):
        self.session_data = {}

    def store_context(self, user_id, key, value):
        if user_id not in self.session_data:
            self.session_data[user_id] = {}
        self.session_data[user_id][key] = value

    def get_context(self, user_id, key):
        return self.session_data.get(user_id, {}).get(key, None)
```
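The class above keys context by user ID. To tie context to a session token instead, one option is to issue an opaque token per session and expire it after a period of inactivity. The following is a hedged sketch of that idea, not a prescribed design: `TokenSessionStore`, `ttl_seconds`, and the injectable `clock` parameter are all assumptions made for illustration.

```python
import secrets
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TokenSessionStore:
    """Maps opaque session tokens to per-session context, with expiry."""

    def __init__(self, ttl_seconds: float = 1800.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        # token -> (expiry time, context dict)
        self._sessions: Dict[str, Tuple[float, Dict[str, Any]]] = {}

    def create_session(self) -> str:
        """Issue a new random token with an empty context."""
        token = secrets.token_urlsafe(16)
        self._sessions[token] = (self.clock() + self.ttl_seconds, {})
        return token

    def get_context(self, token: str) -> Optional[Dict[str, Any]]:
        """Return the session's context, or None if unknown or expired."""
        entry = self._sessions.get(token)
        if entry is None:
            return None
        expires_at, context = entry
        if self.clock() >= expires_at:
            del self._sessions[token]
            return None
        # Sliding expiry: each access extends the session's lifetime
        self._sessions[token] = (self.clock() + self.ttl_seconds, context)
        return context


store = TokenSessionStore(ttl_seconds=60)
token = store.create_session()
store.get_context(token)["issue"] = "late delivery"
print(store.get_context(token))
```

The client would send the token back with each request, so the server can find the right context without trusting a client-supplied user ID.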

2. Contextual Hierarchy for Response Prioritization

Before MCP, AI-powered search engines and chatbots would sometimes prioritize irrelevant information. MCP helps assign weights to different context layers (session context, user intent, and past interactions) to ensure the most relevant response is provided.

Context Management with Weighted Prioritization

```python
class ContextManager:
    def __init__(self):
        self.context_priority = {}

    def update_context(self, user_id, context, weight):
        if user_id not in self.context_priority:
            self.context_priority[user_id] = []
        self.context_priority[user_id].append((context, weight))
        self.context_priority[user_id].sort(key=lambda x: x[1], reverse=True)
```
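Once context items carry weights, the next step is deciding which of them actually reach the model. A minimal sketch, assuming context is stored as `(text, weight)` pairs as in the class above: `select_context` and `build_prompt` are hypothetical helpers, and the `Context:` / `Question:` prompt layout is only one possible format.

```python
import heapq
from typing import List, Tuple

WeightedContext = Tuple[str, float]


def select_context(items: List[WeightedContext], k: int) -> List[str]:
    """Return the text of the k highest-weighted context items."""
    top = heapq.nlargest(k, items, key=lambda item: item[1])
    return [text for text, _ in top]


def build_prompt(items: List[WeightedContext], query: str, k: int = 2) -> str:
    """Prefix the query with the most relevant context, highest weight first."""
    lines = [f"Context: {text}" for text in select_context(items, k)]
    lines.append(f"Question: {query}")
    return "\n".join(lines)


items = [
    ("User is browsing laptops", 0.4),
    ("User asked about battery life", 0.9),
    ("User visited the site last month", 0.1),
]
prompt = build_prompt(items, "Which model do you recommend?")
print(prompt)
```

Capping the prompt at the top `k` items keeps low-weight context, such as last month's visit, from crowding out what the user just asked about.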

3. Dynamic Adaptation for Personalized Conversations

Many recommendation engines struggle to personalize content efficiently. MCP-powered adaptive memory units ensure that AI systems learn user preferences over time, creating a tailored experience.

AI-Based Adaptive Learning with Memory Storage

```python
import random


class AdaptiveAI:
    def __init__(self):
        self.user_preferences = {}

    def update_preference(self, user_id, category):
        if user_id not in self.user_preferences:
            self.user_preferences[user_id] = {}
        self.user_preferences[user_id][category] = self.user_preferences[user_id].get(category, 0) + 1

    def recommend(self, user_id):
        if user_id in self.user_preferences:
            return max(self.user_preferences[user_id], key=self.user_preferences[user_id].get)
        return random.choice(["General Tech", "AI", "Cybersecurity"])
```
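A plain counter treats an interaction from months ago the same as one from a minute ago. One way to let preferences shift over time is to fade older scores on every update. The variant below is a sketch of that idea rather than part of the approach described above: `DecayingPreferences` and its `decay` factor are assumptions introduced for illustration.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, Optional


class DecayingPreferences:
    """Preference scores where recent interactions count more than old ones."""

    def __init__(self, decay: float = 0.5) -> None:
        self.decay = decay  # multiplier applied to old scores on each update
        self.scores: DefaultDict[str, Dict[str, float]] = defaultdict(dict)

    def update_preference(self, user_id: str, category: str) -> None:
        prefs = self.scores[user_id]
        # Fade every existing score, then reward the category just seen
        for name in prefs:
            prefs[name] *= self.decay
        prefs[category] = prefs.get(category, 0.0) + 1.0

    def recommend(self, user_id: str) -> Optional[str]:
        """Return the highest-scoring category, or None for an unknown user."""
        prefs = self.scores.get(user_id)
        if not prefs:
            return None
        return max(prefs, key=prefs.get)


engine = DecayingPreferences(decay=0.5)
for category in ["AI", "AI", "AI", "Cybersecurity"]:
    engine.update_preference("alice", category)
# A raw count would still favor "AI" (3 vs 1); with decay the latest interest wins
print(engine.recommend("alice"))
```

The decay factor controls how quickly old interests fade: values near 1 behave like a plain counter, while values near 0 follow only the most recent interaction.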

Why MCP Matters for Every AI-Powered System

MCP isn’t just useful for chatbots; it can improve the efficiency of various AI applications:

- Voice Assistants (e.g., Siri, Alexa) to maintain conversation state.

- Customer Support Bots to remember user issues across sessions.

- Recommendation Engines for personalized content suggestions.

- Enterprise AI Solutions for consistent document and query handling.

Conclusion

By leveraging Model Context Protocol (MCP), businesses and developers can transform AI systems into highly context-aware, adaptive, and efficient assistants. Whether it’s in customer support, AI-driven search engines, or recommendation systems, MCP ensures that AI understands and responds intelligently, creating a seamless user experience.

If you’ve ever been frustrated with AI forgetting previous interactions, implementing MCP might be the breakthrough you need!