How to Run Models Locally: A Step-by-Step Guide for Non-Techies

Have you ever wondered whether you can use AI without exposing your data or giving up control over what happens to your work? Running AI models locally on your own machine is now not only possible but increasingly convenient. This guide shows you how to set up and use a local large language model (LLM) that works offline, so you can explore what AI can do without compromising your privacy.

Step 1: Download Ollama

First things first—download and install Ollama. It’s a free tool that lets you run AI models locally on your machine. Here’s how:

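- Go to the Ollama website (ollama.com).

- Choose your operating system (Windows, macOS, or Linux) and download the installer. On Linux, the site gives you a one-line install command to paste into a terminal instead of an installer file.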

- Once downloaded, run the installer.

That’s it! Ollama is now installed on your machine.

Step 2: Verify Ollama is Running

Before we jump into running a model, we need to make sure Ollama installed correctly. This is dead simple but requires opening a terminal. If you're on Windows and unsure which terminal to use, Command Prompt works fine. Once you're in the terminal, type the following to confirm the ollama command is available:

ollama

Press Enter and you should see usage information for the ollama command. If you instead get an error such as "command not found" (or, on Windows, a message that 'ollama' is not recognized), close and reopen the terminal so it picks up the new install, then try again. If it still fails, double-check that the installer finished successfully.
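To go one step further and confirm that the Ollama background service is actually answering, a check along these lines may help. It is a minimal sketch for a bash-style shell (macOS, Linux, or Git Bash/WSL on Windows). It assumes curl is installed and that Ollama is listening on its default address, http://localhost:11434. The function names check_ollama_cli and check_ollama_server are made up for this example.

```shell
# Report whether the ollama command is installed and on the PATH.
check_ollama_cli() {
  if command -v ollama >/dev/null 2>&1; then
    echo "ollama command found:"
    ollama --version
  else
    echo "ollama command not found: reopen the terminal or reinstall"
  fi
}

# Report whether the background server answers on the default port.
# Assumption: default address localhost:11434 and curl is available.
check_ollama_server() {
  if curl -s --max-time 3 http://localhost:11434 >/dev/null 2>&1; then
    echo "Ollama server is responding"
  else
    echo "Ollama server is not responding (try opening the Ollama app)"
  fi
}

check_ollama_cli
check_ollama_server
```

In Windows Command Prompt, the two underlying commands (ollama --version and curl http://localhost:11434) should also work when typed directly, without the surrounding functions.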

Step 3: Choose a Model

Now that Ollama is running, it’s time to pick a model! Ollama's model library offers a wide range of pre-trained models that you can download by name. Here are a few popular options:

- deepseek-r1 - A reasoning-focused model that works through a problem step by step before answering.

- llama3.2 - Great for text chat and code generation.

- mistral:instruct - Fine-tuned for conversational contexts (my personal favorite).

If you’re not sure which one to choose, start with something small and multi-purpose, such as the 1B-parameter version of llama3.2 (llama3.2:1b). Smaller models download faster and need less memory.
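If you would rather download a model first and chat later, the pull and list subcommands can be used roughly as sketched here. The MODEL variable is just a name chosen for this example, and the snippet assumes a bash-style shell. In Command Prompt, type the two ollama commands directly with the model name filled in.

```shell
# MODEL is an illustrative variable name; llama3.2:1b is the
# 1B-parameter tag of llama3.2.
MODEL="llama3.2:1b"

if command -v ollama >/dev/null 2>&1; then
  ollama pull "$MODEL"   # download only, without starting a chat
  ollama list            # show the models now stored on this machine
else
  echo "ollama is not installed; skipping download of $MODEL"
fi
```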

Step 4: Download and Run your Model

You can download (pull) and run a model as separate steps, but a single command does both: it downloads the model if you don't have it yet, then starts a chat.

ollama run deepseek-r1

(Replace deepseek-r1 with the name of the model you picked.)
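The run subcommand also accepts a prompt on the command line, in which case it prints one answer and exits instead of opening a chat. A small sketch, assuming you picked llama3.2 and are using a bash-style shell (the MODEL and PROMPT variable names are illustrative only):

```shell
# Illustrative variables: swap in the model you chose and your own question.
MODEL="llama3.2"
PROMPT="Explain what a local language model is in one sentence."

if command -v ollama >/dev/null 2>&1; then
  # One-shot mode: answer the prompt, then return to the terminal.
  ollama run "$MODEL" "$PROMPT"
else
  echo "ollama is not installed; cannot run $MODEL"
fi
```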

Step 5: Done!

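Wait for the download to finish (models are often several gigabytes, so this can take a while), and voilà: you are now chatting with your very own local AI language model. Type a message and press Enter to start using it, and type /bye when you want to leave the chat.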
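Once you have tried a model or two, it can be handy to see what is loaded and to free up disk space. The following is a rough sketch for a bash-style shell. The function name show_loaded_models is made up for this example, and the delete command is left commented out so nothing is removed by accident.

```shell
# Show which models are currently loaded in memory.
show_loaded_models() {
  if command -v ollama >/dev/null 2>&1; then
    echo "Models loaded in memory:"
    ollama ps
  else
    echo "ollama command not found"
  fi
}

show_loaded_models

# To delete a downloaded model you no longer need, remove the leading
# "#" and adjust the name:
# ollama rm deepseek-r1
```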

Optional: Use Integrated Tools

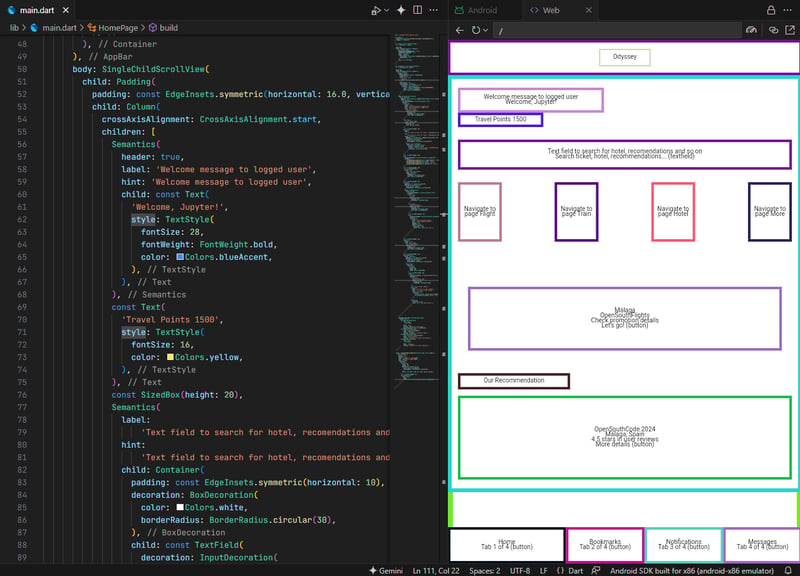

If you want to use AI within your applications, you may want to check out some of the local LLM integrations for IDEs, note-taking apps, and more. Plenty of tools work with Ollama and make using local models even easier:

- Msty - A free chat app built specifically for Ollama users.

- Continue.dev - A powerful alternative to GitHub Copilot.

- Open WebUI - A self-hosted interface similar to ChatGPT.

Once installed, these tools can typically connect to your local Ollama models with little or no extra configuration.
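Integrations like these generally talk to Ollama through the HTTP API it serves on your own machine. As a rough sketch of what such a request looks like, the snippet below sends a single prompt with curl. It assumes the default address http://localhost:11434, that llama3.2 has already been downloaded, and that curl is installed. OLLAMA_URL and BODY are names chosen for this example.

```shell
# Assumed default address of the local Ollama API.
OLLAMA_URL="http://localhost:11434/api/generate"

# Request body: which model to use, the prompt, and "stream": false
# so the reply comes back as one JSON object.
BODY='{"model": "llama3.2", "prompt": "Say hello in five words.", "stream": false}'

# Send the request; print a hint if the server cannot be reached.
curl -s --max-time 120 "$OLLAMA_URL" -d "$BODY" \
  || echo "Could not reach Ollama at $OLLAMA_URL"
```

If the request succeeds, the model's answer is in the response field of the returned JSON.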
