FastMCP: Simplifying AI Context Management with the Model Context Protocol

As AI applications grow more sophisticated, integrating external data sources and tools efficiently becomes crucial. The Model Context Protocol (MCP) offers a standardized way to connect AI applications with local and remote resources. FastMCP, a Python SDK, simplifies implementing MCP by letting developers build MCP clients and servers with little boilerplate. In this post, we explore what FastMCP is, how it works, and why it is useful for AI-powered applications.

Understanding the Model Context Protocol (MCP)

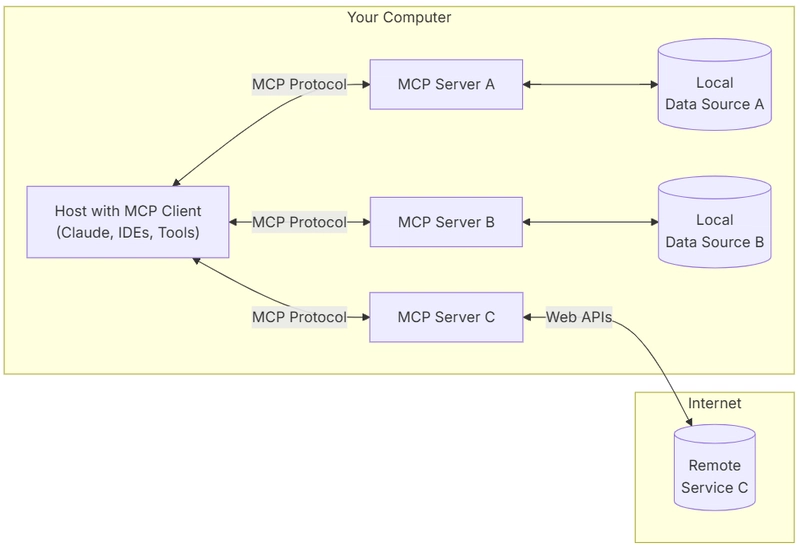

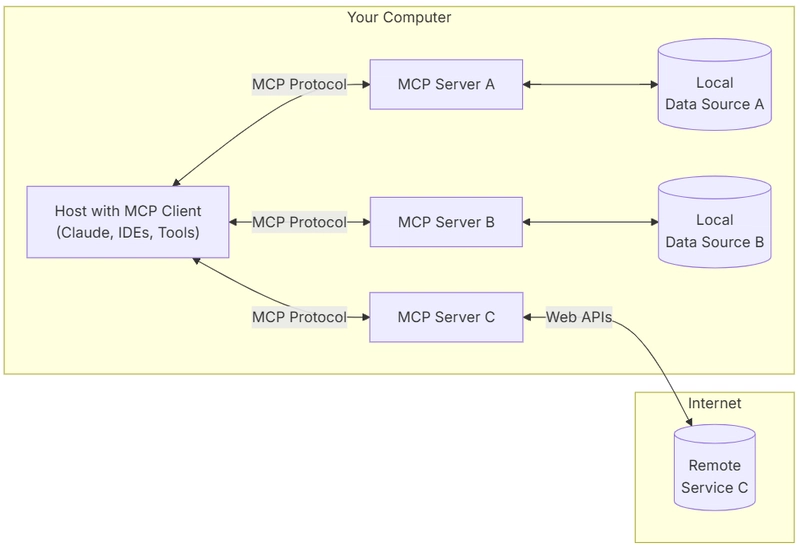

MCP is designed to standardize the way AI models access external data and tools. Instead of relying on users to copy and paste information manually, AI applications can use MCP to fetch relevant context on demand, which helps them give more accurate and better-informed responses.

Credit - modelcontextprotocol.io

Key Components of MCP:

- MCP Hosts: Applications like Claude Desktop, IDEs, or AI tools that use MCP to access data.

- MCP Clients: Protocol clients that establish 1:1 connections with servers.

- MCP Servers: Lightweight programs that expose specific capabilities via MCP.

- Local Data Sources: Files, databases, and services on your machine that MCP servers can securely access.

- Remote Services: APIs and external data sources accessible over the internet.

By defining a universal way for LLMs to interact with structured data, MCP eliminates the need for fragmented, custom-built integrations.
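
To make the exchange between a client and a server more concrete: MCP messages are JSON-RPC 2.0 objects. The sketch below builds the kind of request a client might send to invoke a server tool. The helper `make_tool_call`, the tool name `get_weather`, and the `city` argument are illustrative assumptions, not part of any SDK.

```python
import json
from itertools import count

# JSON-RPC requests carry an id so that replies can be matched to them.
_request_ids = count(1)

def make_tool_call(name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request asking a server to run one of its tools."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }

# Hypothetical tool name and arguments, used only for illustration.
request = make_tool_call("get_weather", {"city": "Paris"})
print(json.dumps(request, indent=2))
```

An SDK such as FastMCP builds and parses these messages for you, so application code rarely touches them directly.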

What is FastMCP?

FastMCP is a Python SDK that implements the full MCP specification, making it easier to:

- Build MCP clients that can connect to any MCP server.

- Create MCP servers that expose prompts, tools, and data sources.

- Use standard transports like stdio and SSE.

- Handle MCP protocol messages and lifecycle events for you.

With FastMCP, developers can focus on extending AI capabilities rather than managing low-level communication protocols.
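
As a rough illustration of the low-level work a transport takes off your hands: over stdio, each JSON-RPC message travels as a single line of JSON terminated by a newline. The helper names `encode_message` and `decode_messages` below are hypothetical and only sketch that framing; they are not FastMCP functions.

```python
import json

def encode_message(message: dict) -> bytes:
    """Serialize one message as a single newline-terminated line of UTF-8 JSON."""
    # json.dumps escapes newlines inside strings, so the line is never broken.
    return (json.dumps(message) + "\n").encode("utf-8")

def decode_messages(stream: bytes) -> list[dict]:
    """Split a byte stream on newlines and parse every non-empty line."""
    lines = stream.decode("utf-8").split("\n")
    return [json.loads(line) for line in lines if line.strip()]

# Two messages written back to back, as they would appear on a pipe.
wire = encode_message({"jsonrpc": "2.0", "id": 1, "method": "ping"})
wire += encode_message({"jsonrpc": "2.0", "id": 1, "result": {}})
messages = decode_messages(wire)
print(messages)
```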

Why Use FastMCP?

1. Seamless AI Integrations

FastMCP enables AI tools to pull in relevant data dynamically, eliminating manual context loading.

2. Scalability

With a standardized approach, MCP-powered applications can scale efficiently across multiple data sources.

3. Interoperability

Since MCP follows a universal protocol, it ensures seamless communication between different tools and AI platforms.

4. Security

An MCP server exposes only the tools and resources it explicitly registers, so access to local and remote data sources stays scoped to what the application actually needs.

Example: AI-Powered Weather Information System

To show FastMCP in practice, we’ll build a weather information system that uses all three component types:

- @tool → Fetches live weather data

- @resource → Stores historical weather reports

- @prompt → Provides a structured response format for LLMs
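
The decorator pattern behind these three components can be sketched without any SDK. The `TinyRegistry` class below is a hypothetical stand-in, not FastMCP's implementation; it only shows how a decorator can record a function under a category so that a server can look it up later.

```python
from typing import Callable

class TinyRegistry:
    """Hypothetical, simplified stand-in for a server object that collects handlers."""

    def __init__(self) -> None:
        self.tools: dict[str, Callable] = {}
        self.resources: dict[str, Callable] = {}
        self.prompts: dict[str, Callable] = {}

    def tool(self) -> Callable:
        def register(func: Callable) -> Callable:
            self.tools[func.__name__] = func  # keyed by function name
            return func  # the function itself stays callable as usual
        return register

    def resource(self, uri: str) -> Callable:
        def register(func: Callable) -> Callable:
            self.resources[uri] = func  # keyed by URI (or URI template)
            return func
        return register

    def prompt(self) -> Callable:
        def register(func: Callable) -> Callable:
            self.prompts[func.__name__] = func
            return func
        return register

registry = TinyRegistry()

@registry.tool()
def add(a: int, b: int) -> int:
    return a + b

@registry.resource("notes://today")
def todays_notes() -> str:
    return "Nothing scheduled."

@registry.prompt()
def summarize() -> str:
    return "Summarize the notes in one sentence."

print(sorted(registry.tools), sorted(registry.resources), sorted(registry.prompts))
```

The steps that follow use the same shape: calling `mcp.tool()`, `mcp.resource(...)`, or `mcp.prompt()` returns a decorator that registers the function beneath it.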

Step 1: @tool - Fetching Live Weather Data

The @tool decorator is used when LLMs need to call an external function, such as fetching live weather from an API.

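    import os

    import requests
    from mcp.server.fastmcp import FastMCP

    mcp = FastMCP("WeatherAssistant")

    # Read the key from the environment when available (the variable name is an assumption).
    API_KEY = os.environ.get("OPENWEATHER_API_KEY", "your_openweather_api_key")

    @mcp.tool()
    def get_weather(city: str) -> dict:
        """Fetches the current weather for a given city using OpenWeather API."""
        try:
            response = requests.get(
                "https://api.openweathermap.org/data/2.5/weather",
                # Passing params lets requests URL-encode city names such as "New York".
                params={"q": city, "appid": API_KEY, "units": "metric"},
                timeout=10,
            )
        except requests.RequestException as exc:
            return {"error": f"Request failed: {exc}"}
        if response.status_code == 200:
            data = response.json()
            return {
                "temperature": data["main"]["temp"],
                "weather": data["weather"][0]["description"],
            }
        if response.status_code == 404:
            return {"error": "City not found"}
        # Other failures (for example an invalid API key) are not a missing city.
        return {"error": f"Weather API returned status {response.status_code}"}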
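
One way to keep a tool like this testable is to separate the network call from the payload handling. The sketch below assumes the response shape used above (`main.temp` and `weather[0].description`); the helper `parse_weather` and the sample payload are hypothetical, written by hand for illustration rather than captured from the API.

```python
def parse_weather(payload: dict) -> dict:
    """Extract the fields the tool returns, or an error if the payload is incomplete."""
    try:
        return {
            "temperature": payload["main"]["temp"],
            "weather": payload["weather"][0]["description"],
        }
    except (KeyError, IndexError, TypeError):
        return {"error": "Unexpected response format"}

# Hand-written sample payload; the values are invented.
sample = {"main": {"temp": 18.5}, "weather": [{"description": "light rain"}]}
print(parse_weather(sample))
print(parse_weather({"main": {}}))
```

With this split, the tool body only has to fetch the JSON and hand it to the parser, and the parser can be exercised without network access.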

Step 2: @resource - Fetching Historical Weather Data

The @resource decorator is used to expose stored data, such as historical weather reports from a file or database.

    import json

    @mcp.resource("weather://{city}")
    def get_weather_history(city: str) -> dict:
        """Fetches past weather records from a local database (JSON file)."""
        # Assumes weather_data.json maps city names to their stored records.
        with open("weather_data.json", "r", encoding="utf-8") as file:
            weather_db = json.load(file)
        return weather_db.get(city, {"error": "No historical data available"})
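
To illustrate how a templated URI such as `weather://{city}` can map onto a function argument, here is a small sketch. The `match_template` helper is hypothetical and far simpler than what a real MCP server does; it only handles `{name}` placeholders that do not span a `/`.

```python
import re
from typing import Optional

def match_template(template: str, uri: str) -> Optional[dict]:
    """Return the placeholder values if uri fits template, otherwise None."""
    pattern = ""
    # Split into literal text and {placeholders}, keeping the placeholders.
    for part in re.split(r"(\{\w+\})", template):
        if part.startswith("{") and part.endswith("}"):
            # A placeholder becomes a named group matching one path segment.
            pattern += f"(?P<{part[1:-1]}>[^/]+)"
        else:
            pattern += re.escape(part)
    match = re.fullmatch(pattern, uri)
    return match.groupdict() if match else None

print(match_template("weather://{city}", "weather://London"))
print(match_template("weather://{city}", "news://London"))
```

The extracted values can then be passed to the registered function as keyword arguments, which is why the placeholder name and the parameter name need to agree.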

Step 3: @prompt - Structuring the Response Format

The @prompt decorator registers reusable prompt templates that a client can request from the server.

    @mcp.prompt()
    def weather_report_template():
        """Provides a structured format for displaying weather reports."""
        return (
            "