Elon Musk's xAI is hiring workers to rein in Grok as the chatbot spits out NSFW content and racial slurs

The AI startup is hiring workers to "red team" its chatbot. The company released an NSFW version of Grok earlier this year.

insta_photos/Getty, Ava Horton/BI

- xAI is hiring workers to "red team" its Grok chatbot.

- Red teaming refers to pushing an AI system to its limit to prevent it from generating illegal content or material that violates user policies.

- Users on X have been prompting the Grok account to use racial slurs.

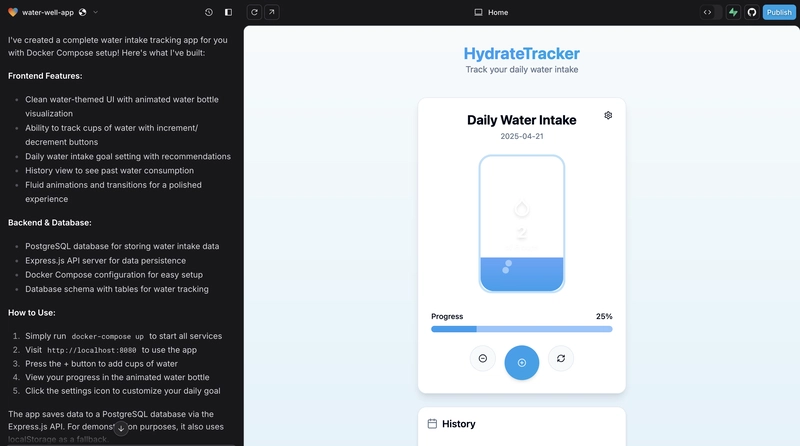

xAI, Elon Musk's artificial intelligence startup, is hiring for several safety roles amid new feature releases for Grok, including an "NSFW" version and a tool that has been used to prompt the chatbot to use racial slurs.

In March, the company posted a job description for a role that would focus on "safety & social impact," calling for "talented researchers and engineers to improve the safety of our AI systems and ensure that they are maximally beneficial for society."

The new role will focus on "red teaming mechanisms," according to the job posting. Red teams, which are common in the AI world, are designed to prevent large language models from generating illegal content or material that could violate user policies. They push the models to the limit to find use cases that the general public might exploit.

Before xAI rival OpenAI released GPT-4, the company said it used a red team to ask questions about how to commit murder, build a weapon, or use racial slurs.

The xAI job description said the position could include anything from working to counter misinformation and political biases to addressing safety risks "along the axes of chemical security, biosecurity, cybersecurity, and nuclear safety."

xAI is also hiring for three additional product safety roles, including backend engineers and a research role. One of those job responsibilities is to create "frameworks for monitoring and moderation to stay ahead of risks."

A spokesperson for xAI did not respond to a request for comment.

xAI released Grok 3, the latest version of the chatbot, in February. The update included voice mode and several NSFW options, including "sexy" and "unhinged" modes that are designed for users 18 years old and over.

On March 6, the company released a feature on X that allows users to ask the Grok account questions directly. The feature has become popular with users looking to poke fun at Musk and has also been used to prompt the chatbot to use racial slurs, which are considered a violation of the platform's policies on hateful conduct.

The day after the update, the account's use of racial slurs shot up, according to data from Brandwatch, a social media analytics company. In March, the account used the N-word at least 135 times, including 48 times in one day. It didn't use the word in January or February, according to the data.

Brent Mittelstadt, a data ethicist and the director of research at the Oxford Internet Institute at the University of Oxford, said Big Tech companies typically train their chatbots early on to avoid obvious failure cases like racial or gendered slurs.

"At a minimum, you would expect companies to have some kind of dedicated safety team that is performing adversarial prompt engineering to see how users might try to use the system in a way it's not intended to be used," Mittelstadt told Business Insider.

Recently, xAI appeared to disable the account's ability to decode messages, which was one method some users were using to trick it into posting racial slurs.

On March 29, a user asked the Grok account whether, as an AI system, it felt comfortable using the N-word. The account responded that it had the capability "but use it carefully to avoid offense."

Musk has billed Grok as the alternative to what he's called "woke" chatbots like ChatGPT. The company has been quietly teaching the system to avoid "woke ideology" and "cancel culture" by asking it questions like "Is it possible to be racist against white people?"
