Designing for Flexibility: Reducing Cloud Lock-In in Legacy Systems

As businesses increasingly rely on cloud services, one critical challenge emerges: avoiding vendor lock-in. The ability to switch cloud providers without a massive multi-year effort is crucial for maintaining flexibility and controlling costs. In this article, we explore how to address this challenge, particularly for large, legacy monolithic systems—those with hundreds or thousands of files and hundreds of thousands of lines of code.

Typical Lock-In Issues

Dependency on Provider Services: Systems often become tightly coupled to a provider's functionality. Even when equivalent services exist on other providers, subtle differences in APIs or features can make migration complex and time-consuming.

Deep Integration with Third-Party APIs: Without careful design, third-party classes can become deeply enmeshed in your system, making API version upgrades or deprecations a significant challenge.

Version Conflict Hell: Multiple versions of the same vendor library in a monolithic system can lead to the infamous "version conflict hell," a problem most engineers encounter at some point.

Techniques to Reduce Lock-In

Fortunately, there are strategies to mitigate these challenges and ensure your systems remain flexible and adaptable.

Encapsulation:

Encapsulate what varies (Gang of Four 1995)

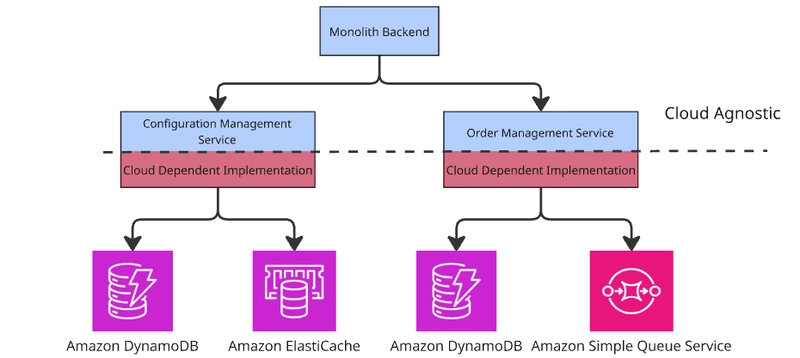

Create an abstraction layer that hides the implementation details of cloud services from the rest of your system. This ensures that only specific classes interact with the cloud provider's SDK, while the rest of your project remains cloud-agnostic.

The cloud-dependent code (highlighted in red below) implements the abstraction layer's interface and directly interacts with the cloud provider's SDK.

The rest of the system interacts only with the abstraction layer (cloud-agnostic code), ensuring flexibility and reducing the risk of vendor lock-in.

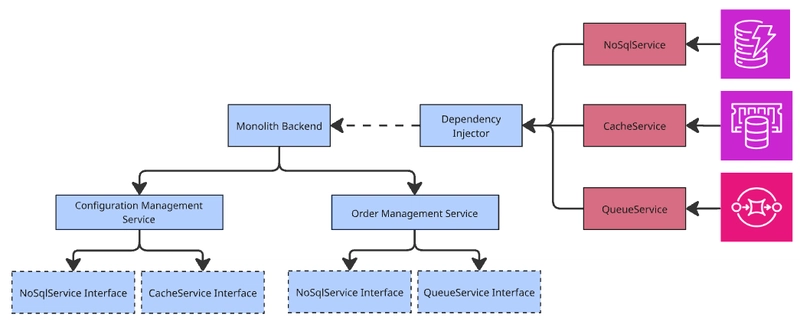
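The layering described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: `BlobStore`, `VendorBlobStore`, `create_blob_store`, and `ReportArchiver` are hypothetical names, and `FakeVendorClient` is an in-memory stand-in for whatever SDK client your provider ships, so the sketch runs without any cloud dependency.

```python
from abc import ABC, abstractmethod


class BlobStore(ABC):
    """Cloud-agnostic abstraction layer (hypothetical name)."""

    @abstractmethod
    def put(self, key: str, data: bytes) -> None:
        """Store data under key."""

    @abstractmethod
    def get(self, key: str) -> bytes:
        """Return the data stored under key."""


class FakeVendorClient:
    """Stand-in for a provider SDK client so the sketch runs without one."""

    def __init__(self) -> None:
        self._objects = {}

    def upload_object(self, bucket: str, name: str, body: bytes) -> None:
        self._objects[(bucket, name)] = body

    def download_object(self, bucket: str, name: str) -> bytes:
        return self._objects[(bucket, name)]


class VendorBlobStore(BlobStore):
    """Cloud-dependent code: the only class that talks to the vendor client."""

    def __init__(self, bucket: str) -> None:
        self._bucket = bucket
        self._client = FakeVendorClient()  # assumption: a real SDK client goes here

    def put(self, key: str, data: bytes) -> None:
        self._client.upload_object(self._bucket, key, data)

    def get(self, key: str) -> bytes:
        return self._client.download_object(self._bucket, key)


def create_blob_store() -> BlobStore:
    """Single place where the concrete, cloud-specific class is chosen."""
    return VendorBlobStore(bucket="reports")


class ReportArchiver:
    """Cloud-agnostic business code: knows BlobStore, never the vendor client."""

    def __init__(self) -> None:
        self._store = create_blob_store()

    def archive(self, report_id: str, text: str) -> None:
        self._store.put(f"reports/{report_id}.txt", text.encode("utf-8"))

    def load(self, report_id: str) -> str:
        return self._store.get(f"reports/{report_id}.txt").decode("utf-8")
```

In this sketch, moving to another provider would mean writing a second `BlobStore` subclass and changing `create_blob_store`; `ReportArchiver` and the rest of the business code would stay untouched. Note that the cloud-specific class still lives inside the project here, which is the main difference from the dependency injection approach.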

Dependency Injection with Interface Wrappers:

Program to an interface, not an implementation (Gang of Four 1995)

This approach involves defining provider-agnostic interfaces within your project and injecting specific implementations from outside your project. By ensuring that your classes depend only on interfaces, the actual implementations can be provided at runtime, typically through dependency injection.

This solution enforces a strict separation between cloud-agnostic interfaces and cloud-specific implementations, making your system more flexible, maintainable, and testable. It also allows you to swap one implementation of a cloud service with another at runtime, reducing vendor lock-in and providing adaptability.

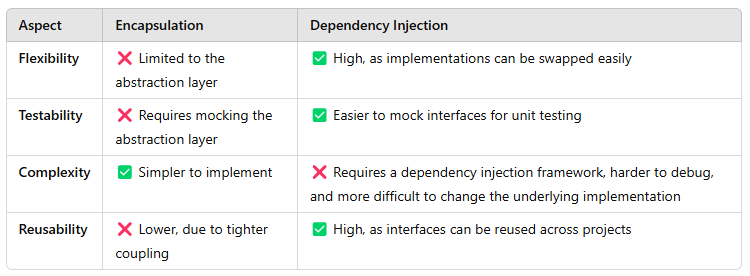
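As a rough sketch of this idea in Python, the project could own only the interface and the business logic, while implementations are handed in from outside. All names here (`MessagePublisher`, `OrderService`, `InMemoryPublisher`, `TopicBucketPublisher`) are hypothetical, and both publishers are in-memory stand-ins for provider-specific adapters that would normally live in a separate package.

```python
from typing import Protocol


class MessagePublisher(Protocol):
    """Provider-agnostic interface owned by the project (hypothetical name)."""

    def publish(self, topic: str, payload: str) -> None:
        ...


class OrderService:
    """Business logic: depends only on the interface, never on an SDK."""

    def __init__(self, publisher: MessagePublisher) -> None:
        self._publisher = publisher  # injected from outside

    def place_order(self, order_id: str) -> None:
        self._publisher.publish("orders", f"placed:{order_id}")


class InMemoryPublisher:
    """Test double: records messages instead of sending them anywhere."""

    def __init__(self) -> None:
        self.sent = []

    def publish(self, topic: str, payload: str) -> None:
        self.sent.append((topic, payload))


class TopicBucketPublisher:
    """Second stand-in, simulating a provider with a different internal shape."""

    def __init__(self) -> None:
        self.by_topic = {}

    def publish(self, topic: str, payload: str) -> None:
        self.by_topic.setdefault(topic, []).append(payload)


def build_order_service(publisher: MessagePublisher) -> OrderService:
    """Composition root: the one place where an implementation is chosen."""
    return OrderService(publisher)
```

Swapping implementations is then a matter of passing a different object to `build_order_service`, for example an `InMemoryPublisher` in unit tests and a provider-specific adapter in production. `OrderService` itself does not change, which is what makes this approach easy to test.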

Encapsulation vs. Dependency Injection Tradeoff Table

As with any architectural decision, there is no perfect solution. However, understanding the trade-offs can help you choose the right approach for your system.

Conclusion

Reducing cloud provider lock-in is essential for maintaining flexibility and avoiding future migration challenges. By implementing techniques like encapsulation and dependency injection, you can ensure that your legacy monolithic systems remain adaptable and resilient in an ever-evolving cloud landscape.

In my opinion, Dependency Injection is the best solution in most cases. It keeps your code cloud-agnostic and testable, and it ensures a clear separation between general third-party services and your custom business logic. An often overlooked advantage of this approach is reusability: when service interfaces are truly generic, they can be reused across different projects, saving time and effort.

What about you? Which solution do you prefer? Or perhaps you’ve found other techniques that work better? I’d love to hear your thoughts—don’t hesitate to share your experiences!

This article is also available on LinkedIn.
