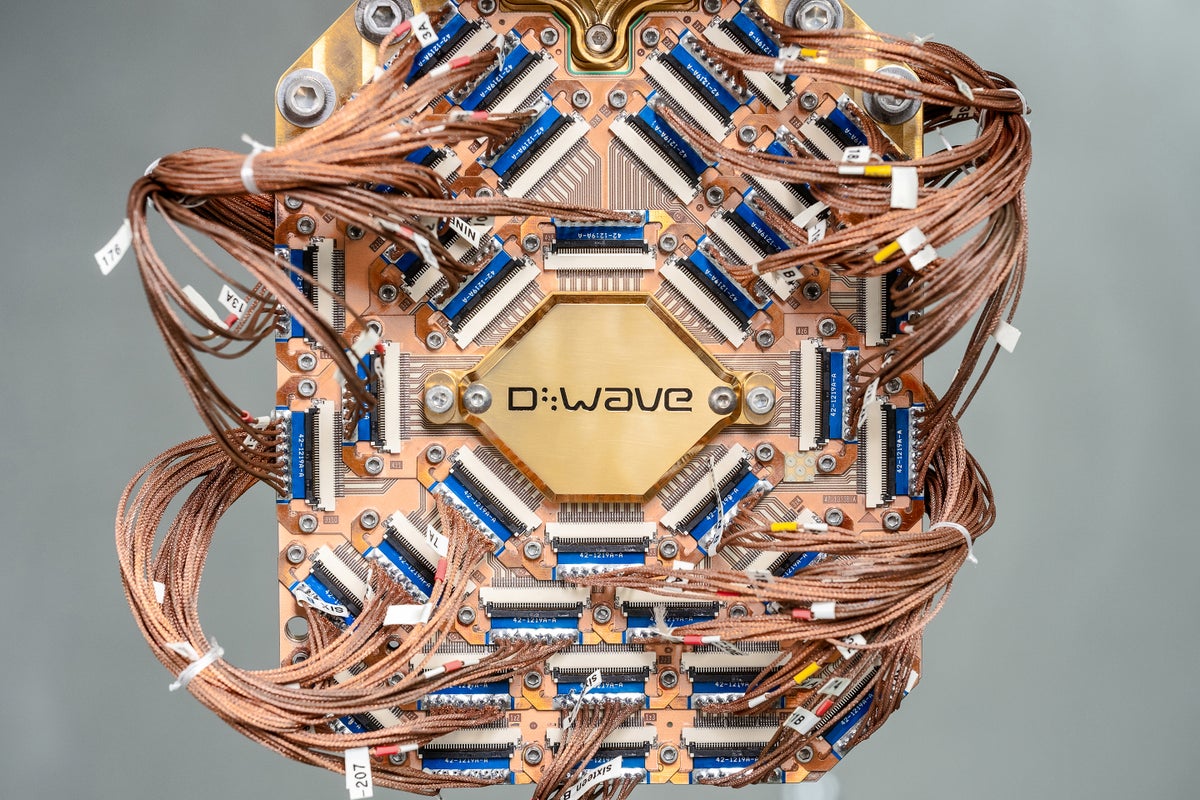

DeepSeek R1 is more susceptible to jailbreaking than ChatGPT, Gemini, and Claude; it can instruct on a bioweapon attack, write a pro-Hitler manifesto, and more (Sam Schechner/Wall Street Journal)

Sam Schechner / Wall Street Journal: Testing shows the Chinese app is more likely to dispense details on how to make a Molotov cocktail or encourage self-harm by teenagers

