Databricks Asset Bundle: A Template to Make Life Easier

If you're working with data pipelines on Databricks, you know how time-consuming it can be starting from scratch with each new project. That's why I've put together this Databricks Asset Bundle (DAB) project that packages up proven patterns and practices. Let me show you how it can transform your workflow.

The DAB Story - What Even Is It?

Before I dive into the template itself, let me tell you a bit about Databricks Asset Bundles. DAB is relatively new to the Databricks ecosystem - it entered public preview in 2023 and became generally available in 2024. It's Databricks' answer to the age-old question: "How do we manage all our Databricks stuff in a sane way?"

Before DAB, we were stuck with a hodgepodge of approaches:

- Manually exporting and importing notebooks (ugh)

- Using the Databricks CLI to deploy workspaces (better, but still clunky)

- Writing custom scripts to manage assets (time-consuming and fragile)

DAB brings everything together with a simple YAML-based approach. It lets you package up notebooks, workflows, ML models, and more into a single deployable unit. It's a bit like Terraform but specifically built for Databricks resources.

Why I Built This Project

After setting up multiple data projects, I realised I was solving the same problems over and over:

- Creating a sensible project structure

- Setting up common code patterns

- Figuring out how to manage environments

- Establishing best practices for deployment and development

Sound familiar? Yeah, I thought so. That's why I built this project - to save both of us from that Groundhog Day feeling.

What's in the Box?

1. Organized Structure for Your Data Projects

This project includes a clear organization for development:

databricks-dab-project/
├── databricks.yml              # Main DAB configuration file
├── resources/                  # DAB resources (workflows, etc.)
│   └── sample_workflow.yml     # Sample workflow definition
├── src/                        # Source code
│   ├── common/                 # Reusable Python package shared across notebooks
│   │   ├── __init__.py
│   │   ├── utils/              # Utility modules
│   │   ├── transforms/         # Data transformation modules
│   │   └── ingestion/          # Data ingestion modules
│   └── setup.py                # Package setup file
├── notebooks/                  # Databricks notebooks
│   ├── bronze/                 # Bronze layer notebooks
│   ├── silver/                 # Silver layer notebooks
│   └── gold/                   # Gold layer notebooks
└── docs/                       # Documentation

The structure is designed to scale with your project needs and keep code organized as you expand.

2. Python Package Architecture

One of my biggest pet peeves with Databricks projects is seeing massive, unwieldy notebooks with thousands of lines of code. They're impossible to test, hard to reuse, and a nightmare to maintain.

That's why this project includes a proper Python package structure in src/common/. Here's what that buys you:

- Testability: You can actually unit test functions in a package

- Code Reuse: No more copy-pasting between notebooks. Import what you need from the package

- Version Control: Packages have proper versioning, making it easier to track changes

- IDE Support: Write code in a proper IDE with linting and autocomplete

The notebooks become thin wrappers around the package functions - they handle parameters and orchestration, but the heavy lifting happens in the package.
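To make the "thin wrapper" idea concrete, here's a minimal sketch. The module path, the function names (`drop_incomplete`, `run_notebook`) and the `required_columns` parameter are all hypothetical, not part of the template, and I've used plain Python dictionaries instead of Spark DataFrames so the example runs anywhere.

```python
from typing import Dict, Iterable, List

# --- would live in the package, e.g. src/common/transforms/ (hypothetical module) ---


def drop_incomplete(
    rows: Iterable[Dict[str, object]], required: List[str]
) -> List[Dict[str, object]]:
    """Keep only rows where every required key is present and not None."""
    return [row for row in rows if all(row.get(key) is not None for key in required)]


# --- would live in a notebook, e.g. under notebooks/silver/ (hypothetical) ---


def run_notebook(
    params: Dict[str, str], rows: List[Dict[str, object]]
) -> List[Dict[str, object]]:
    """Thin wrapper: parse the parameters, then delegate to the package."""
    required = [name.strip() for name in params["required_columns"].split(",")]
    return drop_incomplete(rows, required)


sample = [
    {"id": 1, "name": "a"},
    {"id": 2, "name": None},
    {"id": None, "name": "c"},
]
cleaned = run_notebook({"required_columns": "id, name"}, sample)
print(cleaned)
```

Because `drop_incomplete` knows nothing about notebooks or widgets, you can unit test it with ordinary assertions, while the wrapper stays small enough to review at a glance.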

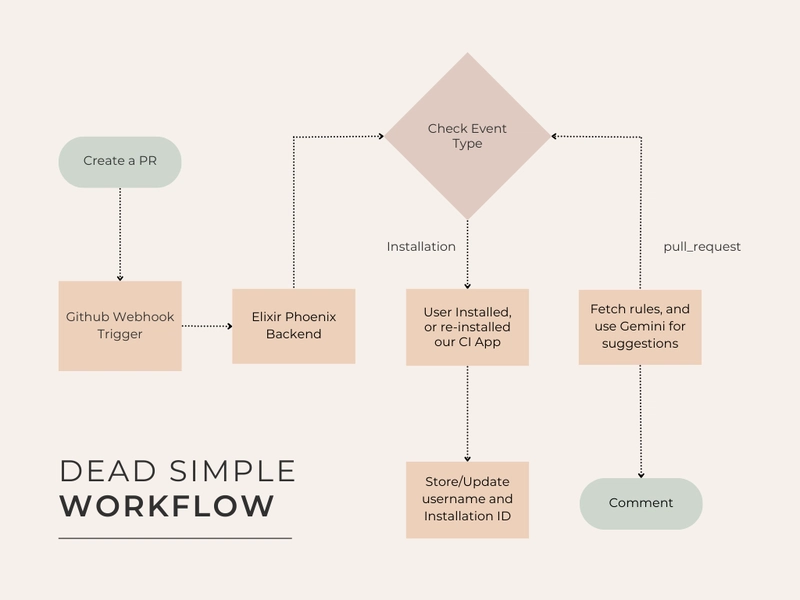

3. Infrastructure as Code with DAB

The project includes:

- Environment configs for dev/prod environments in databricks.yml

- Workflow definitions with proper configurations

- CI/CD setup for validation with GitHub Actions

- Clear separation of code, config, and infrastructure

I've made sure the DAB configs are parameterised properly, so you can deploy to different environments without changing the actual files - just change the variables.

4. Built-in Best Practices

This project includes common patterns I've found helpful:

- Proper logging pattern in the Python package

- Consistent project structure

- GitHub Actions workflow for validation

- Code organization that promotes reusability
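As an illustration of what a logging pattern in a shared package can look like, here's a minimal sketch built on the standard library. The helper name `get_logger` and the log format are my assumptions for this example, not necessarily what the template ships.

```python
import logging
import sys


def get_logger(name: str, level: int = logging.INFO) -> logging.Logger:
    """Return a logger with a single stdout handler; safe to call repeatedly."""
    logger = logging.getLogger(name)
    logger.setLevel(level)
    if not logger.handlers:  # avoid duplicate lines when a notebook cell is re-run
        handler = logging.StreamHandler(sys.stdout)
        handler.setFormatter(
            logging.Formatter("%(asctime)s %(levelname)s %(name)s: %(message)s")
        )
        logger.addHandler(handler)
    # Stop the record from also being printed by handlers on the root logger
    logger.propagate = False
    return logger


logger = get_logger("common.ingestion")
logger.info("Starting ingestion")
```

The handler check matters in notebooks: re-running a cell calls the helper again, and without the guard every message would be printed once per run.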

5. Fast Onboarding Process

One of the biggest wins with this project is how quickly new team members can get up to speed:

- Comprehensive documentation in the docs/ folder with a detailed DAB development guide

- Clear setup instructions in the README

- Consistent structure that follows logical patterns

- Easy-to-understand configuration files

Step-by-Step Guide to Getting Started

Here's a detailed walkthrough of how to start using this template for your own project:

1. Create Your Own Repository from the Template

To create your own repository from the template, follow these steps:

- Go to this GitHub repository

- Click the green "Use this template" button

- Choose "Create a new repository"

- Name your repository and set visibility (public/private)

- Click "Create repository from template"

2. Set Up Your Development Environment

Now that you have your own repository, let's set up your local environment:

- Install the Databricks CLI:

curl -fsSL https://raw.githubusercontent.com/databricks/setup-cli/main/install.sh | sh

# Verify installation

databricks version

You should see version 0.244.0 or higher. If you see version 0.18.x, you've installed the old CLI - follow the migration guide.

- Configure the CLI:

# Set up a profile for development

databricks auth login --host https://your-dev-workspace.cloud.databricks.com

# Optionally, set up a profile for production too

databricks auth login --host https://your-prod-workspace.cloud.databricks.com

- Set Up Python Environment:

# Create a virtual environment

python -m venv .venv

# Activate it (Windows)

.venv\Scripts\activate

# Activate it (macOS/Linux)

source .venv/bin/activate

# Install the common package in development mode

cd src

pip install -e .

- (Optional) Set Up Pre-commit Hooks:

If you want to set up pre-commit hooks to check your code before committing, run the following commands:

pip install pre-commit

pre-commit install

The pre-commit hooks will check your code for linting errors and secrets before committing.

3. Configure Your Project

- Update the databricks.yml file:

bundle:
  name: your-project-name # Change this

# ... rest of the file

targets:
  dev:
    workspace:
      host: https://your-workspace.databricks.com # Change this to your workspace URL

- Add Environment Variables for Authentication:

# For local development

export DATABRICKS_HOST=https://your-workspace.cloud.databricks.com

# Alternative: Use profiles you set up in step 2 by uncommenting in databricks.yml:

# profile: dev

4. Validate and Deploy Your Project

- Validate the configuration:

databricks bundle validate

- Deploy to development:

databricks bundle deploy --target dev
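If you'd rather run both steps from one script (for example in CI), here's a minimal sketch that wraps the same two CLI commands. The function names are hypothetical, and it assumes the `databricks` CLI is on your PATH and already authenticated.

```python
import subprocess
from typing import List


def bundle_command(action: str, target: str) -> List[str]:
    """Build the argument list for `databricks bundle <action> --target <target>`."""
    if action not in {"validate", "deploy"}:
        raise ValueError(f"Unsupported bundle action: {action}")
    return ["databricks", "bundle", action, "--target", target]


def validate_and_deploy(target: str) -> None:
    """Run validate, then deploy; check=True stops at the first failing command."""
    for action in ("validate", "deploy"):
        subprocess.run(bundle_command(action, target), check=True)


# Example (needs the CLI installed and a configured workspace):
# validate_and_deploy("dev")
print(" ".join(bundle_command("deploy", "dev")))
```

Running validation first means a broken configuration fails fast, before anything is pushed to the workspace.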

Extending the Common Package

The src/common package is designed to be extended with your own modules. Here's how to add new functionality:

1. Add a New Module

Let's say you want to add data quality checks in a new module called data_quality.

# Create a new module

mkdir -p src/common/data_quality

# Create necessary files

touch src/common/data_quality/__init__.py

touch src/common/data_quality/validators.py

2. Implement Your Functions

In src/common/data_quality/validators.py:

from typing import Any, Dict, List

from pyspark.sql import DataFrame


def validate_not_null(df: DataFrame, columns: List[str]) -> Dict[str, Any]:
    """
    Validates that specified columns don't contain nulls.

    Args:
        df: DataFrame to validate
        columns: List of column names to check

    Returns:
        Dictionary with validation results
    """
    results = {}
    # Count rows once instead of once per column; each count() triggers a Spark job
    total_count = df.count()

    for column in columns:
        if column not in df.columns:
            results[column] = {"valid": False, "error": f"Column {column} not found"}
            continue

        null_count = df.filter(df[column].isNull()).count()
        results[column] = {
            "valid": null_count == 0,
            "null_count": null_count,
            "total_count": total_count,
        }

    return results

3. Import in Your Notebook

Now you can use this in any notebook:

from common.data_quality.validators import validate_not_null

# Load your data
df = spark.table("bronze.my_table")

# Validate required columns
validation_results = validate_not_null(df, ["id", "name", "timestamp"])

# Take action based on results
for column, result in validation_results.items():
    if not result["valid"]:
        print(f"Validation failed for {column}: {result}")

That's it! You've added a new module to your common package. You can now keep all your common code in one place and reuse it across your notebooks.
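Printing failures is fine while exploring, but in a scheduled workflow you'll probably want the task to fail instead. Here's a minimal sketch of one way to do that. The helpers `failed_columns` and `assert_all_valid` are hypothetical additions of mine, and they only rely on the result dictionary shape returned by validate_not_null above.

```python
from typing import Any, Dict, List


def failed_columns(results: Dict[str, Dict[str, Any]]) -> List[str]:
    """Return the sorted names of columns whose validation did not pass."""
    return sorted(column for column, result in results.items() if not result["valid"])


def assert_all_valid(results: Dict[str, Dict[str, Any]]) -> None:
    """Raise ValueError listing every failed column, so the job run fails."""
    failed = failed_columns(results)
    if failed:
        raise ValueError(f"Null check failed for columns: {', '.join(failed)}")


# Hand-written results in the same shape validate_not_null returns
example_results = {
    "id": {"valid": True, "null_count": 0, "total_count": 100},
    "name": {"valid": False, "null_count": 3, "total_count": 100},
    "timestamp": {"valid": False, "error": "Column timestamp not found"},
}
print(failed_columns(example_results))  # ['name', 'timestamp']
```

In a notebook you would call `assert_all_valid(validation_results)` right after the validation step, so an uncaught exception stops the run before bad data moves downstream.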

My Closing Thoughts

Starting data engineering projects from scratch is a waste of time. We're all solving similar problems, so why not start from a solid foundation?

This project embodies patterns I've found useful in Databricks development. It's got the structure in place, but you can customize it as needed for your specific requirements.

I'd love to hear how you use this project and what improvements you make. The whole point of sharing this is to learn from each other and keep making our data work better.

Catch you in the data lake!

Got questions or improvements? I'd love to hear from you! Drop a comment below or reach out on GitHub.