Data Loading with Python and AI

Modern data pipelines are the backbone of data engineering, enabling organizations to collect, process, and leverage massive volumes of information efficiently. But building and maintaining these pipelines isn't always straightforward. From API rate ...

Modern data pipelines are the backbone of data engineering, enabling organizations to collect, process, and leverage massive volumes of information efficiently. But building and maintaining these pipelines isn't always straightforward. From API rate limits and changing data schemas to ensuring consistent loading and transformation, engineers face many challenges that can disrupt operations. Mastering data ingestion, the process of collecting and importing data for immediate use or storage, is important for building resilient, scalable systems that can evolve with business needs.

We just published a course on the freeCodeCamp.org YouTube channel that will teach you all about mastering data ingestion for data engineering using Python. Created by Alexey Grigorev and Adrian Brudaru and supported by a grant from dlthub.com, this comprehensive course dives deep into the core challenges of building robust data pipelines and provides practical, real-world solutions. Whether you're an aspiring data engineer or a developer looking to level up, this course equips you with senior-level strategies to design pipelines that gracefully handle schema evolution, API limitations, and more.

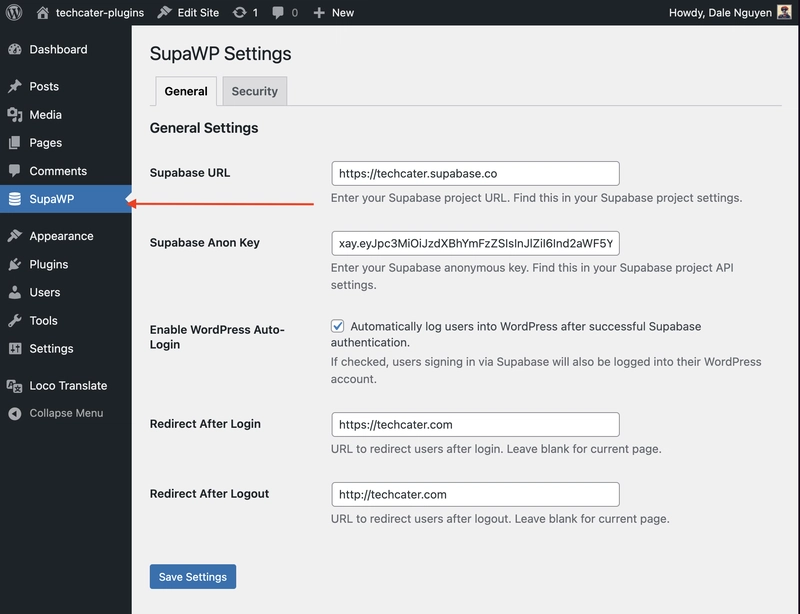

In Alexey's section of the course, you'll start with the foundations: understanding what data ingestion really means and how to approach it through streaming, batching, and working with REST APIs. You'll learn to normalize incoming data, load it into tools like DuckDB, and implement dynamic schema management to future-proof your pipelines.

Adrian then teaches how to use DLT (Data Load Tool), an open-source Python library for data loading, to simplify and scale your pipeline implementations. You'll go hands-on with configuring secrets, managing data contracts, handling incremental loading, tuning performance, and deploying your pipelines using tools like GitHub Actions, Crontab, Dagster, and Airflow. There’s even an exciting section on creating data pipelines using LLMs, where you’ll learn to craft effective prompts and integrate generative AI into your workflows.

Here is the full list of sections in this course:

Alexey's part

Introduction

What is data ingestion

Extracting data: Data Streaming & Batching

Extracting data: Working with RestAPI

Normalizing data

Loading data into DuckDB

Dynamic schema management

What is next?

Adrian's part

Introduction

Overview

Extracting data with dlt: dlt RestAPI Client

dlt Resources

How to configure secrets

Normalizing data with dlt

Data Contracts

Alerting schema changes

Loading data with dlt

Write dispositions

Incremental loading

Loading data from SQL database to SQL database

Backfilling

SCD2

Performance tuning

Loading data to Data Lakes & Lakehouses & Catalogs

Loading data to Warehouses/MPPs,Staging

Deployment & orchestration

Deployment with Git Actions

Deployment with Crontab

Deployment with Dagster

Deployment with Airflow

Create pipelines with LLMs: Understanding the challenge

Create pipelines with LLMs: Creating prompts and LLM friendly documentation

Create pipelines with LLMs: Demo

Check out the full course for free on the freeCodeCamp.org YouTube channel.