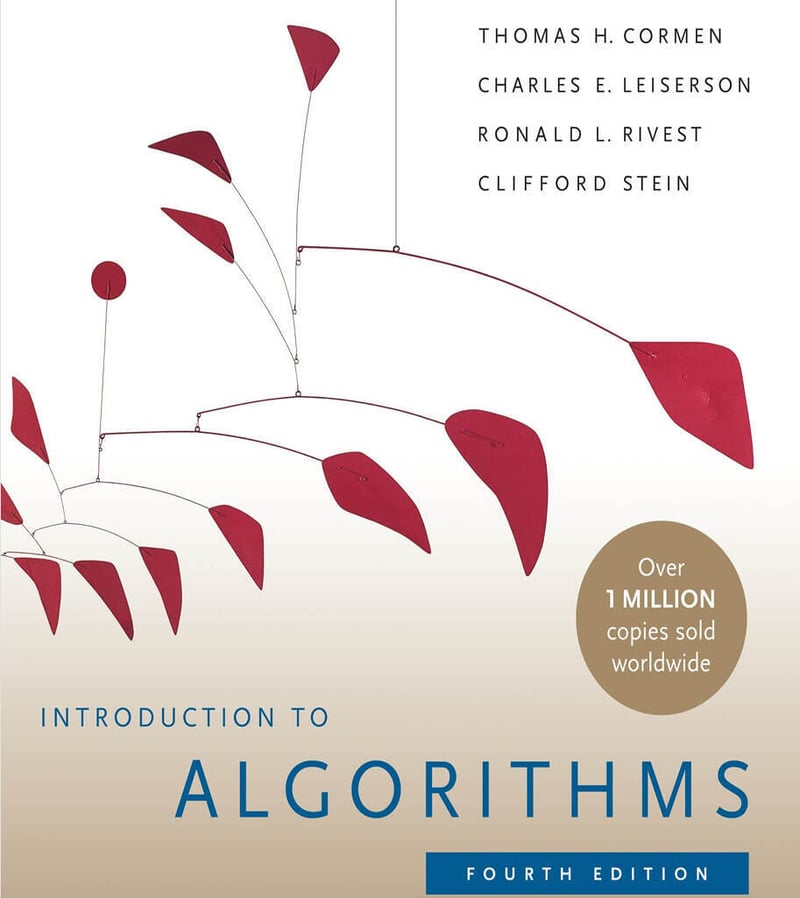

Algorithms

MIT 6.006 Introduction to Algorithms, Fall 2011 https://youtube.com/playlist?list=PLUl4u3cNGP61Oq3tWYp6V_F-5jb5L2iHb Algorithm design: https://youtu.be/2P-yW7LQr08 https://atekihcan.github.io/CLRS/ https://icefox-saber.github.io/CLRS/Chap01/1.1/ Part I Foundations 1 The Role of Algorithms in Computing 1.1-2 Other than speed, what other measures of efficiency might you need to consider in a real-world setting? ⇒ Computer memory usage, network bandwidth, power consumption 1.1-4 How are the shortest-path and traveling-salesperson problems given above similar? How are they different? Definitions ● Shortest-Path Problem: Given a graph (a set of nodes connected by edges with weights, like distances or costs), find the path between two specific nodes (a source and a destination) that minimizes the total weight. A well-known algorithm for this is Dijkstra’s algorithm for single-source shortest paths in graphs with non-negative weights. ● Traveling-Salesperson Problem (TSP): Given a set of nodes and the distances between each pair, find the shortest possible route that visits every node exactly once and returns to the starting node (a Hamiltonian cycle with minimal total weight). This is an optimization problem with no simple, efficient solution for large instances. Similarities Graph-Based Problems: ○ Both problems are defined on graphs, where nodes represent locations (e.g., cities, points) and edges represent connections (e.g., roads, distances). ○ The weights on edges typically represent costs, distances, or times to be minimized. Optimization Goals: ○ Each seeks to minimize a total cost: the shortest-path problem minimizes the cost between two points, while TSP minimizes the cost of a complete tour. ○ Both involve finding an optimal solution among many possible paths or routes. Real-World Applications: ○ They apply to navigation, logistics, and network design. For example, shortest-path is used in GPS routing, while TSP models delivery truck routes or circuit board drilling. Dynamic Programming and Greedy Approaches: ○ Algorithms for both can use optimization techniques. For instance, Dijkstra’s algorithm (shortest-path) is greedy, and some TSP approximations use greedy heuristics. Differences Scope of the Problem: ○ Shortest-Path: Focuses on a single pair of nodes (source to destination). You’re finding one path, not necessarily visiting all nodes. ○ TSP: Requires visiting all nodes exactly once and returning to the start, forming a complete cycle. Path vs. Cycle: ○ Shortest-Path: The solution is an open path (e.g., A → B → C) with a start and end, which may not revisit nodes. ○ TSP: The solution is a closed loop (e.g., A → B → C → A), a Hamiltonian cycle that covers every node. Complexity: ○ Shortest-Path: Polynomial-time solvable. Dijkstra’s algorithm runs in O(V2) O(V^2) O(V2) or O(E+VlogV) O(E + V \log V) O(E+VlogV) with a priority queue, where V V V is the number of vertices and E E E is the number of edges. ○ TSP: NP-hard. No known polynomial-time algorithm exists for the general case; exact solutions rely on exponential-time methods like dynamic programming (O(n22n) O(n^2 2^n) O(n22n)), and practical solutions often use approximations. Constraints: ○ Shortest-Path: No requirement to visit all nodes—nodes can be skipped if they’re not on the optimal path. ○ TSP: Must visit every node exactly once, which adds significant combinatorial complexity. Output: ○ Shortest-Path: A sequence of nodes from start to end (e.g., A → D → F). ○ TSP: A tour that cycles back (e.g., A → B → C → A). Example Imagine a graph with nodes A, B, C, and edge weights: ● A → B: 4 ● B → C: 3 ● A → C: 2 ● Shortest-Path (A to C): The solution is A → B → C (weight 7), skipping a direct A → C path (weight 2). ● TSP (visit all and return): The solution might be A → B → C → A (weight 4 + 3 + 10 = 17), assuming a return edge C → A exists. TSP must include all nodes and close the loop, even if it’s less efficient between specific pairs. Summary The shortest-path problem and TSP are similar in their use of graphs and focus on minimizing costs, but they differ fundamentally in scope and complexity. Shortest-path finds a single efficient route between two points, while TSP demands a full tour of all points, making it far more computationally challenging. The former is about efficiency between endpoints; the latter is about comprehensive coverage. 1.1-5 Suggest a real-world problem in which only the best solution will do. Then come up with one in which “approximately” the best solution is good enough. ⇒ Computer memory usage Here are two real-world problems, one requiring the absolute best solution and another where an approximate solution suffices: Problem Requiring the Best Solution: Airline Crew Scheduling ● Description: Airlines need to assign pilots and flight attendants to flights in a way that minimizes costs (e.g., salaries, overtime, travel expenses) while adhering to strict regulations (e.g., maximum wo

MIT 6.006 Introduction to Algorithms, Fall 2011

https://youtube.com/playlist?list=PLUl4u3cNGP61Oq3tWYp6V_F-5jb5L2iHb

Algorithm design: https://youtu.be/2P-yW7LQr08

https://atekihcan.github.io/CLRS/

https://icefox-saber.github.io/CLRS/Chap01/1.1/

Part I Foundations

1 The Role of Algorithms in Computing

1.1-2

Other than speed, what other measures of efficiency might you need to consider in a real-world setting?

⇒ Computer memory usage, network bandwidth, power consumption

1.1-4

How are the shortest-path and traveling-salesperson problems given above similar? How are they different?

Definitions

● Shortest-Path Problem: Given a graph (a set of nodes connected by edges with weights, like distances or costs), find the path between two specific nodes (a source and a destination) that minimizes the total weight. A well-known algorithm for this is Dijkstra’s algorithm for single-source shortest paths in graphs with non-negative weights.

● Traveling-Salesperson Problem (TSP): Given a set of nodes and the distances between each pair, find the shortest possible route that visits every node exactly once and returns to the starting node (a Hamiltonian cycle with minimal total weight). This is an optimization problem with no simple, efficient solution for large instances.

Similarities

- Graph-Based Problems: ○ Both problems are defined on graphs, where nodes represent locations (e.g., cities, points) and edges represent connections (e.g., roads, distances). ○ The weights on edges typically represent costs, distances, or times to be minimized.

Optimization Goals:

○ Each seeks to minimize a total cost: the shortest-path problem minimizes the cost between two points, while TSP minimizes the cost of a complete tour.

○ Both involve finding an optimal solution among many possible paths or routes.

Real-World Applications:

○ They apply to navigation, logistics, and network design. For example, shortest-path is used in GPS routing, while TSP models delivery truck routes or circuit board drilling.

Dynamic Programming and Greedy Approaches:

○ Algorithms for both can use optimization techniques. For instance, Dijkstra’s algorithm (shortest-path) is greedy, and some TSP approximations use greedy heuristics.

Differences

- Scope of the Problem: ○ Shortest-Path: Focuses on a single pair of nodes (source to destination). You’re finding one path, not necessarily visiting all nodes. ○ TSP: Requires visiting all nodes exactly once and returning to the start, forming a complete cycle.

Path vs. Cycle:

○ Shortest-Path: The solution is an open path (e.g., A → B → C) with a start and end, which may not revisit nodes.

○ TSP: The solution is a closed loop (e.g., A → B → C → A), a Hamiltonian cycle that covers every node.

Complexity:

○ Shortest-Path: Polynomial-time solvable. Dijkstra’s algorithm runs in O(V2) O(V^2) O(V2) or O(E+VlogV) O(E + V \log V) O(E+VlogV) with a priority queue, where V V V is the number of vertices and E E E is the number of edges.

○ TSP: NP-hard. No known polynomial-time algorithm exists for the general case; exact solutions rely on exponential-time methods like dynamic programming (O(n22n) O(n^2 2^n) O(n22n)), and practical solutions often use approximations.

Constraints:

○ Shortest-Path: No requirement to visit all nodes—nodes can be skipped if they’re not on the optimal path.

○ TSP: Must visit every node exactly once, which adds significant combinatorial complexity.

- Output:

○ Shortest-Path: A sequence of nodes from start to end (e.g., A → D → F).

○ TSP: A tour that cycles back (e.g., A → B → C → A).

Example

Imagine a graph with nodes A, B, C, and edge weights:

● A → B: 4

● B → C: 3

● A → C: 2

● Shortest-Path (A to C): The solution is A → B → C (weight 7), skipping a direct A → C path (weight 2).

● TSP (visit all and return): The solution might be A → B → C → A (weight 4 + 3 + 10 = 17), assuming a return edge C → A exists. TSP must include all nodes and close the loop, even if it’s less efficient between specific pairs.

Summary

The shortest-path problem and TSP are similar in their use of graphs and focus on minimizing costs, but they differ fundamentally in scope and complexity. Shortest-path finds a single efficient route between two points, while TSP demands a full tour of all points, making it far more computationally challenging. The former is about efficiency between endpoints; the latter is about comprehensive coverage.

1.1-5

Suggest a real-world problem in which only the best solution will do.

Then come up with one in which “approximately” the best solution is

good enough.

⇒ Computer memory usage

Here are two real-world problems, one requiring the absolute best solution and another where an approximate solution suffices:

Problem Requiring the Best Solution: Airline Crew Scheduling

● Description: Airlines need to assign pilots and flight attendants to flights in a way that minimizes costs (e.g., salaries, overtime, travel expenses) while adhering to strict regulations (e.g., maximum working hours, rest requirements) and ensuring every flight is covered. The schedule must also account for crew qualifications, base locations, and unexpected disruptions like delays.

● Why Only the Best Solution Will Do:

○ Safety is non-negotiable—crew must be rested and qualified, with no room for error in compliance with aviation laws.

○ Costs are massive, and even a 1% improvement in efficiency can save millions annually for a large airline.

○ Suboptimal schedules could lead to flight cancellations or legal violations, which are unacceptable.

● Approach: This is often modeled as an integer programming problem or a variant of the set-covering problem, solved with exact optimization techniques like branch-and-bound or constraint programming to guarantee the optimal solution.

Problem Where “Approximately” the Best Solution is Good Enough: Delivery Route Planning for a Local Bakery

● Description: A small bakery delivers fresh bread to 20 local cafes each morning. The goal is to find a route for the delivery van that minimizes travel time, starting and ending at the bakery, while ensuring all cafes receive their orders within a reasonable window (e.g., before 8 AM).

● Why an Approximate Solution is Good Enough:

○ Time constraints are flexible as long as deliveries are reasonably prompt—slight delays don’t ruin the business.

○ Fuel costs are a factor, but for a small operation, the difference between the absolute shortest route and a near-optimal one might be negligible (e.g., a few dollars daily).

○ Real-world variables like traffic or road closures change daily, so chasing the exact optimum might be overkill when conditions are unpredictable.

● Approach: This resembles the Traveling Salesperson Problem (TSP). A heuristic like the nearest-neighbor algorithm or a metaheuristic like simulated annealing can quickly provide a route that’s close to optimal (e.g., within 5-10% of the best), which is practical and sufficient for the bakery’s needs.

Contrast

● Precision vs. Practicality: Airline scheduling demands precision due to high stakes (safety, cost, reliability), while the bakery can tolerate minor inefficiencies for simplicity and speed.

● Scale and Complexity: The airline problem involves hundreds of crew and flights with rigid constraints, necessitating exactness. The bakery’s smaller scope (20 stops) and looser requirements make approximation viable.

● Consequences: A suboptimal airline schedule could ground planes or violate laws; a slightly longer bakery route just means a bit more gas or a few extra minutes.

These examples highlight when perfection is critical versus when “good enough” keeps the world turning without undue effort.

1.1-6

Describe a real-world problem in which sometimes the entire input is

available before you need to solve the problem, but other times the

input is not entirely available in advance and arrives over time.

⇒ offline: convert an epub file to pdf

online: ChatGPT, Google, any ecommerce sites like Amazon

1.2-1

Give an example of an application that requires algorithmic content at

the application level, and discuss the function of the algorithms

involved.

⇒ Check out process in an ecommerce site

1.2-2

Suppose that for inputs of size n on a particular computer, insertion

sort runs in 8n^2 steps and merge sort runs in 64 n lg n steps. For which

values of n does insertion sort beat merge sort?

⇒ 8n^2 = 64 n lg n ⇒ n = 8 lg n

n = 43.xxx, so merge sort starts beating at n=44

844^2 > 64 44 * lg 44

1.2-3

What is the smallest value of n such that an algorithm whose running

time is 100n^2 runs faster than an algorithm whose running time is 2^n on

the same machine?

⇒ 15

n 100n^2 2^n

14 19,600 16,384

15 22,500 32,768

Part 1: Illustration of Insertion Sort

Let's illustrate the operation of Insertion Sort on the array initially containing the sequence:

⟨31, 41, 59, 26, 41, 58⟩.

Insertion Sort Algorithm

Insertion Sort works by iteratively inserting each element into its correct position in the sorted portion of the array.

Step-by-Step Execution

Initial Array:

[31, 41, 59, 26, 41, 58]

After 1st Iteration (i = 1):

The first element 31 is already in the sorted portion.

Sorted portion: [31]

Array: [31, 41, 59, 26, 41, 58]

After 2nd Iteration (i = 2):

Insert 41 into the sorted portion [31].

41 is greater than 31, so it stays in place.

Sorted portion: [31, 41]

Array: [31, 41, 59, 26, 41, 58]

After 3rd Iteration (i = 3):

Insert 59 into the sorted portion [31, 41].

59 is greater than 41, so it stays in place.

Sorted portion: [31, 41, 59]

Array: [31, 41, 59, 26, 41, 58]

After 4th Iteration (i = 4):

Insert 26 into the sorted portion [31, 41, 59].

26 is smaller than 59, 41, and 31, so it moves to the front.

Sorted portion: [26, 31, 41, 59]

Array: [26, 31, 41, 59, 41, 58]

After 5th Iteration (i = 5):

Insert 41 into the sorted portion [26, 31, 41, 59].

41 is equal to the last 41, so it stays in place.

Sorted portion: [26, 31, 41, 41, 59]

Array: [26, 31, 41, 41, 59, 58]

After 6th Iteration (i = 6):

Insert 58 into the sorted portion [26, 31, 41, 41, 59].

58 is smaller than 59, so it moves before 59.

Sorted portion: [26, 31, 41, 41, 58, 59]

Array: [26, 31, 41, 41, 58, 59]

Final Sorted Array:

[26, 31, 41, 41, 58, 59]

Slightly less efficient version of ascending insertion sort:

`def insertion_sort_ascending(numbers):

for i in range(1, len(numbers)):

if (i > 1):

for j in range(i, 0, -1):

if (numbers[j-1] > numbers[j]):

swap(numbers, j-1, j)

def swap(array, idx_a, idx_b):

tmp = array[idx_a]

array[idx_a] = array[idx_b]

array[idx_b] = tmp`

Part 2: Loop Invariant for SUM-ARRAY

The SUM-ARRAY procedure computes the sum of the numbers in the array A[1 : n]. Here is the procedure:

SUM-ARRAY(A, n)

sum = 0

for i = 1 to n

sum = sum + A[i]

return sum

Loop Invariant

A loop invariant is a condition that is true before and after each iteration of the loop. For the SUM-ARRAY procedure, the loop invariant is:

At the start of each iteration of the for loop, the variable sum contains the sum of the elements in the subarray A[1 : i-1].

Proof of Correctness Using Loop Invariant

Initialization:

Before the first iteration (i = 1), the subarray A[1 : 0] is empty.

The variable sum is initialized to 0, which is the sum of an empty array.

Thus, the loop invariant holds before the first iteration.

Maintenance:

Assume the loop invariant holds at the start of the i-th iteration, i.e., sum contains the sum of A[1 : i-1].

During the i-th iteration, sum is updated to sum + A[i].

After the update, sum contains the sum of A[1 : i].

Thus, the loop invariant holds at the start of the next iteration.

Termination:

The loop terminates when i = n + 1.

At this point, the loop invariant states that sum contains the sum of A[1 : n].

The procedure returns sum, which is the correct sum of all elements in the array.

Conclusion

The loop invariant ensures that the SUM-ARRAY procedure correctly computes the sum of the numbers in the array A[1 : n]. By the properties of initialization, maintenance, and termination, the procedure is proven to be correct.

Part 1: Insertion Sort for Monotonically Decreasing Order

To modify the Insertion Sort algorithm to sort in monotonically decreasing order, we need to change the comparison condition. Instead of inserting elements into the correct position in an increasing sequence, we insert them into the correct position in a decreasing sequence.

Pseudocode for Decreasing Insertion Sort

INSERTION-SORT-DECREASING(A)

for j = 2 to A.length

key = A[j]

i = j - 1

// Insert A[j] into the sorted sequence A[1 : j-1] in decreasing order

while i > 0 and A[i] < key

A[i + 1] = A[i]

i = i - 1

A[i + 1] = key

Slightly less efficient version of ascending insertion sort:

`def insertion_sort_descending(numbers):

for i in range(1, len(numbers)):

if (i > 1):

for j in range(i, 0, -1):

if (numbers[j-1] < numbers[j]):

swap(numbers, j-1, j)

def swap(array, idx_a, idx_b):

tmp = array[idx_a]

array[idx_a] = array[idx_b]

array[idx_b] = tmp`

Explanation

The outer loop iterates over each element in the array.

The inner loop shifts elements to the right until the correct position for key is found in the decreasing sequence.

The condition A[i] < key ensures that the sequence remains monotonically decreasing.

Part 2: Linear Search Pseudocode and Correctness Proof

Pseudocode for Linear Search

LINEAR-SEARCH(A, x)

for i = 1 to A.length

if A[i] == x

return i

return NIL

Slightly more explicit linear search:

def linear_search(numbers, value):

ret = None

for i in range(1, len(numbers)):

if (numbers[i] == value):

ret = i

return ret

return ret

Loop Invariant

The loop invariant for the LINEAR-SEARCH algorithm is:

At the start of each iteration of the for loop, the element x does not appear in the subarray A[1 : i-1].

Proof of Correctness Using Loop Invariant

Initialization:

Before the first iteration (i = 1), the subarray A[1 : 0] is empty.

By definition, x does not appear in an empty array.

Thus, the loop invariant holds before the first iteration.

Maintenance:

Assume the loop invariant holds at the start of the i-th iteration, i.e., x does not appear in A[1 : i-1].

During the i-th iteration, the algorithm checks if A[i] == x.

If A[i] == x, the algorithm returns i, and the loop terminates.

If A[i] != x, the loop invariant holds for the next iteration (i + 1).

Thus, the loop invariant is maintained.

Termination:

The loop terminates when either x is found or i exceeds A.length.

If x is found, the algorithm returns the correct index.

If x is not found, the algorithm returns NIL, which is correct because the loop invariant ensures that x does not appear in A[1 : A.length].

Part 3: Adding Two n-Bit Binary Integers

The problem involves adding two n-bit binary integers stored in arrays A and B and storing the result in an (n + 1)-bit array C.

Pseudocode for ADD-BINARY-INTEGERS

ADD-BINARY-INTEGERS(A, B, n)

let C[0 : n] be a new array

carry = 0

for i = n - 1 downto 0

sum = A[i] + B[i] + carry

C[i + 1] = sum % 2 // Store the least significant bit

carry = sum // 2 // Update the carry

C[0] = carry

return C

Explanation

Initialization:

Create a new array C of size n + 1 to store the result.

Initialize carry to 0.

Loop:

Iterate from the least significant bit (LSB) to the most significant bit (MSB).

Compute the sum of A[i], B[i], and carry.

Store the LSB of the sum in C[i + 1].

Update the carry for the next iteration.

Final Carry:

After the loop, store the final carry in C[0].

Return:

Return the array C containing the sum.

`def add_two_bin_numbers(array_a, array_b, size):

ret = []

carry_over = 0

for i in range(size-1, -1, -1):

bit_sum = array_a[i] + array_b[i] + carry_over

if (bit_sum > 1):

carry_over = 1

ret.insert(0, bit_sum % 2)

else:

carry_over = 0

ret.insert(0, bit_sum)

ret.insert(0, carry_over)

return ret

bin_number_a = [1, 0, 1, 0]

bin_number_b = [1, 1, 1, 0]

print ("sum = ", add_two_bin_numbers(bin_number_a, bin_number_b, len(bin_number_b)))

`

sum = [1, 1, 0, 0, 0]

Example

Input:

A = 1, 0, 1, 1

B = 1, 1, 0, 1

n = 4

Execution:

Iteration 1: sum = 1 + 1 + 0 = 2, C[4] = 0, carry = 1

Iteration 2: sum = 1 + 0 + 1 = 2, C[3] = 0, carry = 1

Iteration 3: sum = 0 + 1 + 1 = 2, C[2] = 0, carry = 1

Iteration 4: sum = 1 + 1 + 1 = 3, C[1] = 1, carry = 1

Final Carry: C[0] = 1

Output:

C = 1, 1, 0, 0, 0

Summary

Insertion Sort for Decreasing Order: Modify the comparison condition to sort in decreasing order.

Linear Search: Use a loop invariant to prove correctness.

Binary Addition: Implement a procedure to add two n-bit binary integers and store the result in an (n + 1)-bit array.

2.2 Analyzing algorithms

In some particular cases, we’ll be interested in the average-case

running time of an algorithm. We’ll see the technique of probabilistic

analysis applied to various algorithms throughout this book.

⇒ I’ve seen one such case in matrix manipulation where most common inputs are sporadic matrices.

Exercises

2.2-1

Express the function n3/1000 + 100n2 – 100n + 3 in terms of Θ-notation.

⇒ Θ(n^3)

2.2-2

Consider sorting n numbers stored in array A[1 : n]

⇒

def selection_sort_ascending(numbers):

size = len(numbers)

for i in range(0, size):

local_min_index = size-1

for j in range(size-1, i, -1):

if (numbers[j] < numbers[local_min_index]):

local_min_index = j

if (numbers[local_min_index] < numbers[i]):

swap(numbers, local_min_index, i)

2.2-3

Consider linear search again (see Exercise 2.1-4). How many elements

of the input array need to be checked on the average, assuming that the

element being searched for is equally likely to be any element in the

array? How about in the worst case?

⇒ Average: n/2 (Θ(n)), worst case: n (Θ(n))

2.3 Designing algorithms

Merge-Sort

`def merge_sort_ascending(numbers, start, end):

if (start+1 >= end):

return

merged = []

mid = (start + end) // 2

merge_sort_ascending(numbers, start, mid)

merge_sort_ascending(numbers, mid, end)

left = 0

right = 0

while left < mid-start or right < end-mid:

if(left < mid-start and right < end-mid):

if (numbers[start+left] <= numbers[mid+right]):

merged.append(numbers[start+left])

left = left+1

else:

merged.append(numbers[mid+right])

right = right+1

elif(left < mid-start):

merged.append(numbers[start+left])

left = left+1

elif(right < end-mid):

merged.append(numbers[mid+right])

right = right+1

else:

break

numbers[start:end] = merged

`

Exercises

2.3-1

2.3-2

2.3-3

State a loop invariant for the while loop of lines 12–18 of the MERGE

procedure. Show how to use it, along with the while loops of lines 20–23

and 24–27, to prove that the MERGE procedure is correct.

11// As long as each of the arrays L and R contains an unmerged

element,

// copy the smallest unmerged element back into A[p : r].

12while i < nL and j < nR

13 if L[i] ≤ R[j]

14 A[k] = L[i]

15 i = i + 1

16 else A[k] = R[j]

17 j = j + 1

18 k = k + 1

19// Having gone through one of L and R entirely, copy the

// remainder of the other to the end of A[p : r].

20while i < nL

21 A[k] = L[i]

22 i = i + 1

23 k = k + 1

24while j < nR

25 A[k] = R[j]

26 j = j + 1

27 k = k + 1

Loop invariants help us understand why an algorithm is correct.

When you’re using a loop invariant, you need to show three things:

Initialization: It is true prior to the first iteration of the loop.

Maintenance: If it is true before an iteration of the loop, it remains true

before the next iteration.

Termination: The loop terminates, and when it terminates, the invariant

—usually along with the reason that the loop terminated—gives us a

useful property that helps show that the algorithm is correct.

loop invariant: at the start of each iteration A[p:k-1] consists of the smallest elements of union of L and R at sort order.

when the while loop of lines 12-18 finish, A[p:k-1] consists of the smallest elements of union of L and R at sort order, either while loop of lines 20–23 or 24–27 will be run, L and R has the biggest rest elements in sort order.when the second while loop finish, they are added to the end of A, and A consist of all elements at sort order.

2.3-4

Use mathematical induction to show that when n ≥ 2 is an exact power

of 2, the solution of the recurrence

Is T(n) = n lg n.

When n = 2, T(2) = 2 = 2 * ln 2 = 2 ⇒ so it’s true

When n > 2, let n = 2^(t+1), then T(n) = 2^(t+1) lg 2^(t+1) = 2 2^t (lg 2^t + lg 2)

= 2 2^t lg 2^t + 2 2^t lg 2

= 2 2^t lg 2^t + 2^(t+1)

= 2 T(n/2) + 2^(t+1)

2.3-5

You can also think of insertion sort as a recursive algorithm. In order

to sort A[1 : n], recursively sort the subarray A[1 : n – 1] and then insert

A[n] into the sorted subarray A[1 : n – 1]. Write pseudocode for this

recursive version of insertion sort. Give a recurrence for its worst-case

running time.

INSERTION-RECURSIVE-SORT(A, n)

if 2 = n

if A[1] > A[2]

swap A[1], A[2]

otherwise

INSERTION-RECURSIVE-SORT(A, n-1)

for i = n to 2

if A[i-1] > A[i]

exchange A[i] with A[i – 1]

otherwise

break

The worst-case running time when the input is in the reverse order

= ((n-1)(n-2) / 2 ) * = Θ(n^2)

2.3-6

⇒ If the item is not found at the end of binary search, it’s Θ(lg n).

2.3-7

The while loop of lines 5–7 of the INSERTION-SORT procedure in

Section 2.1 uses a linear search to scan (backward) through the sorted

subarray A[1 : j – 1]. What if insertion sort used a binary search (see

Exercise 2.3-6) instead of a linear search? Would that improve the

overall worst-case running time of insertion sort to Θ(n lg n)?

⇒ No, binary search wouldn’t work here since binary search works on an already-sorted list..

2.3-8

Describe an algorithm that, given a set S of n integers and another

integer x, determines whether S contains two elements that sum to

exactly x. Your algorithm should take Θ(n lg n) time in the worst case.

⇒ First sort the set with the merge sort which takes Θ(n lg n) time and do a linear search for pairs which amount to x, which takes Θ(n) time. Since the highest Θ determines the entire process time, the overall is still Θ(n lg n) time.

For example, let’s suppose we have a sorted integer set [ -30, -12, 0, 1, 2, 9, 23, 28, 92, 192 ]

and we look for pairs whose sum is 30.

Then we start searching with the first number i.e. -30 from the left.

Since its counterpart should be 30 - (-30) = 60, we look for 60 from the right.

However, 60 is not in the set.

So with the “left” pointer, we move on to the next number which is -12, and with the “right” pointer, we look for 30-(-12) = 42.

We keep doing this until the left pointer reaches 2. With that we find a matching pair of (2, 28).

We move on. However, this time, the left pointer and the right point cross each other, so we stop searching.