AI Updates: Semantic Commit for Resolving Intent Conflicts at Scale

This is a Plain English Papers summary of a research paper called AI Updates: Semantic Commit for Resolving Intent Conflicts at Scale. If you like this kind of analysis, you should join AImodels.fyi or follow us on Twitter.

The Challenge of Updating AI Memory as User Intent Changes

How do we update AI memory when user intent changes? This is the central question addressed by researchers who developed SemanticCommit, a system for managing semantic changes with non-local effects when updating repositories of natural language data.

Think of it as making "semantic commits" - committing ideas to projects like we commit code, and dealing with the "merge conflicts" that occur. Inspired by software engineering concepts like impact analysis, this research explores methods for detecting and resolving semantic conflicts within documents that represent user intent.

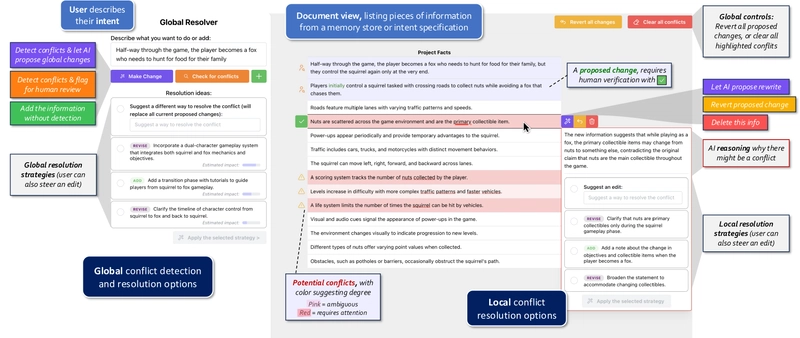

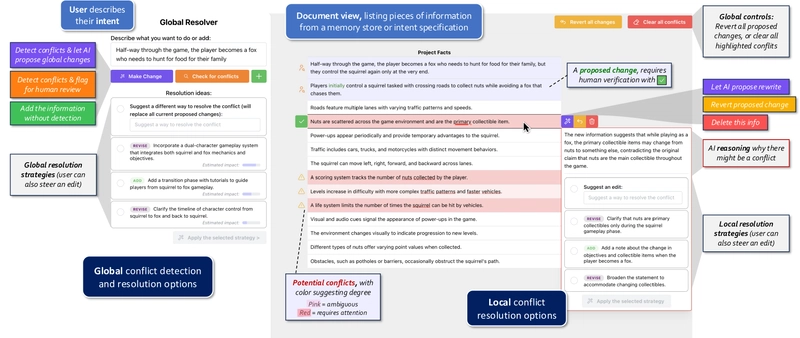

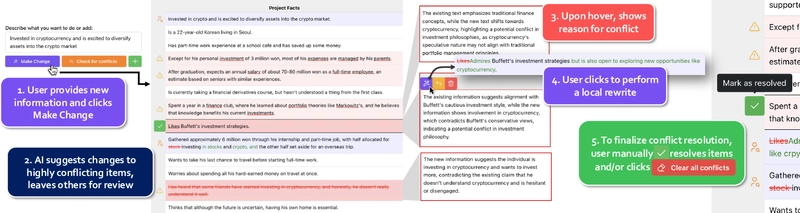

The SemanticCommit interface gives users many ways to detect and resolve conflicts at global and local levels. The screenshot depicts a short list describing a "Squirrel Game," into which the user is integrating a new feature. Potential conflicts are highlighted in red or pink to indicate their degree, and the AI has added a new piece of information to the store and proposed an edit to another piece, both marked for human verification.

The researchers developed an interface called SemanticCommit to understand how users resolve conflicts when updating "intent specifications" such as Cursor Rules (guidelines for AI programming assistants) and game design documents. Behind the scenes, a knowledge graph-based RAG pipeline drives conflict detection, while large language models assist in suggesting resolutions.

In a study with 12 participants, half adopted a workflow of impact analysis - first flagging conflicts without AI revisions, then resolving conflicts locally - despite having access to a global revision feature. This suggests that AI agent interfaces should provide affordances for impact analysis and help users validate AI retrieval independently from generation.

The work speaks to how AI agent designers should think about updating memory as a process that involves human feedback and decision-making rather than pure automation.

Intent Specifications: The Common Ground Between Humans and AI Agents

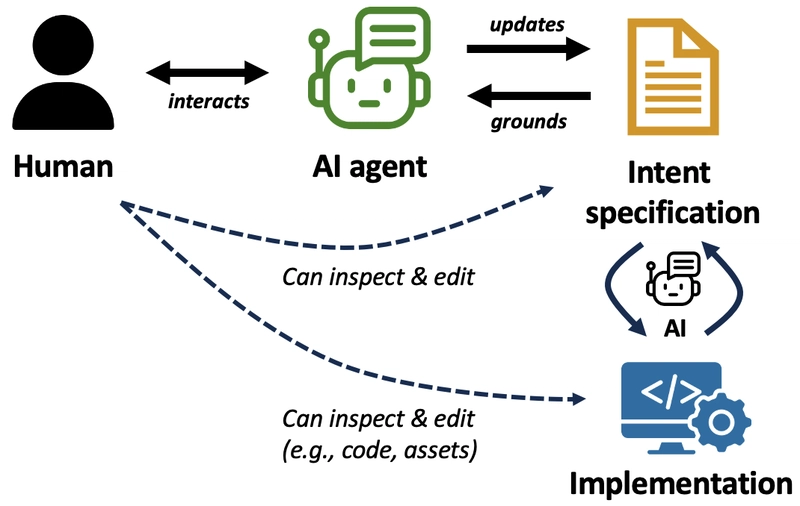

As people increasingly coordinate with AI agent systems, a new type of document is emerging - the "intent specification." These documents serve as an intermediate layer between humans and AI systems, grounding AI decision-making and establishing common ground.

A high-level depiction of interaction between humans and AI assistants for long-term projects. The human-readable intent specification serves as an intermediate layer for enhancing common ground between the human and the AI, and grounds the AI's decision-making.

Intent specifications are already appearing in various forms:

- Cursor Rules in the Cursor programming IDE - markdown documents that AIs read to ground their behavior in user preferences

- CLAUDE.md files in Anthropic's Claude Code - project-level directives that help Claude "remember project conventions, architecture decisions, or coding standards"

- Game design specifications - lists of features and behaviors used by everyday users to coordinate with AI systems

These documents serve multiple purposes. They keep track of details and goals, surface implicit assumptions made by AI, and act as an intermediate representation of an AI system's "understanding" that users can inspect and edit.

However, the integration of new information into an intent specification is not straightforward. People and ideas change. New information can conflict with existing information in ways that are not immediately obvious. This creates the need for semantic navigation tools that help users identify and resolve these conflicts.

Unlike simple document synchronization or version control, which operate on pre-defined structure and syntax, semantic conflict detection operates at the level of meaning and concepts. This requires more sophisticated approaches, especially as intent specifications grow in complexity over time.
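A minimal Python sketch, using the standard-library `difflib` module, shows why line-level tools miss these conflicts. The first rule in the list is a hypothetical placeholder; the other two come from the Cursor Rules example later in this summary. The function name `lines_flagged_by_diff` is illustrative.

```python
import difflib
from typing import List, Set

# Existing intent specification. The first rule is a hypothetical placeholder;
# the second is the rule the Cursor Rules example flags as a conflict.
rules = [
    "Use type hints for all public functions.",
    "Keep commits focused on a single change.",
]
# The user appends the new directive from the same example.
updated = rules + ["Squash commits before pushing a feature branch."]


def lines_flagged_by_diff(old: List[str], new: List[str]) -> Set[int]:
    """Return indices of lines in `old` that a line-based diff marks as replaced or deleted."""
    matcher = difflib.SequenceMatcher(a=old, b=new)
    flagged: Set[int] = set()
    for tag, i1, i2, _j1, _j2 in matcher.get_opcodes():
        if tag in ("replace", "delete"):
            flagged.update(range(i1, i2))
    return flagged


# The diff sees only an inserted line, so no existing rule is flagged.
print(lines_flagged_by_diff(rules, updated))  # set()
```

Recognizing that squashing merges many focused commits into one requires reasoning about what the rules mean, not about which lines changed.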

Designing for Semantic Conflict Detection and Resolution

Based on a review of past literature and learnings from pilot studies, the researchers identified key design goals for interfaces that help users detect and resolve semantic conflicts:

Initial design goals:

- Foresee impact: Users should be able to perform semantic impact analysis—see the potential impact of a change before making any changes

- Detect conflicts: The system should help identify potential conflicts between existing and new information

- Understand conflicts: The system should help users understand the reason for conflicts

- Leave non-conflicting information unchanged: Only touch pieces of information that are in conflict

- Support local changes: Allow inspection of proposed changes in situ

- Assist conflict resolution: Help users resolve conflicts at both global and local levels

- Revert changes: Allow reverting changes at global and local levels

- Edit manually at any time: Enable manual editing or addition of information

- Work at scale: Function effectively for lengthy intent specifications

After two pilot studies, additional design goals emerged:

- Recall-first: Favor recall over precision for conflict detection (users viewed false negatives as catastrophic)

- List view: Use standard document views that present information sequentially

- Visualize degree: Help users understand the degree or importance of a conflict at a glance

- Help recover from AI errors: Support fast iteration when AI makes mistakes

One crucial finding from the pilots was that color-coding of cards marked as conflicts drew user attention entirely away from manual inspection of non-marked cards. This led to a design that favors recall over precision, with a visualization scheme showing "degree" of conflict through color intensity.

The final interface used a three-tier classification system: direct conflicts (red), ambiguous conflicts (pink), and non-conflicts (no highlighting). This graded scheme gives users more nuanced information about which conflicts need their attention.
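One way to encode the three tiers is sketched below. The label strings ("direct", "none") and the rule that any unrecognized label falls into the ambiguous tier are assumptions chosen to reflect the recall-first goal, not details reported by the paper; `ConflictDegree` and `tier_for` are hypothetical names.

```python
from enum import Enum


class ConflictDegree(Enum):
    """The three tiers, each mapped to its highlight color."""
    DIRECT = "red"
    AMBIGUOUS = "pink"
    NONE = None  # no highlighting


def tier_for(label: str) -> ConflictDegree:
    """Map a classifier label to a tier, erring toward recall.

    Assumption: only an explicit "none" hides an item, so an uncertain or
    unexpected label is shown as AMBIGUOUS rather than silently dropped.
    """
    normalized = label.strip().lower()
    if normalized == "direct":
        return ConflictDegree.DIRECT
    if normalized == "none":
        return ConflictDegree.NONE
    return ConflictDegree.AMBIGUOUS


print([tier_for(label).value for label in ["direct", "unsure", "none"]])  # ['red', 'pink', None]
```

Defaulting to the pink tier means a vague model answer still draws the user's eye, which is cheaper to dismiss than a missed conflict is to discover later.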

How SemanticCommit Works: Interface and Workflow

The SemanticCommit interface provides several key operations for managing semantic conflicts:

- Check for Conflicts button: Performs impact analysis, highlighting potential conflicts without suggesting changes

- Make Changes button: Detects conflicts and proposes rewrites for conflicting information

- Add Info button: Allows users to manually add a piece of information
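The split between the first two buttons can be sketched as two functions that share one detection step: impact analysis returns flags and reasons only, while the second also attaches proposed rewrites. All names and signatures here are hypothetical, and the toy detector and rewriter are simple string checks standing in for the retrieval pipeline and LLM described in the implementation section.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

# Hypothetical signatures for the two LLM-backed steps.
Detector = Callable[[str, List[str]], Dict[int, str]]  # (edit, items) -> {index: reason}
Rewriter = Callable[[str, str], str]                   # (edit, item) -> proposed text


@dataclass
class Flag:
    index: int                      # position of the flagged item in the store
    reason: str                     # why the detector thinks it conflicts
    proposal: Optional[str] = None  # proposed rewrite; None for impact analysis


def check_for_conflicts(edit: str, items: List[str], detect: Detector) -> List[Flag]:
    """Impact analysis: flag conflicting items with a rationale, propose no text."""
    return [Flag(i, reason) for i, reason in sorted(detect(edit, items).items())]


def make_changes(edit: str, items: List[str], detect: Detector,
                 rewrite: Rewriter) -> List[Flag]:
    """Same detection step, plus a proposed rewrite for each flagged item.

    Proposals are returned for review; `items` itself is never modified.
    """
    flags = check_for_conflicts(edit, items, detect)
    for flag in flags:
        flag.proposal = rewrite(edit, items[flag.index])
    return flags


# Toy stand-ins so the sketch runs; not how SemanticCommit detects or rewrites.
def toy_detect(edit: str, items: List[str]) -> Dict[int, str]:
    return {i: "mentions Mars" for i, text in enumerate(items) if "Mars" in text}


def toy_rewrite(edit: str, item: str) -> str:
    return item.replace("Mars", "Venus")


items = ["The game is set on Mars.", "The squirrel hides acorns."]
edit = "Set the game on Venus."
print(check_for_conflicts(edit, items, toy_detect))
print(make_changes(edit, items, toy_detect, toy_rewrite))
```

Keeping detection separate from rewriting lets a user validate what was retrieved before any generated text appears.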

Example of the SemanticCommit workflow, showing integration of new information into an AI memory of a South Korean student's financial habits. The workflow includes: describing new information, detecting conflicts, viewing reasoning, making local rewrites, and resolving remaining conflicts.

Local conflict resolution options include:

- Letting the AI rewrite the conflicting item

- Steering a rewrite with specific instructions

- Applying a suggested resolution strategy

- Reverting a change

- Deleting the information
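Local options like these need each piece of information to carry its own undo history. The sketch below assumes a hypothetical `MemoryItem` class that snapshots its state before every local change; how a rewrite is produced (free AI rewrite, steered rewrite, or resolution strategy) is out of scope, so all three arrive through the same `accept_rewrite` method.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class MemoryItem:
    """One piece of information in the store, with its own undo history."""
    text: str
    deleted: bool = False
    # Snapshots of (text, deleted) taken before each local change.
    history: List[Tuple[str, bool]] = field(default_factory=list)

    def _snapshot(self) -> None:
        self.history.append((self.text, self.deleted))

    def accept_rewrite(self, new_text: str) -> None:
        """Apply a rewrite to this item only, whether the AI wrote it freely,
        followed a user's steering instruction, or applied a resolution strategy."""
        self._snapshot()
        self.text = new_text

    def delete(self) -> None:
        """Mark the item as deleted; the text is kept so it can be restored."""
        self._snapshot()
        self.deleted = True

    def revert(self) -> None:
        """Undo the most recent local change, if there is one."""
        if self.history:
            self.text, self.deleted = self.history.pop()


item = MemoryItem("The user likes Warren Buffett's investment strategies.")
item.accept_rewrite("The user likes Warren Buffett's strategies but is now exploring crypto.")
item.revert()  # back to the original wording
item.delete()
item.revert()  # deletion undone
print(item.text, item.deleted)
```

Because every change is scoped to one item, reverting a bad AI rewrite never disturbs edits the user has already accepted elsewhere.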

Three examples demonstrate the system in action:

1. Investment Advice Agent Memory

Consider an AI agent for investment advice that has accumulated memory about a South Korean college student. When the user invests in cryptocurrency and expresses excitement about diversifying more assets into crypto, SemanticCommit detects potential conflicts with existing information, including that the user likes Warren Buffett's investment strategies (Buffett has called cryptocurrencies "rat poison squared").

2. AI Software Engineer Rules

Cursor Rules adapted from the Instructor library, loaded into the SemanticCommit UI. The user has added a new directive to squash commits before pushing a feature branch; the system adds the new rule and flags potential conflicts.

When a user adds a new directive to "squash commits before pushing a feature branch" to a list of Cursor Rules, SemanticCommit highlights potential conflicts with existing rules, such as "Keep commits focused on a single change."

3. Game Design Document

When a game designer changes their game setting from Mars to Venus, the AI detects this is a significant change and requests clarification from the user before proceeding. After clarification, conflict detection proceeds, with the AI making obvious changes (like changing "Mars" to "Venus") while flagging other potential conflicts for review, such as references to Mars-specific features like sandstorms.

These examples illustrate that conflicts:

- May require general world-knowledge to detect

- May be hard to resolve

- May involve creative decision-making, not just mechanical changes

The SemanticCommit interface is implemented in React and TypeScript, with a Flask Python backend for the knowledge graph-based retrieval architecture. The researchers used ChainForge to evaluate and iterate on prompts, optimizing performance against their benchmarks.

Technical Implementation: Knowledge Graph-Based Conflict Detection

To enable conflict resolution at scale, the researchers implemented a sophisticated back-end system using a knowledge graph-based approach.

During early prototyping, they found that simple methods—giving the entire context to the LLM, or generating string-replace operations—were prone to miss conflicts or introduce superfluous changes. These techniques rely on a single prediction, which takes as input the entire memory store and produces either a reformulated version or a set of suggested edits.
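To see how string-replace edits (the style used by the InkSync baseline) can both miss conflicts and introduce superfluous changes, consider the sketch below. The `(find, replace)` operation format and the "Marshmallow Hill" level name are assumptions for illustration, not InkSync's actual interface or data from the paper.

```python
from typing import List, Tuple

# Hypothetical operation format: each op is (find, replace), applied literally.
ReplaceOp = Tuple[str, str]


def apply_replace_ops(chunks: List[str], ops: List[ReplaceOp]) -> List[str]:
    """Apply every find/replace pair to every chunk, returning a new list."""
    result = []
    for chunk in chunks:
        for find, replace in ops:
            chunk = chunk.replace(find, replace)
        result.append(chunk)
    return result


spec = [
    "The game is set on Mars.",
    "Sandstorms sweep the red plains.",       # Mars-specific, but never says "Mars"
    "Marshmallow Hill is the first level.",  # hypothetical level name
]
# A plausible model output for "move the setting to Venus".
ops = [("Mars", "Venus")]
print(apply_replace_ops(spec, ops))
# ['The game is set on Venus.', 'Sandstorms sweep the red plains.', 'Venushmallow Hill is the first level.']
```

The sandstorm line, the kind of Mars-specific reference the game design example flags for review, slips through untouched, while an unrelated name is mangled.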

Instead, they implemented a retrieval-augmented generation (RAG) approach consisting of two phases:

- Pre-processing phase: Constructs a knowledge graph by extracting entities from the memory store and linking them

- Inference phase: Detects semantic conflicts using a multi-stage information retrieval pipeline:

  - Retrieval: Finds relevant chunks of information using the knowledge graph

  - Conflict classification: Identifies which retrieved chunks conflict with the edit
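A minimal Python sketch of the two phases follows. It is not the authors' implementation: entity extraction uses a hand-written lexicon instead of an LLM, the one-hop expansion through co-mentioned entities is an assumption about how such a graph might be traversed, and `toy_classify` is a hypothetical stand-in for the LLM conflict classifier. The chunks reuse the Mars-to-Venus game design scenario.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Set

# Assumption: a tiny term -> entity lexicon replaces LLM-based entity extraction.
LEXICON = {
    "mars": "Mars", "martian": "Mars", "venus": "Venus",
    "sandstorm": "Sandstorm", "rover": "Rover", "squirrel": "Squirrel",
}


def extract_entities(text: str) -> Set[str]:
    lowered = text.lower()
    return {entity for term, entity in LEXICON.items() if term in lowered}


def build_graph(chunks: List[str]) -> Dict[str, Set[int]]:
    """Pre-processing: link each entity to the ids of the chunks that mention it."""
    graph: Dict[str, Set[int]] = defaultdict(set)
    for i, chunk in enumerate(chunks):
        for entity in extract_entities(chunk):
            graph[entity].add(i)
    return graph


def retrieve(edit: str, chunks: List[str], graph: Dict[str, Set[int]], hops: int = 1) -> Set[int]:
    """Retrieval: chunks sharing an entity with the edit, expanded `hops` times
    through entities that co-occur in already-retrieved chunks."""
    frontier = extract_entities(edit)
    seen = set(frontier)
    hits: Set[int] = set()
    for _ in range(hops + 1):
        new_hits = set().union(*(graph.get(e, set()) for e in frontier)) - hits
        hits |= new_hits
        frontier = set().union(*(extract_entities(chunks[i]) for i in new_hits)) - seen
        seen |= frontier
    return hits


def detect_conflicts(edit: str, chunks: List[str], graph: Dict[str, Set[int]],
                     classify: Callable[[str, str], str]) -> Dict[int, str]:
    """Conflict classification runs only on retrieved chunks; the rest are untouched."""
    return {i: classify(edit, chunks[i]) for i in sorted(retrieve(edit, chunks, graph))}


chunks = [
    "The game is set on Mars.",
    "Sandstorms sweep the Martian surface.",
    "The player drives a rover through sandstorms.",
    "The squirrel hides acorns.",
]
graph = build_graph(chunks)


def toy_classify(edit: str, chunk: str) -> str:
    # Hypothetical stand-in for the LLM classifier.
    lowered = chunk.lower()
    return "direct" if "mars" in lowered or "martian" in lowered else "ambiguous"


print(detect_conflicts("Move the setting from Mars to Venus.", chunks, graph, toy_classify))
# {0: 'direct', 1: 'direct', 2: 'ambiguous'}
```

The hop through "Sandstorm" pulls in the rover chunk even though it never mentions Mars, while the squirrel chunk shares no entity path with the edit and is never sent to the classifier.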

To evaluate their approach, they created benchmarks across four domains:

| Benchmark | Chunks (Ch) | Modifications (M) | Conflicts per modification, CS (min, median, max) |
|---|---|---|---|
| Labyrinth | 35 | 17 | (0, 4, 10) |
| Mars | 30 | 25 | (0, 2, 14) |
| FinMem | 30 | 17 | (0, 4, 10) |
| CursorRules | 65 | 19 | (0, 3, 25) |

Benchmark details including number of chunks (Ch), number of prepared modifications (M), and conflict statistics (CS) (min, median, max) across modifications.

They compared their approach against two simpler methods:

- DropAllDocs: Places all documents in the model's context and classifies them directly, with no retrieval stage

- InkSync: Generates a list of string-replace operations

The results showed that SemanticCommit achieved 1.6× and 2.2× higher recall than DropAllDocs and InkSync, respectively, while retaining similar accuracy. This better matches the preference observed in the pilot studies by reducing the risk of false negatives (missed conflicts).
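Why recall, rather than accuracy, separates the methods: with a median of only 2 to 4 conflicts among 30 to 65 chunks, accuracy is dominated by the many non-conflicting chunks, so a method that misses most conflicts can still score well. The numbers in this sketch are made up for illustration and are not results from the paper; treating recall as 1.0 when a modification has no true conflicts is also an assumption.

```python
from typing import Set, Tuple


def recall_and_accuracy(predicted: Set[int], gold: Set[int], n_chunks: int) -> Tuple[float, float]:
    """Chunk-level recall and accuracy for a single modification."""
    tp = len(predicted & gold)
    fp = len(predicted - gold)
    fn = len(gold - predicted)
    tn = n_chunks - tp - fp - fn
    # Assumption: with no true conflicts, nothing can be missed, so recall is 1.0.
    recall = tp / len(gold) if gold else 1.0
    accuracy = (tp + tn) / n_chunks
    return recall, accuracy


# Hypothetical modification: 30 chunks, 4 of which truly conflict.
gold = {1, 2, 3, 4}
over_flagging = recall_and_accuracy({1, 2, 3, 4, 5, 6}, gold, 30)  # recall 1.0, accuracy 28/30
under_flagging = recall_and_accuracy({1}, gold, 30)                # recall 0.25, accuracy 27/30
print(over_flagging, under_flagging)
```

Both methods land near 0.9 accuracy, yet one misses three of the four conflicts, exactly the failure the pilot participants called catastrophic.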

The researchers selected GPT-4o for their implementation: it performed slightly better than GPT-4o-mini at comparable latency, and ran twice as fast as o3-mini.

User Study: How People Integrate New Information

To understand how users integrate new information in practice, the researchers conducted a controlled within-subjects study with 12 participants, comparing SemanticCommit with OpenAI's ChatGPT Canvas as a baseline.

Participants completed two tasks (updating a game design document and integrating new information into financial advice AI agent memory) using both tools, with tool order and task order counterbalanced.

Participants' self-reported cognitive load and preference scores comparing the two conditions.

Key findings from the study:

1. Preferred Workflows

Participants adopted three main workflows with SemanticCommit (the counts below sum to more than 12, so some participants used more than one):

- Impact analysis first (6 participants): Started by checking for conflicts to gain insight into the impact before making changes

- Immediate changes with conflict review (5 participants): Started with the Make Changes feature to see conflicts and potential changes at once

- Skim to resolve false positives before proceeding (4 participants): Quickly reviewed all conflicts to dismiss false positives before addressing actual conflicts

In contrast, with Canvas, 8 participants predominantly used global prompts, instructing ChatGPT to perform edits across the entire document.

2. Improved Ability to Catch Semantic Conflicts

Nine participants explicitly stated that SemanticCommit was better at identifying conflicts compared to Canvas. This preference stemmed from:

- The granularity of information and color-highlighted conflicts enabling easy identification

- The rationale provided by SemanticCommit helping users understand why a conflict occurred

3. Control and Understanding

Participants reported feeling more in control with SemanticCommit, appreciating the ability to:

- Choose between local and global edits

- See all conflicts at once before making changes

- Understand the AI's reasoning for marking conflicts

4. Engagement Despite Similar Workload

Despite SemanticCommit requiring more explicit action from users to review and resolve conflicts, participants did not report significantly higher workload compared to Canvas, suggesting that the benefits of increased control offset the costs of additional interaction.

Design Implications for AI Agent Interfaces

Based on the study findings, several important design implications emerge for AI agent interfaces:

AI agent interfaces should help users perform impact analysis

The most surprising finding was participants' preference for performing impact analysis: finding conflicts first before making any edits. Instead of automatically applying changes and prompting users to verify afterward, AI agent systems should encourage users to first understand the impact of the change and only then choose to explicitly suggest and/or trigger changes.

This bears important implications for current AI agent interfaces, which tend to first let the AI make changes, and then have users validate them. For instance, in AI-powered programming IDEs like Cursor and Visual Studio, the agent makes changes across documents and then presents the revisions for human review.

Instead, AI agent interfaces should provide affordances for impact analysis: helping users foresee the impact or location of AI changes before necessarily suggesting concrete changes. This reflects the principle of feedforward in communication theory—where a communicator provides "the context of what one was planning to talk about" prior to talking about it, in order to "pre-test the impact of its output" on the listener.

Impact analysis is not simply about pausing before enacting a change. It's also about weighing how extensive a change might be, the work required, and potential unintended side-effects. Users can use impact analysis to back out of an in-progress change before damage is done or they become overloaded.

Let the user walk the spectrum of control

When designing mixed-initiative systems where users and AI collaborate, there's a trade-off between control and efficiency. SemanticCommit's affordances for adjustable autonomy enabled users to dynamically select their preferred balance between automation and manual oversight depending on the context, complexity of tasks, trust in the AI, or familiarity with the content.

This suggests that AI agent interfaces should offer both highly controlled (step-by-step approvals) and streamlined (global changes) workflows to adapt to varying user needs. Providing detailed explanations about identified conflicts and recommended resolutions empowers users to make informed decisions.

Start global, then accelerate local review

Though users started globally, they preferred to make local edits, liberally using local options rather than global steering prompts and global resolution strategies. In the baseline Canvas condition, it was the opposite: users appeared resigned to global steering in chat and became frustrated by lack of granular control.

This suggests future interfaces for semantic conflict resolution should better support and accelerate local review, rather than focusing on features for global steering after the initial interaction.

Future Directions and Connections

The research opens up several avenues for future work:

Interfaces and APIs for management of AI memory of user intent

What would a more assistive command-line interface for memory updates look like? When and how should systems raise conflicts for user review? What rises to the level of "direct conflict" that must be addressed, versus an ambiguity that the AI could still proceed under?

Cognitive forcing functions to mitigate over-reliance

Systems should incorporate mechanisms that deliberately encourage active user involvement, fostering sustained cognitive engagement and reducing the likelihood of critical oversights resulting from blind trust in AI-generated outputs.

Interfaces to support requirements-oriented prompting

As "requirements-oriented" prompting gains popularity, HCI will need to focus on training users to be good requirements engineers and helping them update requirements to reduce conflicts, inconsistencies, and ambiguities.

Semantic commits for long-form writing

The challenges addressed in this research also apply to long-form writing tasks like novel writing, where changing a scene might have ripple effects hundreds of pages later.

This research contributes to our understanding of how humans and AI systems can collaboratively update intent specifications, emphasizing that AI memory management should be a process involving human feedback and decision-making rather than pure automation.

Human-readable intent specifications are emerging as a crucial mechanism for grounding AI decision-making in user intent. As these specifications grow in complexity over time, tools like SemanticCommit will become increasingly important for maintaining their consistency, accuracy, and alignment with user goals.
