A2A vs MCP: Two complementary protocols for the emerging agent ecosystem

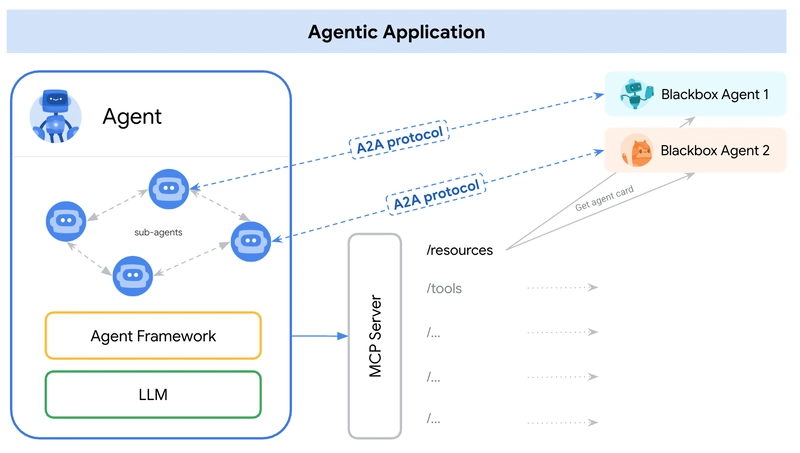


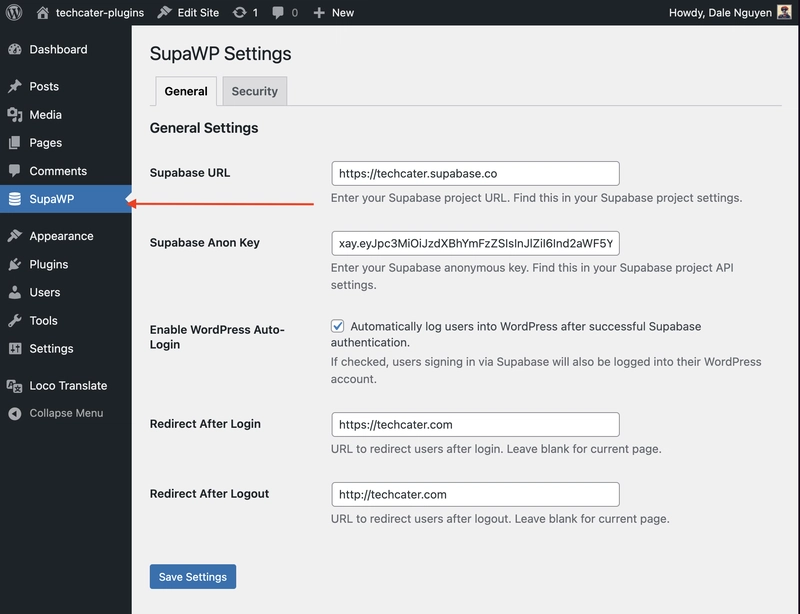

This article is created by Logto, an open-source solution that helps developers implement secure auth in minutes instead of months.

The increasing adoption of AI agents — autonomous or semi-autonomous software entities that perform reasoning and actions on behalf of users — is giving rise to a new layer in application architecture.

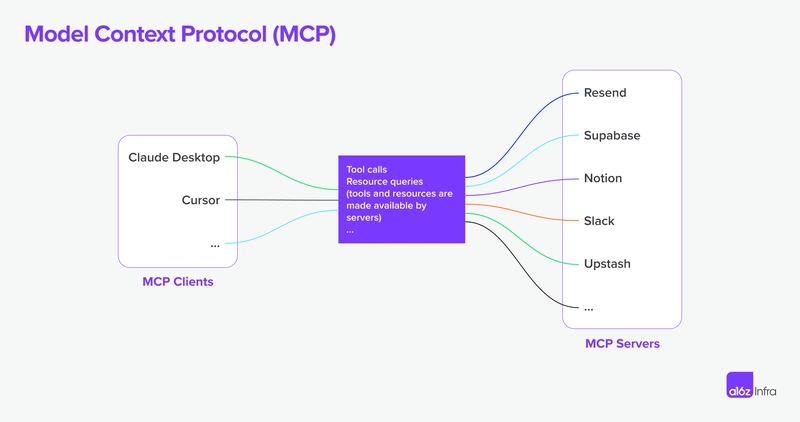

In early 2025, two distinct protocols emerged to address this — A2A (Agent-to-Agent) and MCP (Model Context Protocol). One simple way to understand their roles is:

A2A: How do agents interact with each other

MCP: How do agents interact with tools or external context

reference: https://google.github.io/A2A/#/topics/a2a_and_mcp

They address the core challenge of building systems with multiple agents, multiple LLMs, and multiple sources of context — all needing to collaborate.

One way to frame it is: “MCP provides vertical integration (application-to-model), while A2A provides horizontal integration (agent-to-agent).”

Whether you’re a developer or not, anyone building AI products or agentic systems should understand the foundational architecture — because it shapes how we design products, user interactions, ecosystems, and long-term growth.

This article introduces both protocols in a simple, easy-to-understand way and highlights key takeaways for developers and AI product builders.

What is A2A (Agent-to-Agent)?

A2A (Agent-to-Agent) is an open protocol developed by Google and over 50 industry partners. Its purpose is to enable interoperability between agents — regardless of who built them, where they’re hosted, or what framework they use.

A2A protocol mechanism

A2A uses JSON-RPC 2.0 over HTTP(S) as the communication mechanism, with support for Server-Sent Events (SSE) to stream updates.
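To make the transport concrete, here is a minimal sketch of a JSON-RPC 2.0 request envelope of the kind a client agent would POST over HTTP(S). The envelope fields (`jsonrpc`, `id`, `method`, `params`) come from JSON-RPC 2.0 itself. The method name `tasks/send` and the layout of `params` are assumptions for illustration and should be checked against the current A2A specification.

```python
import json
import uuid
from typing import Optional


def build_jsonrpc_request(method: str, params: dict, request_id: Optional[str] = None) -> dict:
    """Wrap a method call in a JSON-RPC 2.0 request envelope."""
    return {
        "jsonrpc": "2.0",                       # fixed version marker required by JSON-RPC 2.0
        "id": request_id or str(uuid.uuid4()),  # lets the caller match the response to this request
        "method": method,
        "params": params,
    }


# Assumed method name and params shape, for illustration only.
request = build_jsonrpc_request(
    "tasks/send",
    {
        "id": "task-123",
        "message": {"role": "user", "parts": [{"type": "text", "text": "Generate the Q1 report"}]},
    },
    request_id="req-1",
)

body = json.dumps(request)  # this string would be the HTTP POST body
```

Streaming over SSE would reuse the same envelope, with the server keeping the response open and sending incremental events.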

A2A communication models

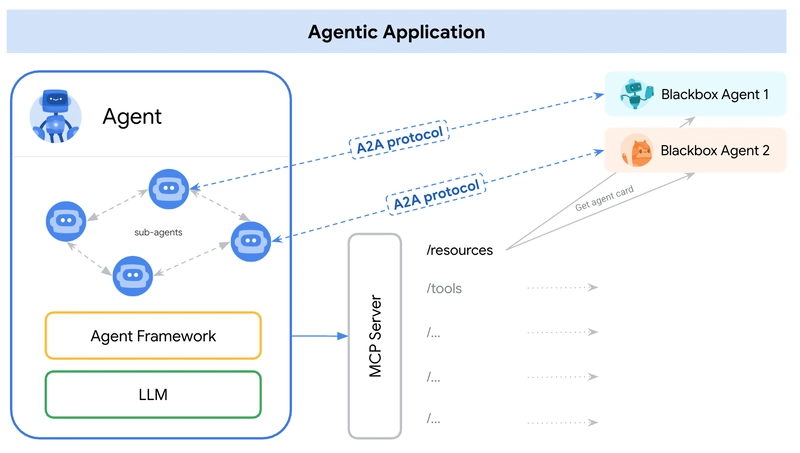

A2A defines a structured model for how two agents interact. One agent takes the role of the “client” agent, which initiates a request or task, and another acts as the “remote” agent, which receives the request and attempts to fulfill it. The client agent may first perform capability discovery to figure out which agent is best suited for a given job.

This raises a question: how do agents discover each other? Each agent can publish an Agent Card (a JSON metadata document, often hosted at a standard URL like /.well-known/agent.json) describing its capabilities, skills, API endpoints, and auth requirements.

By reading an Agent Card, a client agent can identify a suitable partner agent for the task at hand – essentially a directory of what that agent knows or can do. Once a target agent is chosen, the client agent formulates a Task object to send over.
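As a rough sketch of discovery, the snippet below picks a remote agent by matching a required skill against a set of Agent Cards. The cards, hostnames, skill IDs, and field names (`skills`, `authentication`) are hypothetical and only loosely modeled on the description above. In a real system the cards would be fetched from each agent's /.well-known/agent.json.

```python
from typing import Optional

# Hypothetical Agent Cards, hard-coded here instead of being fetched over HTTP.
AGENT_CARDS = [
    {
        "name": "Report Agent",
        "url": "https://report-agent.example.com",
        "skills": [{"id": "generateReport", "description": "Generate a report"}],
        "authentication": {"schemes": ["oauth2"]},
    },
    {
        "name": "Data Agent",
        "url": "https://data-agent.example.com",
        "skills": [{"id": "retrieveData", "description": "Retrieve data"}],
        "authentication": {"schemes": ["apiKey"]},
    },
]


def find_agent_for_skill(cards: list, skill_id: str) -> Optional[dict]:
    """Return the first card that advertises the requested skill, or None."""
    for card in cards:
        if any(skill["id"] == skill_id for skill in card.get("skills", [])):
            return card
    return None


chosen = find_agent_for_skill(AGENT_CARDS, "retrieveData")
```

Here `chosen` is the Data Agent's card, which also tells the client which auth scheme to prepare before sending a task.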

reference: https://google.github.io/A2A/#/

Task management

All interaction in A2A is oriented around performing tasks. A task is a structured object (defined by the protocol’s schema) that includes details of the request and tracks its state.

In A2A, each agent plays one of two roles:

- Client Agent: initiates a task

- Remote Agent: receives and processes the task

Tasks can include any form of work: generate a report, retrieve data, initiate a workflow. Results are returned as artifacts, and agents can send structured messages during execution to coordinate or clarify.
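One way to picture "a structured object that tracks its state" is a small state machine. The sketch below is a simplification under stated assumptions: the state names and the allowed transitions are illustrative, not copied from the A2A schema.

```python
from dataclasses import dataclass, field

# Assumed lifecycle: which states may follow which. Terminal states allow no transitions.
ALLOWED_TRANSITIONS = {
    "submitted": {"working", "failed", "canceled"},
    "working": {"input-required", "completed", "failed", "canceled"},
    "input-required": {"working", "canceled"},
    "completed": set(),
    "failed": set(),
    "canceled": set(),
}


@dataclass
class Task:
    id: str
    state: str = "submitted"
    artifacts: list = field(default_factory=list)

    def transition(self, new_state: str) -> None:
        """Move to new_state, rejecting moves the lifecycle does not allow."""
        if new_state not in ALLOWED_TRANSITIONS[self.state]:
            raise ValueError(f"Cannot go from {self.state!r} to {new_state!r}")
        self.state = new_state


task = Task(id="task-123")
task.transition("working")           # the remote agent picks up the task
task.artifacts.append("report.pdf")  # results are attached as artifacts
task.transition("completed")
```

Keeping the transitions in one table makes it easy for both agents to agree on what a status update can mean at any point.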

Collaboration and content negotiation

A2A supports more than simple task requests — agents can exchange rich, multi-part messages that include text, JSON, images, video, or interactive content. This enables format negotiation based on what each agent can handle or display.

For example, a remote agent could return a chart as either raw data or an image, or request to open an interactive form. This design supports flexible, modality-agnostic communication, without requiring agents to share internal tools or memory.
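The chart example can be sketched as a simple negotiation: the remote agent offers the same result in several formats, and the client picks the first one it can handle. The `mime_type` field and the part layout are hypothetical, chosen only to illustrate the idea.

```python
from typing import Optional

# The remote agent offers one chart in two representations (hypothetical part layout).
parts = [
    {"mime_type": "image/png", "uri": "https://example.com/chart.png"},
    {"mime_type": "application/json", "data": {"x": [1, 2, 3], "y": [4, 5, 6]}},
]


def pick_part(parts: list, accepted: list) -> Optional[dict]:
    """Return the part matching the client's most preferred accepted type, or None."""
    for wanted in accepted:  # the client's preference order wins
        for part in parts:
            if part["mime_type"] == wanted:
                return part
    return None


# A headless agent prefers raw data; a UI-facing agent prefers the rendered image.
headless_choice = pick_part(parts, ["application/json", "text/plain"])
ui_choice = pick_part(parts, ["image/png", "application/json"])
```

If nothing matches, `pick_part` returns `None`, which is the point where a client could ask the remote agent for a different format.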

Use case example

Here’s a real-world example of how A2A could be used in an enterprise scenario:

A new employee is hired at a large company. Multiple systems and departments are involved in onboarding:

- HR needs to create a record and send a welcome email

- IT needs to provision a laptop and company accounts

- Facilities needs to prepare a desk and access badge

Traditionally, these steps are handled manually or through tightly coupled integrations between internal systems.

Instead of custom APIs between every system, each department exposes its own agent using the A2A protocol:

| Agent | Responsibility |

|---|---|

| hr-agent.company.com | Create employee record, send documents |

| it-agent.company.com | Create email account, order laptop |

| facilities-agent.company.com | Assign desk, print access badge |

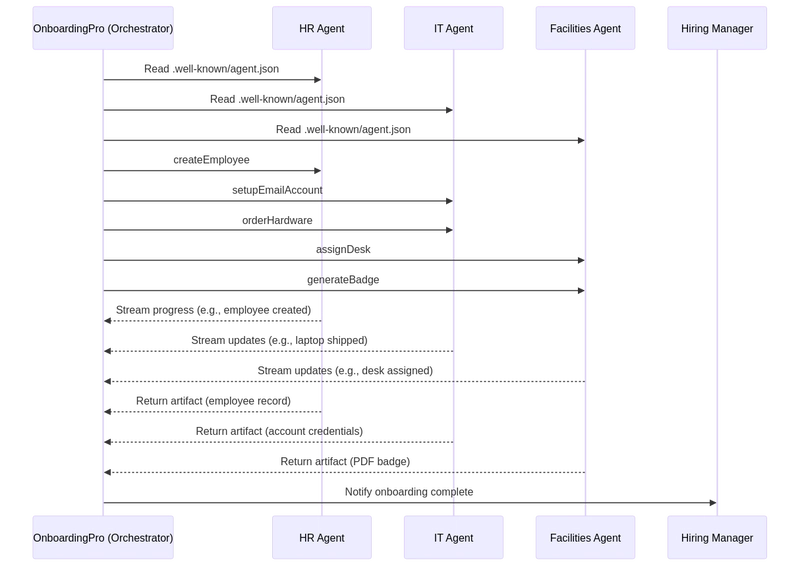

A multi-agent system — let’s call it OnboardingPro (e.g. onboarding-agent.company.com) — coordinates the entire onboarding workflow.

- Discovery: It reads each agent’s .well-known/agent.json to understand capabilities and auth.

- Task delegation:

  - Sends a createEmployee task to the HR agent.

  - Sends setupEmailAccount and orderHardware tasks to the IT agent.

  - Sends assignDesk and generateBadge to the Facilities agent.

- Streaming updates: Agents stream progress back using Server-Sent Events (e.g. “laptop shipped”, “desk assigned”).

- Artifact collection: Final results (e.g. PDF badge, confirmation emails, account credentials) are returned as A2A artifacts.

- Completion: The OnboardingPro notifies the hiring manager when onboarding is complete.
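The delegation and artifact-collection steps above can be sketched as a small orchestrator loop. This is only a sketch: `send_task` stands in for a real A2A call over HTTP, and `fake_send_task`, the result shape, and the artifact strings are hypothetical. The agent hostnames and task names are the ones from the example.

```python
from typing import Callable

# Which task goes to which agent, in the order OnboardingPro would send them.
PLAN = [
    ("hr-agent.company.com", "createEmployee"),
    ("it-agent.company.com", "setupEmailAccount"),
    ("it-agent.company.com", "orderHardware"),
    ("facilities-agent.company.com", "assignDesk"),
    ("facilities-agent.company.com", "generateBadge"),
]


def run_onboarding(employee: dict, send_task: Callable[[str, str, dict], dict]) -> list:
    """Delegate every planned task and collect the returned artifacts."""
    artifacts = []
    for agent, task_name in PLAN:
        result = send_task(agent, task_name, employee)
        if result["state"] != "completed":
            raise RuntimeError(f"{task_name} on {agent} ended in state {result['state']}")
        artifacts.extend(result["artifacts"])
    return artifacts


def fake_send_task(agent: str, task_name: str, payload: dict) -> dict:
    """Hypothetical transport stub: pretends every remote agent succeeds immediately."""
    return {"state": "completed", "artifacts": [f"{task_name}:{payload['name']}"]}


collected = run_onboarding({"name": "Ada"}, fake_send_task)
```

Passing the transport in as a function keeps the workflow logic separate from how tasks are actually sent, so the stub can later be swapped for a real client.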

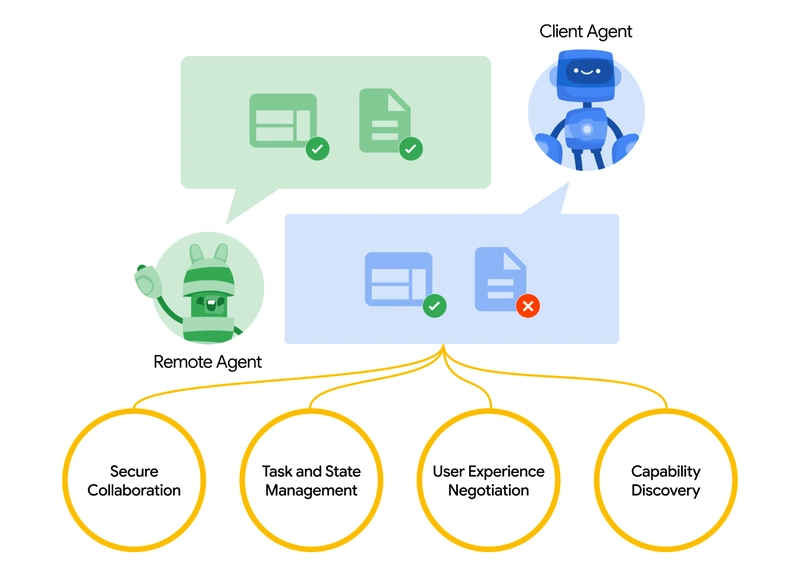

What is MCP (Model Context Protocol)?

MCP (Model Context Protocol), developed by Anthropic, addresses a different problem: how external applications can provide structured context and tools to a language model-based agent at runtime.

Rather than enabling inter-agent communication, MCP focuses on the context window — the working memory of an LLM. Its goal is to:

- Dynamically inject relevant tools, documents, API functions, or user state into a model’s inference session

- Let models call functions or fetch documents without hardcoding the prompt or logic

MCP key architecture

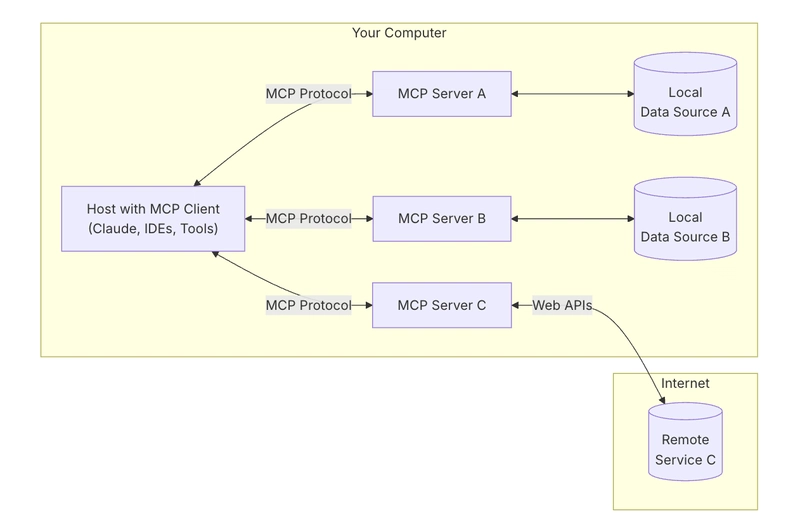

To understand MCP, you first need to understand the overall architecture — how all the parts work together.

MCP Host: “The AI you’re talking to”

Think of the MCP Host as the AI app itself — like Claude Desktop or your coding assistant.

It’s the interface you’re using — the place where you type or talk.

It wants to pull in tools and data that help the model give better answers.

MCP Client: “The connector”

The MCP Client is the software piece that connects your AI host (like Claude) to the outside world. It’s like a switchboard — it manages secure, one-on-one connections with different MCP Servers. When the AI wants access to something, it goes through the client.

It’s helpful to think of tools like ChatGPT, Claude chat, or Cursor IDE as MCP hosts — they provide the interface you interact with. Behind the scenes, they use an MCP client to connect to different tools and data sources through MCP servers.

reference: https://modelcontextprotocol.io/introduction

MCP Server: “The tool provider”

An MCP Server is a small, focused program that exposes one specific tool or capability — like:

- Searching files on your computer

- Looking up data in a local database

- Calling an external API (like weather, finance, calendar)

Each server follows the MCP protocol, so the AI can understand what it can do and how to call it.
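To illustrate how a server lets the AI "understand what it can do and how to call it", here is a toy, in-process sketch of a server that answers two JSON-RPC methods: one that lists tools with a JSON Schema for their inputs, and one that calls a tool. The `get_weather` tool and its canned reply are hypothetical, and this is not the official MCP SDK. Treat the message shapes as an approximation to be checked against the MCP specification.

```python
# A hypothetical tool: a description, a JSON Schema for its input, and a handler.
TOOLS = {
    "get_weather": {
        "description": "Return a short weather summary for a city.",
        "inputSchema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
        "handler": lambda args: f"Sunny in {args['city']}",  # canned answer, no real API call
    }
}


def handle_request(request: dict) -> dict:
    """Answer a JSON-RPC 2.0 request for tool discovery or tool invocation."""
    method = request["method"]
    if method == "tools/list":
        result = {
            "tools": [
                {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
                for name, t in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        tool = TOOLS[request["params"]["name"]]
        text = tool["handler"](request["params"]["arguments"])
        result = {"content": [{"type": "text", "text": text}]}
    else:
        # -32601 is the standard JSON-RPC 2.0 "Method not found" error code.
        return {"jsonrpc": "2.0", "id": request["id"], "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


listing = handle_request({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
reply = handle_request({
    "jsonrpc": "2.0", "id": 2, "method": "tools/call",
    "params": {"name": "get_weather", "arguments": {"city": "Oslo"}},
})
```

The listing step is what lets a model choose a tool at runtime instead of having it hardcoded into the prompt.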

Local data sources: “Your own files & services”

Some MCP Servers connect to things on your own machine — like:

- Documents and folders

- Code projects

- Databases or local apps

This lets the AI search, retrieve, or compute things without uploading your data to the cloud.

Remote services: “APIs & Online tools”

Other MCP Servers are connected to the internet — they talk to:

- APIs (e.g. Stripe, Notion, GitHub)

- SaaS tools

- Company databases in the cloud

So the AI can say, for example: “Call the GitHub server and fetch the list of open PRs.”

MCP now supports connecting to remote MCP servers, which means an MCP client can gain more powerful capabilities. In theory:

“With the right set of MCP servers, users can turn every MCP client into an ‘everything app.’”

reference: https://a16z.com/a-deep-dive-into-mcp-and-the-future-of-ai-tooling/

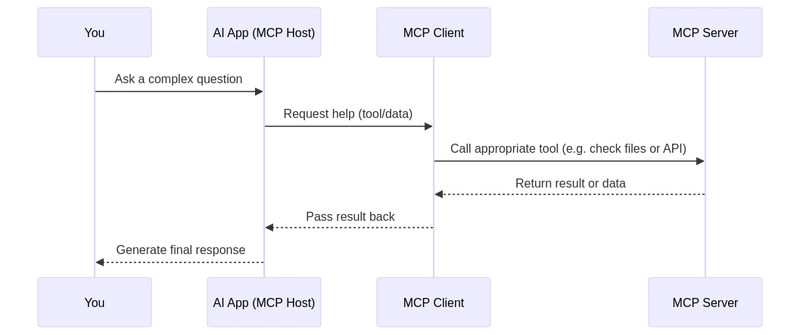

Putting it all together

Now let’s use a diagram to see how everything works together.

- You ask the AI for something complex

- The AI (the host) asks the client for help

- The client calls the right MCP server — maybe one that checks files or hits an API

- The server sends back data or runs a function

- The result flows back to the model to help it complete the task
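The steps above can be sketched with plain Python objects standing in for the three roles. Everything here is a hypothetical stand-in: `ToolServer` plays an MCP server, and `Host` keeps one client connection per server and routes each tool call to whichever server offers it. Real hosts talk to servers over a transport rather than direct method calls.

```python
class ToolServer:
    """Stand-in for an MCP server: a named set of callable tools."""

    def __init__(self, tools: dict):
        self.tools = tools

    def list_tools(self) -> list:
        return sorted(self.tools)

    def call_tool(self, name: str, arguments: dict):
        return self.tools[name](**arguments)


class Host:
    """Stand-in for the host and its clients: one connection per server."""

    def __init__(self):
        self.connections = {}  # server name -> connected server

    def connect(self, server_name: str, server: ToolServer) -> None:
        self.connections[server_name] = server

    def call(self, tool_name: str, arguments: dict):
        """Find the server that offers the tool and forward the call to it."""
        for server in self.connections.values():
            if tool_name in server.list_tools():
                return server.call_tool(tool_name, arguments)
        raise LookupError(f"No connected server offers {tool_name!r}")


host = Host()
host.connect("files", ToolServer({"search_files": lambda pattern: [f"notes/{pattern}.md"]}))
host.connect("github", ToolServer({"list_open_prs": lambda repo: [f"{repo}#1", f"{repo}#2"]}))

# The model decides it needs the open PRs; the host routes the call and gets data back.
result = host.call("list_open_prs", {"repo": "acme/web"})
```

The value in `result` is what would be placed back into the model's context so it can finish the task.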

A2A vs MCP — Comparison

| Category | A2A (Agent-to-Agent) | MCP (Model Context Protocol) |

|---|---|---|

| Primary Goal | Enable inter-agent task exchange | Enable LLMs to access external tools or context |

| Designed For | Communication between autonomous agents | Enhancing single-agent capabilities during inference |

| Focus | Multi-agent workflows, coordination, delegation | Dynamic tool usage, context augmentation |

| Execution model | Agents send/receive tasks and artifacts | LLM selects and executes tools inline during reasoning |

| Security | OAuth 2.0, API keys, declarative scopes | Handled at application integration layer |

| Developer Role | Build agents that expose tasks and artifacts via endpoints | Define structured tools and context the model can use |

| Ecosystem Partners | Google, Salesforce, SAP, LangChain, etc. | Anthropic, with emerging adoption in tool-based LLM UIs |

How they work together

Rather than being alternatives, A2A and MCP are complementary. In many systems, both will be used together.

Example workflow

- A user submits a complex request in an enterprise agent interface.

- The orchestrating agent uses A2A to delegate subtasks to specialized agents (e.g., analytics, HR, finance).

- One of those agents uses MCP internally to invoke a search function, fetch a document, or compute something using a model.

- The result is returned as an artifact via A2A, enabling end-to-end agent collaboration with modular tool access.

This architecture separates inter-agent communication (A2A) from intra-agent capability invocation (MCP) — making the system easier to compose, scale, and secure.
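A compact way to see the separation is a remote agent whose A2A-facing handler calls an MCP tool internally and wraps the result as an artifact. This is a sketch under assumptions: `call_mcp_tool`, the `search_documents` tool, and the task and artifact shapes are all hypothetical stand-ins.

```python
def call_mcp_tool(name: str, arguments: dict) -> str:
    """Hypothetical stand-in for an MCP client call made inside the agent."""
    if name == "search_documents":
        return f"Top result for '{arguments['query']}': travel-policy.pdf"
    raise LookupError(f"Unknown tool {name!r}")


def handle_a2a_task(task: dict) -> dict:
    """A2A-facing entry point: receives a task, uses MCP internally, returns an artifact."""
    query = task["message"]["parts"][0]["text"]
    found = call_mcp_tool("search_documents", {"query": query})  # intra-agent capability (MCP)
    return {                                                     # inter-agent response (A2A)
        "id": task["id"],
        "status": {"state": "completed"},
        "artifacts": [{"parts": [{"type": "text", "text": found}]}],
    }


response = handle_a2a_task({
    "id": "task-42",
    "message": {"role": "user", "parts": [{"type": "text", "text": "travel policy"}]},
})
```

The orchestrating agent only ever sees the task and its artifact. Which tools the remote agent used to produce it stays an internal detail.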

Conclusion

A2A is about agents talking to other agents over a network: securely, asynchronously, and with tasks as the unit of work.

MCP is about injecting structured capabilities into a model session, letting LLMs reason over tools and data contextually.

Used together, they support composable, multi-agent systems that are both extensible and interoperable.

How the MCP + A2A base infrastructure could shape the future of agent product marketplaces

Lastly, I want to talk about how this core technical foundation could shape the future of the AI marketplace — and what it means for people building AI products.

The change in human-computer interaction

A clear example of this shift can be seen in developer and service workflows. With MCP servers now integrated into IDEs and coding agents, the way developers interact with tools is fundamentally changing.

Previously, a typical workflow involved searching for the right service, setting up hosting, reading documentation, integrating APIs manually, writing code in the IDE, and configuring features through a low-code dashboard. It was a fragmented experience, requiring context switching and technical overhead at each step.

Now, with MCP-connected coding agents, much of that complexity can be abstracted away. Developers can discover and use tools more naturally through conversational prompts. API integration is becoming part of the coding flow itself, often without needing a separate UI or manual setup. (Just think about how complex AWS or Microsoft’s dashboards can be.) The interaction becomes smoother: more about guiding behavior than assembling features.

In this model, user or developer interaction shifts from configuring features to orchestrating behaviors. This changes the role of product design too.

Instead of using UIs to “patch over” technical challenges (e.g. “this is too hard to code, let’s make a config panel”), we now need to:

- Think about the end-to-end experience

- Design how and when AI + user interactions should come together

- Let the AI handle the logic, and guide users through intent and flow

The challenge becomes deciding when and how AI and user input should come together, and how to insert the right interactions at the right time.

I used a developer service and API product as an example to show how user interaction could change — but the same applies to business software. For a long time, business tools have been complex and hard to use. Natural language interaction has the potential to simplify many of those workflows.

Agentic product paradigms and their impact on SaaS

We’re starting to see a growing number of MCP servers emerge. Imagine Airbnb offering a booking MCP server, or Google Maps exposing a map MCP server. An agent (as an MCP client) could connect to many of these servers at once — unlocking workflows that previously required custom integrations or tightly bound apps.

Compared to the SaaS era, where integrations were often manual and rigid, this model enables more autonomous workflows and fluid connections between services. Here are two examples:

- Design from documents

  You write a PRD in Notion. A Figma agent reads the document and automatically creates a wireframe that lays out the core concepts, with no manual handoff needed.

- Competitor research, end-to-end

  You ask for a competitor analysis. A group of agents searches the web, signs up for relevant services on your behalf (with secure auth), collects the results, and delivers the artifacts back, already organized in your Notion workspace.

Challenges with authentication and authorization boundaries

With the rise of agent-to-agent connections and MCP client-to-server connections, authentication and authorization become underlying requirements: agents act on behalf of human users, and credentials must be secured throughout the journey.

So far, several scenarios are specific to this new wave of A2A and MCP connections:

- Agent vs SaaS & website/app

- MCP client (Agent) vs MCP server

- User vs Agent

- Agent vs Agent

Another interesting use case is the multi-identity federation scenario that Google describes:

For example, User U is working with Agent A requiring A-system's identifier. If Agent A then depends on Tool B or Agent B which requires B-system identifier, the user may need to provide identities for both A-system and B-system in a single request. (Assume A-system is an enterprise LDAP identity and B-system is a SaaS-provider identity).
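One possible way to reason about this scenario is to treat the request as carrying a bundle of identities keyed by system, and to hand each hop only the one it needs. The sketch below is purely illustrative: the system names, credential strings, and the `scope_identities` helper are hypothetical, and neither protocol prescribes this approach.

```python
# Identities User U supplies in a single request, keyed by the system that issued them.
identities = {
    "a-system": "ldap-credential-for-U",  # enterprise LDAP identity (placeholder value)
    "b-system": "saas-token-for-U",       # SaaS-provider identity (placeholder value)
}


def scope_identities(identities: dict, required_system: str) -> dict:
    """Return only the credential the callee needs, so no hop sees more than it must."""
    if required_system not in identities:
        raise PermissionError(f"User has not provided an identity for {required_system!r}")
    return {required_system: identities[required_system]}


for_agent_a = scope_identities(identities, "a-system")  # what Agent A receives
for_agent_b = scope_identities(identities, "b-system")  # what Agent A forwards to Tool B or Agent B
```

Failing loudly when an identity is missing gives the agent a natural point to go back to the user and ask for it.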

Logto is an OIDC and OAuth provider, well-suited for the future of AI integrations.

With its flexible infrastructure, we’re actively expanding its capabilities and have published a series of tutorials to help developers get started quickly.

Have questions?

Reach out to us — or dive in and explore what you can build with Logto today.