Top 10 AI Models Every Developer Should Know in 2025


Before diving into specific models, let's clarify the distinct categories of AI models available today:

| Category | What They Do | Example Use Cases |
|---|---|---|
| LLMs | Process and generate human-like text | Code generation, content creation, API documentation |
| Generative Models | Create content across multiple modalities | Custom UI assets, wireframes, mockups |
| Computer Vision | Process and understand visual data | OCR integration, document analysis, UI testing |
| Recommendation Systems | Predict user preferences | In-app content personalization, user engagement |
| Time Series Models | Analyze sequential data patterns | System monitoring, anomaly detection |
| Reinforcement Learning | Learn through trial and error | Training agents, optimization problems |
| Graph Neural Networks | Process node-edge relationships | Network analysis, dependency mapping |
| GANs | Generate realistic synthetic data | Testing data, simulation environments |
| Transformer Models | Process sequences with attention | The foundation for most modern LLMs |
| Decision Tree Models | Make predictions through branches | Rapid classification, feature importance |

How I selected the models in this list

In this article, I list 10 AI models that you can use to build AI-powered applications.

These recommendations come from personal experience (I also list use cases so you can better understand when to use each one), research papers, articles on models that achieved the best performance for certain tasks (like YOLO for computer vision), some lesser-known Reddit threads (which are often a gold mine of information for me), and Peter Yang’s well-known article, “An Opinionated Guide on Which AI Model to Use in 2025”.

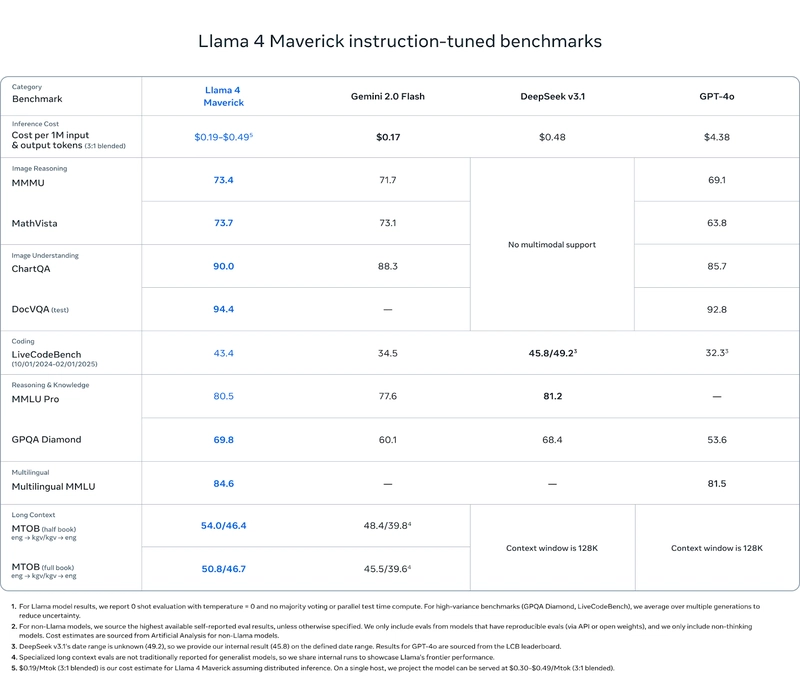

When it comes to models, we are spoiled for choice (even while writing this article, I learned that Llama 4 had launched).

I personally use 2-3 different models for different types of tasks.

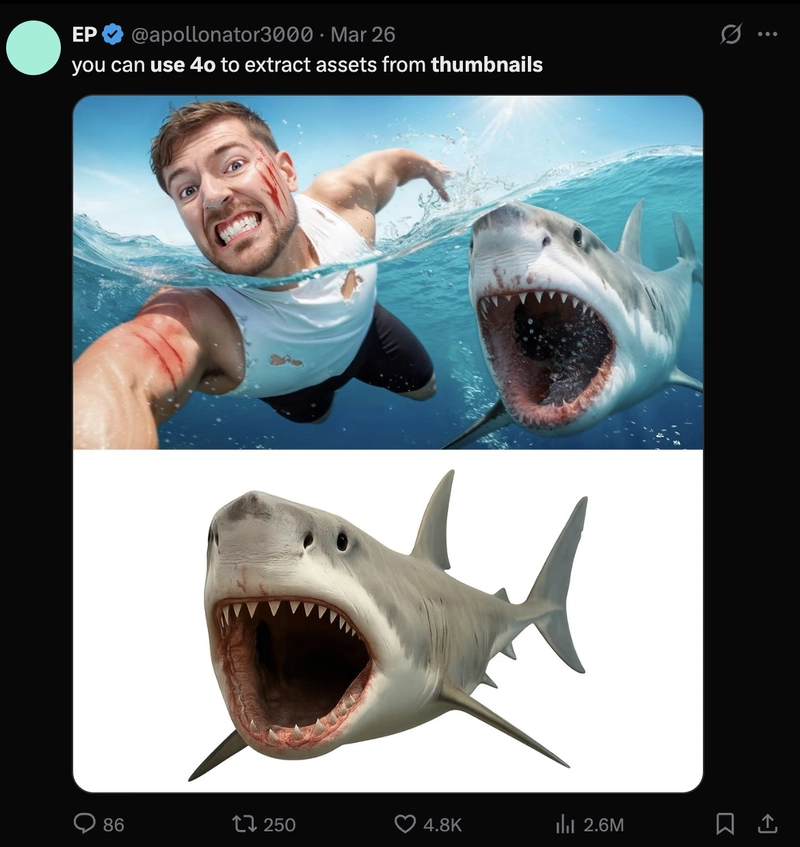

My go-to models are Claude 3.7 Sonnet for coding and GPT-4o for creative tasks such as writing. Along with these, I use tools like v0 and Bolt to build the frontend, and Pieces for help within the IDE and as a second brain.

While I cannot cover everything AI-related in one blog post, I will list some of the best AI models you should know about and show how you can use them in your daily tasks.

Top 10 AI Models for Developers in 2025

1. GPT-4o: The Versatile Creator

Strengths:

- Multimodal capabilities (text, image, audio)

- 128k token context window (16x the 8k window of the original GPT-4)

- Significant improvements in image understanding and generation

- API pricing about 50% lower than GPT-4 Turbo at launch

When to use it:

GPT-4o excels at creative tasks requiring imagination and flair. It's particularly strong for generating UI copy, ideation, creating documentation, and producing visual assets.

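    // Example: generating an image with the OpenAI Images API
    // Note: the Images endpoint does not accept "gpt-4o" as a model name;
    // "gpt-image-1" is the image model OpenAI exposes through the API.
    import OpenAI from "openai";

    const openai = new OpenAI(); // reads OPENAI_API_KEY from the environment

    const response = await openai.images.generate({
      model: "gpt-image-1",
      prompt: "Create a Ghibli-style UI dashboard for a plant monitoring application",
      n: 1,
      size: "1024x1024",
    });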

Developer tip: While powerful for creative tasks, GPT-4o can struggle with complex, multi-step code challenges. It's best paired with a more code-focused model for development workflows.

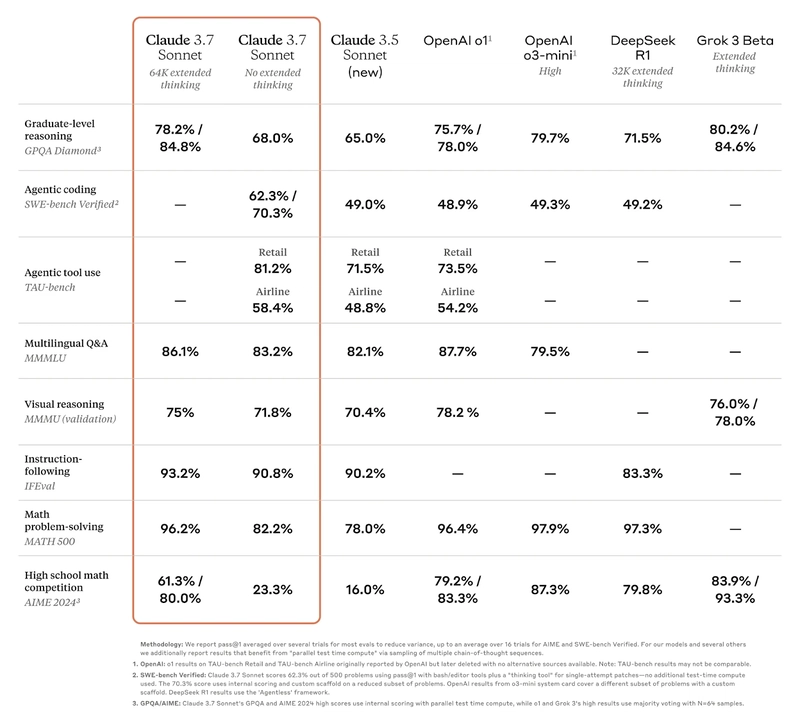

2. Claude 3.7 Sonnet: The Developer's Companion

Strengths:

- Exceptional at code generation and debugging

- Strong reasoning capabilities with extended thinking mode

- Excellent at extracting information from complex diagrams and technical docs

- Better at following technical instructions precisely

When to use it:

Claude 3.7 Sonnet shines when you need accurate, well-structured code, especially for front-end projects. It's particularly valuable for understanding and generating code from diagrams, screenshots, and technical documentation.

    # Example: using the Claude API to generate code from a diagram
    import anthropic

    client = anthropic.Anthropic(api_key="your_key")

    response = client.messages.create(
        model="claude-3-7-sonnet-20250219",
        max_tokens=4000,
        system="You're an expert React developer.",
        messages=[
            {"role": "user", "content": [
                {"type": "text", "text": "Convert this wireframe into React code with Tailwind CSS"},
                {"type": "image", "source": {"type": "base64", "media_type": "image/png", "data": "..."}},
            ]}
        ],
    )

    # The generated code is in the first content block of the reply
    print(response.content[0].text)

Developer tip: Claude's "extended thinking" mode (available to Pro users) significantly improves its performance on complex reasoning tasks, making it worth the investment for intricate development problems.
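Through the API, extended thinking is switched on per request with a `thinking` parameter rather than through a subscription tier. The sketch below only builds the keyword arguments you would pass to `client.messages.create`. The helper name `build_thinking_request` and the default budgets are illustrative assumptions, not part of the SDK.

```python
def build_thinking_request(prompt, budget_tokens=2048, max_tokens=4096):
    """Build kwargs for client.messages.create with extended thinking enabled.

    Hypothetical helper: only the dictionary layout mirrors the Messages API.
    """
    # The API expects a thinking budget of at least 1024 tokens
    if budget_tokens < 1024:
        raise ValueError("budget_tokens must be at least 1024")
    # The thinking budget has to fit inside the overall output limit
    if budget_tokens >= max_tokens:
        raise ValueError("budget_tokens must be smaller than max_tokens")
    return {
        "model": "claude-3-7-sonnet-20250219",
        "max_tokens": max_tokens,
        "thinking": {"type": "enabled", "budget_tokens": budget_tokens},
        "messages": [{"role": "user", "content": prompt}],
    }


request = build_thinking_request("Find the race condition in this worker pool.")
print(request["thinking"])
```

With a client in hand, the call would be `client.messages.create(**request)`. A larger budget gives the model more room to reason but adds latency and cost, so it is worth tuning per task.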

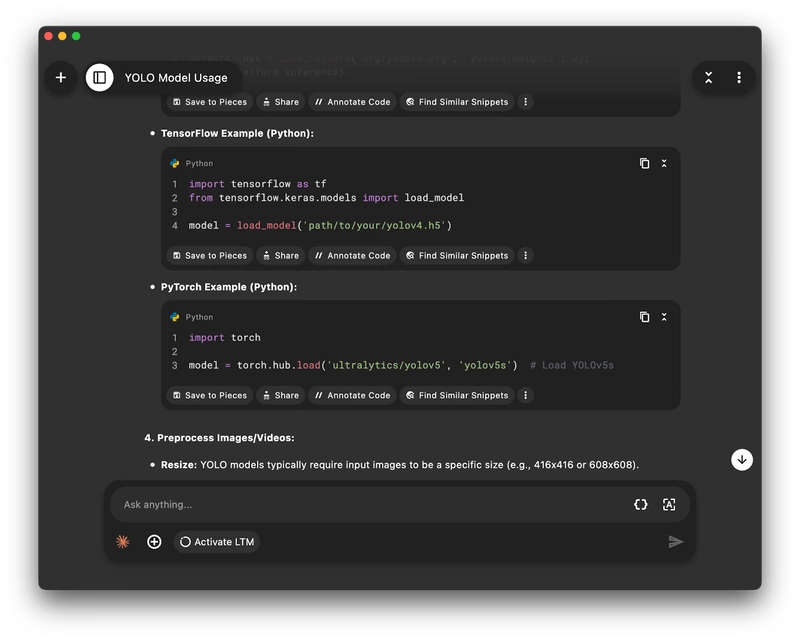

3. YOLO (You Only Look Once): Computer Vision Workhorse

Strengths:

- Single-pass architecture for real-time object detection

- Works on resource-constrained devices (mobile, Raspberry Pi)

- Supports multiple tasks beyond object detection

- Easy integration via multiple libraries/formats

When to use it:

YOLO is indispensable when your app needs to "see" the world - whether for real-time object detection, gesture recognition, or augmented reality applications.

    # YOLOv8 object detection with the ultralytics package
    from ultralytics import YOLO

    # Load the pretrained nano model
    model = YOLO("yolov8n.pt")

    # Run inference on an image
    results = model("path/to/image.jpg")

    # Process results: one Results object per input image
    for r in results:
        for box in r.boxes:
            x1, y1, x2, y2 = box.xyxy[0].tolist()  # corner coordinates in pixels
            confidence = float(box.conf[0])
            class_id = int(box.cls[0])
            print(model.names[class_id], round(confidence, 2), (x1, y1, x2, y2))

Developer tip: YOLOv8 can be exported to ONNX, TFLite, and other formats for cross-platform deployment, making it ideal for edge device implementations.
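Once you have `(x1, y1, x2, y2)` boxes like the ones read in the detection loop earlier in this section, a common next step is comparing them, for example to match detections across frames or against labeled ground truth. Below is a minimal sketch of intersection over union (IoU) in plain Python. The function name `iou` and the sample boxes are illustrative, not part of the ultralytics API.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlapping rectangle (zero if the boxes are disjoint)
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Two 10x10 boxes that overlap in a 5x5 corner
overlap = iou((0, 0, 10, 10), (5, 5, 15, 15))
print(f"IoU: {overlap:.3f}")
```

An IoU near 1 means the boxes almost coincide, and 0 means they do not overlap at all. The threshold that counts as "the same object" depends on the application.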

4. BERT: The NLP Foundation Model

Strengths:

- Bidirectional understanding of context

- Pre-trained versions available for specific domains

- Excellent at classification and semantic understanding

- Lightweight compared to modern LLMs

When to use it:

BERT remains a go-to choice for specialized text classification, sentiment analysis, named entity recognition, and information extraction tasks where full LLMs would be overkill.

    # Using BERT for sentiment analysis with Hugging Face
    from transformers import AutoTokenizer, AutoModelForSequenceClassification
    import torch

    # Load tokenizer and model
    model_name = "nlptown/bert-base-multilingual-uncased-sentiment"
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSequenceClassification.from_pretrained(model_name)

    # Analyze sentiment
    text = "Your app has transformed my development workflow!"
    inputs = tokenizer(text, return_tensors="pt")
    with torch.no_grad():
        logits = model(**inputs).logits

    # Get sentiment: class indices 0-4 map to 1-5 stars
    sentiment = torch.argmax(logits, dim=1).item() + 1

Developer tip: For search functionality, BERT-based embeddings can provide better semantic understanding than traditional keyword approaches at a fraction of the cost of newer embedding models.
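To make the semantic search idea concrete, here is a minimal sketch of the ranking step in plain Python. It assumes you already have one embedding vector per document and one for the query. The tiny 3-dimensional vectors, the document IDs, and the helper names `cosine_similarity` and `rank_documents` are made up for illustration, since real BERT embeddings have hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_documents(query_vec, doc_vecs):
    """Return (doc_id, score) pairs sorted from most to least similar."""
    scored = [
        (doc_id, cosine_similarity(query_vec, vec))
        for doc_id, vec in doc_vecs.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy vectors standing in for real embeddings (hypothetical values)
docs = {
    "reset-password": [0.9, 0.1, 0.0],
    "billing-faq": [0.1, 0.9, 0.2],
    "api-rate-limits": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]

ranking = rank_documents(query, docs)
for doc_id, score in ranking:
    print(f"{doc_id}: {score:.3f}")
```

For more than a few thousand documents, you would typically swap the linear scan for a vector index, but the scoring idea stays the same.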

5. LLaMA: Open Source Foundation

Strengths:

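- Openly available weights (released under Meta's community license rather than a standard open-source license)

- Runs locally for privacy and reduced latency

- Available in multiple sizes (for example, 7B to 70B parameters for Llama 2)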

- Strong foundation for fine-tuning custom models

When to use it:

LLaMA models are ideal for applications requiring local inference, privacy guarantees, or customized behavior through fine-tuning.

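    # Running LLaMA locally with Ollama (requires a running Ollama server)
    import ollama

    # Generate text with LLaMA. "llama:4" is not a valid Ollama tag; the tag
    # below is an assumption, so use whichever tag `ollama list` shows locally.
    response = ollama.generate(
        model="llama4",
        prompt="Explain how to implement JWT authentication in a Node.js app",
        options={"temperature": 0.7},
    )

    print(response["response"])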

Developer tip: The latest LLaMA 4 models offer multimodal capabilities similar to cloud services but with the privacy benefits of local execution, making them worth considering for sensitive applications.

6. Whisper: Audio Intelligence

Strengths:

- Exceptional multilingual speech recognition

- Handles noisy audio gracefully

- Works offline with optimized implementations

- No fine-tuning needed for most use cases

When to use it:

Whisper is the go-to model for any application requiring speech-to-text capabilities, from podcast transcription to voice commands and meeting notes.

    # Transcribing audio with Whisper
    import whisper

    model = whisper.load_model("base")  # Options: tiny, base, small, medium, large

    # Transcribe file
    result = model.transcribe("audio.mp3")

    # Get transcription text
    print(result["text"])

Developer tip: For real-time applications, consider using whisper.cpp, which offers significantly faster performance on CPU-only environments while maintaining quality.

7. XGBoost: The Reliable ML Standby

Strengths:

- Exceptional performance on structured data

- Built-in cross-validation

- Interpretable feature importance

- Low resource requirements

When to use it:

XGBoost remains the first choice for predictive analytics on tabular data, from user behavior prediction to fraud detection and recommendation systems.

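    # Basic XGBoost implementation for classification
    import xgboost as xgb
    from sklearn.datasets import make_classification
    from sklearn.model_selection import train_test_split

    # Prepare data (synthetic placeholder; replace with your own features and labels)
    features, labels = make_classification(n_samples=1000, n_features=10, random_state=42)
    X_train, X_test, y_train, y_test = train_test_split(
        features, labels, test_size=0.2, random_state=42
    )

    # Train model
    model = xgb.XGBClassifier(n_estimators=100, learning_rate=0.1, max_depth=5)
    model.fit(X_train, y_train)
    print("Test accuracy:", model.score(X_test, y_test))

    # Get feature importance (one score per input column)
    importance = model.feature_importances_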

Developer tip: XGBoost's feature importance metrics provide valuable insights for product development, helping identify which user behaviors or attributes most strongly predict outcomes.
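To turn the raw importance array into something you can act on, pair each score with its column name and sort. The sketch below is plain Python. The helper name `top_features`, the feature names, and the scores are made-up illustrations, and in practice the scores would come from `model.feature_importances_`.

```python
def top_features(names, importances, k=3):
    """Return the k (name, importance) pairs with the highest importance."""
    if len(names) != len(importances):
        raise ValueError("names and importances must have the same length")
    ranked = sorted(zip(names, importances), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical feature names and scores for a churn model
feature_names = ["sessions_per_week", "days_since_signup", "plan_tier", "support_tickets"]
importances = [0.42, 0.08, 0.31, 0.19]

top = top_features(feature_names, importances, k=2)
for name, score in top:
    print(f"{name}: {score:.2f}")
```

Importance scores show which inputs the model leans on, not whether a feature causes the outcome, so treat the ranking as a starting point for investigation.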

8. Stable Diffusion: Visual Creation Engine

Strengths:

- Generates high-quality images from text prompts

- Runs locally on consumer GPUs

- Highly customizable through fine-tuning

- Extensive ecosystem of extensions

When to use it:

Stable Diffusion is perfect for generating design assets, mockups, illustrations, and visual content for applications and websites.

    # Using Stable Diffusion 3.5 with Hugging Face diffusers
    # (SD 3.x uses StableDiffusion3Pipeline; the weights are gated on the Hub)
    from diffusers import StableDiffusion3Pipeline
    import torch

    pipe = StableDiffusion3Pipeline.from_pretrained(
        "stabilityai/stable-diffusion-3.5-large", torch_dtype=torch.bfloat16
    )
    pipe = pipe.to("cuda")

    # Generate image
    prompt = "A minimalist mobile app UI for plant care tracking, isometric view"
    image = pipe(prompt).images[0]
    image.save("app-mockup.png")

Developer tip: The latest Stable Diffusion 3.5 version introduces significant quality improvements for technical illustrations and UI mockups, making it particularly useful for developers visualizing concepts.

9. Mistral 7B: The Efficient Performer

Strengths:

- Exceptional performance-to-size ratio

- Low latency inference

- Works well on limited hardware

- Open weights for customization

When to use it:

Mistral 7B is ideal for production applications requiring responsive AI capabilities without enterprise-level infrastructure, such as in-app assistants and real-time generators.

    # Using Mistral 7B with Hugging Face
    from transformers import AutoTokenizer, AutoModelForCausalLM

    tokenizer = AutoTokenizer.from_pretrained("mistralai/Mistral-7B-v0.1")
    model = AutoModelForCausalLM.from_pretrained("mistralai/Mistral-7B-v0.1")

    # Generate response (max_new_tokens caps the generated text, not prompt + output)
    input_text = "Explain how to implement pagination in a REST API"
    inputs = tokenizer(input_text, return_tensors="pt")
    outputs = model.generate(**inputs, max_new_tokens=200)
    response = tokenizer.decode(outputs[0], skip_special_tokens=True)
    print(response)

Developer tip: Mistral's efficient architecture makes it an excellent choice for edge deployments where you need LLM capabilities closer to the user.

10. Granite 3.0: Enterprise-Ready AI

Strengths:

- Developer-friendly license (Apache 2.0)

- Strong multilingual support

- Trained on 100+ programming languages

- Reduced bias and toxicity

When to use it:

Granite 3.0 is perfect for building enterprise applications requiring clear licensing terms and robust safety guardrails.

    # Using IBM's Granite 3.0 with Hugging Face
    from transformers import AutoTokenizer, AutoModelForCausalLM

    # Granite models are published under the "ibm-granite" organization on the Hub
    model_id = "ibm-granite/granite-3.0-2b-instruct"
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)

    prompt = "Write a Node.js function to securely store user passwords using bcrypt."

    inputs = tokenizer(prompt, return_tensors="pt")
    outputs = model.generate(**inputs, max_new_tokens=500)
    response = tokenizer.decode(outputs[0], skip_special_tokens=True)
    print(response)

Developer tip: Granite's strong focus on programming languages makes it particularly valuable for code generation tasks in enterprise environments where licensing concerns are paramount.

Making the Right Choice: A Developer's Decision Framework

When selecting an AI model for your project, consider this decision framework:

- Task specificity: Is this a specialized task (like object detection) or a general task (like text generation)?

- Infrastructure constraints: Local deployment or cloud-based? CPU or GPU?

- Latency requirements: Is real-time performance critical?

- Licensing needs: Open source or proprietary? Commercial use constraints?

- Customization level: Off-the-shelf or fine-tuned to your domain?
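One way to make this framework tangible is to encode it as a few explicit rules. The sketch below is only one possible reading of the recommendations in this article. The `Requirements` fields, the task labels, and the `suggest_model` function are hypothetical names chosen for illustration, and the mapping is a starting point to adapt, not a definitive selector.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    # Task labels are assumptions: "vision", "speech", "tabular",
    # "text-classification", "image-generation", "code", or "text"
    task: str
    must_run_locally: bool = False
    needs_permissive_license: bool = False


def suggest_model(req):
    """Suggest a model from this article's list for the given requirements."""
    # Task specificity: specialized tasks map directly to specialized models
    specialized = {
        "vision": "YOLO",
        "speech": "Whisper",
        "tabular": "XGBoost",
        "text-classification": "BERT",
    }
    if req.task in specialized:
        return specialized[req.task]

    # Infrastructure constraints: local versus cloud image generation
    if req.task == "image-generation":
        return "Stable Diffusion" if req.must_run_locally else "GPT-4o"

    # Licensing needs come first for code, then infrastructure
    if req.task == "code":
        if req.needs_permissive_license:
            return "Granite 3.0"
        return "LLaMA" if req.must_run_locally else "Claude 3.7 Sonnet"

    # General text generation: small local model versus hosted model
    return "Mistral 7B" if req.must_run_locally else "GPT-4o"


print(suggest_model(Requirements(task="code")))
print(suggest_model(Requirements(task="text", must_run_locally=True)))
```

Writing the rules down like this makes the trade-offs reviewable by your team. Latency and customization needs could be added as extra fields in the same style.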

Conclusion: Build Your AI Stack Strategically

The most effective developers in 2025 aren't using a single AI model for everything - they're strategically combining specialized models into a cohesive AI stack:

- GPT-4o for creative tasks and initial prototyping

- Claude 3.7 Sonnet for precise code generation and technical documentation

- YOLO for computer vision components

- Whisper for voice interfaces

- Domain-specific models (like BERT variants) for specialized functions

By understanding the strengths and limitations of each model, you can leverage AI as a true force multiplier in your development workflow, focusing your human creativity and problem-solving skills where they matter most.

Remember, the goal isn't to know every model out there - it's to build an intuition for which tool fits which job, and to stay curious about emerging capabilities that could transform your development process.