TokenBridge: Bridging The Gap Between Continuous and Discrete Token Representations In Visual Generation

Autoregressive visual generation models have emerged as a prominent approach to image synthesis, borrowing the next-token prediction mechanism of language models. These models use image tokenizers to transform visual content into discrete or continuous tokens. The approach supports flexible multimodal integration and allows architectural innovations from LLM research to be adapted directly. However, the field faces a critical open question: which token representation to use. The choice between discrete and continuous tokens remains a fundamental dilemma, with a significant impact on both model complexity and generation quality.

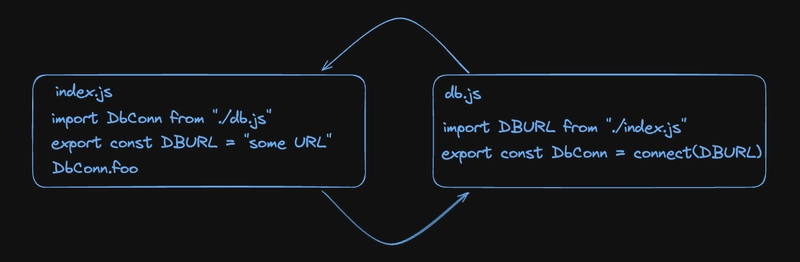

Existing visual tokenization methods fall into two primary groups: continuous and discrete token representations. Variational autoencoders (VAEs) establish continuous latent spaces that maintain high visual fidelity and have become foundational in diffusion model development. Discrete methods such as VQ-VAE and VQGAN enable straightforward autoregressive modeling but suffer from significant limitations, including codebook collapse and information loss. Autoregressive image generation has evolved from computationally intensive pixel-based methods to more efficient token-based strategies. While models like DALL-E show promising results, hybrid methods such as GIVT and MAR introduce architectural modifications to improve generation quality, which complicates the traditional autoregressive modeling pipeline.

Researchers from the University of Hong Kong, ByteDance Seed, Ecole Polytechnique, and Peking University have proposed TokenBridge to bridge the gap between continuous and discrete token representations in visual generation. It retains the strong representation capacity of continuous tokens while keeping the modeling simplicity of discrete tokens. TokenBridge decouples discretization from tokenizer training by applying post-training quantization to the features of an already trained continuous tokenizer. It uses a dimension-wise quantization strategy that discretizes each feature dimension independently, complemented by a lightweight autoregressive prediction mechanism. Together, these manage the resulting expanded token space efficiently while preserving high-quality visual generation.
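To see why the expanded token space needs special handling, the dimension-wise prediction can be written as a chain-rule factorization. The notation here is an assumption for illustration and is not taken from the article: $C$ is the number of feature channels per token, $B$ is the number of quantization levels per channel, $q_d$ is the discrete index of channel $d$, and $h$ is the context produced by the autoregressive backbone for the current token.

$$
p(q_1, \dots, q_C \mid h) \;=\; \prod_{d=1}^{C} p\left(q_d \mid q_1, \dots, q_{d-1}, h\right), \qquad q_d \in \{0, \dots, B-1\}
$$

A single joint classifier would need $B^C$ output classes, whereas the factorized form needs only $C$ classifiers with $B$ classes each. With the purely illustrative values $C = 16$ and $B = 64$, the joint space has $64^{16} = 2^{96} \approx 7.9 \times 10^{28}$ entries, while the factorized form predicts 16 indices from 64-way distributions.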

TokenBridge introduces a training-free, dimension-wise quantization technique that operates independently on each feature channel, addressing the limitations of earlier token representations. The approach relies on two properties of VAE features: they are bounded as a result of the KL constraint, and they follow a near-Gaussian distribution. The autoregressive model adopts a Transformer architecture in two configurations: a default L model with 32 blocks and a width of 1024 (approximately 400 million parameters) for initial studies, and a larger H model with 40 blocks and a width of 1280 (around 910 million parameters) for final evaluations. This design allows the proposed quantization strategy to be explored across different model scales.
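The idea of training-free, per-channel quantization of near-Gaussian features can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the features are already standardized to roughly unit variance, splits the standard normal into equal-probability bins, and uses the quantile at each bin's probability midpoint as the reconstruction value. The function names and the choice of 16 bins and 16 channels are hypothetical.

```python
import numpy as np
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal, used only for its inverse CDF


def gaussian_bin_edges(num_bins: int) -> np.ndarray:
    """Interior edges that split a standard normal into equal-probability bins."""
    return np.array([_STD_NORMAL.inv_cdf(k / num_bins) for k in range(1, num_bins)])


def gaussian_bin_centers(num_bins: int) -> np.ndarray:
    """One reconstruction value per bin (assumption: probability-midpoint quantile)."""
    return np.array([_STD_NORMAL.inv_cdf((k + 0.5) / num_bins) for k in range(num_bins)])


def quantize(features: np.ndarray, edges: np.ndarray) -> np.ndarray:
    """Map every scalar feature to a bin index; each channel is handled independently."""
    return np.digitize(features, edges)


def dequantize(indices: np.ndarray, centers: np.ndarray) -> np.ndarray:
    """Map bin indices back to continuous values for the decoder."""
    return centers[indices]


# Illustrative usage: 1000 tokens with 16 channels of synthetic Gaussian features.
rng = np.random.default_rng(0)
features = rng.standard_normal((1000, 16))
num_bins = 16  # hypothetical setting
indices = quantize(features, gaussian_bin_edges(num_bins))
reconstruction = dequantize(indices, gaussian_bin_centers(num_bins))
print("mean squared error:", float(np.mean((features - reconstruction) ** 2)))
```

Because no codebook is learned, nothing here can collapse during training; the bins are fixed by the assumed Gaussian shape, and increasing `num_bins` trades a larger per-channel vocabulary for lower reconstruction error.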

The results show that TokenBridge outperforms traditional discrete token models, achieving better Fréchet Inception Distance (FID) scores with significantly fewer parameters. For instance, TokenBridge-L reaches an FID of 1.76 with only 486 million parameters, compared to LlamaGen’s 2.18 with 3.1 billion parameters. Against continuous approaches, TokenBridge-L outperforms GIVT, with an FID of 1.76 versus 3.35. The H-model configuration further validates the method’s effectiveness, matching MAR-H in FID (1.55) while delivering better Inception Score and Recall with marginally fewer parameters. These results demonstrate TokenBridge’s ability to bridge discrete and continuous token representations.

In conclusion, the researchers introduced TokenBridge, which bridges the longstanding gap between discrete and continuous token representations. It achieves high-quality visual generation efficiently by combining post-training quantization with dimension-wise autoregressive decomposition. The research demonstrates that discrete token approaches trained with a standard cross-entropy loss can compete with state-of-the-art continuous methods, removing the need for complex distribution modeling techniques. The approach offers a promising direction for future work on token-based visual synthesis.
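As a rough illustration of what "standard cross-entropy loss" means for dimension-wise tokens, the sketch below averages an ordinary categorical cross-entropy over every token and channel. It is an assumption-laden example rather than the paper's training code: the array shapes, the function name `dimensionwise_cross_entropy`, and the sizes used in the demo are all hypothetical.

```python
import numpy as np


def dimensionwise_cross_entropy(logits: np.ndarray, targets: np.ndarray) -> float:
    """Mean cross-entropy over tokens and channels.

    logits:  (num_tokens, num_channels, num_bins) unnormalized scores
    targets: (num_tokens, num_channels) integer bin indices in [0, num_bins)
    """
    # Numerically stable log-softmax over the bin axis.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    # Pick the log-probability assigned to the correct bin of each channel.
    picked = np.take_along_axis(log_probs, targets[..., None], axis=-1).squeeze(-1)
    return float(-picked.mean())


# Illustrative usage with random scores: 8 tokens, 16 channels, 64 bins per channel.
rng = np.random.default_rng(0)
targets = rng.integers(0, 64, size=(8, 16))
logits = rng.standard_normal((8, 16, 64))
print("loss:", dimensionwise_cross_entropy(logits, targets))
```

Each channel is treated as an ordinary classification problem over its own small vocabulary, which is why no mixture model or diffusion head is required to model the token distribution in this setup.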

The post TokenBridge: Bridging The Gap Between Continuous and Discrete Token Representations In Visual Generation appeared first on MarkTechPost.