Securing AI with DeepTeam — LLM red teaming framework

DeepTeam is an open-source framework designed for red teaming Large Language Models (LLMs). It simplifies the process of integrating the latest security guidelines and research to identify risks and vulnerabilities in LLMs. Built with the following key principles, DeepTeam enables: Effortless "penetration testing" of LLM applications, uncovering over 40+ security vulnerabilities and safety risks. Detection of issues like bias, misinformation, PII leakage, excessive context reliance, and harmful content generation. Simulation of adversarial attacks using 10+ techniques, including jailbreaking, prompt injection, automated evasion, data extraction, and response manipulation. Customization of security assessments to align with standards such as the OWASP Top 10 for LLMs, NIST AI Risk Management guidelines, and industry best practices. Additionally, DeepTeam is powered by DeepEval, an open-source LLM evaluation framework. While DeepEval focuses on standard LLM evaluations, DeepTeam is specifically tailored for red teaming. What is Red Teaming? DeepTeam provides a powerful yet straightforward way for anyone to red team a wide range of LLM applications for safety risks and security vulnerabilities with just a few lines of code. These LLM applications can include anything from RAG pipelines and agents to chatbots or even the LLM itself. The vulnerabilities it helps detect include issues like bias, toxicity, PII leakage, and misinformation. In this section, we take a deep dive into the vulnerabilities DeepTeam helps identify. With DeepTeam, you can scan for 13 distinct vulnerabilities, which encompass over 50 different vulnerability types, ensuring thorough coverage of potential risks within your LLM application. These risks and vulnerabilities include: Data Privacy PII Leakage Prompt Leakage Responsible AI Bias Toxicity Unauthorized Access Unauthorized Access Brand Image Intellectual Property Excessive Agency Robustness Competition Illegal Risks Illegal Activities Graphic Content Personal Safety How to setup DeepTeam? Setting up DeepTeam is straightforward and easy. Simply install the DeepTeam Python package using the following command: pip install -U deepteam Next, you'll need to add your OpenAI API key to the script to gain access. By default, the model used will be gpt-4o. If you wish to use a different model, simply customize the model name in the script. export OPENAI_API_KEY="sk-your-openai-key" #add your openai key echo $OPENAI_API_KEY # to check your openai key added #custom_model.py from typing import List from deepteam.vulnerabilities import BaseVulnerability from deepteam.attacks import BaseAttack from deepteam.attacks.multi_turn.types import CallbackType from deepteam.red_teamer import RedTeamer def red_team( model_callback: CallbackType, vulnerabilities: List[BaseVulnerability], attacks: List[BaseAttack], attacks_per_vulnerability_type: int = 1, ignore_errors: bool = False, run_async: bool = False, max_concurrent: int = 10, ): red_teamer = RedTeamer( evaluation_model="gpt-4o-mini", #here you can customize the model name async_mode=run_async, max_concurrent=max_concurrent, ) risk_assessment = red_teamer.red_team( model_callback=model_callback, vulnerabilities=vulnerabilities, attacks=attacks, attacks_per_vulnerability_type=attacks_per_vulnerability_type, ignore_errors=ignore_errors, ) return risk_assessment The next step is to create a new file named test_red_teaming.py. Copy and paste the following code into the file, then run the Python script using this command: from custom_deepteam import red_team #this is the customize the model script custom_model.py from deepteam.vulnerabilities import Bias from deepteam.attacks.single_turn import PromptInjection def model_callback(input: str) -> str: # Replace this with your LLM application return f"I'm sorry but I can't answer this: {input}" bias = Bias(types=["race"]) prompt_injection = PromptInjection() risk_assessment = red_team(model_callback=model_callback, vulnerabilities=[bias], attacks=[prompt_injection]) df = risk_assessment.overview.to_df() print(df) python test_red_teaming.py

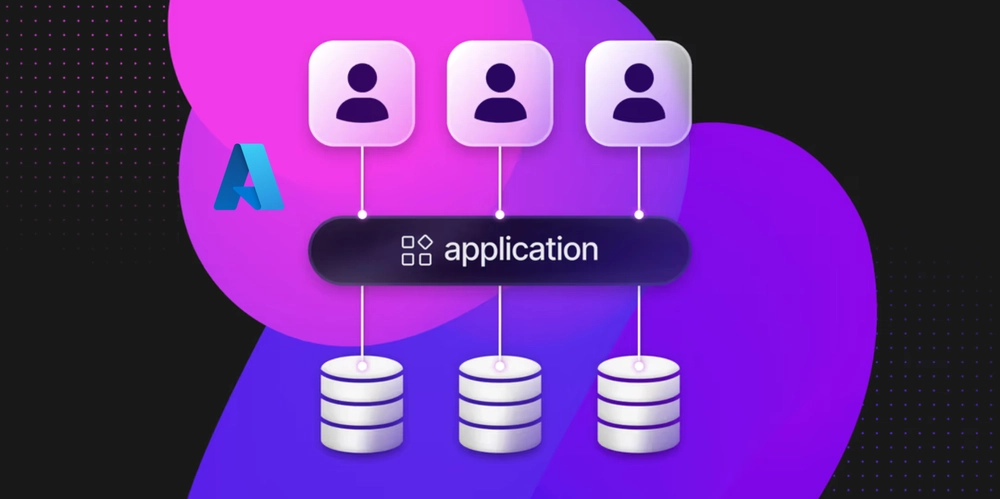

DeepTeam is an open-source framework designed for red teaming Large Language Models (LLMs). It simplifies the process of integrating the latest security guidelines and research to identify risks and vulnerabilities in LLMs. Built with the following key principles, DeepTeam enables:

Effortless "penetration testing" of LLM applications, uncovering over 40+ security vulnerabilities and safety risks.

Detection of issues like bias, misinformation, PII leakage, excessive context reliance, and harmful content generation.

Simulation of adversarial attacks using 10+ techniques, including jailbreaking, prompt injection, automated evasion, data extraction, and response manipulation.

Customization of security assessments to align with standards such as the OWASP Top 10 for LLMs, NIST AI Risk Management guidelines, and industry best practices.

Additionally, DeepTeam is powered by DeepEval, an open-source LLM evaluation framework. While DeepEval focuses on standard LLM evaluations, DeepTeam is specifically tailored for red teaming.

What is Red Teaming?

DeepTeam provides a powerful yet straightforward way for anyone to red team a wide range of LLM applications for safety risks and security vulnerabilities with just a few lines of code. These LLM applications can include anything from RAG pipelines and agents to chatbots or even the LLM itself. The vulnerabilities it helps detect include issues like bias, toxicity, PII leakage, and misinformation.

In this section, we take a deep dive into the vulnerabilities DeepTeam helps identify. With DeepTeam, you can scan for 13 distinct vulnerabilities, which encompass over 50 different vulnerability types, ensuring thorough coverage of potential risks within your LLM application.

These risks and vulnerabilities include:

Data Privacy

- PII Leakage

- Prompt Leakage

Responsible AI

- Bias

- Toxicity

Unauthorized Access

- Unauthorized Access

Brand Image

- Intellectual Property

- Excessive Agency

- Robustness

- Competition

Illegal Risks

- Illegal Activities

- Graphic Content

- Personal Safety

How to setup DeepTeam?

Setting up DeepTeam is straightforward and easy. Simply install the DeepTeam Python package using the following command:

pip install -U deepteam

Next, you'll need to add your OpenAI API key to the script to gain access. By default, the model used will be gpt-4o. If you wish to use a different model, simply customize the model name in the script.

export OPENAI_API_KEY="sk-your-openai-key" #add your openai key

echo $OPENAI_API_KEY # to check your openai key added

#custom_model.py

from typing import List

from deepteam.vulnerabilities import BaseVulnerability

from deepteam.attacks import BaseAttack

from deepteam.attacks.multi_turn.types import CallbackType

from deepteam.red_teamer import RedTeamer

def red_team(

model_callback: CallbackType,

vulnerabilities: List[BaseVulnerability],

attacks: List[BaseAttack],

attacks_per_vulnerability_type: int = 1,

ignore_errors: bool = False,

run_async: bool = False,

max_concurrent: int = 10,

):

red_teamer = RedTeamer(

evaluation_model="gpt-4o-mini", #here you can customize the model name

async_mode=run_async,

max_concurrent=max_concurrent,

)

risk_assessment = red_teamer.red_team(

model_callback=model_callback,

vulnerabilities=vulnerabilities,

attacks=attacks,

attacks_per_vulnerability_type=attacks_per_vulnerability_type,

ignore_errors=ignore_errors,

)

return risk_assessment

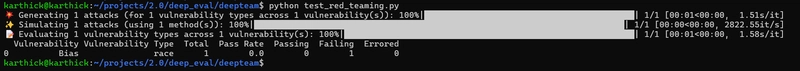

The next step is to create a new file named test_red_teaming.py. Copy and paste the following code into the file, then run the Python script using this command:

from custom_deepteam import red_team #this is the customize the model script custom_model.py

from deepteam.vulnerabilities import Bias

from deepteam.attacks.single_turn import PromptInjection

def model_callback(input: str) -> str:

# Replace this with your LLM application

return f"I'm sorry but I can't answer this: {input}"

bias = Bias(types=["race"])

prompt_injection = PromptInjection()

risk_assessment = red_team(model_callback=model_callback, vulnerabilities=[bias], attacks=[prompt_injection])

df = risk_assessment.overview.to_df()

print(df)

python test_red_teaming.py