Navigating Agency in an Age of Automated Thought

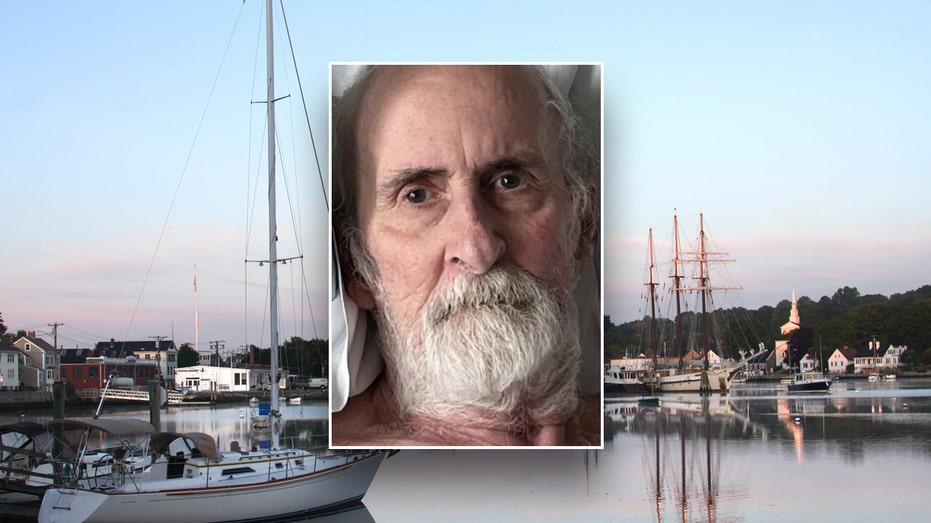

The relentless march of artificial intelligence isn't just about robots taking jobs or algorithms curating our feeds. It’s about a subtler yet more profound shift in how we make decisions. We stand on the precipice of an era where AI doesn’t merely assist our judgment, but increasingly performs it for us. When Sheriff Mark Lamb quietly deployed ‘Massive Blue,’ an AI-powered undercover bot, in Pinal County, Arizona, to infiltrate online groups [wired.com], the move highlighted a chilling reality: AI is proactively shaping perception, not just reflecting it. The promise of enhanced efficiency and optimised outcomes is undeniably seductive, but a tension is growing: outsourcing our cognitive work to machines risks eroding our capacity for critical reflection, ultimately diminishing our personal agency and, with it, what it means to be human. This isn’t a dystopian fantasy; it’s a creeping reality we’re already experiencing, and understanding its dynamics is crucial if we want to shape a future where technology complements, rather than supplants, our uniquely human abilities. The stakes go beyond convenience; they concern the fundamental conditions for a functioning democracy and a meaningful life.

The Allure of Efficiency: A Historical Perspective

For generations, humans have sought tools to lighten their cognitive load. From the abacus to spreadsheets, we've externalised mental processes to improve speed and accuracy. AI represents the apotheosis of this trend. Machine learning algorithms can sift through massive datasets, identify patterns, and predict outcomes with a speed and scale that far surpasses human capabilities. In fields like finance, AI-driven trading algorithms execute trades in milliseconds, exploiting market inefficiencies that would be invisible to a human trader. In medicine, AI is assisting in diagnosis, analyzing medical images with a sensitivity that, on some tasks, is reported to rival that of experienced radiologists; the FDA has approved the first fully autonomous AI diagnostic system for detecting diabetic retinopathy [fda.gov]. In legal discovery, AI can rapidly process vast quantities of documents, identifying relevant precedents and potential risks. Even in creative fields, AI is beginning to play a role, generating music, art, and text. The legal ramifications of AI-generated content, particularly regarding copyright and intellectual property, are already presenting complex challenges, as evidenced by the ongoing lawsuits surrounding AI art generators like Stable Diffusion [theverge.com].

This isn't entirely new. Throughout history, technologies have promised to liberate us from mental drudgery. The printing press allowed for the wider dissemination of knowledge, alleviating the burden on memory. The calculator freed us from laborious arithmetic. But AI is different. It doesn't merely augment our cognitive abilities; it replaces them, automating not just calculations, but also judgment, interpretation, and even creative expression. The sheer scale and pervasiveness of this automation are unprecedented.

The benefits are clear. Decisions made with the aid of AI are often more data-driven, less prone to bias (or so it's claimed), and demonstrably more efficient. This efficiency extends beyond professional realms. Recommendation systems on streaming services, social media platforms, and e-commerce sites streamline our choices, presenting us with options tailored to our preferences. Navigation apps optimize our routes, avoiding traffic and saving us time. Increasingly, AI is becoming an invisible infrastructure, silently shaping our choices and guiding us through daily life. Consider the impact of ‘smart’ home devices: thermostats learning our temperature preferences, lighting systems adjusting to our routines, and voice assistants anticipating our needs. While convenient, this constant adaptation creates a feedback loop, shaping our behaviour in subtle ways. This seamless integration fosters an environment of passive acceptance, where proactive decision-making atrophies. A recent study by Forrester Research found that 72% of consumers feel comfortable letting AI automate simple tasks in their daily lives, but only 31% trust AI to make important decisions [forrester.com]. The gap between those two figures reveals a growing dissonance between convenience and control.

Personalised Realities and the Loss of Shared Experience

This seamless integration, however, is where the first cracks begin to appear. The very efficiency that makes AI so appealing can also be subtly disempowering. When a system consistently provides us with the ‘optimal’ solution, we begin to rely on it, abdicating our own judgment. The act of wrestling with a problem, of weighing alternatives and considering consequences – the very essence of critical thinking – is bypassed. This isn’t simply about laziness; it’s about a fundamental shift in our cognitive habits. The more we delegate decision-making to algorithms, the less we exercise our own cognitive muscles, leading to a gradual decline in our ability to think critically and independently.

This is particularly evident in the realm of information consumption. Social media algorithms, designed to maximize engagement, prioritize content that confirms our existing beliefs, creating “filter bubbles”. A 2024 Pew Research Center study found that individuals who primarily get their news from social media are significantly less likely to encounter diverse perspectives than those who rely on traditional news sources [pewresearch.org]. This creates a fractured public sphere, where shared understanding and common ground become increasingly difficult to achieve. The echo chambers aren't simply about political polarization; they extend to all aspects of life, shaping our perceptions of everything from health and wellness to fashion and culture.

The undercover bots described earlier, like the DHS YouTube ad campaign [wired.com], illustrate the darker side of this personalization: AI can be used to manipulate opinions and reinforce pre-existing biases. Targeted messaging that exploits psychological vulnerabilities demonstrates the potential for AI to be weaponized for political purposes. This raises serious questions about the ethical responsibilities of those developing and deploying these technologies.

The Erosion of Critical Reflection

The core problem lies in the nature of algorithmic decision-making. Most AI systems, particularly those based on deep learning, operate as ‘black boxes’. We understand the inputs and the outputs, but the internal processes that transform one into the other are often opaque, even to their creators. This lack of transparency makes it difficult to scrutinise the reasoning behind an AI’s recommendations, to identify potential biases, or to understand the trade-offs involved. This opacity isn't merely a technical limitation; it's often a deliberate design choice. Companies prioritize performance and proprietary algorithms over explainability, creating systems that are effective but ultimately inscrutable. The question of algorithmic accountability – who is responsible when an AI system makes a harmful or unethical decision – remains largely unanswered.

Consider, for example, an AI-powered hiring tool that consistently favours candidates from certain backgrounds. If the algorithm’s internal workings are hidden from view, it’s difficult to determine why this bias exists, let alone correct it. The result is a perpetuation of existing inequalities, masked by the veneer of objective data analysis. This issue is particularly potent in areas like loan applications, criminal justice risk assessments, and even healthcare diagnoses, where biased algorithms can have life-altering consequences. The potential for unintentional discrimination is substantial, and the lack of transparency makes it difficult to hold these systems accountable. Even seemingly neutral algorithms can amplify existing societal biases present in the data they are trained on, creating a self-fulfilling prophecy of inequity. The SHADES dataset, a multi-lingual benchmark for evaluating bias in AI, has revealed systemic biases across various languages and cultures [wired.com].
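
One simple check an auditor might run, given only a tool's outputs, is to compare selection rates across groups. The sketch below assumes a hypothetical audit log of (group, recommended) pairs; the group labels and counts are invented for illustration. A ratio well below 1, with 0.8 often used as a rule-of-thumb threshold (the "four-fifths" heuristic from US employment guidance), suggests the tool deserves closer scrutiny. It does not by itself explain why the disparity exists.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the fraction of positive outcomes per group.

    `decisions` is an iterable of (group, hired) pairs, where `hired` is a bool.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, hired in decisions:
        totals[group] += 1
        positives[group] += int(hired)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    lowest = min(rates.values())
    return lowest / highest if highest > 0 else float("nan")


# Hypothetical audit log: (applicant group, did the tool recommend hiring?)
audit_log = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 20 + [("B", False)] * 80
)

rates = selection_rates(audit_log)
ratio = disparate_impact_ratio(rates)
print(rates)  # {'A': 0.4, 'B': 0.2}
print(ratio)  # 0.5
```

In this invented example, group B is recommended at half the rate of group A. A check like this needs no access to the model's internals, which is why outcome audits remain possible even when the algorithm itself is a black box.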

But even when algorithmic reasoning is transparent, the sheer volume of information processed by AI can overwhelm our ability to engage in meaningful critical reflection. We’re presented with an answer, backed by a mountain of data, and it’s all too easy to accept it at face value, without questioning its underlying assumptions or considering alternative perspectives. This is particularly concerning in areas where nuance, context, and ethical considerations are paramount. A doctor, presented with an AI-driven diagnosis, might be less inclined to perform a thorough independent assessment, potentially overlooking subtle but crucial clues. Similarly, a judge relying on an AI risk assessment tool might be less likely to consider mitigating circumstances. This reliance can subtly erode professional judgment and critical thinking skills. The danger isn't only that AI will make wrong decisions, but that it will discourage us from making any decisions of our own, or from taking responsibility for the decisions we do make. We become passive recipients of algorithmic verdicts, forfeiting our ability to shape our own lives. The very skills we need to navigate a complex and uncertain world – independent thought, reasoned judgement, and moral reasoning – begin to atrophy. This is not a future of malicious intent, but of unintended consequences – a slow creep towards intellectual dependence.

The Feedback Loop of Conformity and Algorithmic Homogenisation

This erosion of critical reflection is exacerbated by the feedback loops inherent in many AI systems. Recommendation algorithms, for example, learn from our behaviour and reinforce our existing preferences, deepening the filter bubbles described above. The more we interact with AI-curated content, the more homogeneous our information diet becomes, and the less likely we are to encounter challenging ideas or perspectives. This phenomenon extends beyond entertainment; it affects our news consumption, our political views, and even our social interactions, and it compounds the fracturing of the public sphere.

This creates a self-reinforcing cycle of conformity. AI algorithms, trained on our past behaviour, predict what we’ll like and show us more of it. We consume this content, further solidifying our preferences, which in turn informs the algorithm’s predictions. The result is an algorithmic echo chamber, where our beliefs are constantly validated and our worldview is never seriously challenged. The implications for societal cohesion are profound. When individuals are isolated within their own ideological bubbles, empathy diminishes, and constructive dialogue becomes increasingly difficult. This polarization can contribute to political gridlock, social unrest, and the erosion of trust in institutions. A recent study published in Nature Human Behaviour demonstrated a significant correlation between prolonged exposure to personalized news feeds and increased political radicalization [nature.com].
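
The cycle described above can be sketched as a toy simulation. This is an illustrative model, not a description of any real platform: the five topics, the `affinity` values, the `learning_rate`, and the update rule are all assumptions chosen to keep the example small. The recommender starts by showing every topic equally, then repeatedly shifts exposure toward whatever was clicked most. Shannon entropy serves as a rough measure of how diverse the feed remains.

```python
import math


def entropy(dist):
    """Shannon entropy (in bits) of a probability distribution."""
    return -sum(p * math.log2(p) for p in dist if p > 0)


def feedback_round(exposure, affinity, learning_rate=0.5):
    """One round of a toy recommender loop.

    Expected clicks per topic are exposure * affinity. The recommender then
    moves its exposure part of the way toward the normalised click shares.
    """
    clicks = [e * a for e, a in zip(exposure, affinity)]
    total = sum(clicks)
    click_share = [c / total for c in clicks]
    return [
        (1 - learning_rate) * e + learning_rate * s
        for e, s in zip(exposure, click_share)
    ]


# Hypothetical user: mild preference for topic 0, declining interest after that.
affinity = [0.30, 0.25, 0.20, 0.15, 0.10]
exposure = [0.2] * 5  # the feed starts out perfectly balanced

history = [entropy(exposure)]
for _ in range(30):
    exposure = feedback_round(exposure, affinity)
    history.append(entropy(exposure))

print(f"feed diversity at start: {history[0]:.2f} bits")
print(f"feed diversity after 30 rounds: {history[-1]:.2f} bits")
print("final exposure:", [round(e, 3) for e in exposure])
```

Even a mild initial preference is enough for the feed to drift steadily toward a single topic in this model, because each round's exposure becomes the next round's evidence. Real systems are far more complex, but the sketch shows why no one needs to intend an echo chamber for one to form.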

Beyond the level of individual preference, a more subtle – yet equally concerning – phenomenon is emerging: algorithmic homogenisation. As AI systems are increasingly used to generate content, from news articles (using tools like GPT-3 and newer models) to product descriptions, there's a risk that they will converge on a narrow range of stylistic and thematic conventions, leading to a loss of originality and diversity. This isn't about intentional censorship; it's about the inherent tendency of algorithms to optimise for predictability and conformity. The result could be a world where creativity is stifled and cultural expression becomes increasingly bland and uniform. The rise of AI-generated music, while potentially democratizing music creation, also raises concerns about the standardization of musical styles, and the potential displacement of human musicians. Consider the lawsuits filed by artists against companies using AI to replicate their voices and styles without permission – a clear indication that the creative landscape is being dramatically reshaped [rollingstone.com]. Imagine a future where all news articles read the same, all music sounds the same, and all art looks the same – a chilling prospect for anyone who values originality and innovation. This homogenization isn't limited to creative fields; it extends to scientific research as well, where AI-driven literature reviews can inadvertently steer researchers towards established paradigms, hindering genuinely novel insights.

A Multifaceted Approach to Reclaiming Agency

The challenge, then, is not to reject AI outright, but to harness its power while safeguarding our human agency. This requires a multifaceted approach, encompassing technological innovation, educational reform, and a fundamental shift in our cultural values. Developing AI systems that are more transparent, explainable, and accountable is paramount. ‘Black box’ algorithms are simply unacceptable in contexts where human lives are affected. We need to demand that AI developers prioritize interpretability, allowing us to understand the reasoning behind their systems' recommendations and to identify potential biases. Techniques like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) are promising steps in this direction [arxiv.org], but require wider adoption and refinement. Furthermore, robust mechanisms for auditing and redress are essential, ensuring that those who build and deploy AI systems are held accountable for the outcomes. The EU AI Act represents a step in this direction, but its effectiveness remains to be seen, particularly with regard to enforcement and adaptability to rapidly evolving technologies.
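
To make the idea behind SHAP concrete, here is a minimal sketch that computes exact Shapley values for a tiny, hypothetical loan-scoring function. It does not use the `shap` library or its API. The feature names, the `score` function, and the all-zero baseline are assumptions made purely for illustration, and the brute-force enumeration is only feasible for a handful of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, features):
    """Exact Shapley values for a set function over `features`.

    `value_fn` maps a frozenset of feature names to the model output when
    only those features are "present" (the rest are held at a baseline).
    """
    n = len(features)
    result = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley average.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                total += weight * (value_fn(s | {f}) - value_fn(s))
        result[f] = total
    return result


# Hypothetical applicant and baseline (illustrative values only).
BASELINE = {"income": 0.0, "debt": 0.0, "years_employed": 0.0}
APPLICANT = {"income": 4.0, "debt": 2.0, "years_employed": 3.0}


def score(x):
    """Toy scoring model: linear terms plus an income-debt interaction."""
    return (10 * x["income"] - 5 * x["debt"]
            + 2 * x["years_employed"] - x["income"] * x["debt"])


def value_fn(present):
    """Model output with absent features replaced by their baseline value."""
    x = {k: (APPLICANT[k] if k in present else BASELINE[k]) for k in APPLICANT}
    return score(x)


phi = shapley_values(value_fn, list(APPLICANT))
print({k: round(v, 6) for k, v in phi.items()})
# {'income': 36.0, 'debt': -14.0, 'years_employed': 6.0}
```

The three attributions sum to 28, the difference between the applicant's score and the baseline score, and the interaction penalty is split evenly between income and debt. That accounting property is what makes Shapley-based explanations attractive for audit and redress. Practical tools approximate the calculation, since the number of feature subsets grows exponentially.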

Policy Recommendations:

Algorithmic Impact Assessments: Mandatory impact assessments for all AI systems deployed in high-stakes domains (healthcare, finance, criminal justice) to identify and mitigate potential risks. These assessments should be publicly available.

Data Transparency Regulations: Regulations requiring organizations to disclose the data used to train AI algorithms, enabling independent scrutiny for bias. This must include details about data collection methods, demographic representation, and potential sources of bias.

Right to Explanation: A legal right for individuals to receive a clear and understandable explanation of decisions made by AI systems that affect them, including the factors that contributed to the outcome.

Investment in AI Literacy: Public funding for educational programs to promote AI literacy and critical thinking skills across all age groups, starting from primary school. This should include training on identifying misinformation and evaluating algorithmic bias.

Establishment of an AI Ethics Board: An independent body responsible for developing ethical guidelines for AI development and deployment, and for investigating complaints of algorithmic harm. This board should include diverse stakeholders, including ethicists, technologists, legal experts, and representatives from affected communities.

Algorithmic Auditing Standards: Establishing standardized procedures for auditing AI systems for bias, fairness, and transparency.

However, technological solutions alone are insufficient. We need to reimagine education for the age of AI, focusing on cultivating the skills that AI cannot easily replicate – critical thinking, creativity, problem-solving, emotional intelligence, and ethical reasoning. Education should equip individuals with the ability to question assumptions, to evaluate evidence, and to form their own informed opinions. Incorporating media literacy and computational thinking into the curriculum is crucial, empowering students to understand how algorithms work and to critically assess the information they encounter online. But equally important is fostering a sense of intellectual curiosity and a lifelong love of learning – a capacity for continuous adaptation and self-improvement.

What Does Automated Judgment Mean for Human Agency?

Beyond the practical considerations, the rise of AI raises profound philosophical questions about the nature of human agency, moral responsibility, and the meaning of life. If AI can make decisions that are objectively ‘better’ than those made by humans, does this diminish the value of human judgment? Does it challenge our sense of self-worth and our belief in free will? The ancient philosophical debate between determinism and free will takes on new relevance in the age of AI. If our choices are increasingly influenced – or even dictated – by algorithms, can we still claim to be truly free?

Existentialist thinkers like Jean-Paul Sartre argued that we are “condemned to be free,” meaning that we are fully responsible for our choices and the consequences thereof. But what happens when those choices are made for us by a machine? This echoes the concerns raised by thinkers like Hannah Arendt about the “banality of evil,” suggesting that unthinking adherence to systems can lead to morally reprehensible outcomes. Furthermore, the reliance on AI raises questions about the nature of moral responsibility. If an autonomous vehicle causes an accident, who is to blame? The programmer? The manufacturer? The owner? Or the AI itself? Our existing legal and ethical frameworks are ill-equipped to address these questions. Assigning blame to a non-sentient entity feels intuitively wrong, but holding humans accountable for the actions of AI systems can be equally problematic.

Perhaps the most fundamental challenge posed by AI is to our understanding of what it means to be human. For centuries, we have defined ourselves by our capacity for reason, creativity, and moral judgment. But if AI can replicate these abilities, what distinguishes us from machines? Is there something uniquely human that cannot be reduced to algorithms and data? The answer to this question will determine the kind of future we create.

Designing for Cognitive Resilience

An often overlooked aspect of reclaiming agency is the deliberate introduction of “intentional friction” into our interactions with AI systems. This means resisting the temptation to optimize everything. Instead of seeking the most efficient solution, we should sometimes choose to engage in the effortful process of problem-solving ourselves. This could involve deliberately turning off automated features, seeking out diverse sources of information, or engaging in activities that challenge our cognitive abilities.

For example, rather than relying solely on a navigation app, we could occasionally try to navigate using a map. Instead of accepting the recommendations of a streaming service, we could browse through different genres and artists. Instead of relying on an AI-powered grammar checker, we could take the time to carefully proofread our own writing. These seemingly small acts of resistance can help to maintain our cognitive sharpness and to prevent us from becoming overly reliant on technology. This echoes the Stoic principle of premeditatio malorum – deliberately contemplating potential obstacles to build resilience and prepare for adversity.

The value of effort should not be underestimated. The struggle to overcome challenges, to learn new skills, and to make informed decisions is essential for personal growth and development. In a world increasingly driven by automation, it’s crucial to preserve opportunities for meaningful effort – to ensure that we don’t become passive consumers of AI-generated solutions. We need to actively cultivate a culture that values intellectual curiosity, critical thinking, and a willingness to embrace complexity.

The Pursuit of Meaning in an Algorithmic Age

Ultimately, the tension between AI and human agency is not simply about efficiency versus autonomy. It's about the very purpose of human life. AI is a powerful tool, capable of optimising processes and solving problems with unparalleled speed and accuracy. But it cannot provide us with meaning, purpose, or a sense of belonging. These are uniquely human concerns, and they require the exercise of our own judgment, creativity, and moral reasoning. The relentless pursuit of optimisation risks stripping away the very qualities that make life meaningful.

As we navigate this new era of increasingly sophisticated AI, we must remember that technology is a means to an end, not an end in itself. Our goal should not be simply to optimise our lives, but to live them more fully, more authentically, and more meaningfully. This requires embracing the challenges of critical reflection, reclaiming our agency, and safeguarding the uniquely human qualities that make life worth living. The algorithmic echo chamber may be alluring, but true fulfillment lies beyond its walls, in the vibrant, chaotic, and ultimately unpredictable world of human experience. The future isn't about humans versus machines; it’s about humans with machines – but only if we choose to remain firmly in control of our own minds, and consciously cultivate the skills and values that define our humanity. It demands a continual reassessment of our relationship with technology, and a commitment to building a future where human flourishing, not algorithmic efficiency, is the ultimate goal.

Citations

- wired.com: For context on SHADES dataset and AI bias.

- wired.com: For the example of "Massive Blue" and AI intrusion into online spaces.

- wired.com: For the DHS YouTube ad campaign example.

- pewresearch.org: For statistics on news consumption via social media.

- fda.gov: For information on the FDA's approval of AI diagnostic systems.

- theverge.com: For details on the Stable Diffusion copyright lawsuit.

- forrester.com: For data on consumer trust in AI.

- nature.com: For research on personalised news feeds and political radicalisation.

- arxiv.org: For information on LIME and SHAP explainable AI techniques.
