JavaScript Lambda Functions Using a Bun Custom Runtime

I've previously tried out Lambda functions with a custom runtime using Deno, and it had great security and convenience benefits. But Deno isn't the only alternative to the Node.js runtime. Bun is a more recent entrant to the space, but it has an impressive number of features, including running TypeScript directly without a separate transpile step, and it makes a lot of claims around speed. Bun also has everything needed for a custom Lambda runtime buried in its GitHub repository.

Custom Lambda Runtimes

Custom runtimes are commonly distributed as Lambda layers, and they use the OS-only Lambda runtimes as their base. The OS-only runtimes, Amazon Linux 2 (provided.al2) and Amazon Linux 2023 (provided.al2023), are the foundation the rest of the runtime builds on. A custom runtime has to implement the Lambda Runtime API contract: an executable named bootstrap that repeatedly fetches the next invocation event from the Lambda service, runs the handler, and posts the result (or an error) back.
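To make that contract more concrete, here is a minimal sketch of the polling loop a bootstrap performs. The endpoint paths, the Lambda-Runtime-Aws-Request-Id header, and the errorMessage/errorType fields come from the Lambda Runtime API; the function names, the injectable fetch parameter, and the simplified error reporting are my own assumptions for illustration, not how bun-lambda actually implements its runtime.

```typescript
// Minimal sketch of the Lambda Runtime API loop a custom runtime's bootstrap runs.
// Endpoint paths and header names follow the Runtime API; everything else
// (names, injectable fetch, simplified error reporting) is an assumption.

type FetchLike = (url: string, init?: RequestInit) => Promise<Response>;
type Handler = (event: unknown) => Promise<unknown>;

const RUNTIME_API_VERSION = "2018-06-01";

// Builds the Runtime API URLs for a host:port and an invocation's request ID.
function runtimeUrls(api: string, requestId = "") {
  const base = `http://${api}/${RUNTIME_API_VERSION}/runtime/invocation`;
  return {
    next: `${base}/next`,
    response: `${base}/${requestId}/response`,
    error: `${base}/${requestId}/error`,
  };
}

// Handles one invocation: get the next event, run the handler, report the outcome.
async function processOne(api: string, handler: Handler, doFetch: FetchLike = fetch): Promise<string> {
  // This request blocks until Lambda has an event for this execution environment.
  const next = await doFetch(runtimeUrls(api).next);
  const requestId = next.headers.get("Lambda-Runtime-Aws-Request-Id") ?? "";
  const urls = runtimeUrls(api, requestId);
  const event: unknown = await next.json();

  try {
    const result = await handler(event);
    await doFetch(urls.response, { method: "POST", body: JSON.stringify(result) });
  } catch (err) {
    const message = err instanceof Error ? err.message : String(err);
    await doFetch(urls.error, {
      method: "POST",
      body: JSON.stringify({ errorMessage: message, errorType: "HandlerError" }),
    });
  }
  return requestId;
}

// A bootstrap repeats this forever; Lambda freezes the environment between events.
async function runLoop(api: string, handler: Handler): Promise<never> {
  for (;;) {
    await processOne(api, handler);
  }
}
```

In a real bootstrap, the host and port come from the AWS_LAMBDA_RUNTIME_API environment variable, which is what you would pass as the api argument to runLoop.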

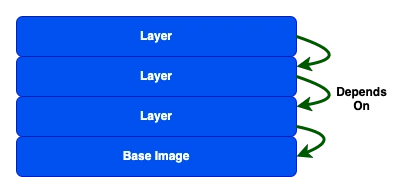

I think of Lambda runtimes and layers like Docker or other OCI-compatible container images. I wouldn't be surprised if that's actually how things work under the hood, considering Lambda supports container images, but I have no evidence that the Lambda service works that way.

Containers are made up of different layers. If you've ever pulled down a container image from Docker Hub, GitHub, or elsewhere, you probably saw multiple progress bars. Each of those was for a layer of that image. Each layer is generally dependent on the layer before it, and when loaded on top of each other, they make the complete container image. Having multiple layers and the dependencies between them means you don't have to rebuild the entire container image if you make a change. Instead, only the layer you changed and any layers that depend on it need to be rebuilt.

The Lambda OS-only runtime is effectively the base image for a container, which has the Lambda layers applied on top of it, with the source code you provide as the last layer applied.

This is an oversimplification of container images, and not something you need to know if you want to use a custom runtime, but I've found this visualization useful when troubleshooting complex Lambda situations.

Building & Deploying the Bun Layer

The README for Bun's bun-lambda package provides the commands needed to build and deploy the Lambda layer to your AWS account. You need Bun and the AWS CLI installed, and your terminal configured with credentials for the AWS account where you want the layer deployed.

By default, the commands build and deploy a layer for arm64 Lambdas and name it "bun". Since Lambda still defaults to the x86_64 architecture, I suggest a slightly different set of commands. The layer's architecture matters because it determines which build of the Bun binary is downloaded, so I recommend deploying layers for both x86_64 and arm64, using a naming convention similar to the AWS Parameters and Secrets Lambda Extension.

Instead of running the publish-layer script with the defaults shown in the README, run the commands below. The script didn't correctly detect my configured AWS region, so I suggest explicitly passing the AWS region (or regions) you want the layer published to.

```shell
bun run publish-layer --layer bun \
  --arch x64 \
  --region us-east-1 us-east-2

bun run publish-layer --layer bun-arm64 \
  --arch aarch64 \
  --region us-east-1 us-east-2
```

After running these commands, I had four Lambda layers in my AWS account, and the script output the ARN for each of them:

```text
arn:aws:lambda:us-east-1:000000000000:layer:bun:1
arn:aws:lambda:us-east-1:000000000000:layer:bun-arm64:1
arn:aws:lambda:us-east-2:000000000000:layer:bun:1
arn:aws:lambda:us-east-2:000000000000:layer:bun-arm64:1
```
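With both layers deployed, each function needs the one matching its architecture. Here's a small hypothetical helper that picks the layer using the naming convention above and builds its ARN; the helper's name and the assumption that you're in the standard aws partition are mine.

```typescript
// Hypothetical helper: picks the Bun layer matching a function's architecture,
// using the naming convention from the commands above, and builds its ARN.
// Assumes the standard "aws" partition (not aws-cn or aws-us-gov).
type LambdaArchitecture = "x86_64" | "arm64";

const BUN_LAYER_NAMES: Record<LambdaArchitecture, string> = {
  x86_64: "bun",
  arm64: "bun-arm64",
};

function bunLayerArn(
  region: string,
  accountId: string,
  architecture: LambdaArchitecture,
  version: number,
): string {
  return `arn:aws:lambda:${region}:${accountId}:layer:${BUN_LAYER_NAMES[architecture]}:${version}`;
}

console.log(bunLayerArn("us-east-2", "000000000000", "arm64", 1));
// arn:aws:lambda:us-east-2:000000000000:layer:bun-arm64:1
```

The architecture values mirror the ones Lambda uses for functions (x86_64 and arm64), so the same value you set on the function can select its layer.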

Creating the Lambda Function

The process of creating the Lambda function is mostly the same as you're used to, but with two important differences:

- You have to use an OS-only runtime (such as Amazon Linux 2023) and add the bun or bun-arm64 Lambda layer to the function, matching the architecture you chose for it.

- You have to return a Response object from your function handler instead of a plain JavaScript object.

One of the quirks of the Bun runtime is that handler functions must return an instance of the Response class, unless you're working with WebSockets, which I'll get to in a moment.

Below is a basic function handler that returns a JSON string with a message:

```javascript
export async function handler(request, server) {
  return new Response(JSON.stringify({ message: "Hello from Lambda!" }));
}
```
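Because the handler only deals in standard Request and Response objects, you can exercise it without Lambda at all. This sketch adds TypeScript types to the handler above and calls it with a synthetic request; checkHandler is a hypothetical name, and the unused second parameter is kept (renamed _server) only to mirror the original signature.

```typescript
// The handler from above with types added; _server mirrors the original
// second parameter and is unused.
async function handler(request: Request, _server?: unknown): Promise<Response> {
  return new Response(JSON.stringify({ message: "Hello from Lambda!" }));
}

// Calls the handler directly with a synthetic request; no Lambda or server needed.
async function checkHandler(): Promise<string> {
  const response = await handler(new Request("http://localhost/"));
  const body = (await response.json()) as { message: string };
  return `${response.status} ${body.message}`;
}

checkHandler().then((line) => console.log(line)); // 200 Hello from Lambda!
```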

The Bun Server Format

Bun has a built-in, high-performance HTTP server with WebSocket support. If a file's default export is an object with a fetch method, bun run starts a server with it, and the Bun Lambda runtime accepts the same format. Writing your function this way lets you test your code locally without setting up containers or additional tools.

Below is the code sample Bun provides for a Lambda that will respond to Amazon API Gateway requests:

```typescript
export default {
  async fetch(request: Request): Promise<Response> {
    console.log(request.headers.get("x-amzn-function-arn"));
    // ...
    return new Response("Hello from Lambda!", {
      status: 200,
      headers: {
        "Content-Type": "text/plain",
      },
    });
  },
};
```

The Bun custom Lambda runtime does not support the routes option that Bun v1.2.3 added to the Bun HTTP server. It only supports the catch-all fetch handler and the websocket handler.
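If you need more than one path, one workaround is to route by hand inside fetch. This is a sketch rather than a bun-lambda feature: the /hello and /echo paths are hypothetical, and it assumes the request URL's pathname matches the path your API Gateway route receives, which may include a stage prefix depending on how the API is set up. The object is assigned to a const so it can be called directly; in the deployed file it would be the default export.

```typescript
// Sketch: hand-rolled routing inside the catch-all fetch handler, because the
// Lambda runtime doesn't support Bun's routes option. Paths are hypothetical.
const app = {
  async fetch(request: Request): Promise<Response> {
    const { pathname } = new URL(request.url);

    switch (`${request.method} ${pathname}`) {
      case "GET /hello":
        return new Response("Hello from Lambda!", {
          headers: { "Content-Type": "text/plain" },
        });
      case "POST /echo":
        // Echo the request body back with the same content type.
        return new Response(await request.text(), {
          headers: { "Content-Type": request.headers.get("Content-Type") ?? "text/plain" },
        });
      default:
        return new Response("Not Found", { status: 404 });
    }
  },
};

// In the deployed handler file, this object would be the module's default export:
// export default app;
```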

I haven't dug into the WebSocket functionality; it seems like it is intended for use with API Gateway WebSocket APIs, but that isn't something I have had the occasion to use.

It may look like the Bun custom runtime is focused on API Gateway integrations, but it supports other event sources too. Here's what a basic handler might look like for SQS, SNS, S3, EventBridge, and other services, with the event read from the JSON request body:

```typescript
export default {
  async fetch(request: Request): Promise<Response> {
    const event = await request.json();
    // ...
    return new Response();
  },
};
```
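For an SQS-triggered function, the handler might unpack the records like this. It's a sketch that assumes the event arrives as the JSON request body, as in the example above, and it only models the messageId and body fields of an SQS record. The sqsBodies helper is hypothetical, and the sketch doesn't attempt partial batch responses because I haven't verified how the runtime maps the returned Response back to a function result.

```typescript
// Sketch of an SQS-triggered handler in the Bun server format. Assumes the event
// arrives as the JSON request body; only a couple of SQS record fields are modeled.
interface SqsRecord {
  messageId: string;
  body: string;
}

interface SqsEvent {
  Records?: SqsRecord[];
}

// Hypothetical helper: returns the message bodies, or [] if there are no records.
function sqsBodies(event: SqsEvent): string[] {
  return (event.Records ?? []).map((record) => record.body);
}

const app = {
  async fetch(request: Request): Promise<Response> {
    const event = (await request.json()) as SqsEvent;
    for (const body of sqsBodies(event)) {
      // Logging stands in for real message processing here.
      console.log("processing message:", body);
    }
    return new Response();
  },
};

// In the deployed handler file: export default app;
```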

Testing Locally

If you use the Bun server format, you can run your handler function locally using Bun and your browser or an API testing tool.

```text
> bun run handler.ts
Started development server: http://localhost:3000
```

An HTTP server starts on localhost, and you can use whatever tools you like to send requests to it. If you've used the Lambda testing capabilities of the SAM CLI, this is similar, except the Docker container the SAM CLI uses gets you a little closer to the real AWS environment.

The Bun server runs your code for any request, whether it's a GET, a POST, or another method. If you're trying to mimic the AWS environment closely, you need to construct the right HTTP request body so that what your function receives matches what it would receive in AWS.
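For a non-HTTP event source, one way to do that is to POST a sample event to the local server. These hypothetical helpers wrap message bodies in a minimal SQS-shaped event and send it; real SQS events carry more fields, so copying a sample event from the AWS documentation or the Lambda console is safer if your code reads them. The default URL assumes the localhost:3000 address from the output above.

```typescript
// Hypothetical local-testing helpers: build a minimal SQS-shaped event and POST it
// to the Bun dev server. Real SQS events include more fields (attributes,
// eventSourceARN, awsRegion, and so on).
type FetchLike = (url: string, init?: RequestInit) => Promise<Response>;

interface SqsTestRecord {
  messageId: string;
  body: string;
  eventSource: "aws:sqs";
}

function sampleSqsEvent(bodies: string[]): { Records: SqsTestRecord[] } {
  return {
    Records: bodies.map((body, i) => ({
      messageId: `local-${i + 1}`,
      body,
      eventSource: "aws:sqs" as const,
    })),
  };
}

// Sends the event as a JSON POST and returns the HTTP status code.
async function sendEvent(
  event: unknown,
  url = "http://localhost:3000/",
  doFetch: FetchLike = fetch,
): Promise<number> {
  const response = await doFetch(url, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(event),
  });
  return response.status;
}

// With `bun run handler.ts` running in another terminal:
// sendEvent(sampleSqsEvent(["hello", "world"])).then((status) => console.log(status));
```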

It's handy for testing something locally, but remember that this isn't a full test harness and doesn't mock any AWS services. You either need to mock those yourself or use a tool like LocalStack.

Is it Fast?

In a very unscientific test, I created a Bun Lambda function that creates a JWT with the function ARN and the current ISO 8601 timestamp as the payload. It's not much computational work, but the function does everything itself and doesn't rely on outside systems. I invoked the function several times, waited a while, and repeated the process to make sure I captured a few cold starts for initialization numbers.
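The benchmark code isn't part of this post, but a handler doing similar work might look like the sketch below. It signs an HS256 JWT with the Web Crypto API (crypto.subtle), which Bun provides globally. The secret, the arn and timestamp claim names, and reading the ARN from the x-amzn-function-arn header (as Bun's example above does) are assumptions for illustration.

```typescript
// Hypothetical sketch: a handler that signs an HS256 JWT containing the function
// ARN and the current ISO 8601 timestamp, using the Web Crypto API.
// SECRET is a placeholder; a real function would load it from a secret store.
const SECRET = "replace-me";
const encoder = new TextEncoder();

// Base64url: standard base64 with a URL-safe alphabet and no padding.
function base64url(bytes: Uint8Array): string {
  let binary = "";
  for (let i = 0; i < bytes.length; i++) {
    binary += String.fromCharCode(bytes[i]);
  }
  return btoa(binary).replace(/\+/g, "-").replace(/\//g, "_").replace(/=+$/, "");
}

async function signJwt(payload: Record<string, unknown>, secret: string): Promise<string> {
  const header = base64url(encoder.encode(JSON.stringify({ alg: "HS256", typ: "JWT" })));
  const body = base64url(encoder.encode(JSON.stringify(payload)));
  const signingInput = `${header}.${body}`;
  const key = await crypto.subtle.importKey(
    "raw",
    encoder.encode(secret),
    { name: "HMAC", hash: "SHA-256" },
    false,
    ["sign"],
  );
  const signature = await crypto.subtle.sign("HMAC", key, encoder.encode(signingInput));
  return `${signingInput}.${base64url(new Uint8Array(signature))}`;
}

const app = {
  async fetch(request: Request): Promise<Response> {
    // Bun's example reads the function ARN from this request header.
    const functionArn = request.headers.get("x-amzn-function-arn") ?? "unknown";
    // "timestamp" is a custom claim; the registered "iat" claim expects numeric seconds.
    const token = await signJwt({ arn: functionArn, timestamp: new Date().toISOString() }, SECRET);
    return new Response(JSON.stringify({ token }), {
      headers: { "Content-Type": "application/json" },
    });
  },
};
```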

I had three cold starts in my dataset, and initialization averaged 629.48ms; that is the extra time each cold start added. Across 46 invocations, the average duration including initialization time was 75.66ms. Excluding initialization and looking only at the handler's execution time, the average was 34.61ms.
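Assuming the 75.66ms figure is the total of all handler durations plus all initialization durations, divided by the 46 invocations, the three numbers are consistent with each other:

$$
\frac{46 \times 34.61\,\text{ms} + 3 \times 629.48\,\text{ms}}{46}
= \frac{1592.06\,\text{ms} + 1888.44\,\text{ms}}{46}
= \frac{3480.50\,\text{ms}}{46}
\approx 75.66\,\text{ms}
$$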

I adjusted the code to work in a standard Node.js 22 Lambda and followed the same procedure. The average execution time was 1.99ms, and the cold starts averaged 134.65ms. I know the Lambda team has a lot of optimizations in place for the managed runtimes, and I don't know how much of this difference comes from those optimizations versus differences between Node.js and Bun.

I may not be able to get super precise numbers, but I'm considering doing a more rigorous comparison between Node.js, Deno, and Bun Lambdas in the future.

Wrapping it Up

Regardless of the numbers, the Bun Lambda is still fast, and with an easier way to test it locally, the trade-off may be worthwhile. Bun has excellent Node.js compatibility and a lot of features that improve the developer experience. I've used it for some automation scripts and local data transformations, and I'd be open to using it in production applications at this point, even in a Lambda.