How My AI Image Search Engine Learned to Love Porn

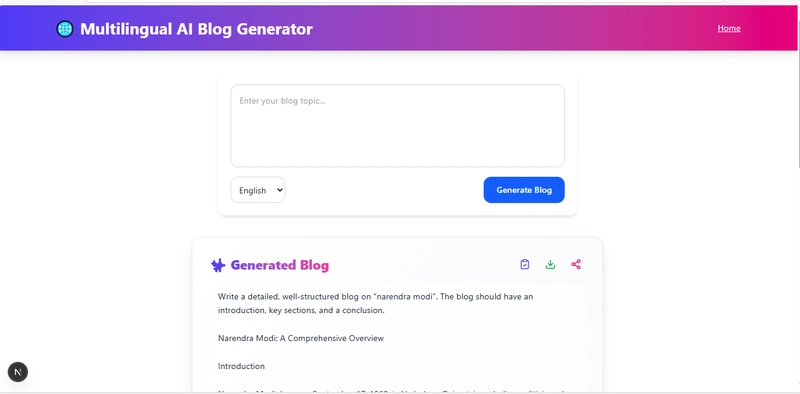

When I launched Instapics, the goal was simple: create a platform where users could discover visual content through their interactions and a search engine. The algorithm measures screen time, click rate, saves and votes to score content. It recommends the highest-scoring content and also builds a personalized ranking for each user. The idea was that you always find something interesting when you open the page: a diverse feed of art, funny cats, cars or whatever cool stuff you like.
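To make the scoring idea concrete, here is a minimal sketch of how the four signals could be combined into one number. It is not the actual Instapics code: the `Engagement` fields, the `WEIGHTS` values and the normalization by impressions are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Engagement:
    """Raw counters for one image (hypothetical field names)."""
    screen_time_s: float  # total seconds the image was on screen
    impressions: int      # how often the image was shown
    clicks: int
    saves: int
    votes: int            # net votes (upvotes minus downvotes)


# Assumed weights, picked only for this example.
WEIGHTS = {"screen_time": 0.1, "click_rate": 1.0, "save_rate": 2.0, "vote_rate": 1.0}


def score(e: Engagement) -> float:
    """Weighted sum of per-impression engagement signals."""
    if e.impressions == 0:
        return 0.0  # nothing measured yet
    avg_screen_time = e.screen_time_s / e.impressions
    click_rate = e.clicks / e.impressions
    save_rate = e.saves / e.impressions
    vote_rate = e.votes / e.impressions
    return (
        WEIGHTS["screen_time"] * avg_screen_time
        + WEIGHTS["click_rate"] * click_rate
        + WEIGHTS["save_rate"] * save_rate
        + WEIGHTS["vote_rate"] * vote_rate
    )


popular = Engagement(screen_time_s=500, impressions=100, clicks=20, saves=5, votes=10)
ignored = Engagement(screen_time_s=100, impressions=100, clicks=5, saves=0, votes=1)
ranking = sorted([ignored, popular], key=score, reverse=True)
```

Dividing by impressions keeps an image from ranking high just because it was shown often. Whatever the real weights are, a score like this rewards whatever users spend time on, with no notion of what kind of content that is.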

But reality struck hard. As the platform grew, so did an unexpected problem: users weren’t searching for art, landscapes, memes or anime. They overwhelmingly searched for explicit content, and the algorithm dutifully amplified it. The top keywords? Let’s just say they weren’t “sunset” or “puppies”.

The algorithm uses CLIP to calculate and store embeddings for images. At search time it compares those image embeddings with the embedding of the keyword using cosine similarity and returns the closest matches. But it is also coded to prioritize content similar to what users like, and to expand its index based on what users like. As of now, it seems to have indexed about 2,000,000 images from every NSFW subreddit it could find.
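The lookup step can be sketched in a few lines. This is an illustration under assumptions, not the site's code: the toy 3-dimensional vectors stand in for real CLIP embeddings (which have hundreds of dimensions), and `search` and `index` are hypothetical names.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat an all-zero vector as matching nothing
    return dot / (norm_a * norm_b)


def search(query_embedding, image_embeddings, top_k=3):
    """Return the top_k (image_id, similarity) pairs, best match first."""
    scored = [
        (image_id, cosine_similarity(query_embedding, embedding))
        for image_id, embedding in image_embeddings.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy vectors standing in for stored CLIP image embeddings.
index = {
    "cat.jpg": [0.9, 0.1, 0.0],
    "car.jpg": [0.0, 0.2, 0.9],
    "kitten.jpg": [0.8, 0.3, 0.1],
}
query = [1.0, 0.0, 0.0]  # stand-in for the embedded search keyword
results = search(query, index, top_k=2)
```

A linear scan like this is fine for a demo. At millions of images a real system would more likely use a vector index, but the ranking principle stays the same.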

At first I intervened manually in the database and increased the search counter for keywords like “dogs”, “memes” and “landscapes”. It was just stupid when new users opened the site and the first suggested search keywords they saw were about how to drill anime girls.

A solution was needed. And it was needed fast. So I added a setting to show or hide NSFW images. Too bad that CLIP has no idea which images are SFW and which are not. So I experimented with different AI NSFW detectors and let them run over all the images. (I guess it is still running…)

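But even with more than 90% of images classified correctly, the site is so massively flooded with NSFW that the results are still bs. With 10% false negatives on a database where 95% is NSFW, 9.5% of all images are NSFW images that slip through the filter. That is more than the entire 5% that is actually SFW.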
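The base-rate effect is easy to check with a few lines of arithmetic. The 95% NSFW share and the 10% false negative rate come from the numbers above. The 10% false positive rate (SFW images wrongly hidden) is an assumption, since I never measured it separately.

```python
def nsfw_share_after_filter(nsfw_fraction, false_negative_rate, false_positive_rate):
    """Fraction of images shown in the SFW view that are actually NSFW."""
    leaked_nsfw = nsfw_fraction * false_negative_rate           # NSFW labeled as SFW
    kept_sfw = (1.0 - nsfw_fraction) * (1.0 - false_positive_rate)  # SFW labeled as SFW
    shown = leaked_nsfw + kept_sfw
    if shown == 0.0:
        return 0.0  # the filter hides everything
    return leaked_nsfw / shown


# 95% of the database is NSFW, the detector misses 10% of it,
# and (assumed) wrongly hides 10% of the SFW images.
current = nsfw_share_after_filter(0.95, 0.10, 0.10)
print(f"NSFW share of the 'SFW' view: {current:.1%}")  # 67.9%

# Same detector on a database that is only half NSFW.
balanced = nsfw_share_after_filter(0.50, 0.10, 0.10)
print(f"With a 50/50 database: {balanced:.1%}")  # 10.0%
```

So with these numbers, about two out of three images in the "safe" view would still be NSFW. The same detector looks far better on a balanced database, which shows that the mix of the data matters as much as the accuracy of the classifier.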

At this point I am not sure how to continue. The project has been abandoned for a few months now. I don’t want to shut it down. I was really proud of my algorithm. I guess it is not its fault. It was coded to deliver whatever users want to see.

Maybe manually flooding the database with SFW content can help reduce this problem. But for now I have different plans for different projects…