Data Science: From School to Work, Part III

Good practices for Python error handling and logging

The post Data Science: From School to Work, Part III appeared first on Towards Data Science.

Introduction

Writing code is about solving problems, but not every problem is predictable. In the real world, your software will encounter unexpected situations: missing files, invalid user inputs, network timeouts, or even hardware failures. This is why handling errors isn’t just a nice-to-have; it’s a critical part of building robust and reliable applications for production.

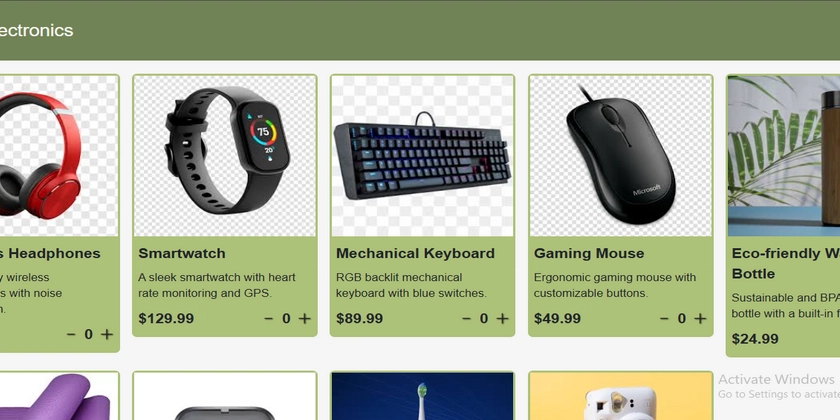

Imagine an e-commerce website. A customer places an order, but during checkout a database connection issue occurs. Without proper error handling, this issue could crash the application, leaving the customer frustrated and the transaction incomplete. Worse, it might create inconsistent data, leading to even bigger problems down the line. Error handling is therefore a fundamental skill for any Python developer who writes code for production.

However, good error handling also goes hand in hand with a good logging system. You rarely have access to the console when code is running in production, so there is little chance that anyone will see the output of your print statements. To monitor your application and investigate incidents, you need to set up a logging system. This is where the loguru package, which I introduce in this article, comes into play.

I – How to handle Python errors?

In this part, I present best practices for error handling in Python, from try-except blocks and the raise statement to the finally block. These concepts will help you write cleaner, more maintainable code that is suitable for a production environment.

The try-except blocks

The try-except block is the main tool for handling errors in Python. It allows you to catch potential errors during code execution and prevent the program from crashing.

```python
def divide(a, b):
    try:
        return a / b
    except ZeroDivisionError:
        print("Only Chuck Norris can divide by 0!")
```

In this trivial function, the try-except block intercepts the error caused by a division by 0. The code in the try block is executed. If an error occurs, the except block checks whether it is a ZeroDivisionError and prints a message. Only this type of error is caught, however. If b is a string, for example, a TypeError is raised and is not handled. To cover this case, you can add an except TypeError clause. It is therefore important to anticipate the errors your code can raise.

The function becomes:

```python
def divide(a, b):
    try:
        return a / b
    except ZeroDivisionError:
        print("Only Chuck Norris can divide by 0!")
    except TypeError:
        print("Do not compare apples and oranges!")
```

Raise an exception

You can use the raise statement to manually raise an exception. This is useful if you want to report a user-defined error or impose a specific restriction on your code.

```python
def divide(a, b):
    if b == 0:
        raise ValueError("Only Chuck Norris can divide by 0!")
    return a / b


try:
    result = divide(10, 0)
except ValueError as e:
    print(f"Error: {e}")
except TypeError:
    print("Do not compare apples and oranges!")
```

In this example, a ValueError is raised explicitly when the divisor is zero, which lets you control the error conditions yourself. The printed message is “Error: Only Chuck Norris can divide by 0!”.
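Sometimes you translate a low-level error into a more meaningful one. In that case, raise ... from keeps the original exception attached as __cause__, so its traceback is not lost. The following is a minimal sketch. The PaymentError class and the parse_amount function are hypothetical names used only for illustration:

```python
class PaymentError(Exception):
    """Hypothetical domain-specific error, for illustration only."""


def parse_amount(raw: str) -> float:
    try:
        return float(raw)
    except ValueError as e:
        # Chain the original ValueError so it stays available as __cause__
        raise PaymentError(f"Invalid amount: {raw!r}") from e


try:
    parse_amount("ten euros")
except PaymentError as e:
    print(f"Error: {e}")                # Error: Invalid amount: 'ten euros'
    print(type(e.__cause__).__name__)   # ValueError
```

The caller only needs to know about PaymentError. Anyone debugging the traceback still sees the underlying ValueError.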

Some of the most common exceptions

ValueError: The type of a value is correct, but the value itself is invalid.

```python
import math

try:
    number = math.sqrt(-10)
except ValueError:
    print("It's too complex to be real!")
```

KeyError: Trying to access a key that doesn’t exist in a dictionary.

```python
data = {"name": "Alice"}

try:
    age = data["age"]
except KeyError:
    print("Never ask a lady her age!")
```

IndexError: Trying to access a non-existent index in a list.

```python
items = [1, 2, 3]

try:
    print(items[3])
except IndexError:
    print("You forgot that indexing starts at 0, didn't you?")
```

TypeError: Performing an operation on incompatible types.

```python
try:
    result = "text" + 5
except TypeError:
    print("Do not compare apples and oranges!")
```

FileNotFoundError: Trying to open a non-existent file.

```python
try:
    with open("notexisting_file.txt", "r") as file:
        content = file.read()
except FileNotFoundError:
    print("Are you sure of your path?")
```

Custom Error: You can raise predefined exceptions, but you can also define your own exception classes:

```python
class CustomError(Exception):
    pass


try:
    raise CustomError("This is a custom error")
except CustomError as e:
    print(f"Caught error: {e}")
```

Clean with the finally statement

The finally block is executed in every case, whether or not an error has occurred. It is often used to perform cleanup actions, such as closing a database connection or releasing resources.
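The sketch below records which blocks run, to show that finally executes even when the except block returns early. The process function and the events list are hypothetical and serve only this illustration:

```python
def process(value, events):
    """Record which blocks run, to show that finally always executes."""
    try:
        events.append("try")
        result = 10 / value
    except ZeroDivisionError:
        events.append("except")
        return None
    finally:
        # Runs on success, on a handled error, and even after the return above
        events.append("finally")
    events.append("after")
    return result


events = []
print(process(0, events), events)  # None ['try', 'except', 'finally']
```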

```python
import sqlite3

conn = None
try:
    conn = sqlite3.connect("users_db.db")  # Connect to a database
    cursor = conn.cursor()
    cursor.execute("SELECT * FROM users")  # Execute a query
    results = cursor.fetchall()  # Get the result of the query
    print(results)
except sqlite3.DatabaseError as e:
    print("Database error:", e)
finally:
    print("Closing the database connection.")
    if conn is not None:
        conn.close()  # Ensures the connection is closed
```

Best practices for error handling

- Catch specific exceptions: Avoid generic except blocks (a bare except: or except Exception), as they may mask unexpected errors. Prefer specifying the exception:

```python
# Bad practice
try:
    result = 10 / 0
except Exception as e:
    print(f"Error: {e}")

# Good practice
try:
    result = 10 / 0
except ZeroDivisionError as e:
    print(f"Error: {e}")
```

- Provide explicit messages: Add clear and descriptive messages when raising or handling exceptions.

- Avoid silent failures: If you catch an exception, ensure it is logged or re-raised so it doesn’t go unnoticed.

```python
import logging

logging.basicConfig(level=logging.ERROR)

try:
    result = 10 / 0
except ZeroDivisionError:
    logging.error("Division by zero detected.")
```

- Use else and finally blocks: The else block runs only if no exception is raised in the try block, while the finally block always runs.

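```python
import logging

# INFO level so the info messages are shown
# (basicConfig has no effect if logging is already configured)
logging.basicConfig(level=logging.INFO)

try:
    result = 10 / 2
except ZeroDivisionError:
    logging.error("Division by zero detected.")
else:
    logging.info(f"Success: {result}")
finally:
    logging.info("End of processing.")
```

II – How to handle Python logs?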

Good error handling is one thing, but if no one knows that an error has occurred, the whole point is lost. As explained in the introduction, the console is rarely consulted, or even visible, when a program runs in production, so no one will see the output of print. Good error handling must therefore be accompanied by a good logging system.
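With the standard logging module, Logger.exception() helps you avoid silent failures inside an except block: it logs at the ERROR level and appends the traceback. The following is a minimal sketch. The "checkout" logger name, the safe_divide function and the in-memory stream are illustrative choices, not part of the original example:

```python
import io
import logging

# In-memory stream so the log output can be inspected (illustration only)
stream = io.StringIO()
checkout_logger = logging.getLogger("checkout")
checkout_logger.setLevel(logging.ERROR)
checkout_logger.addHandler(logging.StreamHandler(stream))
checkout_logger.propagate = False  # keep the demo output out of the root logger


def safe_divide(a, b):
    try:
        return a / b
    except ZeroDivisionError:
        # Logs at ERROR level and appends the current traceback
        checkout_logger.exception("Division failed for a=%s, b=%s", a, b)
        return None


safe_divide(10, 0)
print(stream.getvalue())
```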

What are logs?

Logs are records of messages generated by a program to track the events that occur during its execution. These messages may contain information about errors, warnings, successful actions, process milestones or other relevant events. Logs are essential for debugging, tracking performance and monitoring the health of an application. They allow developers to understand what is going on in a program without having to interrupt its execution, making it easier to solve problems and continuously improve the software.

The loguru package

Python already has a native logging module: logging. But we prefer the loguru package, which is much simpler to use and easier to configure. In fact, a complete output format is preconfigured out of the box.

```python
from loguru import logger

logger.debug("A pretty debug message!")
```

All the important elements are included directly in the message:

- Time stamp

- Log level, indicating the seriousness of the message.

- File location: module, function and line number. In this example, the module is __main__ because the script was executed directly from the command line, and the function is <module> because the log call is not located in a class or function.

- The message.

The different logging levels

There are several log levels to reflect the importance of the message displayed (which is harder to express with print). Each level has a name and an associated number:

- TRACE (5): used to record detailed information on the program’s execution path for diagnostic purposes.

- DEBUG (10): used by developers to record messages for debugging purposes.

- INFO (20): used to record information messages describing normal program operation.

- SUCCESS (25): similar to INFO, but used to indicate the success of an operation.

- WARNING (30): used to indicate an unusual event that may require further investigation.

- ERROR (40): used to record error conditions that have affected a specific operation.

- CRITICAL (50): used to record error conditions that prevent a main function from working.

The package naturally applies different formatting depending on the level used:

```python
from loguru import logger

logger.trace("A trace message.")
logger.debug("A debug message.")
logger.info("An information message.")
logger.success("A success message.")
logger.warning("A warning message.")
logger.error("An error message.")
logger.critical("A critical message.")
```

The trace message is not displayed because loguru's default minimum level is DEBUG, so all messages at lower levels are ignored.

You can define a new log level with the level method and then use it with the log method:

```python
logger.level("FATAL", no=60, color="", icon="!!!")

logger.log("FATAL", "A FATAL event has just occurred.")
```

- name: the name of the log level.

- no: the corresponding severity value (must be an integer).

- color: the color markup.

- icon: the level icon.

The logger configuration

To reconfigure the logger, remove the existing handler with the remove method and add a new one with the add method. The add method takes the following arguments:

- sink [mandatory]: specifies the destination of the messages produced by the logger. The default handler writes to sys.stderr (the standard error output). You can also store all output in a “.log” file (unless you have a log collector).

- level: sets the minimum logging level for the handler.

- format: defines a custom format for your logs. To keep the coloring of the logs in the terminal, the colors must be specified in the format (see the example below).

- filter: determines whether a log should be recorded or not.

- colorize: takes a boolean value and determines whether terminal coloring is activated.

- serialize: outputs the log in JSON format when set to True.

- backtrace: determines whether the exception trace should extend beyond the point at which the error was caught, to facilitate troubleshooting.

- diagnose: determines whether variable values should be displayed in the exception trace. This option must be set to False in production environments so that no sensitive information is leaked.

- enqueue: if activated, log records are placed in a queue before reaching the sink, which avoids conflicts when several processes write to the same target.

- catch: if set to True (the default), errors that occur while the sink handles a message are caught and displayed on the standard error instead of crashing the application.

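```python
import sys

from loguru import logger

logger_format = (
    "<green>{time:YYYY-MM-DD HH:mm:ss.SSS}</green> | "
    "<level>{level: <8}</level> | "
    "<cyan>{name}</cyan>:<cyan>{function}</cyan>:<cyan>{line}</cyan> - "
    "<level>{message}</level>"
)

logger.remove()  # Remove the default handler
logger.add(sys.stderr, format=logger_format)
```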

Note:

Colors disappear in a file. Terminal colors rely on special characters (called ANSI codes), and loguru does not write this formatting to files by default.

Add context to logs

For complex applications, it can be useful to add further information to the logs to enable sorting and facilitate troubleshooting.

For example, if a user modifies a database, it can be useful to log the user ID along with the change information.

Before you start recording context data, you need to make sure that the {extra} directive is included in your custom format. This variable is a Python dictionary that contains context data for each log entry (if applicable).

Here is an example of a customization where an extra user_id field is added:

```python
import sys

from loguru import logger

logger_format = (
    "<green>{time:YYYY-MM-DD HH:mm:ss.SSS}</green> | "
    "<level>{level: <8}</level> | "
    "<cyan>{name}</cyan>:<cyan>{function}</cyan>:<cyan>{line}</cyan> | "
    "User ID: {extra[user_id]} - <level>{message}</level>"
)

logger.configure(extra={"user_id": ""})  # Default value
logger.remove()
logger.add(sys.stderr, format=logger_format)
```

It is now possible to use the bind method to create a child logger that inherits all the data from the parent logger.

```python
childLogger = logger.bind(user_id="001")

childLogger.info("Here a message from the child logger")
logger.info("Here a message from the parent logger")
```

Another way to do this is to use the contextualize method in a with block.

```python
with logger.contextualize(user_id="001"):
    logger.info("Here a message from the logger with user_id 001")

logger.info("Here a message from the logger without user_id")
```

Instead of the with block, you can use a decorator. The preceding code then becomes:

```python
@logger.contextualize(user_id="001")
def child_logger():
    logger.info("Here a message from the logger with user_id 001")


child_logger()
logger.info("Here a message from the logger without user_id")
```

The catch method

Errors can be automatically logged when they occur using the catch method.

```python
def test(x):
    50 / x


with logger.catch():
    test(0)
```

But it’s simpler to use this method as a decorator, which results in the following code:

```python
@logger.catch()
def test(x):
    50 / x


test(0)
```

The log file

A production application is designed to run continuously, without interruption. It is therefore important to control how the log file evolves over time. Otherwise, you will have to wade through pages of logs when an error occurs.

Here are the parameters of the add method that control how a log file is handled:

- rotation: specifies a condition under which the current log file is closed and a new file is created. This condition can be an int (a size in bytes), a datetime.time, a datetime.timedelta or a str such as "500 MB" or "1 week". A str is recommended as it is easier to read.

- retention: specifies how long each log file should be kept before it is deleted from the file system.

- compression: when the log file is closed (for example at rotation), it is converted to the specified compression format, such as "zip".

- delay: If this option is set to True, the creation of a new log file is delayed until the first log message has been pushed.

- mode, buffering, encoding: parameters that are passed to Python's built-in open function and determine how log files are opened.

Note:

Usually, in the case of a production application, a log collector will be set up to retrieve the app’s outputs directly. It is therefore not necessary to create a log file.

Conclusion

Error handling in Python is an important step in writing professional and reliable code. By combining try-except blocks, the raise statement, and the finally block, you can handle errors predictably while maintaining readable and maintainable code.

Moreover, a good logging system improves the ability to monitor and debug your application. Loguru provides a simple and flexible package for logging messages and can therefore be easily integrated into your codebase.

In summary, combining effective error handling with a comprehensive logging system can significantly improve the reliability, maintainability, and debugging capability of your Python applications.

