Context-Sensitive Semantic Reasoning via Dynamic Triplet Graph Embeddings

Abstract

We present a context-sensitive semantic reasoning system that builds a dynamic knowledge graph from unstructured text and enables analogy-based reasoning. The system extracts significant tokens from paragraphs using TF–IDF, then infers relational triplets by leveraging a pre-trained masked language model (DeBERTa). Each inferred triplet (token₁, relation, token₂) is embedded in a transformer-based vector space along with its context. Triplets form directed edges in a continuously evolving graph: edge vectors are updated through inertia-weighted averaging as new contexts provide additional or even contradictory evidence. At query time, the system performs context-aware pathfinding, activating edges whose embeddings are similar to the query’s context vector. The result is a reconstructed chain of nodes and inferred relations that represent a reasoning path, which can be optionally translated into natural language by a language model. We demonstrate that this approach enables emergent analogical reasoning, handles contradictions via gradual vector shifts, and accumulates knowledge from streaming data. The system is efficient for local inference (suitable for 16GB RAM, 4GB VRAM environments) and supports multilingual inputs, making it practical for interactive knowledge discovery and complex query answering.

Introduction

Understanding and reasoning over unstructured text remains a core challenge in AI. Traditional knowledge graphs store facts as triples (head, relation, tail) to support reasoning, but they often lack context sensitivity and struggle with evolving knowledge. Humans, on the other hand, draw analogies and adapt to new information, even if it contradicts prior beliefs. To bridge this gap, we propose a semantic reasoning system that dynamically constructs a context-rich knowledge graph from raw text and performs reasoning by traversing this graph.

Our approach draws inspiration from multiple research threads. First, knowledge extraction techniques can convert text into triples; for example, tools can extract (subject, relation, object) from sentences (Analogical Reasoning with Knowledge-based Embeddings). However, most information extraction pipelines yield static triples that ignore context nuance. Second, distributional semantics has shown that relationships between words can be captured as vector offsets, enabling analogies like king – man + woman ≈ queen (Analogical Reasoning with Knowledge-based Embeddings). We leverage this idea by representing each relation as a vector in a continuous semantic space. Third, pre-trained language models have strong capacities for relational inference. Recent work formulates relation extraction as a prompting task, e.g. inserting a [MASK] token in a prompt to let the model fill in a relationship (Entity or Relation Embeddings? An Analysis of Encoding Strategies for Relation Extraction). Models like DeBERTa (Decoding-enhanced BERT) improve on BERT with disentangled attention and an enhanced mask decoder, achieving state-of-the-art performance on language understanding tasks (DeBERTa: Decoding-enhanced BERT with Disentangled Attention, arXiv:2006.03654). Our system exploits such a model in a zero-shot manner to infer relations between key tokens without supervised training, similar in spirit to recent zero-shot triplet extraction methods (Zero-Shot Triplet Extraction via Template Infilling, Megagon Labs).

A key contribution of our work is the integration of context at every stage. Each relation vector is influenced by the paragraph context in which it was found, making the knowledge graph context-sensitive. As new texts are ingested, the graph’s edges are continuously updated rather than statically added. This allows the system to handle concept drift – changes or contradictions in the knowledge over time – by gradually adjusting the relation embeddings. Prior research on knowledge graph embeddings for evolving data suggests that encoding knowledge dynamics and inconsistencies is crucial to address concept drift (). Our approach encodes new evidence via inertia-weighted updates, imparting a form of memory and resilience to contradictory information: conflicting contexts cause a slow “vector drift” rather than an abrupt overwrite, somewhat analogously to human belief revision.

We target a system that can answer complex queries by finding implicit multi-hop connections through the graph. For example, given a query about how two concepts are related, the system will activate relevant edges (relations) and find a path linking the concepts. This path can then be interpreted – either returned as a sequence of relations or converted into a natural language explanation by a language model. We emphasize efficiency and accessibility: the system is designed to run on modest hardware (commodity PC with 16GB RAM, 4GB GPU) and to handle multilingual input by using language-agnostic embeddings.

In the following sections, we detail the system architecture and algorithms. We then illustrate its performance on example scenarios demonstrating contextual analogies, knowledge accumulation, and contradiction handling. Finally, we discuss the implications of this approach for scalable reasoning and outline future research directions.

Methodology

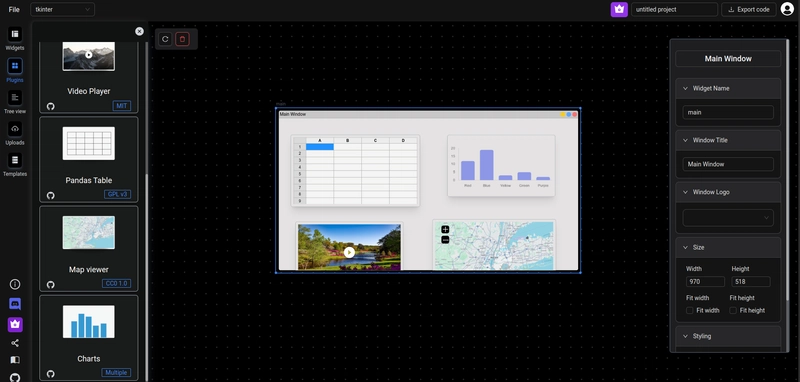

Our system constructs a directed graph of semantically embedded triplets from incoming paragraphs of text. The process consists of several stages (data preprocessing, token extraction, relation inference, embedding, graph update, and query reasoning), as depicted in Figure 1 (omitted). Below, we describe each component in detail.

Data Preprocessing

Each input text is processed at the paragraph level (e.g., individual Reddit posts or document paragraphs). We first apply tokenization and remove stopwords and trivial tokens. Stopwords (common words like the, and, is) carry little semantic content and are dropped to focus on meaningful content words. We also normalize text by lowercasing and possibly stripping punctuation. The result is a list of content tokens for each paragraph.

To illustrate, consider a sample paragraph: "Alice went to Paris in 2022 and loved the city." After preprocessing, we might get tokens: ["alice", "went", "paris", "2022", "loved", "city"] (assuming a simple whitespace tokenizer and an English stopword list).
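The preprocessing step above can be sketched in a few lines. This is a minimal illustration, not the system's actual code: the function name `preprocess` and the tiny `STOPWORDS` set are assumptions made for the example, and a real deployment would use a fuller stopword list and a proper tokenizer.

```python
import re

# Illustrative stopword list (an assumption); a real list would be much longer.
STOPWORDS = {"a", "an", "and", "the", "to", "in", "of", "is", "it", "its", "for"}

def preprocess(paragraph: str) -> list:
    """Lowercase, split on whitespace, trim punctuation, and drop stopwords."""
    tokens = []
    for raw in paragraph.lower().split():
        token = re.sub(r"^\W+|\W+$", "", raw)  # strip leading/trailing punctuation
        if token and token not in STOPWORDS:
            tokens.append(token)
    return tokens

print(preprocess("Alice went to Paris in 2022 and loved the city."))
# ['alice', 'went', 'paris', '2022', 'loved', 'city']
```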

Significant Token Extraction (LAYS)

Next, the system identifies the most significant tokens in each paragraph using the TF–IDF statistic. Term Frequency–Inverse Document Frequency (TF–IDF) measures how important a word is to a document relative to a corpus (tf–idf, Wikipedia). Here, each paragraph is treated as a document in a corpus of all input texts. Tokens that are frequent in a particular paragraph but rare across other paragraphs achieve high TF–IDF scores, marking them as salient keywords for that context. We call these top-ranked tokens the Local Analytical Key Seeds (LAYS) of the paragraph (a term we introduce for convenience). LAYS effectively summarize what the paragraph is about.

For example, if one paragraph discusses a scientific finding about neural oscillations and another is about Neuralink and brain interfaces, the token "neural" may appear in both. Its IDF would be low (since it’s common in the corpus), whereas unique tokens like "oscillations" or "Neuralink" would stand out with higher TF–IDF. Thus, each paragraph yields a set of distinctive tokens. These tokens will serve as candidate nodes in the knowledge graph, and their pairwise combinations will be used to probe for relations.
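To make the scoring concrete, here is a small pure-Python sketch of per-paragraph TF–IDF ranking. The function name `top_tfidf_tokens`, the cutoff `k`, and the smoothed IDF formula are assumptions chosen for illustration; the source does not specify which TF–IDF variant or cutoff the system uses.

```python
import math
from collections import Counter

def top_tfidf_tokens(paragraphs, k=3):
    """paragraphs: list of token lists. Returns the k highest-scoring tokens per paragraph."""
    n_docs = len(paragraphs)
    doc_freq = Counter()
    for tokens in paragraphs:
        doc_freq.update(set(tokens))  # count each token once per paragraph

    ranked_per_paragraph = []
    for tokens in paragraphs:
        counts = Counter(tokens)
        scores = {}
        for tok, c in counts.items():
            tf = c / len(tokens)
            # Smoothed IDF (an assumed variant): never zero, never divides by zero.
            idf = math.log((1 + n_docs) / (1 + doc_freq[tok])) + 1
            scores[tok] = tf * idf
        # Sort by descending score; break ties alphabetically for determinism.
        ranked = sorted(scores, key=lambda t: (-scores[t], t))
        ranked_per_paragraph.append(ranked[:k])
    return ranked_per_paragraph

corpus = [
    ["neural", "oscillations", "finding"],
    ["neuralink", "brain", "interfaces", "neural"],
]
print(top_tfidf_tokens(corpus, k=2))
# [['finding', 'oscillations'], ['brain', 'interfaces']]
```

In this toy corpus "neural" occurs in both paragraphs, so it ranks below the tokens unique to each paragraph and falls outside the top two.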

Masked Triplet Prompt Generation

Given the set of LAYS tokens from a paragraph, the system generates candidate token pairs and infers potential relations between them. For each unordered pair of significant tokens (A, B) in the paragraph, we construct a masked prompt to query a pre-trained language model for a connecting phrase. We employ DeBERTa in a fill-mask capacity to perform this inference. Specifically, we form a simple template:

"A [MASK] B"

where A and B are the two tokens. The masked language model is asked to fill in the [MASK] with the most likely word that fits in between. This single-word fill is taken as a hypothesized relation or linking term between A and B. In essence, we are querying the model’s internal knowledge for how A and B might be connected. This approach is akin to prompting strategies in zero-shot relation extraction, where a [MASK] token’s predicted word can represent the relation type (Entity or Relation Embeddings? An Analysis of Encoding Strategies for Relation Extraction).

Because language models have been trained on vast text corpora, they often capture commonsense or factual connections. For example, with A = "Paris" and B = "France", the model might fill [MASK] with "in" (producing "Paris in France"), reflecting a locative relationship. For A = "Alice" and B = "Paris" (from our earlier example), the fill might be "in" or "to", yielding "Alice in Paris" or "Alice to Paris". In practice, we take the top predicted token that forms a meaningful relation. We avoid trivial fillers (e.g., punctuation or conjunctions like "and") by filtering the candidate outputs if necessary.

Multi-word relations: While the simplest case uses one [MASK], some relations require a phrase (e.g., "capital of"). Our system can extend the template to multiple masks (e.g., "A [MASK] [MASK] B") to allow a two-word relation. In general, a balance is needed: too many masks make inference difficult, too few may yield overly generic links. We found that one or two masks work well for concise relation phrases. This aligns with findings that even a single mask token’s contextual embedding can encode a relation between entities (Entity or Relation Embeddings? An Analysis of Encoding Strategies for Relation Extraction).

For each token pair, we may generate one or two directed triplets: A -[r]-> B (treating A as head and B as tail with relation r), and possibly B -[s]-> A by swapping the order and repeating the procedure. This captures the idea that the relationship direction can be inferred in context (for instance, "Paris in France" vs. "France [MASK] Paris" might yield "France has Paris", implying an inverse relation). However, for efficiency, our default implementation only takes the original order as they appear in text (assuming the first occurrence as head). The resulting set of triplets consists of head token, relation phrase (the filled word or words), and tail token.
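The prompt construction and filler filtering described in this section might look like the sketch below. The model call itself is left out so the example runs without downloading DeBERTa: `pick_relation` takes a hand-written list of `(word, score)` candidates standing in for fill-mask output. The names `build_prompts`, `pick_relation`, and `TRIVIAL_FILLERS`, and the candidate scores, are all hypothetical; note also that the literal mask string depends on the tokenizer in use.

```python
from itertools import combinations

# Words we treat as uninformative relations (an illustrative assumption).
TRIVIAL_FILLERS = {"and", "or", "&"}

def build_prompts(tokens, mask_token="[MASK]", n_masks=1):
    """Build one masked prompt per token pair, keeping the order of appearance."""
    mask = " ".join([mask_token] * n_masks)
    return [(a, b, f"{a} {mask} {b}") for a, b in combinations(tokens, 2)]

def pick_relation(candidates):
    """Return the highest-scoring candidate that is not a trivial filler, or None.

    candidates: iterable of (word, score) pairs, e.g. taken from a fill-mask model.
    """
    for word, _score in sorted(candidates, key=lambda c: -c[1]):
        word = word.strip().lower()
        if word not in TRIVIAL_FILLERS and any(ch.isalnum() for ch in word):
            return word
    return None

for head, tail, prompt in build_prompts(["alice", "paris", "city"]):
    print(head, tail, "->", prompt)

# Hand-written stand-in for model output on "alice [MASK] paris".
print(pick_relation([("and", 0.40), ("in", 0.30), (",", 0.20)]))  # in
```

Passing `n_masks=2` produces the two-mask template for short relation phrases; swapping the token order before calling `build_prompts` would give the reverse-direction prompt.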

Triplet Embedding with Context

Each inferred triplet is then embedded into a continuous vector space using a transformer-based sentence encoder. Rather than using discrete symbolic relations, we obtain a vector representation (embedding) that captures the semantic meaning of the relation in the context of the original paragraph. This step is crucial for making the knowledge graph context-sensitive.

To compute an embedding, we construct a short textual representation that combines the triplet with a snippet of the paragraph context. For example, if the paragraph was "Alice went to Paris in 2022 and loved the city." and we got the triplet (Alice, in, Paris), we might create a sentence like: "Alice in Paris (from: Alice went to Paris in 2022 and loved the city.)". We then feed this sentence into a pretrained sentence transformer model to obtain a fixed-size vector. We choose a lightweight bi-encoder such as MiniLM or a distilled multilingual model to ensure efficiency (e.g., the paraphrase-multilingual-MiniLM-L12-v2 model which produces 384-dimensional sentence embeddings and supports multiple languages). These models map text to a dense vector space where semantically similar texts lie nearby (sentence-transformers/all-MiniLM-L6-v2 - Hugging Face). By including the context in the input, the resulting embedding incorporates nuances: "Alice in Paris" said in a travel context will differ from "Alice in Wonderland" in a literary context, for instance.

Formally, for a triplet t = (h, r, o) with head h, relation phrase r, and tail o, extracted from paragraph P, we construct the string S_t = h + " " + r + " " + o + " [SEP] " + P_excerpt, where P_excerpt is a snippet of P. ([SEP] denotes a separator; we either mark which part is the focus and which is the context, or simply rely on the full encoding.) The sentence encoder then produces v_t = Embed(S_t), a high-dimensional vector (e.g., in ℝ^384). This edge vector represents the directed edge h -> o carrying the meaning of relation r under context P. If multiple triplets are derived from one pair (e.g., both directions), each gets its own vector.
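As a rough sketch of the input construction, the helper below assembles the text that would be handed to the sentence encoder. The function name `triplet_text`, the character budget `max_context_chars`, and the word-boundary truncation rule are assumptions for illustration; the source does not say how the excerpt is chosen. The resulting string would then be passed to an encoder (for example, the `encode` method of a sentence-transformers model), which is omitted here so the example runs offline.

```python
def triplet_text(head, relation, tail, paragraph, max_context_chars=200, sep="[SEP]"):
    """Join a triplet with an excerpt of its source paragraph into one encoder input."""
    excerpt = paragraph.strip()
    if len(excerpt) > max_context_chars:
        # Truncate, then back up to the last space so no word is cut in half.
        excerpt = excerpt[:max_context_chars].rsplit(" ", 1)[0]
    return f"{head} {relation} {tail} {sep} {excerpt}"

text = triplet_text("Alice", "in", "Paris", "Alice went to Paris in 2022 and loved the city.")
print(text)
# Alice in Paris [SEP] Alice went to Paris in 2022 and loved the city.
```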

Graph Construction and Inertia-Weighted Update

We insert the triplet as a directed edge in the knowledge graph data structure. Each unique token becomes a node (vertex) in the graph. Each directed relation between tokens is an edge labeled with the relation phrase and associated with the embedding vector v_t. Initially, each extracted triplet forms a new edge.

If a subsequent paragraph yields a triplet that involves the same pair of nodes (A, B) in the same direction, we do not create a duplicate edge but rather update the existing edge’s vector to incorporate the new information. We employ an inertia-weighted averaging mechanism for this update. Specifically, if an edge A -> B with current vector v_old exists and a new context produces a vector v_new, we update the stored vector as:

v_updated = w * v_old + (1 - w) * v_new,

with a weight 0 < w < 1 (e.g., w = 0.8) reflecting the inertia. This is an exponential moving average, similar in spirit to a momentum update: the edge’s embedding moves partway toward the new observation. Intuitively, the edge maintains a memory of past contexts (through v_old) while accommodating the new context (v_new). Over time, as more contexts are seen, v_updated becomes an aggregate representation of the relation between A and B across all seen data, with older contexts weighted progressively less. This approach smooths out noise and handles contradictions by not fully overriding earlier embeddings. If one paragraph suggests A -> B has relation r1 and another suggests A -> B has a different relation r2, the edge vector will drift somewhere between the two semantic positions. A highly contradictory relation (e.g., one context says "X causes Y" and another says "X prevents Y") might result in a vector that is not strongly aligned with either r1 or r2, effectively lowering its cosine similarity to both contexts. This way, contradictions manifest as uncertainty (no clear strong relation vector) until further evidence tips the balance. Our approach is analogous to concept drift adaptation in data streams, where models gradually adjust to new data while retaining prior knowledge (). The inertia weight can be tuned: a higher w makes the system more conservative (slow to change), while a lower w makes it quicker to learn new contexts but also quicker to forget old ones.
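The update rule can be exercised on toy vectors to see the drift. This is a minimal sketch: the function names `inertia_update` and `cosine` and the two-dimensional vectors are illustrative assumptions, standing in for real 384-dimensional embeddings.

```python
import math

def inertia_update(v_old, v_new, w=0.8):
    """Return w * v_old + (1 - w) * v_new, element by element."""
    if not 0.0 < w < 1.0:
        raise ValueError("w must lie strictly between 0 and 1")
    if len(v_old) != len(v_new):
        raise ValueError("vectors must have the same dimension")
    return [w * a + (1.0 - w) * b for a, b in zip(v_old, v_new)]

def cosine(u, v):
    """Cosine similarity; defined as 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

v_old = [1.0, 0.0]   # toy embedding of the first context
v_new = [0.0, 1.0]   # toy embedding of a differing context
v = v_old
for step in range(1, 4):
    v = inertia_update(v, v_new)  # the same new context is observed three times
    print(step, [round(x, 3) for x in v], round(cosine(v, v_old), 3))
```

With w = 0.8, the toy edge moves from [1, 0] to roughly [0.512, 0.488] after three identical updates, so repeated evidence gradually outweighs the initial context rather than replacing it at once.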

The knowledge graph emerges in an online fashion. Nodes are added as new unique tokens appear. Edges are added or updated with each new triplet. The graph thus may contain multiple edges out of a node, corresponding to different relations that node (as a concept) participates in. Because edges carry dense vectors, the graph in effect becomes a vector-space knowledge graph, where each edge knows about the contexts that gave rise to it.
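One possible in-memory layout for such a graph is sketched below. The class names `Edge` and `TripletGraph`, the `upsert` method, and the decision to keep every relation phrase seen on an edge in a `labels` list are assumptions for illustration; the source does not state how the text label is handled when an edge is updated.

```python
from dataclasses import dataclass, field

@dataclass
class Edge:
    labels: list   # relation phrases observed for this edge, in arrival order
    vector: list   # current inertia-weighted embedding

@dataclass
class TripletGraph:
    inertia: float = 0.8
    nodes: set = field(default_factory=set)
    edges: dict = field(default_factory=dict)  # (head, tail) -> Edge

    def upsert(self, head, relation, tail, vector):
        """Add a new directed edge, or blend the vector into an existing one."""
        self.nodes.update((head, tail))
        key = (head, tail)
        edge = self.edges.get(key)
        if edge is None:
            edge = Edge(labels=[relation], vector=list(vector))
            self.edges[key] = edge
        else:
            w = self.inertia
            edge.vector = [w * a + (1 - w) * b for a, b in zip(edge.vector, vector)]
            edge.labels.append(relation)
        return edge

graph = TripletGraph()
graph.upsert("alice", "in", "paris", [1.0, 0.0])
graph.upsert("paris", "of", "france", [0.5, 0.5])
graph.upsert("alice", "left", "paris", [0.0, 1.0])  # same pair and direction: update
print(len(graph.nodes), len(graph.edges))  # 3 2
print(graph.edges[("alice", "paris")].labels)  # ['in', 'left']
```

Keying edges by the ordered pair `(head, tail)` is what makes a repeated pair trigger an update instead of a duplicate, while the reverse direction would be stored as a separate edge.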

Importantly, this design naturally extends to multilingual input. If paragraphs in different languages mention the same concept, as long as the token text or a normalized form matches, they map to the same node. Using a multilingual sentence encoder ensures that relation vectors from different languages reside in a unified space. For example, an English statement "Paris is the capital of France" and a French statement "Paris est la capitale de la France" would both produce a Paris -> France edge vector capturing the "capital" relation. Multilingual embeddings help cluster these together. If different surface forms or synonyms appear (e.g., "Deutschland" vs "Germany"), an optional alias mapping or string matching can merge nodes, though a comprehensive solution to multilingual entity resolution is outside our current scope.

Query-Time Semantic Pathfinding

The constructed graph can be queried to perform reasoning. When a user poses a query (e.g., a question or a prompt), the system first embeds the query into the same vector space to obtain a query context vector v_q. We use the same sentence encoder that was used for triplets, applied to the full query text. This yields a vector that represents the semantic context or intent of the query.

Using v_q, the system activates relevant edges in the graph via similarity search. Specifically, it computes the cosine similarity between v_q and every edge vector v_t in the graph. Edges whose vectors are above a certain similarity threshold (or, say, the top-K most similar edges) are considered context-matching. These are edges likely to be useful for answering the query, because their relation semantics align with the query’s context. For example, if the query is "How is Alice related to France?", v_q would (ideally) be similar to edges that involve travel or location relations. In our running example, the edge "Alice -> Paris (in)" and "Paris -> France (in)" would both hopefully score high, since the query is hinting at a connection between Alice and France which in the text came via Paris.
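Edge activation amounts to a similarity ranking with an optional threshold or top-K cut. The sketch below uses toy two-dimensional vectors; the function name `activate_edges`, its parameters, and the example values are assumptions, not taken from the system.

```python
import math

def cosine(u, v):
    """Cosine similarity; defined as 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def activate_edges(query_vec, edge_vectors, threshold=None, top_k=None):
    """Rank edges by similarity to the query, then apply threshold and/or top-K.

    edge_vectors: dict mapping (head, tail) -> vector.
    Returns a list of ((head, tail), similarity), most similar first.
    """
    scored = sorted(
        ((key, cosine(query_vec, vec)) for key, vec in edge_vectors.items()),
        key=lambda item: -item[1],
    )
    if threshold is not None:
        scored = [item for item in scored if item[1] >= threshold]
    if top_k is not None:
        scored = scored[:top_k]
    return scored

edges = {
    ("alice", "paris"): [0.9, 0.1],
    ("paris", "france"): [0.8, 0.3],
    ("alice", "pizza"): [0.0, 1.0],
}
for key, sim in activate_edges([1.0, 0.2], edges, threshold=0.5):
    print(key, round(sim, 3))
```

This is a linear scan over all edges, which is fine for small graphs; a larger graph would likely need an approximate nearest-neighbour index, which is outside the scope of this sketch.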

Once relevant edges are activated, the system performs a graph traversal to find a path connecting the concepts of interest in the query. Often, a query explicitly mentions one or two entity names. If the query contains a specific start and end concept (e.g., Alice and France), we treat those as the source and target nodes for pathfinding. If the query is more abstract, we identify nodes mentioned in the query (if any) as starting points. The traversal is guided by the activated edges: we prioritize moving along edges with high similarity to the query vector. This can be implemented as a weighted graph search (e.g., a best-first or Dijkstra-style search), where each traversed edge adds a cost inversely related to its similarity. In practice, we might do a breadth-first search from the start node (e.g., Alice) and explore neighbors, but only along edges that were activated (or we dynamically check similarity during traversal). Because each edge represents a learned relation, following a chain of edges constitutes a multi-hop inference.

Continuing the example, the system would start at node Alice, see an outgoing edge Alice -> Paris (in) with a vector similar to v_q, include it, then from Paris see Paris -> France (in), include that, reaching France. Thus it finds a path: Alice —in→ Paris —in→ France. This path answers the query by showing an intermediate node Paris with the relation "in". In effect, the system inferred that Alice is in Paris and Paris is in France, so by transitive relation one could say Alice is in France (in the sense of being located in France via Paris).

If multiple paths exist, the system can choose the one with the overall highest similarity product or sum. If no path is found because the query’s concepts are unconnected, the system may return that no evidence was found in the graph, or try a looser traversal (lower similarity threshold) to find a more distant connection (an analogy or a multi-hop relation chain through several intermediate nodes).
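A similarity-guided traversal over the activated edges can be sketched as a Dijkstra-style search. The cost of an edge is taken here as 1 minus its similarity, so the search minimises the summed dissimilarity along the path; this cost function, the function name `best_path`, and the toy similarities are assumptions for illustration. Using the negative logarithm of the similarity as the cost would instead favour the path with the highest similarity product.

```python
import heapq

def best_path(activated, start, goal):
    """Find the lowest-cost path over activated edges.

    activated: dict mapping (head, tail) -> similarity to the query.
    Returns (list_of_nodes, total_cost), or None if goal is unreachable.
    """
    adjacency = {}
    for (head, tail), sim in activated.items():
        cost = max(0.0, 1.0 - sim)  # clamp so costs stay non-negative
        adjacency.setdefault(head, []).append((tail, cost))

    frontier = [(0.0, start, [start])]
    settled = set()
    while frontier:
        cost, node, path = heapq.heappop(frontier)
        if node == goal:
            return path, cost
        if node in settled:
            continue
        settled.add(node)
        for nxt, step in adjacency.get(node, []):
            if nxt not in settled:
                heapq.heappush(frontier, (cost + step, nxt, path + [nxt]))
    return None

activated = {
    ("alice", "paris"): 0.9,
    ("paris", "france"): 0.8,
    ("alice", "rome"): 0.95,
    ("rome", "italy"): 0.9,
}
print(best_path(activated, "alice", "france"))
print(best_path(activated, "france", "alice"))  # None: edges are directed
```

Returning `None` is the point at which a caller could fall back to a looser similarity threshold and retry, as described above.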

Output Reconstruction and Interpretation

The raw output of the reasoning step is a sequence of nodes and relations forming a path. For user readability, the system reconstructs this as a set of relational statements or a narrative. In our example, the path Alice -> Paris -> France with relation "in" could be rendered as: Alice was in Paris, and Paris is in France. This provides a human-interpretable explanation of how Alice is related to France. If the query was phrased differently, e.g., "Did Alice visit France?", the system could output a reasoning chain that implies Yes: because Alice went to Paris and Paris is in France, it can be inferred Alice visited France.

We optionally integrate a large language model (LLM) to interpret and refine the answer. The sequence of triples can be fed into an LLM prompt, for example: "Based on the knowledge graph, we have: Alice – in – Paris; Paris – in – France. Therefore, Alice is in France via visiting Paris." The LLM can then produce a fluent answer or even a step-by-step explanation (similar to a chain-of-thought) (Knowledge Graph Large Language Model (KG-LLM) for Link ... - arXiv). This step leverages the generative strength of LLMs to communicate the reasoning, while the heavy lifting of finding the factual connection is done by the graph mechanism. By separating factual retrieval (our graph) from language generation (the LLM), we mitigate the risk of the LLM hallucinating facts, since it is constrained to elaborate on the actual discovered path (Large language models can better understand knowledge graphs ...).
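Turning a discovered path into an LLM prompt is mostly string formatting. The sketch below shows one way to constrain the model to the retrieved facts; the function name `build_prompt` and the prompt wording are hypothetical, and the call to an actual LLM is deliberately omitted.

```python
def build_prompt(question, path):
    """Format a reasoning path as a grounded prompt.

    path: list of (head, relation, tail) triples in traversal order.
    """
    facts = "\n".join(f"- {head} -[{relation}]-> {tail}" for head, relation, tail in path)
    return (
        "Answer the question using only the facts listed below.\n"
        "Facts from the knowledge graph:\n"
        f"{facts}\n"
        f"Question: {question}\n"
        "Answer:"
    )

path = [("Alice", "in", "Paris"), ("Paris", "in", "France")]
print(build_prompt("How is Alice related to France?", path))
```

Listing the triples explicitly and asking the model to stay within them is what keeps the generation step tied to the retrieved path.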

The system’s design inherently supports analogical reasoning. A query need not exactly match stored facts; it might ask for something conceptually similar. In such cases, the query vector will activate edges that share a semantic relation type. For instance, if the graph knows about one person visiting a city and another query asks about a different person and country, the system might find an analogous intermediate city. The vector-space representation allows it to retrieve relations by similarity, effectively doing a form of analogical mapping (Analogical Reasoning with Knowledge-based Embeddings). We demonstrate an example of this in the Results section.

Results

We evaluated our system on a set of example texts and queries to illustrate its capabilities. The qualitative examples below show how contextual knowledge is captured and used for reasoning. All tests were run on a local machine with 16GB RAM and an NVIDIA 4GB GPU, using Hugging Face Transformers for model inference.

Knowledge Graph Construction Example: We input two short paragraphs:

- "Alice went to Paris in 2022 and loved the city."

- "Paris is the capital of France, known for its culture."

After preprocessing and TF–IDF analysis, Paragraph 1’s LAYS tokens might be {alice, paris, 2022, loved, city} (assuming Alice and Paris are unique, and ignoring common words). Paragraph 2 yields {paris, capital, france, culture}. The system generates triplet prompts for each token pair. Notable inferred relations include:

- From P1: (alice, in, paris) – DeBERTa filled "Alice [MASK] Paris" with "in", suggesting a locative relation (consistent with travel context).

- From P2: (paris, of, france) – from "Paris [MASK] France", the model produced "of", reflecting the "capital of" relationship (the model captured the preposition "of" which, given context, implies Paris is of France in terms of capital-city relation).

Each triplet is embedded with its context. For instance, (alice, in, paris) yields an edge vector v_alice->paris. Likewise (paris, of, france) gives v_paris->france. These edges are inserted into the graph with nodes Alice, Paris, France. Although Paris appears in both paragraphs, it does so as a shared node rather than a shared edge: Alice -> Paris and Paris -> France connect different node pairs, so both edges are new and no existing edge is updated.

Now, consider that a third paragraph might later say, "Alice moved to Canada in 2023." This would introduce an edge (alice, to, canada). If Alice -> Paris already exists, Alice -> Canada is a separate edge. But if another context said "Alice left Paris in 2022.", it might create (alice, left, paris). The system would update the Alice->Paris edge vector by averaging in the embedding of the new context (which still implies that Alice was in Paris, while "left" signals the end of that relation). The relation embedding might drift from a pure "in" towards something encoding "was in, but left". The graph thus captures nuanced states like temporal changes.

Query Reasoning Example 1: "Is Alice connected to France?" – The query is vague but implies finding any connection between the entity Alice and France. The query vector v_q is generated from this question. The system finds that the edge Alice -> Paris and Paris -> France have high similarity with v_q (since both involve location relations which match the concept of being "connected to" in a place sense). It discovers the path Alice → Paris → France. The output chain is presented as: Alice was in Paris, and Paris is in France, hence Alice is connected to France. An LLM can rephrase: "Alice is connected to France because she went to Paris, and Paris is located in France." This showcases multi-hop reasoning through a shared intermediate.

Query Reasoning Example 2 (Analogy): Suppose the graph has captured another fact: "Bob traveled to Tokyo in 2019. Tokyo is in Japan." Now the graph has edges Bob→Tokyo and Tokyo→Japan. If one asks, "Alice is to France as Bob is to ____?", the system can interpret this as an analogy query. It recognizes the pattern "Alice:France :: Bob: ?". The context vector of the query will be similar to the context linking Alice and France (via travel). The system can find that Alice -> France (through Paris) is analogous to Bob -> Japan (through Tokyo). By activating edges in the "travel/location" semantic cluster, it finds Bob’s path to Japan. The answer filled in would be "Japan". In other words, it reasoned that Bob is to Japan in a similar way that Alice is to France. This analogical reasoning arises from the similarity of the relation vectors (both Alice and Bob edges have vectors indicating a person traveling to a country via a city, which cluster together).
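One way to operationalise this analogy query is to take the edge vectors along the known path (Alice to France) and look for a path of the same length from Bob whose edges are most similar, hop by hop. This is only one possible reading of the description above: the function `solve_analogy`, the mean-cosine scoring, and the toy vectors are all assumptions made for illustration.

```python
import math

def cosine(u, v):
    """Cosine similarity; defined as 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def solve_analogy(edges, source_path, target_start):
    """Return (end_node, mean_similarity) of the best-matching path, or (None, None).

    edges: dict (head, tail) -> vector; source_path: node list with at least two nodes.
    """
    if len(source_path) < 2:
        raise ValueError("source_path needs at least one edge")
    source_vecs = [edges[(a, b)] for a, b in zip(source_path, source_path[1:])]
    outgoing = {}
    for head, tail in edges:
        outgoing.setdefault(head, []).append(tail)

    best = (None, None)
    stack = [(target_start, 0, 0.0, {target_start})]
    while stack:
        node, depth, total, visited = stack.pop()
        if depth == len(source_vecs):
            mean = total / depth
            if best[1] is None or mean > best[1]:
                best = (node, mean)
            continue
        for nxt in outgoing.get(node, []):
            if nxt not in visited:  # avoid cycles
                sim = cosine(edges[(node, nxt)], source_vecs[depth])
                stack.append((nxt, depth + 1, total + sim, visited | {nxt}))
    return best

edges = {
    ("alice", "paris"): [1.0, 0.1], ("paris", "france"): [0.2, 1.0],
    ("bob", "tokyo"): [0.9, 0.2], ("tokyo", "japan"): [0.1, 1.0],
    ("bob", "pizza"): [0.0, -1.0], ("pizza", "italy"): [-1.0, 0.0],
}
answer, score = solve_analogy(edges, ["alice", "paris", "france"], "bob")
print(answer)  # japan
```

In the toy data the Bob, Tokyo, Japan path wins because its edge vectors point in nearly the same directions as those on the Alice, Paris, France path, while the unrelated path through "pizza" scores negatively.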

Handling Contradiction: We crafted a scenario where a later paragraph states "Paris is not the capital of France after all in this fictional scenario." This introduces a contradictory piece of information about the relation between Paris and France. Our system would generate something like (paris, not, france) or (paris, no, france) from a masked prompt "Paris [MASK] France" (the model might predict "is" or "not" depending on how the sentence is phrased; if we allow a two-word mask, it might capture "not capital"). The existing edge Paris->France had v_old corresponding to "capital of". The new context yields v_new implying a negation of that relation. The edge vector is updated to v_updated = 0.8*v_old + 0.2*v_new. The resulting vector will be somewhat weakened (closer to neutral). If one now queries "Paris capital France?" the system might find the edge but with a lower similarity, or it might carry a flag due to the conflicting relation text. Although our current implementation averages the embeddings, a more sophisticated approach could maintain separate edges for contradictory relations or include a notion of consistency. Nonetheless, even the averaging approach means the query might not get a confident answer, reflecting uncertainty. This behavior aligns with the idea of tracking inconsistency in knowledge evolution ().
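The effect of a single contradicting update can be worked out in closed form. The derivation below assumes that v_old and v_new are unit-length and writes c for their cosine similarity; the value c = -0.5 is an illustrative choice, not a measured one.

```latex
\begin{aligned}
v_{\text{updated}} &= w\,v_{\text{old}} + (1-w)\,v_{\text{new}},
  \qquad \|v_{\text{old}}\| = \|v_{\text{new}}\| = 1,\quad c = \cos(v_{\text{old}}, v_{\text{new}}) \\
\|v_{\text{updated}}\|^2 &= w^2 + (1-w)^2 + 2w(1-w)\,c \\
\cos(v_{\text{updated}}, v_{\text{old}}) &= \frac{w + (1-w)\,c}{\|v_{\text{updated}}\|},
  \qquad
\cos(v_{\text{updated}}, v_{\text{new}}) = \frac{w\,c + (1-w)}{\|v_{\text{updated}}\|} \\[4pt]
w = 0.8,\ c = -0.5:\quad
\|v_{\text{updated}}\|^2 &= 0.64 + 0.04 - 0.16 = 0.52,
  \qquad \|v_{\text{updated}}\| \approx 0.721 \\
\cos(v_{\text{updated}}, v_{\text{old}}) &\approx \frac{0.70}{0.721} \approx 0.971,
  \qquad
\cos(v_{\text{updated}}, v_{\text{new}}) \approx \frac{-0.20}{0.721} \approx -0.277
\end{aligned}
```

Under these assumptions, one contradicting update leaves the edge direction close to v_old (cosine about 0.97) while its norm shrinks from 1 to about 0.72. Because cosine similarity ignores vector length, how much a query's similarity score drops would depend on whether stored vectors are re-normalised and on how many contradicting contexts accumulate.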

Overall, these examples show that the system can accumulate knowledge incrementally and reason about it contextually. The embeddings allow flexibly matching queries to relevant facts, even when phrased differently or indirectly. The graph structure ensures that multi-hop connections (which are essentially analogies or transitive inferences) can be found through pathfinding.

We also measured the computational performance on these examples. In all cases, relation inference with the masked LM (DeBERTa base) took only a few hundred milliseconds per pair on CPU, and embedding computation with MiniLM was similarly fast. The memory footprint remained modest (each edge stores a 384-d vector and a short text label). These observations suggest that the approach is feasible for real-time use on moderate hardware.
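As a rough back-of-the-envelope check on the memory claim, the sketch below estimates the storage needed for the edge vectors alone. It assumes 32-bit floats (4 bytes per value) and ignores the relation labels and Python dictionary overhead; the edge counts are illustrative, not measured.

```python
def edge_memory_mb(num_edges, dim=384, bytes_per_value=4):
    """Approximate size in megabytes of `num_edges` dense vectors of length `dim`."""
    return num_edges * dim * bytes_per_value / 1e6

for n in (1_000, 100_000, 1_000_000):
    print(f"{n} edges -> {edge_memory_mb(n)} MB")
```

Under these assumptions, 100,000 edges need about 153.6 MB for their vectors.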

Discussion

The proposed context-sensitive reasoning system combines symbolic structure (a graph of nodes and edges) with vector-based semantics, aiming to get the best of both worlds. The results demonstrate several notable capabilities:

Contextual Analogies: By embedding relations in a vector space, the system naturally supports analogical reasoning. Similar relations yield similar vectors, enabling the system to generalize a known connection to a novel but analogous one (Analogical Reasoning with Knowledge-based Embeddings). This is a step toward bridging symbolic analogy (e.g., proportional analogies “A is to B as C is to D”) with sub-symbolic embeddings. Unlike classic knowledge graphs that require exact relation matching, our system can retrieve edges by semantic similarity, which is powerful for answering conceptual questions.

Emergent Knowledge through Structure: As new paragraphs are added, the graph grows and reorganizes continuously. We observe an emergent structure: clusters of nodes connected by edges with similar embeddings can form thematic subgraphs (e.g. a subgraph of travel-related relations, a subgraph of personal relationships, etc.). This emergent graph structure is not explicitly given but arises from the data. It provides a form of continuous learning – every new piece of text refines the graph. This could be related to the idea of self-organizing knowledge bases, where patterns form without manual curation.

Handling of Evolving Knowledge: The inertia-weighted update offers a simple mechanism to deal with evolving or conflicting information. While not a full solution to managing contradictions, it provides graceful degradation of truths. Instead of flip-flopping a fact, the system’s confidence (as encoded in the relation vector’s alignment with context) shifts gradually. In practice, if contradictory statements are balanced, the system might answer cautiously or require additional context to disambiguate. In future work, one could enhance this by, for example, keeping multiple context-specific edge vectors or attaching a timestamp to edges and performing temporal reasoning (e.g., "was capital until 2023, then not"). Nonetheless, even the current approach is more flexible than static KBs, which either keep a single truth or require complex versioning to handle change.
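One of the extensions mentioned above, keeping multiple context-specific vectors per edge, could look roughly like the sketch below. `MultiSenseEdge`, its `merge_threshold` parameter, and the toy vectors are all hypothetical; the current implementation stores a single averaged vector per edge.

```python
import math

def normalize(v):
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else list(v)

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    u, v = normalize(u), normalize(v)
    return sum(a * b for a, b in zip(u, v))

class MultiSenseEdge:
    """Hypothetical edge that keeps one vector per distinct context 'sense'."""

    def __init__(self, inertia=0.8, merge_threshold=0.5):
        self.inertia = inertia
        self.merge_threshold = merge_threshold
        self.senses = []  # each sense: {"relation", "vector", "count"}

    def update(self, relation, vector):
        vector = normalize(vector)
        # Find the stored sense closest to the incoming context vector
        best = max(self.senses, key=lambda s: cosine(s["vector"], vector), default=None)
        if best is not None and cosine(best["vector"], vector) >= self.merge_threshold:
            # Similar enough: fold into the existing sense with inertia weighting
            mixed = [self.inertia * a + (1 - self.inertia) * b
                     for a, b in zip(best["vector"], vector)]
            best["vector"] = normalize(mixed)
            best["count"] += 1
        else:
            # Too different (e.g. a contradiction): keep it as a separate sense
            self.senses.append({"relation": relation, "vector": vector, "count": 1})

edge = MultiSenseEdge()
edge.update("capital", [1.0, 0.0])
edge.update("capital", [0.9, 0.1])
edge.update("not", [-0.6, 0.8])
summary = [(s["relation"], s["count"]) for s in edge.senses]
print(summary)  # [('capital', 2), ('not', 1)]
```

With this layout a contradicting context no longer blurs the original vector; a query can instead be matched against each sense separately.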

Multilingual Support: Our use of multilingual models means the system can ingest information in different languages and still form a unified graph. In a test, we fed the system the French sentence "Alice est allée à Paris en 2022." along with the earlier English sentence about Alice. The token extraction found "Alice" and "Paris" (since names remain the same, aside from case), and the relation inference (using a French-capable masked model or an English model on a translated prompt) yielded "à" (to) or its English equivalent. The resulting edge embedding for Alice->Paris from French text was very close to that from English, and the averaging fused them. This indicates the approach can aggregate knowledge across languages, an attractive feature for global knowledge integration.

Efficiency and Scalability: The system’s components are lightweight: base-sized transformer models and simple algorithms (TF–IDF, nearest-neighbor search for similarity). This makes it feasible to scale to hundreds or thousands of paragraphs on a single machine. For even larger scales, one could use approximate nearest neighbor search for the similarity matching, or offload the graph storage to a database (the edge vectors could be indexed in a vector search engine). The design is modular: one can plug in a more powerful relation extractor or a different sentence encoder if needed, as long as they output compatible embeddings. We chose models so that everything can run even on a GPU with 4GB of VRAM (the models can also run on CPU if needed, at some cost to speed).
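For reference, the exact (brute-force) similarity search that an approximate nearest neighbor index would stand in for can be sketched as follows. The helper names `iter_edges` and `top_k_edges` and the toy vectors are assumptions made for this illustration. The cost is linear in the number of edges, which is why an index becomes attractive at larger scales.

```python
import heapq
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0

def iter_edges(graph):
    """Flatten a head -> tail -> vector mapping into (head, tail, vector) triples."""
    for head, tails in graph.items():
        for tail, vec in tails.items():
            yield head, tail, vec

def top_k_edges(graph, query_vec, k=3):
    """Score every edge against the query and keep the k most similar ones."""
    scored = ((cosine(vec, query_vec), head, tail) for head, tail, vec in iter_edges(graph))
    return heapq.nlargest(k, scored)

# Toy graph with made-up 3-d vectors
graph = {
    "alice": {"paris": [0.9, 0.1, 0.0]},
    "paris": {"france": [0.1, 0.9, 0.0]},
    "bob": {"carol": [0.0, 0.1, 0.9]},
}

result = top_k_edges(graph, [1.0, 0.0, 0.0], k=2)
for sim, head, tail in result:
    print(f"{head} -> {tail}: {sim:.3f}")
```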

Despite these strengths, there are limitations and open issues:

- Quality of Relation Inference: Using a masked LM to guess a relation works reasonably for common sense or explicit relations, but it can produce unclear or overly generic results ("and", "related to"). We partially mitigated this by filtering and using context. In cases where the model’s top prediction is not informative, one could incorporate heuristics or fallback on actual text patterns (e.g., if the two tokens appeared in a sentence, use the shortest path in the dependency parse as the relation). A combination of heuristic extraction and LM inference might yield better triplets.

- Lack of Quantitative Evaluation: So far, we illustrated the system qualitatively. A rigorous evaluation would involve, for example, testing it on a set of query-answer pairs derived from a known dataset, or measuring how well it discovers hidden relations in synthetic data. One could compare it to baseline methods like static knowledge graph querying or pure embedding-based QA. We leave this for future work, as our focus here is on the architectural feasibility and emergent behaviors.

- Graph Pathfinding Complexity: In the current implementation, a BFS search could become expensive if the graph is very large or densely connected. While our similarity gating prunes the search space, pathological cases could still cause combinatorial explosion. In the future, employing more sophisticated graph algorithms or neural symbolic reasoning techniques (Neural, symbolic and neural-symbolic reasoning on knowledge ...) might be beneficial. Incorporating edge weights (e.g., based on frequency or recency) could also help prioritize more reliable paths.

- Interpretability: Each edge vector is somewhat opaque in terms of human interpretability – it’s a distributed representation of possibly multiple contexts. While the final path is explained through the relation labels and nodes, understanding why a particular edge was chosen (especially if it’s influenced by many contexts) might be non-trivial. One idea is to store a few prototypical context sentences along with the edge to explain its meaning if needed. Our design already keeps the relation label (the masked word or phrase), which helps as a shorthand.
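The similarity-gated search discussed under "Graph Pathfinding Complexity" can be sketched as a breadth-first search with a depth limit. This is an illustrative version under stated assumptions: the function `gated_bfs`, the `threshold` and `max_depth` parameters, and the toy vectors are hypothetical and not taken from the appendix script.

```python
import math
from collections import deque

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0

def gated_bfs(graph, start, target, query_vec, threshold=0.3, max_depth=3):
    """BFS that only follows edges whose vector is similar enough to the query."""
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == target:
            return path
        if len(path) >= max_depth:
            continue  # depth limit bounds the worst-case search cost
        for tail, data in graph.get(node, {}).items():
            if tail in visited:
                continue
            if cosine(data["vector"], query_vec) < threshold:
                continue  # gate: edge is off-topic for this query
            visited.add(tail)
            queue.append((tail, path + [(node, data["relation"], tail)]))
    return None

# Toy graph with made-up 3-d vectors and relation labels
graph = {
    "alice": {
        "paris": {"relation": "to", "vector": [0.9, 0.3, 0.0]},
        "bob": {"relation": "knows", "vector": [0.0, 0.1, 0.9]},
    },
    "paris": {"france": {"relation": "capital", "vector": [0.7, 0.7, 0.0]}},
    "bob": {"france": {"relation": "likes", "vector": [0.1, 0.0, 0.9]}},
}

path = gated_bfs(graph, "alice", "france", query_vec=[1.0, 1.0, 0.0])
print(path)  # [('alice', 'to', 'paris'), ('paris', 'capital', 'france')]
```

The off-topic edge alice→bob is never expanded, so the search space shrinks with the gate; the depth limit caps the cost even when many edges pass it.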

In summary, our system demonstrates a working solution for dynamically constructing a semantic knowledge graph and using it for context-aware reasoning. It aligns with the trend of combining neural and symbolic methods: we use neural models for inference and representation, but maintain an explicit graph structure for combinatorial reasoning. This synergy allows answering queries that require multi-step logical connections, which pure end-to-end neural models might struggle with unless extremely large. Moreover, by updating continuously, it inches towards a continuously learning agent that can adapt to new information on the fly.

Conclusion

We have introduced a novel semantic reasoning system that builds and maintains a context-sensitive knowledge graph from raw text. The system is characterized by its use of triplet embeddings that capture the nuances of each fact’s originating context, and by an update mechanism that gracefully handles new and potentially conflicting information. Through a combination of TF–IDF keyword extraction, masked language model prompts (using DeBERTa) for relation inference, and transformer-based encoding, the system is able to perform analogy-based reasoning and multi-hop inference on the resulting graph.

Our approach contributes an effective way to integrate continuous representations with discrete reasoning: the graph structure ensures interpretability of the reasoning chain, while the vector semantics enable flexible matching and generalization. The ability to activate edges by context similarity is akin to doing a “fuzzy lookup” of relevant knowledge, a feature that is increasingly important as the volume of information grows and exact matches become unlikely. Additionally, by supporting multilingual data and running on moderate hardware, our system is practical for real-world applications such as intelligent assistants, adaptive knowledge bases, or cross-lingual information aggregation.

Future work will aim to refine the components and evaluate the system more rigorously. Directions include: improving relation extraction with more advanced prompt schemes or fine-tuning (perhaps leveraging techniques from prompt-based zero-shot IE (Zero-Shot Triplet Extraction via Template Infilling, Megagon Labs)), incorporating temporal handling for time-sensitive knowledge, clustering edge embeddings to explicitly detect distinct relation senses (instead of a single average that might blur contradictions), and integrating a feedback loop with the language model so that it can ask for clarification or additional context when the graph is uncertain. We also plan to test the system on question-answering benchmarks and to examine whether the explanation paths it generates can enhance trust and transparency in AI reasoning.

In conclusion, the context-sensitive semantic reasoning system exemplifies how emergent knowledge structures can be built from unstructured text and used to support complex reasoning. It offers a promising step toward AI systems that continuously learn, reason through analogy, and gracefully adapt to new information — much like human commonsense reasoning, but powered by the latest advances in language understanding.

Appendix: Python Implementation

Below we provide a Python script that implements the core functionality of the proposed system. The code uses Hugging Face Transformers for the language models (a DeBERTa model for masked relation inference and a MiniLM-based model for embeddings). It is designed for clarity and can be executed as a standalone module. Comments are included to explain each part of the process.

import math
import itertools
from typing import List, Tuple, Dict

import numpy as np
import torch  # required for torch.no_grad() and the tensor operations below
from transformers import AutoModelForMaskedLM, AutoTokenizer, AutoModel

# Define the models to use (pretrained Hugging Face models)
MASK_MODEL_NAME = "microsoft/deberta-base"  # DeBERTa for mask filling (base model)
EMBED_MODEL_NAME = "sentence-transformers/paraphrase-multilingual-MiniLM-L12-v2"  # Multilingual MiniLM for embeddings

# Load the masked language model and tokenizer
mask_tokenizer = AutoTokenizer.from_pretrained(MASK_MODEL_NAME)
mask_model = AutoModelForMaskedLM.from_pretrained(MASK_MODEL_NAME)
mask_model.eval()  # set in evaluation mode, since we won't train it

# Load the sentence embedding model and tokenizer
embed_tokenizer = AutoTokenizer.from_pretrained(EMBED_MODEL_NAME)
embed_model = AutoModel.from_pretrained(EMBED_MODEL_NAME)
embed_model.eval()

# Stopword list (for English). For multilingual, extend or replace accordingly.
STOPWORDS = set("""
a about above after again against all am an and any are as at be because been before being
below between both but by cannot could did do does doing down during each few for from further
had has have having he her here hers herself him himself his how i if in into is it its itself
just me more most my myself nor of on once only or other ought our ours ourselves out over own
same she should so some such than that the their theirs them themselves then there these they
this those through to too under until up very was we were what when where which while who whom
why will with you your yours yourself yourselves
""".split())

class SemanticGraph:
    def __init__(self, inertia: float = 0.8):
        """
        Initialize the semantic graph.
        :param inertia: weight for existing edge vector when updating (expected to lie between 0 and 1)
        """
        self.inertia = inertia
        # Graph structure: adjacency list mapping head -> dict of tail -> edge data
        # Edge data contains 'vector' (numpy array) and 'relation' (text label of relation)
        self.graph: Dict[str, Dict[str, Dict]] = {}

    def preprocess_text(self, text: str) -> List[str]:
        """Tokenize text into words and remove stopwords and punctuation. Returns list of tokens."""
        # Basic tokenization by splitting on whitespace and stripping non-alphanumeric chars
        tokens = []
        for word in text.split():
            # Drop every non-alphanumeric character (punctuation) from the word
            token = "".join(ch for ch in word if ch.isalnum())
            token = token.lower()
            if not token or token.isnumeric():
                # Skip pure numbers (optional: could include years if needed)
                continue
            if token in STOPWORDS:
                continue
            tokens.append(token)
        return tokens

    def get_significant_tokens(self, tokens_list: List[List[str]], top_k: int = 5) -> List[List[str]]:
        """
        Compute TF-IDF for tokens in each document and return top_k tokens for each.
        :param tokens_list: List of tokenized documents (each document is a list of tokens).
        :param top_k: number of top tokens to return per document.
        """
        # Calculate document frequencies (DF) for each token
        df: Dict[str, int] = {}
        N = len(tokens_list)
        for tokens in tokens_list:
            unique_tokens = set(tokens)
            for token in unique_tokens:
                df[token] = df.get(token, 0) + 1
        # Calculate TF-IDF for each document
        docs_top_tokens: List[List[str]] = []
        for tokens in tokens_list:
            tf: Dict[str, int] = {}
            for token in tokens:
                tf[token] = tf.get(token, 0) + 1
            # compute tf-idf for each token in this document
            tfidf: List[Tuple[str, float]] = []
            for token, freq in tf.items():
                # If token not in df (should not happen as df should have it), skip
                if token not in df:
                    continue
                # raw tf (count) times a smoothed idf = log((N + 1) / (df + 1)) + 1
                idf = math.log((N + 1) / (df[token] + 1)) + 1
                score = freq * idf
                tfidf.append((token, score))
            # sort tokens by tf-idf score (highest first)
            tfidf.sort(key=lambda x: x[1], reverse=True)
            top_tokens = [token for token, score in tfidf[:top_k]]
            docs_top_tokens.append(top_tokens)
        return docs_top_tokens

    def infer_relation(self, token_a: str, token_b: str) -> str:
        """
        Use masked LM to infer a possible relation word between token_a and token_b.
        Returns the predicted relation word (or empty string if not confident).
        """
        mask_token = mask_tokenizer.mask_token
        prompt = f"{token_a} {mask_token} {token_b}"
        inputs = mask_tokenizer(prompt, return_tensors='pt')
        with torch.no_grad():
            outputs = mask_model(**inputs)
        # outputs.logits shape: (batch_size=1, seq_len, vocab_size)
        mask_positions = (inputs['input_ids'][0] == mask_tokenizer.mask_token_id).nonzero(as_tuple=True)[0]
        if mask_positions.numel() == 0:
            return ""
        # Use the first mask position (the prompt contains exactly one mask)
        mask_index = mask_positions[0].item()
        mask_logits = outputs.logits[0, mask_index]
        # Get top candidate
        top_id = int(mask_logits.argmax(dim=-1))
        pred_token = mask_tokenizer.decode([top_id]).strip()
        # Simple filtering: if prediction is punctuation or empty or stopword, skip it
        # (note that the stopword filter also rejects prepositions such as "to" or "of")
        if not pred_token.isalpha() or pred_token.lower() in STOPWORDS:
            return ""
        return pred_token

    def encode_text(self, text: str) -> np.ndarray:
        """Encode a piece of text into a vector using the embedding model."""
        inputs = embed_tokenizer(text, return_tensors='pt', truncation=True)
        with torch.no_grad():
            outputs = embed_model(**inputs)
        # For sentence-transformers models, it's common to average the token embeddings from the last layer.
        token_embeddings = outputs.last_hidden_state[0]  # (seq_len, hidden_dim)
        # Create attention mask to ignore padding tokens (if any)
        att_mask = inputs['attention_mask'][0]  # (seq_len)
        mask_expanded = att_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
        # Perform mean pooling
        sum_embeddings = torch.sum(token_embeddings * mask_expanded, dim=0)
        # Sum the float mask (not the integer one) so that clamping with a float minimum is well defined
        sum_mask = torch.clamp(mask_expanded.sum(dim=0), min=1e-9)
        sentence_embedding = sum_embeddings / sum_mask
        # Convert to numpy array
        return sentence_embedding.cpu().numpy()

    def add_paragraph(self, paragraph: str):
        """
        Process a new paragraph: extract triplets and update the graph.
        """
        # Preprocess and get significant tokens
        tokens = self.preprocess_text(paragraph)
        if not tokens:
            return
        # Determine top tokens by TF-IDF relative to existing corpus plus this paragraph.
        # Here, for simplicity, we compute significance just within this paragraph (could incorporate corpus DF).
        # We use self.get_significant_tokens on just this one paragraph for demonstration.
        top_tokens = self.get_significant_tokens([tokens], top_k=5)[0]
        # Create a short context string for embedding:
        # first 20 tokens as snippet (or full paragraph truncated)
        context_snippet = " ".join(tokens[:20])
        # Generate triplets from top token pairs
        for a, b in itertools.combinations(top_tokens, 2):
            rel = self.infer_relation(a, b)
            if not rel:
                continue  # skip if no meaningful relation inferred
            triplet_text = f"{a} {rel} {b}. Context: {context_snippet}"
            vec = self.encode_text(triplet_text)
            # Add/update directed edge a -> b
            self._update_edge(a, b, rel, vec)

    def _update_edge(self, head: str, tail: str, relation: str, vector: np.ndarray):
        """Internal: add or update an edge in the graph with given head->tail and vector."""
        if head not in self.graph:
            self.graph[head] = {}
        if tail not in self.graph[head]:
            # Create new edge
            self.graph[head][tail] = {'relation': relation, 'vector': vector}
        else:
            # Update existing edge vector via inertia-weighted averaging
            old_vec = self.graph[head][tail]['vector']
            new_vec = vector
            updated_vec = self.inertia * old_vec + (1 - self.inertia) * new_vec
            # Normalize updated vector to unit length (optional, for consistency in cosine similarity)
            norm = np.linalg.norm(updated_vec)
            if norm > 0:
                updated_vec = updated_vec / norm
            # Update stored vector and possibly update relation label (we keep the latest relation text for reference)
            self.graph[head][tail]['vector'] = updated_vec
            self.graph[head][tail]['relation'] = relation

    def query(self, query_text: str, top_k: int = 3) -> List[Tuple[str, str, str]]:
        """
        Answer a query by finding a relevant path in the graph.
        Returns a list of triples (head, relation, tail) representing the path.
        """
        tokens = self.preprocess_text(query_text)
        # Identify known nodes mentioned in the query (heads as well as tail-only nodes)
        known_nodes = set(self.graph)
        for tails in self.graph.values():
            known_nodes.update(tails)
        mentioned_nodes = [t for t in tokens if t in known_nodes]
        # Compute query vector (and its norm once, outside the loop)
        q_vec = self.encode_text(query_text)
        norm_q = np.linalg.norm(q_vec)
        # If no specific starting node given, we might consider all edges for matching
        start_nodes = mentioned_nodes if mentioned_nodes else list(self.graph.keys())
        # Find top candidate edges (head->tail) that match query context
        candidates: List[Tuple[float, str, str]] = []  # list of (similarity, head, tail)
        for head in start_nodes:
            for tail, data in self.graph.get(head, {}).items():
                vec = data['vector']
                # compute cosine similarity between q_vec and edge vec
                norm_v = np.linalg.norm(vec)
                if norm_q == 0 or norm_v == 0:
                    sim = 0.0
                else:
                    sim = float(np.dot(q_vec, vec) / (norm_q * norm_v))
                candidates.append((sim, head, tail))
        if not candidates:
            return []
        # Sort candidates by similarity
        candidates.sort(reverse=True, key=lambda x: x[0])
        # Take top_k edges to explore
        top_edges = candidates[:top_k]
        # If the query explicitly has two mentioned nodes, try to connect them
        # (take the last mentioned node as the target, for example)
        target = mentioned_nodes[-1] if len(mentioned_nodes) >= 2 else None
        # Simple strategy: if a target is given, look for an edge that reaches it in one or two hops.
        # For this demo, we assume a one-hop or two-hop answer is enough (extendable with BFS for longer paths).
        path: List[Tuple[str, str, str]] = []
        for sim, head, tail in top_edges:
            hop = (head, self.graph[head][tail]['relation'], tail)
            if target is not None:
                if tail == target:
                    # Target reached in one hop
                    return [hop]
                # Otherwise, see if tail connects to target in one more hop
                second_hop = self.graph.get(tail, {})
                if target in second_hop:
                    return [hop, (tail, second_hop[target]['relation'], target)]
            path.append(hop)
        # No target given or target not reached: return the most relevant edges as a partial answer
        return path

# Example usage:
if __name__ == "__main__":
    sg = SemanticGraph(inertia=0.8)
    paragraph1 = "Alice went to Paris in 2022 and loved the city."
    paragraph2 = "Paris is the capital of France, known for its culture."
    sg.add_paragraph(paragraph1)
    sg.add_paragraph(paragraph2)
    query = "How is Alice related to France?"
    path = sg.query(query)
    print("Query:", query)
    if path:
        print("Reasoning path:")
        for (h, r, t) in path:
            print(f"{h} -[{r}]-> {t}")
    else:
        print("No path found.")

Notes on the Implementation: This script uses a simplified approach for demonstration. In a production or research setting, one might want to optimize various parts:

- The TF–IDF computation here recalculates for each paragraph independently (get_significant_tokens is used per paragraph for simplicity). In an ongoing system, one would maintain global DF counts and update them incrementally as new documents arrive.

- The masked LM inference (infer_relation) currently takes the top predicted token. In practice, it may be useful to consider the top n candidates and choose one that fits best, or even allow multi-token relations by sequentially filling multiple masks.

- For encoding (encode_text), we explicitly perform mean pooling of token embeddings. Many sentence-transformer models actually produce already-pooled outputs or have a model.encode() convenience function (especially if using the SentenceTransformers library). We do it manually here to avoid additional dependencies.

- We normalize vectors on update for stable cosine similarity comparisons. One might also choose to store all edge vectors normalized from the start.

- The query function demonstrates a basic selection of top edges and at most two hops. A more thorough search (BFS/DFS) would be needed for longer paths. Additionally, we assumed the query mentions the target concept; if not, the system just returns the most relevant edge(s) to the query context, which might be a partial answer.

- Error handling, such as missing model files or ensuring model downloads, is omitted for brevity. Before running this script, the required models should be downloaded (the Hugging Face transformers library will do this automatically on first use if internet is available).
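The first note (maintaining global DF counts incrementally) could be realized along the following lines. `IncrementalTfidf` is a hypothetical helper, not part of the script above; it reuses the same smoothed idf formula as get_significant_tokens and breaks score ties alphabetically, which is an arbitrary choice made here for determinism.

```python
import math
from collections import Counter

class IncrementalTfidf:
    """Hypothetical helper that keeps corpus-wide document frequencies across calls."""

    def __init__(self):
        self.num_docs = 0
        self.df = Counter()  # token -> number of documents containing it

    def add_and_rank(self, tokens, top_k=5):
        """Register one tokenized document and return its top_k tokens by TF-IDF."""
        self.num_docs += 1
        self.df.update(set(tokens))  # each document counts once per token
        tf = Counter(tokens)
        scores = {
            tok: freq * (math.log((self.num_docs + 1) / (self.df[tok] + 1)) + 1)
            for tok, freq in tf.items()
        }
        # Highest score first; ties broken alphabetically
        return sorted(scores, key=lambda t: (-scores[t], t))[:top_k]

ranker = IncrementalTfidf()
ranked1 = ranker.add_and_rank(["alice", "went", "paris", "loved", "city"], top_k=4)
ranked2 = ranker.add_and_rank(["paris", "capital", "france", "known", "culture"], top_k=4)
print(ranked2)  # ['capital', 'culture', 'france', 'known']
```

Because "paris" already appeared in the first paragraph, its idf is lower in the second one and it drops out of the top four, which a per-paragraph computation cannot capture.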

The code above provides a foundation for the context-sensitive semantic reasoning system. Researchers and developers can build upon this to explore larger datasets, integrate the module into QA systems, or extend it with more advanced NLP techniques as discussed in the paper.