AI safety controls at scale with Amazon Bedrock Guardrails

This week I'm back with another "learn with me" on a topic that consistently weighs heavily on me: AI safety.

There's no doubt the world of AI has changed drastically since the days of AlexNet, ResNet, and similar pioneering results. I don't remember exactly what I was doing in November of 2022, but I can certainly remember the hype surrounding the public release of then-newcomer ChatGPT.

As the number of training parameters for foundation models increased from millions to billions and now trillions, the question remained: how do we use these models responsibly and ensure they're not creating more downstream misinformation for the general public?

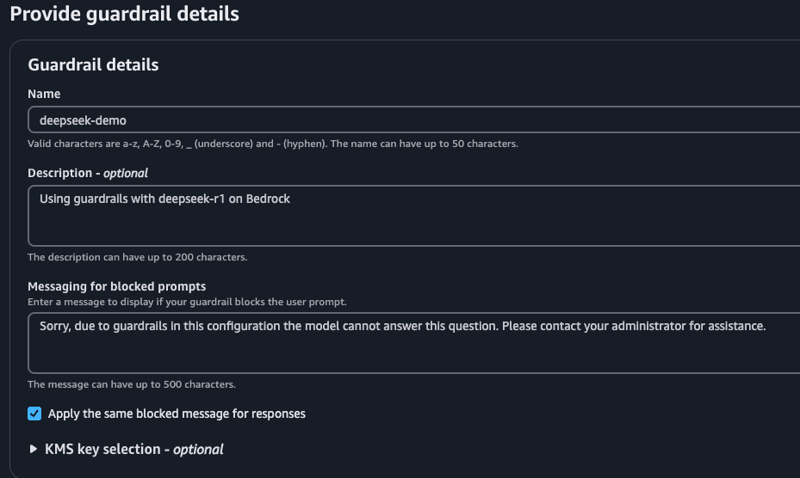

There are numerous ways to tackle this topic, of course, but today I'd like to focus on how developers can use Amazon Bedrock Guardrails to mitigate some of these challenges. This is my first time getting hands-on with Guardrails since they were in preview back in 2023, so let's see what's changed.