Accelerating LLM Inference on ECS: Leveraging SOCI with AWS Fargate for Lightning-Fast Container Startup


Automated SOCI implementation with AWS Fargate using AWS-CDK

In today’s AI/ML engineering and operations landscape, container startup time has become a critical bottleneck, particularly when deploying Large Language Models (LLMs). The sheer size of these model containers can lead to slow deployments and costly scaling operations. Our solution uses Seekable OCI (SOCI) with AWS Fargate to substantially reduce startup times without changing the model or its inference quality, and the whole setup is defined as Infrastructure as Code (IaC) with the AWS CDK.

The Container Startup Challenge for LLMs

When deploying LLMs in production environments, we often find ourselves waiting minutes for large container images to download before the service becomes operational. This delay isn’t just an inconvenience — it directly impacts costs, scaling responsiveness, and ultimately user experience. Traditional container runtimes download entire container images before allowing services to start, creating a fundamental inefficiency when we only need a fraction of the image to begin operation.

Introducing SOCI: A Game-Changer for Container Startup

Seekable OCI (SOCI), a technology open sourced by AWS, represents a significant advancement in container image handling. Rather than downloading an entire container image before starting, SOCI enables “lazy loading”: the runtime pulls only the parts of an image needed for initial execution, then fetches the remaining data on demand as the application reads it.

The core innovation lies in a pre-built index that maps file paths to their locations within container layers, allowing targeted retrieval of only necessary components. This approach dramatically reduces initial startup time, often by 50% or more for large images like those containing LLMs.
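To make the idea of an index concrete, here is a minimal sketch in TypeScript. It is a toy model, not the real SOCI index format: the file paths, sizes, and the `IndexEntry` shape are all hypothetical and chosen only to show why knowing each file's location lets a runtime fetch far less data before startup.

```typescript
// Toy model of a seekable index. This is NOT the real SOCI format.
interface IndexEntry {
  layer: string;  // layer blob that holds the file
  offset: number; // byte offset of the file within that layer
  length: number; // file size in bytes
}

const MB = 1024 * 1024;

// Hypothetical image contents: a small server binary plus large model weights.
const imageIndex: { [path: string]: IndexEntry } = {
  "/bin/ollama": { layer: "layer-1", offset: 0, length: 30 * MB },
  "/etc/ollama/config.json": { layer: "layer-1", offset: 30 * MB, length: 1 * MB },
  "/models/deepseek-r1.bin": { layer: "layer-2", offset: 0, length: 1100 * MB },
};

// Eager pull: every byte of every file is downloaded before the container starts.
function eagerPullBytes(index: { [path: string]: IndexEntry }): number {
  return Object.keys(index).reduce((sum, path) => sum + index[path].length, 0);
}

// Lazy pull: only files read during startup are fetched up front;
// the index tells the runtime which byte range to request for each one.
function lazyPullBytes(index: { [path: string]: IndexEntry }, startupFiles: string[]): number {
  return startupFiles.reduce((sum, path) => {
    const entry = index[path];
    if (!entry) throw new Error("file not in index: " + path);
    return sum + entry.length;
  }, 0);
}

const startupFiles = ["/bin/ollama", "/etc/ollama/config.json"];
console.log(eagerPullBytes(imageIndex) / MB); // 1131
console.log(lazyPullBytes(imageIndex, startupFiles) / MB); // 31
```

In this made-up image the process can start after fetching 31 MB instead of 1131 MB. The model weights still have to arrive before the first inference, but they are fetched on demand rather than blocking container start.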

Architecture: A Side-by-Side Performance Comparison

Our demonstration constructs two parallel environments using AWS CDK to showcase the performance difference:

- A SOCI-enabled ECS Fargate service pulling an Ollama container with the DeepSeek-R1 1.5B parameter model

- An identical Fargate service without SOCI enabled

- Both environments instrumented with metrics collection

- CloudWatch dashboard showing real-time performance comparison

This A/B test architecture provides direct, measurable evidence of SOCI’s benefits under real-world conditions.

The Infrastructure Code Explained

Let’s examine our CDK code that powers this demonstration:

    const sociTaskDef = this.createTaskDefinition(
      amilazyLogGroup,
      appImageAsset,
      amilazy,
      'SociTaskDef',
      true, // SOCI enabled
      executionRole,
      taskRole,
      ollamaRepoSoci
    );

    const nonSociTaskDef = this.createTaskDefinition(
      amilazyLogGroup,
      appImageAsset,
      amilazy,
      'NonSociTaskDef',
      false, // SOCI disabled
      executionRole,
      taskRole,
      ollamaRepoNonSoci
    );

This code creates two Fargate task definitions that are identical except for SOCI enablement. Both tasks run the same Ollama container with the DeepSeek-R1 model, but they load the image through different snapshotters: the SOCI snapshotter (lazy loading) versus the default overlayfs snapshotter (full download).

The infrastructure supports a fair comparison by deploying both containers to the same VPC with identical network conditions:

    const vpc = new ec2.Vpc(this, 'OllamaVpc', {
      maxAzs: 2,
      subnetConfiguration: [
        {
          name: 'public-subnet',
          subnetType: ec2.SubnetType.PUBLIC,
          cidrMask: 24,
        }
      ],
      natGateways: 0,
    });

This creates a lightweight VPC with public subnets only and no NAT gateways, so both services share the same network path and network variables are less likely to skew the comparison.

The Secret Sauce: SOCI Index Creation

What makes the SOCI magic happen is the creation of a special index for the container image:

    // Create SOCI index after the image has been deployed
    const sociIndexBuild = new SociIndexBuild(this, 'Index', {
      repository: ollamaRepoSoci,
      imageTag: appImageAsset.imageTag,
    });

    // Ensure SOCI index creation happens after image deployment
    sociIndexBuild.node.addDependency(imageDeploymentSoci);

These few lines create a SOCI index in the Amazon ECR private repository for our Ollama container image and make sure it is built only after the image has been pushed. The index is essentially a map that connects file paths to their exact locations within container layers, which is what enables the file-level addressing behind lazy loading.

Note: There are multiple ways to build a SOCI index. Here, we use an automated approach with SociIndexBuild from the deploy-time-build npm package. This removes the need to build and push the index from your laptop, which is not recommended for production. The manual approach also requires a containerd runtime, which can be complex to set up on a Mac. It is now possible using Finch, a topic we’ll cover in a future post.

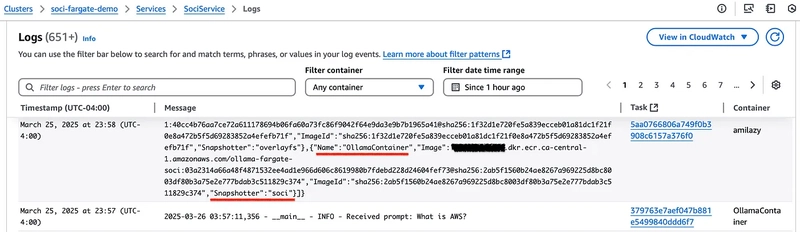

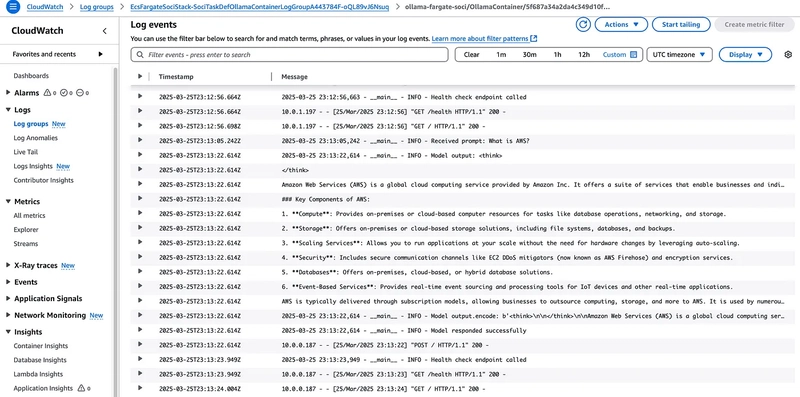

Validating Lazy Loading with Am-I-Lazy

To verify that SOCI is actually performing lazy loading (and not silently falling back to traditional loading mechanisms), we include a sidecar container called “Am-I-Lazy”:

    // Add amilazy sidecar container
    taskDef.addContainer('amilazy', {
      image: ecs.ContainerImage.fromDockerImageAsset(amilazy),
      logging: ecs.LogDrivers.awsLogs({
        streamPrefix: 'amilazy' + (enableSoci ? '-soci' : '-non-soci'),
        mode: ecs.AwsLogDriverMode.NON_BLOCKING,
        logGroup: amilazyLogGroup,
      }),
      essential: false,
    });

This sidecar container, inspired by AWS, inspects the container runtime to report whether the snapshotter in use is soci or overlayfs. It logs the result to the ECS task’s CloudWatch logs, confirming that the experiment is functioning as expected.

Note: Alternatively, we can manually check which snapshotter a Fargate task uses by querying the $ECS_CONTAINER_METADATA_URI_V4 endpoint from inside a running container via ECS Exec. We'll cover this manual approach in a future post.
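As a rough illustration of that manual check, the sketch below classifies a container metadata response once it has been fetched and parsed as JSON. It assumes the response carries a `Snapshotter` field with the values `soci` or `overlayfs`, as the snapshotter names used in this post suggest. The `ContainerMetadata` interface and `loadMode` helper are hypothetical names, and the sample objects are invented rather than captured from a real task.

```typescript
// Subset of the container metadata response that this check relies on.
// Assumption: the endpoint reports the snapshotter in a "Snapshotter" field.
interface ContainerMetadata {
  Name?: string;
  Snapshotter?: string;
}

type LoadMode = "lazy (soci)" | "eager (overlayfs)" | "unknown";

// Map the reported snapshotter to the loading behaviour it implies.
function loadMode(meta: ContainerMetadata): LoadMode {
  switch (meta.Snapshotter) {
    case "soci":
      return "lazy (soci)";
    case "overlayfs":
      return "eager (overlayfs)";
    default:
      return "unknown"; // field missing or an unexpected value
  }
}

// Invented sample responses, for illustration only.
console.log(loadMode({ Name: "ollama", Snapshotter: "soci" }));      // lazy (soci)
console.log(loadMode({ Name: "ollama", Snapshotter: "overlayfs" })); // eager (overlayfs)
console.log(loadMode({ Name: "ollama" }));                           // unknown
```

Treating a missing field as "unknown" rather than "eager" keeps the check honest on platforms or versions that do not report the snapshotter at all.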

Instrumentation: Measuring What Matters

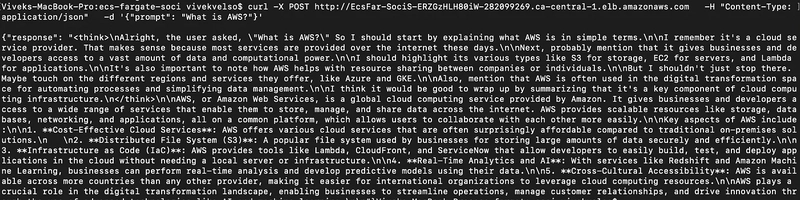

The Python code running inside our containers collects precise metrics to quantify the performance difference:

    # Calculate image pull time using task metadata
    if 'PullStartedAt' in task_metadata and 'PullStoppedAt' in task_metadata:
        try:
            pull_started_at = parse_timestamp(task_metadata['PullStartedAt'])
            pull_stopped_at = parse_timestamp(task_metadata['PullStoppedAt'])
            if pull_started_at and pull_stopped_at:
                image_pull_time = (pull_stopped_at - pull_started_at).total_seconds()
                logger.info(f"Image pull time: {image_pull_time:.2f} seconds")
                dimensions = [{"Name": "ServiceName", "Value": SERVICE_NAME}]
                # Publish the metric
                publish_metrics(IMAGE_PULL_TIME_METRIC, image_pull_time, dimensions)
        except Exception as e:
            # The excerpt omits the original handler; logging and continuing is assumed here
            logger.warning(f"Could not calculate image pull time: {e}")

This code extracts precise timestamps from the ECS task metadata and calculates the exact image pull duration, then publishes these metrics to CloudWatch for visualization.
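For readers following along in TypeScript, the same calculation can be sketched as a small pure function. This is an illustrative port, not the code that runs in the containers: `imagePullSeconds` and the `TaskMetadata` interface are hypothetical names, the sample timestamps are invented, and the truncation to milliseconds is an assumption to cope with timestamps that carry more than three fractional digits.

```typescript
// Subset of the ECS task metadata used for the calculation.
interface TaskMetadata {
  PullStartedAt?: string;
  PullStoppedAt?: string;
}

// Parse an RFC 3339 timestamp, truncating sub-millisecond digits first
// so that values such as "...:06.202617438Z" parse reliably.
function parseTimestampMs(value: string): number {
  return Date.parse(value.replace(/(\.\d{3})\d+/, "$1"));
}

// Returns the image pull duration in seconds, or null if it cannot be computed.
function imagePullSeconds(meta: TaskMetadata): number | null {
  if (!meta.PullStartedAt || !meta.PullStoppedAt) return null;
  const started = parseTimestampMs(meta.PullStartedAt);
  const stopped = parseTimestampMs(meta.PullStoppedAt);
  if (isNaN(started) || isNaN(stopped)) return null;
  return (stopped - started) / 1000;
}

// Invented sample values, for illustration only.
const sampleMetadata: TaskMetadata = {
  PullStartedAt: "2025-01-01T12:00:00.123456789Z",
  PullStoppedAt: "2025-01-01T12:00:42.623456789Z",
};
console.log(imagePullSeconds(sampleMetadata)); // 42.5
```

Returning `null` instead of throwing mirrors the defensive style of the Python snippet: a missing or malformed timestamp simply means no metric is published for that task.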

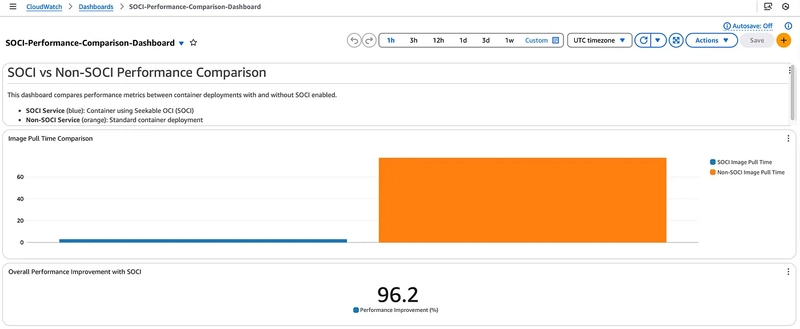

Visualizing the Performance Difference

Our CloudWatch dashboard TypeScript code brings the performance comparison to life:

    // Image Pull Latency Comparison
    const sociImagePullMetric = new cloudwatch.Metric({
      namespace: namespace,
      metricName: 'ImagePullTime',
      dimensionsMap: { ServiceName: 'SociService' },
      statistic: 'Average',
      label: 'SOCI Image Pull Time'
    });

    const nonSociImagePullMetric = new cloudwatch.Metric({
      namespace: namespace,
      metricName: 'ImagePullTime',
      dimensionsMap: { ServiceName: 'NonSociService' },
      statistic: 'Average',
      label: 'Non-SOCI Image Pull Time'
    });

This CloudWatch dashboard provides a real-time visual comparison between SOCI and traditional image pulls, making the performance difference immediately apparent to anyone viewing the dashboard.

The dashboard even calculates and displays the percentage improvement, making the business value clear:

    // Overall Improvement Percentage
    const improvementMetric = new cloudwatch.MathExpression({
      expression: '100 * (m2 - m1) / m2',
      label: 'Performance Improvement (%)',
      usingMetrics: {
        m1: sociImagePullMetric,
        m2: nonSociImagePullMetric,
      },
      period: cdk.Duration.minutes(5),
    });

This expression calculates how much faster SOCI is than a traditional pull, expressed as a percentage of the non-SOCI pull time. The figure often exceeds 50% for large LLM images.
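The arithmetic behind the expression `100 * (m2 - m1) / m2` can be checked with a few lines of TypeScript. The function name `improvementPercent` and the pull times below are hypothetical and are not measurements from this demo.

```typescript
// Percentage by which the SOCI pull time (m1) improves on the non-SOCI pull time (m2).
// Returns null when the baseline is not positive, since the ratio is undefined there.
function improvementPercent(sociSeconds: number, nonSociSeconds: number): number | null {
  if (nonSociSeconds <= 0) return null;
  return (100 * (nonSociSeconds - sociSeconds)) / nonSociSeconds;
}

// Hypothetical pull times in seconds.
console.log(improvementPercent(40, 100));  // 60
console.log(improvementPercent(120, 100)); // -20
console.log(improvementPercent(40, 0));    // null
```

A negative result means the SOCI pull was slower than the baseline for that period, which is worth watching for on the dashboard because it can indicate a silent fallback or a small image where lazy loading adds overhead.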

Beyond Metrics: Real-World Impact

While the metrics tell a compelling story, the real-world implications are even more significant. Faster container startup means:

- Reduced costs: When using Fargate, you pay for the time your containers are running, including startup time. Shortening the image pull shrinks the billed startup portion of every task launch.

- Improved scaling responsiveness: When traffic spikes, SOCI-enabled containers can come online noticeably sooner (in roughly half the time when the pull dominates startup), helping maintain a consistent user experience under varying loads.

- Enhanced development velocity: Faster startup times accelerate development and testing cycles, allowing teams to iterate more rapidly.

- More reliable deployments: Reducing the time window for network-related failures during container pulls improves overall deployment reliability.
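To put the cost point in numbers, the sketch below estimates what the image-pull phase costs per day before and after a speed-up. Every input is hypothetical: the task-hour price, the number of task starts, and the pull times are placeholders to replace with your own figures, and the model assumes pull time is billed at the same rate as run time.

```typescript
// Daily cost attributable to the image-pull phase alone.
// Assumption: pull time is billed at the same hourly rate as run time.
function dailyPullCost(taskStartsPerDay: number, pullSeconds: number, pricePerTaskHour: number): number {
  return (taskStartsPerDay * pullSeconds * pricePerTaskHour) / 3600;
}

// Hypothetical inputs, not measurements from this demo.
const startsPerDay = 500;
const hourlyPrice = 0.12; // USD per task-hour, placeholder value
const costBefore = dailyPullCost(startsPerDay, 120, hourlyPrice); // 120 s pull
const costAfter = dailyPullCost(startsPerDay, 60, hourlyPrice);   // 60 s pull

console.log(costBefore.toFixed(2));               // 2.00
console.log(costAfter.toFixed(2));                // 1.00
console.log((costBefore - costAfter).toFixed(2)); // 1.00
```

The saving scales linearly with how often tasks start, so workloads that scale in and out frequently benefit the most.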

How to Get Started

To get started with your own SOCI implementation, the complete code for this demonstration is available in our GitHub repository. Try it today and experience the difference that intelligent lazy loading can make for your AI/ML operations.

    git clone https://github.com/awsdataarchitect/ecs-fargate-soci.git
    cd ecs-fargate-soci
    npm install -g aws-cdk
    npm install
    # Replace ACCOUNT-NUMBER and REGION with your own values
    cdk bootstrap aws://ACCOUNT-NUMBER/REGION
    cdk deploy

Below is a quick summary of the key resources deployed by this CDK stack along with an estimated deploy time:

Resources Deployed

- VPC and Subnets: Creates a VPC with two Availability Zones and public subnets (no NAT gateways).

- IAM Roles:

  - Task Execution Role: Grants ECS tasks permissions for CloudWatch logging and metrics.

  - Task Role: Grants ECS tasks permissions to access ECR, CloudWatch, and other necessary services.

- ECR Repositories: Two repositories are created:

  - ollama-fargate-soci: Stores the SOCI-enabled container.

  - ollama-fargate-non-soci: Stores the standard, non-SOCI container.

- Docker Image Build and Deployment: Docker images are built from specified directories.

  - Builds images from specified directories and pushes them to ECR.

  - Uses a custom AWS CodeBuild resource (SociIndexBuild) to generate a SOCI index post-deployment.

- ECS Cluster and Task Definitions: Deploys an ECS cluster within the VPC.

  - Creates two Fargate task definitions (SOCI-enabled & non-SOCI), each with a sidecar (amilazy).

- Fargate Services: Two Application Load-Balanced Fargate Services, each with:

  - Public load balancers.

  - Health checks configured on port 11434.

  - Deployment settings optimized for faster rollouts.

- CloudWatch Dashboard: A CloudWatch dashboard is set up with text, graph, and single-value widgets to compare performance metrics (image pull times) between the SOCI and non-SOCI services.

Estimated Deploy Time

The overall deployment is estimated to take around 6 minutes, and running this stack costs approximately $0.12 to $0.15 per hour. The deploy-time estimate factors in:

- VPC and IAM role provisioning.

- ECS cluster and service creation.

- ECR image build, push, and custom SOCI index creation steps.

Actual deployment time may vary slightly based on network conditions, image build times, and AWS service latencies.

Conclusion: The Future of Container Optimization

The integration of SOCI with AWS Fargate represents a step change in how we think about container optimization. Rather than accepting slow startup times as an inevitable cost of using large, sophisticated models, we can now deploy these capabilities with dramatically improved responsiveness.

As models continue to grow in size and complexity, innovations like SOCI will become increasingly critical to maintaining operational efficiency.

The next time you’re facing container startup delays with your AI/ML deployments, remember that these bottlenecks are no longer inevitable; they’re problems waiting for the right solution. With SOCI on AWS Fargate, that solution is now available to all. One caveat: at the time of writing, there is a known issue with enabling the SOCI snapshotter on AWS Fargate; see my reply on AWS re:Post for the interim solution.